Systems and Methods for Improving Visual Search Using Summarization Feature

Zheng; Rui ; et al.

U.S. patent application number 17/160399 was filed with the patent office on 2021-05-20 for systems and methods for improving visual search using summarization feature. The applicant listed for this patent is Markable, Inc. Invention is credited to Suren Kumar and Rui Zheng.

| Application Number | 20210150249 17/160399 |


| Family ID | 1000005370729 |

| Filed Date | 2021-05-20 |


| United States Patent Application | 20210150249 |

| Kind Code | A1 |

| Zheng; Rui ; et al. | May 20, 2021 |


Abstract

Methods and systems for training a metric learning convolutional neural network (CNN)-based model for cross-domain image retrieval are disclosed. The methods and systems perform steps of generating a plurality of batches sampled from a cross-domain training dataset to train the CNN-based model to match images of different sub-categories from one domain to another, and training the CNN-based model using the generated batches. The CNN-based model comprises various pooling, normalization, and concatenation layers that enable it to concatenate the normalized outputs of multiple concatenation layers. Use of the generated batches comprises executing a loss function based on one or more batches, where the loss function is a triplet, contrastive, or cluster loss function. Embodiments of the present invention enable the CNN-based model to summarize information from multiple convolutional layers, thus improving visual search. Also disclosed are benefits of the new methods, and alternative embodiments of implementation.

| Inventors: | Zheng; Rui; (Shenzhen, CN) ; Kumar; Suren; (Seattle, WA) |
|---|---|
| Applicant: | Markable, Inc. |
| Family ID: | 1000005370729 |
| Appl. No.: | 17/160399 |
| Filed: | January 28, 2021 |

Related U.S. Patent Documents

| Application Number | Filing Date | Patent Number |
|---|---|---|
| 16623323 | Dec 16, 2019 | |
| PCT/US2018/037955 | Jun 15, 2018 | |
| 17160399 | | |
| 62645727 | Mar 20, 2018 | |
| 62639938 | Mar 7, 2018 | |
| 62639944 | Mar 7, 2018 | |
| 62561637 | Sep 21, 2017 | |
| 62521284 | Jun 16, 2017 | |

| Current U.S. Class: | 1/1 |

| Current CPC Class: | G06N 3/08 20130101; G06K 9/4652 20130101; G06K 9/4609 20130101; G06Q 30/0643 20130101; G06K 9/3233 20130101 |

| International Class: | G06K 9/46 20060101 G06K009/46; G06N 3/08 20060101 G06N003/08; G06Q 30/06 20060101 G06Q030/06; G06K 9/32 20060101 G06K009/32 |

Claims

1. A computer-implemented method for training a metric learning convolutional neural network (CNN)-based model for a target cross-domain image retrieval application, the computer-implemented method executable by a processor, the method comprising: generating a plurality of batches sampled from a cross-domain training dataset to train the metric learning CNN-based model to match one or more images of a first domain with one or more images of a second domain, wherein each image of the one or more images of the first domain and the one or more images of the second domain belong to one of a plurality of sub-categories, each sub-category belonging to one of a plurality of categories, wherein each batch of the plurality of batches comprises a first plurality of images sampled from a first number of sub-categories, wherein each sub-category of the first number of sub-categories of each batch of the plurality of batches comprises a first set of images sampled from the first domain, and a second set of images sampled from the second domain, wherein the first set of images comprises a second number of images, and wherein the second set of images comprises a third number of images; and training the metric learning CNN-based model using the generated plurality of batches, wherein the metric learning CNN-based model generates an embedding vector from a given input image, wherein the metric learning CNN-based model comprises: a first pooling layer pooling an output feature map of a first convolutional layer, generating a first pooled feature vector; a first normalization layer normalizing the first pooled feature vector, generating a first normalized feature vector; a second pooling layer pooling an output feature map of a second convolutional layer different from the first convolutional layer, generating a second pooled feature vector; a second normalization layer normalizing the second pooled feature vector of the second pooling layer, generating a second normalized feature vector; a concatenation layer concatenating the first normalized feature vector with the second normalized feature vector, generating a concatenated vector; and one or more fully connected (FC) layers reducing a dimensionality of the concatenated vector to a lower output embedding vector dimensionality, wherein the using of the generated plurality of batches comprises executing a loss function based on one or more batches of the plurality of batches, wherein the loss function is selected from the group consisting of a triplet loss function, a contrastive loss function, and a cluster loss function, wherein the loss function is configured to allow the metric learning CNN-based model to learn a mapping from the cross-domain training dataset to an embedding space, wherein a similarity between a first given image and a second given image of the cross-domain training dataset corresponds to an embedding distance between the first given image and the second given image, and wherein the embedding distance between the first given image and the second given image is a distance between the corresponding embedding vectors generated from the first given image and the second given image using the metric learning CNN-based model.
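By way of illustration only, the layer arrangement recited in claim 1 (pool two feature maps, normalize each pooled vector, concatenate, then reduce dimensionality with a fully connected layer) might be sketched in plain Python as follows. The function names, the toy feature maps, the weight values, and the use of sum pooling with L2 normalization are assumptions made for this sketch and are not taken from the disclosure.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # channels x height x width


def sum_pool(feature_map: FeatureMap) -> List[float]:
    """Global sum pooling: collapse each channel to a single value."""
    return [sum(sum(row) for row in channel) for channel in feature_map]


def l2_normalize(vec: List[float], eps: float = 1e-12) -> List[float]:
    """Scale a vector to unit length (an assumed choice of normalization)."""
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / (norm + eps) for v in vec]


def fully_connected(vec: List[float], weights: List[List[float]],
                    bias: List[float]) -> List[float]:
    """One dense layer: output[i] = dot(weights[i], vec) + bias[i]."""
    return [sum(w * v for w, v in zip(row, vec)) + b
            for row, b in zip(weights, bias)]


def embed(fmap_first: FeatureMap, fmap_second: FeatureMap,
          weights: List[List[float]], bias: List[float]) -> List[float]:
    """Pool, normalize, concatenate, then project to the embedding size."""
    first = l2_normalize(sum_pool(fmap_first))
    second = l2_normalize(sum_pool(fmap_second))
    concatenated = first + second  # list concatenation
    return fully_connected(concatenated, weights, bias)


# Hypothetical toy inputs: a 2-channel 2x2 map and a 1-channel 4x4 map.
fmap_first = [[[1.0, 1.0], [1.0, 0.0]], [[0.0, 2.0], [2.0, 0.0]]]
fmap_second = [[[1.0] * 4] * 4]
weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # reduces 3 values to 2
bias = [0.0, 0.0]
embedding = embed(fmap_first, fmap_second, weights, bias)
```

Normalizing each pooled vector before concatenation keeps a layer with larger activations from dominating the concatenated vector, which is one plausible reason for the ordering recited in the claim.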

2. The computer-implemented method of claim 1, wherein the target application is fashion image retrieval.

3. The computer-implemented method of claim 1, wherein the first domain is a street domain, the second domain is a shop domain, and the shop domain comprises a plurality of product images from retailer catalogues, retailer inventories, and stock photos.

4. The computer-implemented method of claim 1, wherein the categories are product categories and the sub-categories are products.

5. The computer-implemented method of claim 1, wherein the metric learning CNN model is based on a CNN model selected from the group consisting of a Visual Geometric Group 16 (VGG-16) model, a residual network (ResNet), and a feature pyramid network.

6. The computer-implemented method of claim 1, wherein the metric learning CNN model is based on a Visual Geometric Group 16 (VGG-16) model, wherein the first convolutional layer is a thirteenth convolutional layer of the VGG-16 model, and wherein the second convolutional layer is a tenth convolutional layer of the VGG-16 model.

7. The computer-implemented method of claim 1, wherein the first normalization layer and the second normalization layer use Batch Normalization (BN).

8. The computer-implemented method of claim 1, wherein the distance is a Euclidean distance.

9. The computer-implemented method of claim 1, wherein the cluster loss is based on a loss formula selected from the group consisting of N-Pair loss, NCA loss, Magnet Loss, and Proxy Loss.

10. The computer-implemented method of claim 1, wherein either the first pooling layer or the second pooling layer uses sum pooling.

11. The computer-implemented method of claim 1, wherein the cross-domain training dataset comprises, for each sub-category, two images of the first domain and two images of the second domain, and wherein the two images of the first domain and the two images of the second domain are human annotated.

12. The computer-implemented method of claim 11, wherein the cross-domain training dataset is supplemented, for each sub-category, with a second plurality of images of the first domain and a fourth number of images of the second domain, and wherein the second plurality of images of the first domain and the fourth number of images of the second domain are computer annotated.

13. The computer-implemented method of claim 11, wherein the cross-domain training dataset is based on a first public image dataset, and wherein the second plurality of images of the first domain and the fourth number of images of the second domain are selected from the group consisting of one or more social media websites, a second public image dataset, one or more retailer catalogues, one or more retailer inventories, one or more sets of stock photos, and one or more online retail image sets.

14. The computer-implemented method of claim 1, wherein the loss function is a triplet loss function, and wherein the generating of a plurality of batches sampled from a cross-domain training dataset comprises generating a triplet from one batch of the plurality of batches by selecting, for a given sub-category of the first number of sub-categories of the one batch of the plurality of batches: an anchor image sampled from the first set of images of the given sub-category, a positive image sampled from the second set of images of the given sub-category, and a negative image sampled from the second set of images of a sub-category of the first number of sub-categories of the one batch of the plurality of batches different from the given sub-category.

15. The computer-implemented method of claim 14, wherein the generated triplet is a random triplet, and wherein the selecting of the anchor image, the positive image, and the negative image, is random.

16. The computer-implemented method of claim 15, wherein the training of the metric learning CNN-based model using the generated plurality of batches comprises a random training stage, and wherein the random training stage uses one or more random triplets.
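By way of illustration only, the random triplet selection of claims 14 to 16 might be sketched as follows. The batch layout (a dictionary keyed by sub-category with `first_domain` and `second_domain` image lists), the file names, and the function name `random_triplet` are hypothetical and introduced only for this sketch.

```python
import random
from typing import Dict, List, Tuple

# sub-category -> {"first_domain": [...], "second_domain": [...]}
Batch = Dict[str, Dict[str, List[str]]]


def random_triplet(batch: Batch, rng: random.Random) -> Tuple[str, str, str]:
    """Pick (anchor, positive, negative) at random from one batch.

    The anchor comes from the first domain of a sub-category, the positive
    from the second domain of the same sub-category, and the negative from
    the second domain of a different sub-category in the same batch.
    """
    sub_category = rng.choice(sorted(batch))
    other = rng.choice(sorted(k for k in batch if k != sub_category))
    anchor = rng.choice(batch[sub_category]["first_domain"])
    positive = rng.choice(batch[sub_category]["second_domain"])
    negative = rng.choice(batch[other]["second_domain"])
    return anchor, positive, negative


# Hypothetical batch with two sub-categories (products).
batch: Batch = {
    "dress_123": {
        "first_domain": ["street_dress_1.jpg", "street_dress_2.jpg"],
        "second_domain": ["shop_dress_1.jpg", "shop_dress_2.jpg"],
    },
    "coat_456": {
        "first_domain": ["street_coat_1.jpg", "street_coat_2.jpg"],
        "second_domain": ["shop_coat_1.jpg", "shop_coat_2.jpg"],
    },
}
triplet = random_triplet(batch, random.Random(0))
```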

17. The computer-implemented method of claim 1, wherein the loss function is a triplet loss function, wherein the generating of a plurality of batches sampled from a cross-domain training dataset comprises generating a triplet, the triplet consisting of an anchor image, a positive image, and a negative image, and wherein the generating of a triplet comprises: receiving a query image of the first domain, the query image belonging to a query image sub-category; generating a ranked set by ranking a second plurality of images of the second domain in order of increasing embedding distance from the query image; determining a closest image of the query image sub-category by selecting the first image in the ranked set that is of the query image sub-category; determining a mining group comprising a fourth number or fewer consecutive images of the ranked set, the mining group comprising the closest image of the query image sub-category; and forming the triplet, wherein the anchor is the query image, and wherein the positive image is the closest image of the query image sub-category.

18. The computer-implemented method of claim 17, wherein the mining group further comprises at least one closer image of a different sub-category from the query image sub-category, wherein the embedding distance between the query image and the closer image of the different sub-category from the query image sub-category is smaller than the embedding distance between the query image and the closest image of the query image sub-category, wherein the negative image of the formed triplet is the closer image of the different sub-category from the query image sub-category, and wherein the formed triplet is a negative triplet.

19. The computer-implemented method of claim 18, wherein the training of the metric learning CNN-based model using the generated plurality of batches comprises a negative training stage, and wherein the negative training stage uses one or more negative triplets.
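By way of illustration only, the mining of negative triplets in claims 17 to 19 might be sketched as follows. The gallery layout, the identifiers, and the choice to end the mining group at the closest same-sub-category image are assumptions; the claims only require that the group contain that image and have at most a given number of consecutive ranked images.

```python
import math
from typing import Dict, List, Sequence, Tuple

# image id -> (embedding vector, sub-category)
Gallery = Dict[str, Tuple[Sequence[float], str]]


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def mine_negative_triplets(query_id: str, query_vec: Sequence[float],
                           query_sub: str, gallery: Gallery,
                           group_size: int) -> List[Tuple[str, str, str]]:
    """Return (anchor, positive, negative) triplets for one query image."""
    # Rank second-domain images by increasing embedding distance.
    ranked = sorted(gallery,
                    key=lambda image_id: euclidean(query_vec, gallery[image_id][0]))
    # The first ranked image of the query's sub-category is the positive.
    positive_idx = next((i for i, image_id in enumerate(ranked)
                         if gallery[image_id][1] == query_sub), None)
    if positive_idx is None:
        return []
    positive = ranked[positive_idx]
    # Assumed window: up to group_size consecutive images ending at the positive.
    start = max(0, positive_idx - group_size + 1)
    mining_group = ranked[start:positive_idx + 1]
    # Images ranked before the positive belong to other sub-categories.
    return [(query_id, positive, image_id) for image_id in mining_group
            if gallery[image_id][1] != query_sub]


# Hypothetical gallery of shop images with 2-D embeddings.
gallery: Gallery = {
    "shop_a": ([0.1, 0.0], "coat_1"),
    "shop_b": ([0.2, 0.0], "dress_9"),
    "shop_c": ([0.5, 0.0], "dress_7"),
    "shop_d": ([0.9, 0.0], "dress_9"),
}
triplets = mine_negative_triplets("street_q", [0.0, 0.0], "dress_7", gallery, 2)
```

With a group size of 2, only the image ranked immediately before the positive is used as a negative; a larger group size admits more of the closer, wrongly ranked images.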

20. A non-transitory computer-readable storage medium having program instructions stored therein, for training a metric learning convolutional neural network (CNN)-based model for a target cross-domain image retrieval application, the program instructions executable by a processor to cause the processor to: generate a plurality of batches sampled from a cross-domain training dataset to train the metric learning CNN-based model to match one or more images of a first domain with one or more images of a second domain, wherein each image of the one or more images of the first domain and the one or more images of the second domain belong to one of a plurality of sub-categories, each sub-category belonging to one of a plurality of categories, wherein each batch of the plurality of batches comprises a plurality of images sampled from a first number of sub-categories, wherein each sub-category of the first number of sub-categories of each batch of the plurality of batches comprises a first set of images sampled from the first domain, and a second set of images sampled from the second domain, wherein the first set of images comprises a second number of images, and wherein the second set of images comprises a third number of images; and train the metric learning CNN-based model using the generated plurality of batches, wherein the metric learning CNN-based model generates an embedding vector from a given input image, wherein the metric learning CNN-based model comprises: a first pooling layer pooling an output feature map of a first convolutional layer; a first normalization layer normalizing an output feature vector of the first pooling layer; a second pooling layer pooling an output feature map of a second convolutional layer different from the first convolutional layer; a second normalization layer normalizing an output feature vector of the second pooling layer; a concatenation layer concatenating an output feature vector of the first normalization layer with an output feature vector of the second normalization layer; and one or more fully connected (FC) layers reducing a dimensionality of the output of the concatenation layer to a lower output embedding vector dimensionality, wherein the using the generated plurality of batches comprises executing a loss function based on one or more batches of the plurality of batches, wherein the loss function is selected from the group consisting of a triplet loss function, a contrastive loss function, and a cluster loss function, wherein the loss function is configured to allow the metric learning CNN-based model to learn a mapping from the cross-domain training dataset to an embedding space such that a similarity between two given images of the cross-domain training dataset corresponds to an embedding distance between the two given images, and wherein the embedding distance between the two given images is a distance between the two embedding vectors generated from each of the two given images using the metric learning CNN-based model.

Description

BACKGROUND

[0001] Object detection from images and videos is an important computer vision research problem. It paves the way for a multitude of computer vision tasks, including similar object search, object tracking, and collision avoidance for self-driving cars. Object detection performance may be affected by multiple challenges, including imaging noise (motion blur, lighting variations), scale, object occlusion, self-occlusion, and appearance similarity with the background or other objects. Therefore, it is desirable to develop robust image processing systems that better distinguish objects belonging to a particular category from other objects in the image, and that are capable of accurately determining the location of the object within the image (localization).

BRIEF DESCRIPTION OF THE DRAWINGS

[0002] Various techniques will be described with reference to the drawings, in which:

[0003] FIG. 1 shows an illustrative example of a system that presents product recommendations to a user, in an embodiment;

[0004] FIG. 2 shows an illustrative example of a data record for storing information associated with a look, in an embodiment;

[0005] FIG. 3 shows an illustrative example of a data record for storing information associated with an image, in an embodiment;

[0006] FIG. 4 shows an illustrative example of a data record for storing information associated with a product, in an embodiment;

[0007] FIG. 5 shows an illustrative example of an association between an image record and a look record, in an embodiment;

[0008] FIG. 6 shows an illustrative example of a process that, as a result of being performed by a computer system, generates a look record based on an image, in an embodiment;

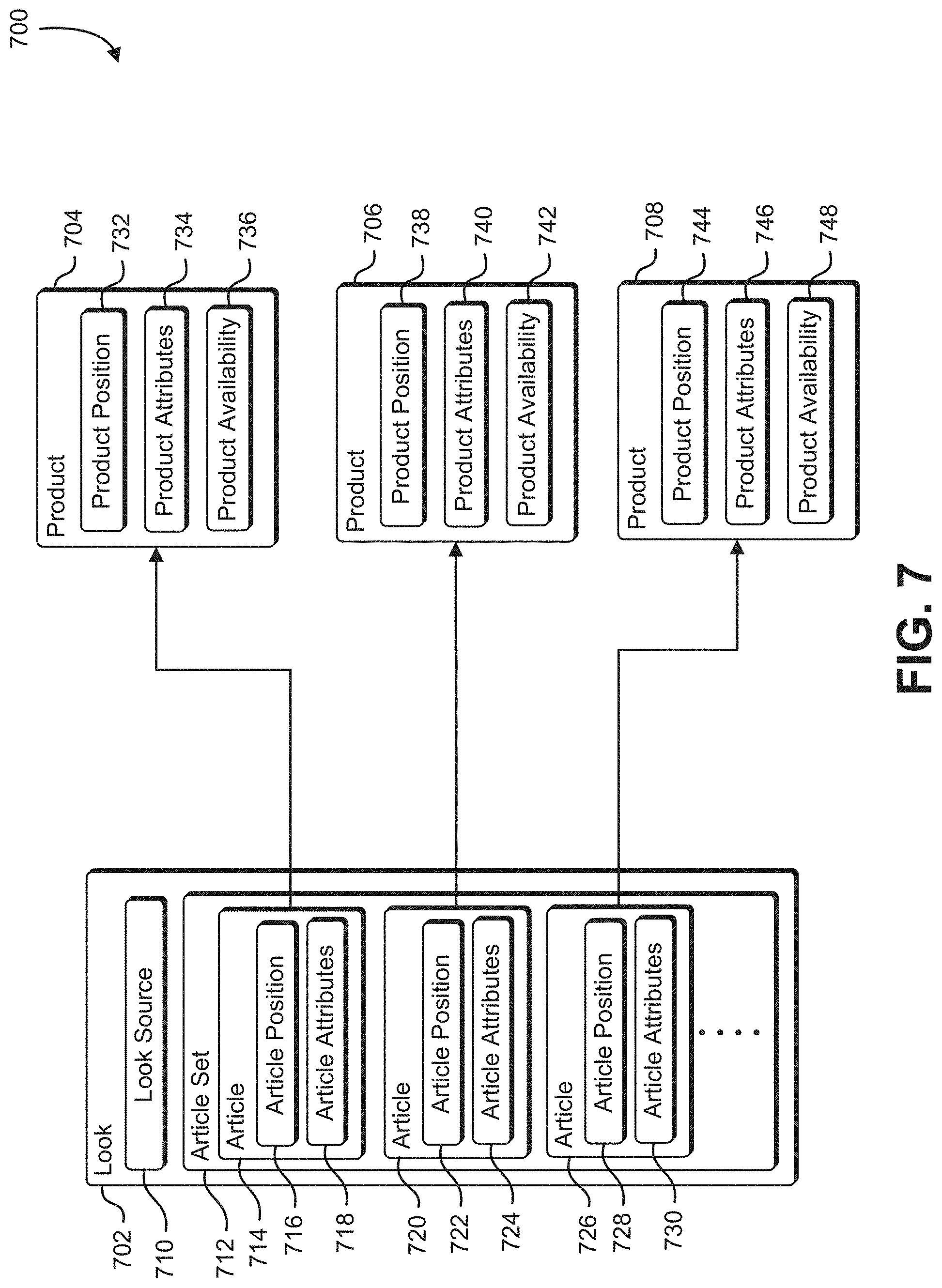

[0009] FIG. 7 shows an illustrative example of an association between a look record and a set of product records, in an embodiment;

[0010] FIG. 8 shows an illustrative example of a process that, as a result of being performed by a computer system, identifies a set of products to achieve a desired look, in an embodiment;

[0011] FIG. 9 shows an illustrative example of an association between a product owned by a user and a related product that may be worn with the user's product to achieve a look, in an embodiment;

[0012] FIG. 10 shows an illustrative example of a process that, as a result of being performed by a computer system, identifies a product that may be worn with an indicated product to achieve a particular look, in an embodiment;

[0013] FIG. 11 shows an illustrative example of a process that identifies, based at least in part on a specified article of clothing, a set of additional articles that, when worn in combination with the selected article of clothing, achieve a particular look, in an embodiment;

[0014] FIG. 12 shows an illustrative example of a user interface of a product search system displayed on a laptop computer and a mobile device, in an embodiment;

[0015] FIG. 13 shows an illustrative example of executable instructions that install a product search user interface on a website, in an embodiment;

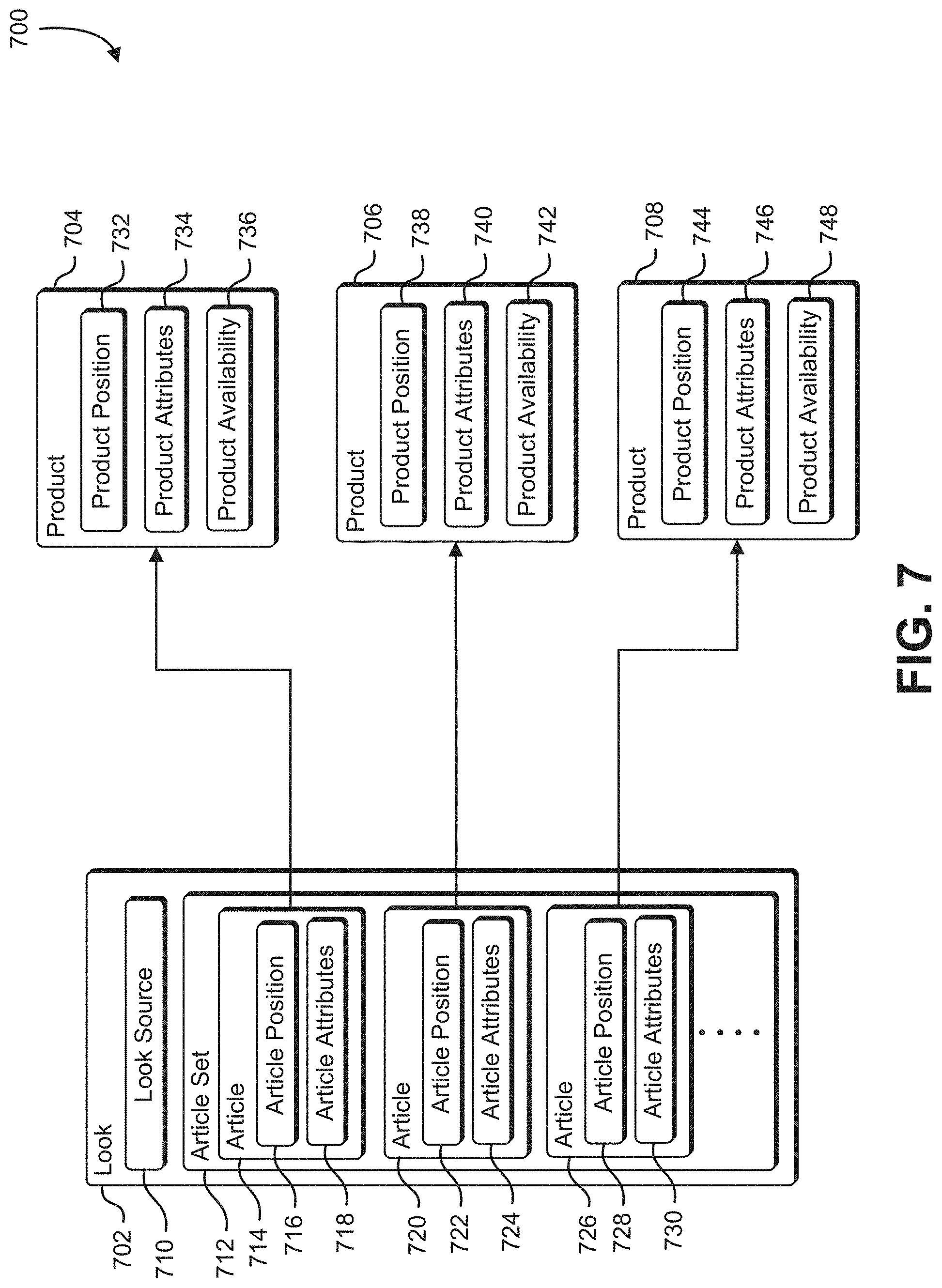

[0016] FIG. 14 shows an illustrative example of a user interface for identifying similar products using a pop-up dialog, in an embodiment;

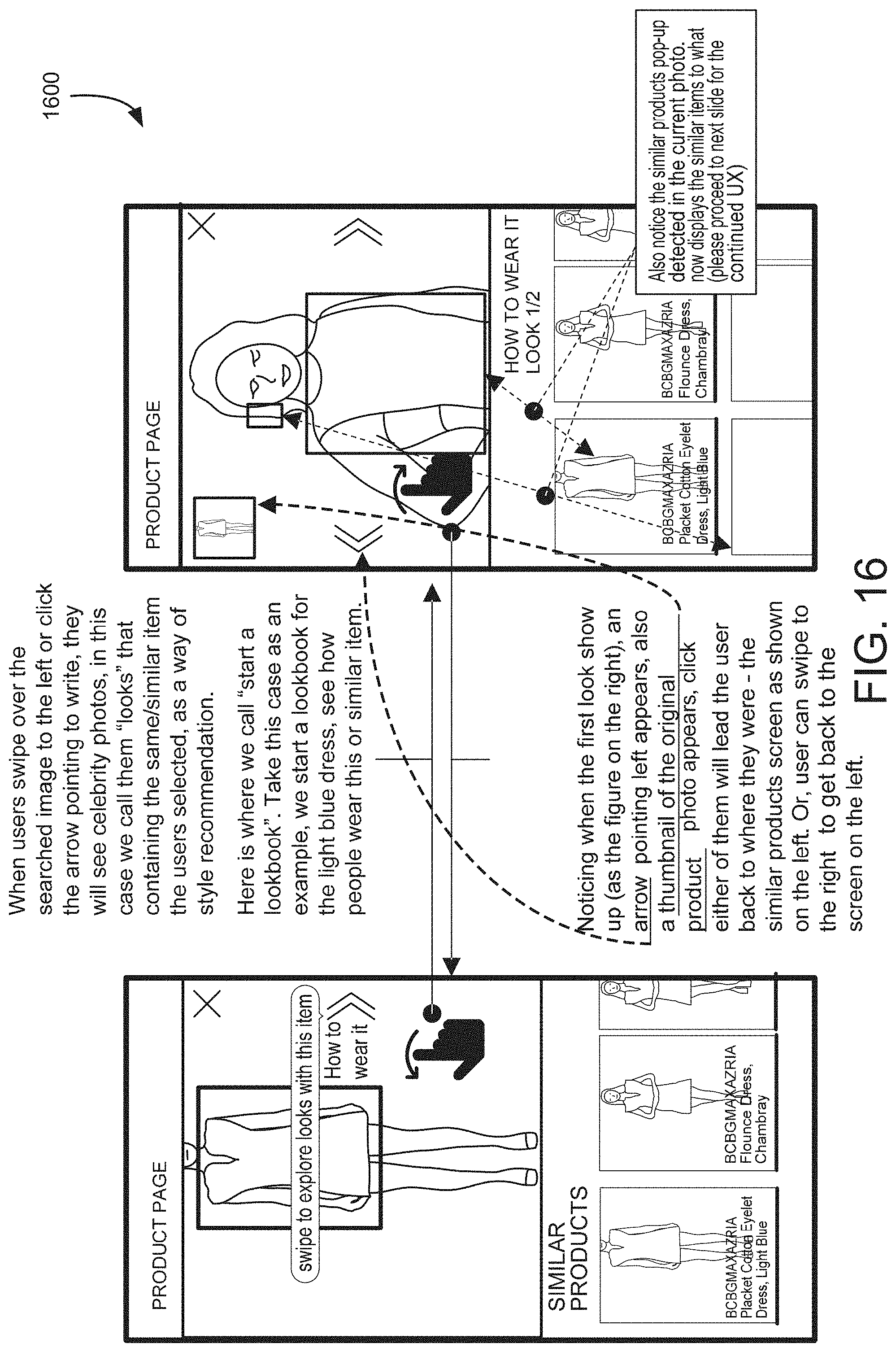

[0017] FIG. 15 shows an illustrative example of a user interface for identifying similar products, in an embodiment;

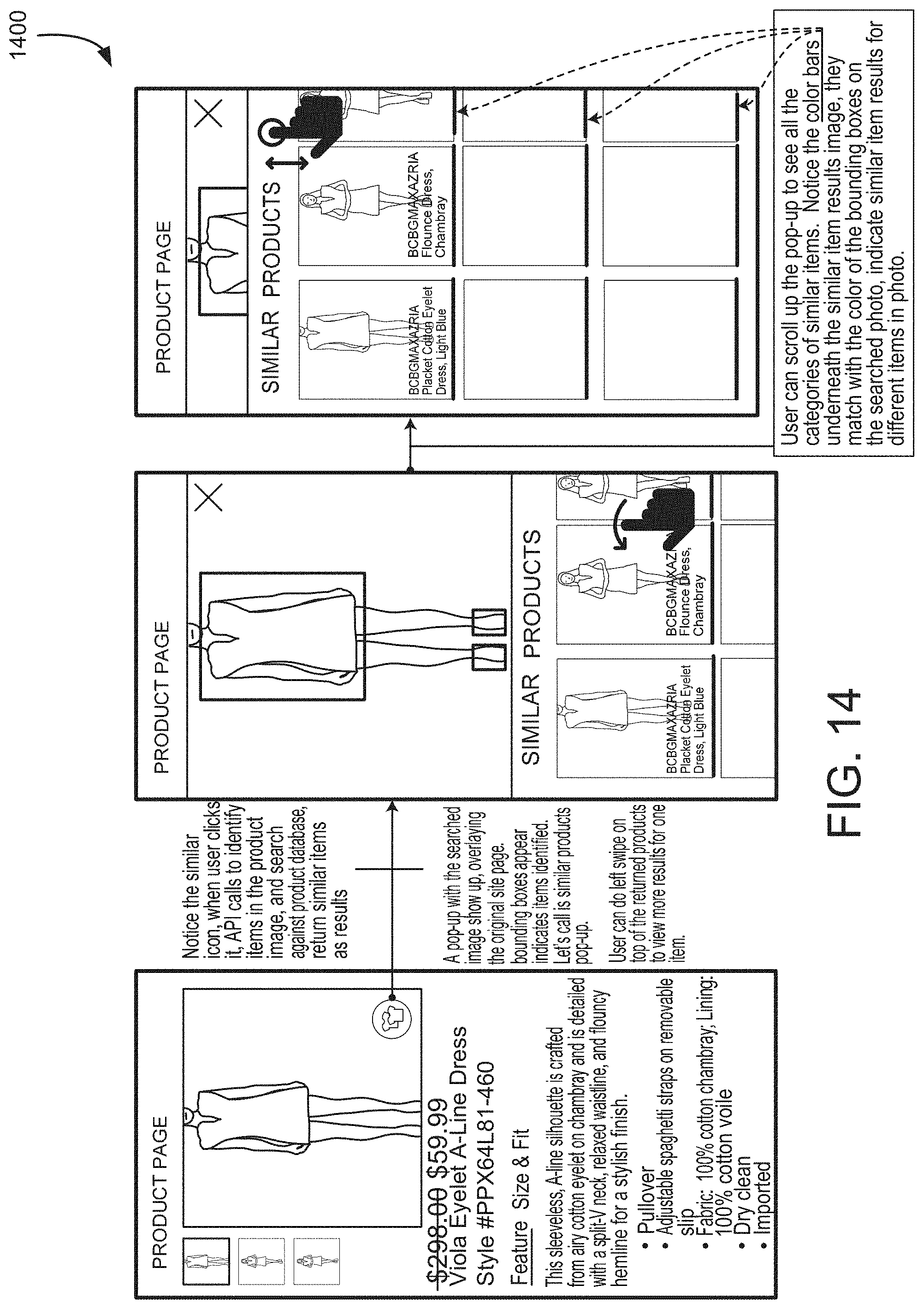

[0018] FIG. 16 shows an illustrative example of a user interface for identifying a look based on a selected article of clothing, in an embodiment;

[0019] FIG. 17 shows an illustrative example of a user interface that allows the user to select a look from a plurality of looks, in an embodiment;

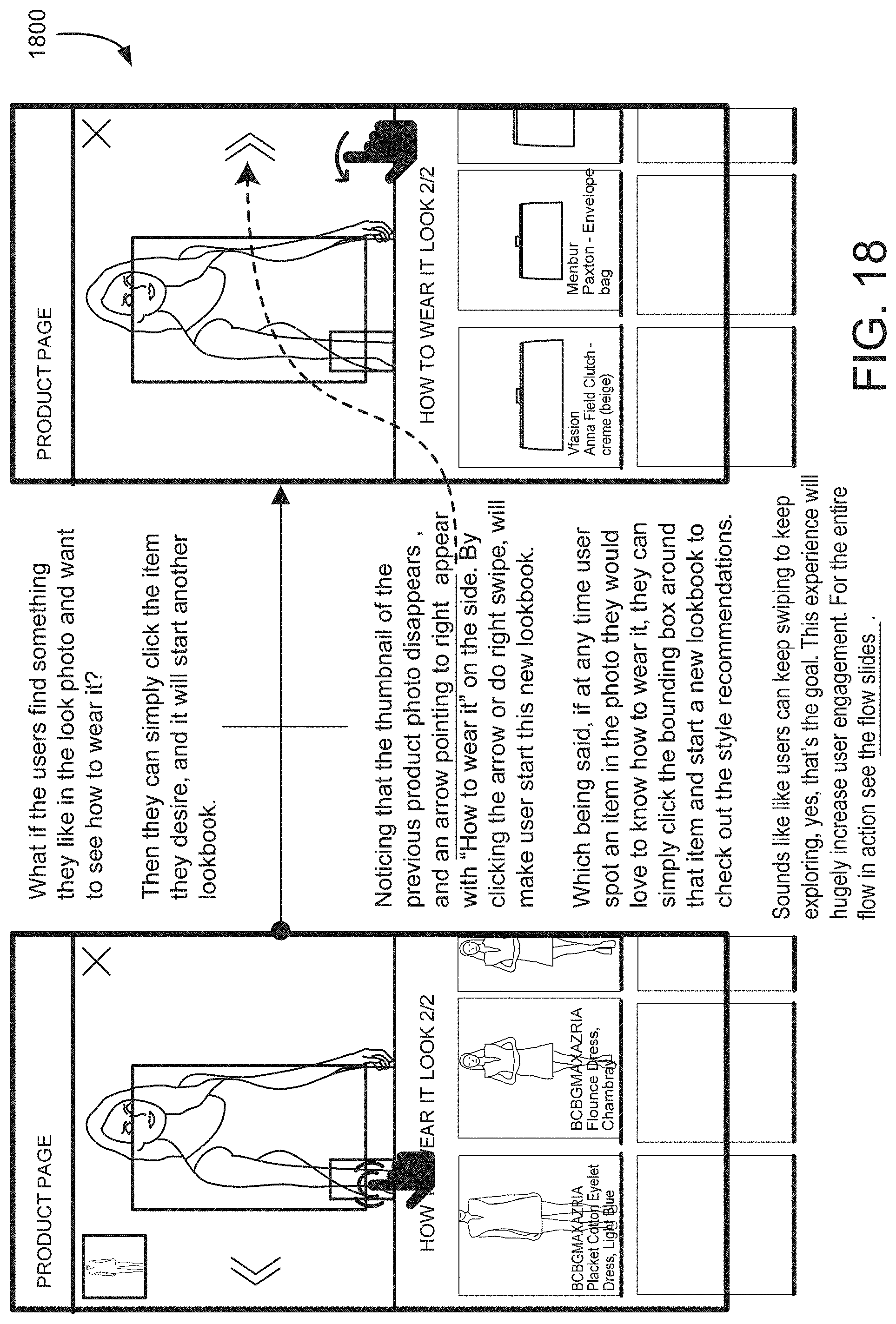

[0020] FIG. 18 shows an illustrative example of a user interface that allows the user to select a particular article of clothing from within a look, in an embodiment;

[0021] FIG. 19 shows an illustrative example of a desktop user interface for navigating looks and related articles of clothing, in an embodiment;

[0022] FIG. 20 shows an illustrative example of a user interface for navigating looks implemented on a mobile device, in an embodiment;

[0023] FIG. 21 shows an illustrative example of a user interface for navigating looks implemented on a web browser, in an embodiment;

[0024] FIG. 22 shows an illustrative example of a generic object detector and a hierarchical detector, in an embodiment;

[0025] FIG. 23 shows an illustrative example of a category tree representing nodes at various levels, in an embodiment;

[0026] FIG. 24 shows an illustrative example of a normalized error matrix, in an embodiment;

[0027] FIG. 25 shows an illustrative example of a hierarchical detector that can correct for missing detections from a generic detector, in an embodiment;

[0028] FIG. 26 shows an illustrative example of how a hierarchical detector suppresses sibling output in contrast to a generic detector, in an embodiment;

[0029] FIG. 27 shows an illustrative example of a graphical user interface that enables utilization of techniques described herein, in an embodiment;

[0030] FIG. 28 shows an illustrative example of a graphical user interface that enables utilization of techniques described herein, in an embodiment;

[0031] FIG. 29 shows an illustrative example of a triplet with overlaid bounding boxes, in an embodiment;

[0032] FIG. 30 shows a first portion of an illustrative example of a network design that captures both coarse-grained and fine-grained representations of fashion items in an image, in an embodiment;

[0033] FIG. 31 shows a second portion of an illustrative example of a network design that captures both coarse-grained and fine-grained representations of fashion items in an image, in an embodiment;

[0034] FIG. 32 shows an illustrative example of how batches are formed to generate triplets online, in an embodiment;

[0035] FIG. 33 shows an illustrative example of hard negative product mining steps, in an embodiment;

[0036] FIG. 34 shows an illustrative example of image and video product retrieval, in an embodiment;

[0037] FIG. 35 shows an illustrative example of a video product retrieval system that identifies one or more products from a video or image, in an embodiment;

[0038] FIG. 36 shows an illustrative example of quality head branch training, in an embodiment;

[0039] FIG. 37 shows an illustrative example of a product web page that includes product attributes, in an embodiment;

[0040] FIG. 38 shows an illustrative example of output from a detection and attribute network, in an embodiment;

[0041] FIG. 39 shows an illustrative example of a schematic of a detection and attribute network, in an embodiment;

[0042] FIG. 40 illustrates an environment in which various embodiments can be implemented; and

[0043] FIG. 41 illustrates aspects of an example environment for implementing aspects in accordance with various embodiments.

DETAILED DESCRIPTION

[0044] The current document describes an image processing system that is capable of identifying objects within images or video segments. In an embodiment, the system operates by identifying regions of an image that contain an object. In an embodiment, for each region, attributes of the object are determined, and based on the attributes, the system may identify the object, or identify similar objects. In some embodiments, the system uses a tracklet to track an object through a plurality of image frames within a video segment, allowing more than one image frame to be used in object detection, and thereby increasing the accuracy of the object detection.

[0045] In an embodiment, the system determines a category for each object detected. In one example, a hierarchical detector predicts a tree of categories as output. The approach learns the visual similarities between various object categories and predicts a tree for categories. The resulting framework significantly improves the generalization capabilities of the detector to novel objects. In some examples, the system can detect the addition of novel categories without the need to obtain new labeled data or retrain the network.

[0046] Various embodiments described herein utilize a deep learning based object detection framework and a similar object search framework that explicitly model the correlations present between various object categories. In an embodiment, an object detection framework predicts a hierarchical tree as output instead of a single category. For example, for a `t-shirt` object, a detector predicts [`top innerwear` → `t-shirt`]. The upper level category `top innerwear` includes [`blouses_shirts`, `tees`, `tank_camis`, `tunics`, `sweater`]. The hierarchical tree is estimated by analyzing the errors of an object detector which does not use any correlation between the object categories. Accordingly, techniques described herein comprise:

[0047] 1. A hierarchical detection framework for the object domain.

[0048] 2. A method to estimate the hierarchical/semantic tree based at least in part on directly analyzing the detection errors.

[0049] 3. Using the estimated hierarchy tree to demonstrate addition of novel category objects and performing search.
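As a non-limiting illustration of predicting a path rather than a single category, the category tree may be held as a child-to-parent map from which the root-to-leaf path is read off. The sketch below is a minimal example under stated assumptions: the `PARENT` map contains only the `top innerwear` group named above, and the function name `category_path` is hypothetical.

```python
from typing import Dict, List, Optional

# Child -> parent map; None marks a top-level category.
PARENT: Dict[str, Optional[str]] = {
    "top innerwear": None,
    "blouses_shirts": "top innerwear",
    "tees": "top innerwear",
    "tank_camis": "top innerwear",
    "tunics": "top innerwear",
    "sweater": "top innerwear",
}


def category_path(leaf: str, parent: Dict[str, Optional[str]]) -> List[str]:
    """Return the path from the top-level category down to the given leaf."""
    path: List[str] = []
    node: Optional[str] = leaf
    while node is not None:
        path.append(node)
        node = parent[node]  # raises KeyError for an unknown category
    return list(reversed(path))


path = category_path("tees", PARENT)
```

Under this representation, a novel leaf category can be introduced by adding one entry to the map, without changing the upper-level categories.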

[0050] In an embodiment, the system determines regions of interest within an image by computing bounding boxes and the corresponding categories for the relevant objects using visual data. In some examples, the category prediction assumes that only one of the K total object categories is associated with each bounding box. The 1-of-K classification may be achieved by a `Softmax` layer, which encourages each object category to be as far away as possible from the other object categories. However, in some examples, this process may fail to exploit the correlation information present in the object categories. For example, `jeans` is closer to `pants` than to `coat`. In an embodiment, exploitation of this correlation is accomplished by first predicting `lower body` and then choosing one element from the `lower body` category, which is a set of `jeans`, `pants`, and `leggings`, via hierarchical tree prediction. In some embodiments, the system improves the separation of objects belonging to a particular category from other objects, and improves the identification of the location of the object in the image.
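As a non-limiting illustration, one simple way to predict the upper level category first and then choose a leaf within it is to sum the softmax probabilities of the leaves under each upper level category. This aggregation rule, the `outerwear` group, the `jacket` category, and the score values are assumptions made for this sketch; the disclosure itself describes retraining the detector with a loss designed for hierarchical prediction.

```python
import math
from typing import Dict, List, Tuple


def softmax(scores: Dict[str, float]) -> Dict[str, float]:
    """Numerically stable softmax over named scores."""
    top = max(scores.values())
    exps = {name: math.exp(value - top) for name, value in scores.items()}
    total = sum(exps.values())
    return {name: e / total for name, e in exps.items()}


def predict_hierarchical(scores: Dict[str, float],
                         tree: Dict[str, List[str]]) -> Tuple[str, str]:
    """Choose the upper level category first, then the best leaf inside it."""
    probs = softmax(scores)
    group_prob = {parent: sum(probs[leaf] for leaf in leaves)
                  for parent, leaves in tree.items()}
    parent = max(group_prob, key=lambda name: group_prob[name])
    leaf = max(tree[parent], key=lambda name: probs[name])
    return parent, leaf


tree = {
    "lower body": ["jeans", "pants", "leggings"],
    "outerwear": ["coat", "jacket"],  # hypothetical group for illustration
}
# Hypothetical scores: `coat` has the single highest score, but the three
# visually similar `lower body` leaves together carry more probability.
scores = {"jeans": 1.0, "pants": 0.9, "leggings": 0.8, "coat": 1.2, "jacket": -2.0}
flat_choice = max(scores, key=lambda name: scores[name])
hierarchical_choice = predict_hierarchical(scores, tree)
```

In this toy case a flat 1-of-K decision selects `coat`, whereas the two-step decision selects `lower body` and then `jeans`, because probability mass split among similar categories is pooled before the first decision.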

[0051] In an embodiment, a hierarchical prediction framework is integrated with an object detector. In some embodiments, the generic detector can be any differentiable mapping (e.g., any deep learning based detector) $f(I) \rightarrow bb, c$ that takes an input image $I$ and produces a list of bounding boxes $bb$ and a corresponding category $c$ for each bounding box. The hierarchical detector learns a new differentiable mapping $f_h(I) \rightarrow bb, F(c)$ that produces a path/flow $F(c)$ from the root category to the leaf category for each bounding box. A differentiable mapping, in an embodiment, is a mathematical function that can be differentiated with respect to its parameters to estimate the value of those parameters from ground truth data via gradient-based optimization. In an example implementation, there are two steps involved in going from a generic detector to the hierarchical detector. The first step, in an embodiment, is to train a generic detector and estimate the category hierarchy tree as discussed below. In the second step, based on the category hierarchy, the deep learning framework is retrained with a loss function designed to predict the hierarchical category.

[0052] To estimate the category tree, in an embodiment, one estimates the visual similarity between various categories. Techniques disclosed and suggested herein improve on conventional techniques by organizing the visually similar categories for an object detector. Much prior work has focused on using attribute-level annotations, instead of category-level information, to generate an annotation tag hierarchy. However, such an effort requires large amounts of additional human effort to annotate each category with information such as viewpoint, object part location, rotation, and object specific attributes. Some examples generate an attribute-based (viewpoint, rotation, part location, etc.) hierarchical clustering for each object category to improve detection. In contrast, some embodiments disclosed herein use category level information and only generate a single hierarchical tree for the object categories.

[0053] Example implementations of the present disclosure estimate a category hierarchy by first evaluating the errors of a generic detector trained without any consideration of distance between categories and subsequently analyzing the cross-errors generated due to visual similarity between various categories. In an embodiment, a Faster-RCNN based detector is trained and detector errors are evaluated. For instance, a false positive generated by the generic detector (a Faster-RCNN detector in the current case) can be detected and some or all of the errors that result from visually similar categories are computed. These errors, for example, may be computed by measuring the false positives with bounding boxes having an intersection-over-union ("IOU") ratio between 0.1 and 0.5 with another object category. In this manner, visually similar classes such as `shoes` and `boots` will be frequently misclassified as each other, resulting in higher cross-category false positive errors.
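As a non-limiting illustration, the cross-category false positives described above may be counted as in the following sketch. The box format `(x1, y1, x2, y2)`, the function names, and the rule that a detection is a false positive when no ground truth box of its own category overlaps it with an IOU of at least 0.5 are assumptions made for illustration.

```python
from collections import Counter
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Labeled = Tuple[str, Box]                # (category, box)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def cross_category_errors(detections: List[Labeled], ground_truth: List[Labeled],
                          low: float = 0.1, high: float = 0.5) -> Counter:
    """Count (detected category, true category) confusions among false positives."""
    counts: Counter = Counter()
    for det_cat, det_box in detections:
        # Assumed true-positive rule: same category and IOU >= high.
        if any(gt_cat == det_cat and iou(det_box, gt_box) >= high
               for gt_cat, gt_box in ground_truth):
            continue
        for gt_cat, gt_box in ground_truth:
            if gt_cat != det_cat and low <= iou(det_box, gt_box) <= high:
                counts[(det_cat, gt_cat)] += 1
    return counts


# Hypothetical example: one `boots` object and three detections.
ground_truth = [("boots", (0.0, 0.0, 10.0, 10.0))]
detections = [
    ("boots", (0.0, 0.0, 10.0, 10.0)),    # correct detection
    ("shoes", (0.0, 0.0, 10.0, 4.0)),     # overlaps `boots` with IOU 0.4
    ("coat", (50.0, 50.0, 60.0, 60.0)),   # unrelated false positive
]
errors = cross_category_errors(detections, ground_truth)
```

Normalizing these counts per category yields an error matrix in which frequently confused pairs can be grouped under a common upper level category.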

[0054] Many conventional techniques have focused on using attribute-level information apart from the category specific information to perform detection for novel object categories. Some examples use attribute-level information to detect objects from novel categories. For instance, a new object category `horse` is recognized as a combination of `legs`, `mammal` and `animal` categories. Attribute-based recognition requires one to learn attribute specific classifiers and attribute-level annotation for each of the object categories. In comparison, some embodiments of the present disclosure require neither attribute annotations nor any attribute specific classifiers. For each new category, an expected root-level category may be assigned and subsequently a bounding box with the highest confidence score for that category may be estimated.

[0055] Systems operating according to various embodiments disclosed herein perform category specific non-maximal suppression to select bounding boxes for each leaf node category, where the bounding boxes may be unique. For all the lower level categories, such systems may also suppress the output by considering bounding boxes from all the children nodes. In some embodiments, this helps reduce spurious lower level category boxes whenever bounding boxes from more specific categories can be detected.
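As a non-limiting illustration, category specific non-maximal suppression followed by suppression of a parent category's boxes that coincide with boxes of its children may be sketched as follows. The 0.5 overlap threshold, the data layout, the single-level `children` map, and the function names are assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
Detection = Tuple[Box, float]            # (box, confidence score)


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(dets: List[Detection], threshold: float = 0.5) -> List[Detection]:
    """Greedy non-maximal suppression within a single category."""
    kept: List[Detection] = []
    for box, score in sorted(dets, key=lambda d: d[1], reverse=True):
        if all(iou(box, kept_box) < threshold for kept_box, _ in kept):
            kept.append((box, score))
    return kept


def hierarchical_suppress(detections: Dict[str, List[Detection]],
                          children: Dict[str, List[str]],
                          threshold: float = 0.5) -> Dict[str, List[Detection]]:
    """Run per-category NMS, then drop parent boxes covered by child boxes."""
    kept = {cat: nms(dets, threshold) for cat, dets in detections.items()}
    for parent, kids in children.items():
        child_boxes = [box for kid in kids for box, _ in kept.get(kid, [])]
        kept[parent] = [(box, score) for box, score in kept.get(parent, [])
                        if all(iou(box, cb) < threshold for cb in child_boxes)]
    return kept


# Hypothetical detections for a leaf category and its parent category.
detections = {
    "jeans": [((0.0, 0.0, 10.0, 10.0), 0.9), ((1.0, 0.0, 10.0, 10.0), 0.6)],
    "lower body": [((0.0, 0.0, 10.0, 10.0), 0.8), ((30.0, 30.0, 40.0, 40.0), 0.7)],
}
children = {"lower body": ["jeans", "pants", "leggings"]}
result = hierarchical_suppress(detections, children)
```

In this toy case the `lower body` box that coincides with the `jeans` box is removed, while the `lower body` box with no more specific detection is retained.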

[0056] In various embodiments, a user interface on a client computer system presents product information to a user. In some examples, the client computer system is a desktop computer system, a notebook computer system, a tablet device, a cellular phone, a thin client terminal, a kiosk, or a point-of-sale device. In one example, the client computer system is a personal computer device running a web browser, and the user interface is served from a web server operated by the merchant to the web browser. The web browser renders the user interface on a display of the personal computer device, and the user interacts with the display via a virtual keyboard or touch screen. In another example, the personal computer device is a personal computer system running a web browser, and the user interacts with the user interface using a keyboard and a mouse. Information exchanged between the client computer system and the web server operated by the merchant may be exchanged over a computer network. In some embodiments, information is encrypted and transmitted over a secure sockets layer ("SSL") or transport layer security ("TLS") connection.

[0057] In various examples, an important consideration is whether the user is able to determine how to combine the offered product with other products to produce a desired appearance or "look." For example, the user may wish to determine whether the offered product "goes with" other products or articles of clothing already owned by the user. In other examples, the user may wish to identify other products that may be purchased to wear with the offered product. In some situations, how the product will be used or worn to produce a desired look may be more definitive than the attractiveness of the individual product. Therefore, it is desirable to produce a system and a user interface that allows the user to easily identify related items that can be used with the offered product to produce various looks.

[0058] In an embodiment, the system provides a software development kit ("SDK") that can be added to the web code of a retailer's website. The SDK adds functionality to the retailer's website allowing users to identify items related to products offered for sale that will produce a desired look. The added functionality allows users to feel at ease by providing style recommendations that show how to wear the offered product.

[0059] In an embodiment, the SDK visually matches a brand's social media content and lookbook photos to corresponding product pages on the merchant's Web site. The SDK presents a user interface that allows the users to see how celebrities and ordinary people wear the products offered for sale. The system also identifies products similar to the items that the people are wearing in the recommended look, so that users can compare the entire look.

[0060] In an embodiment, the visual search functionality is added to the merchant's website by adding a link to a JavaScript file to the merchant's website code. The SDK serves as a layer on top of the original website, and in general, the SDK does not interfere with how the merchant's website operates.

[0061] In an embodiment, a user accesses the merchant's website using a web browser running on the client computer system. The web browser loads the code from the merchant's website which includes a reference to the SDK. The web browser loads executable code identified by the reference and executes it within the web browser. In some examples, the executable code is a JavaScript plug-in which is hosted on a computer system.

[0062] In an embodiment, the executable code downloaded by the SDK into the user's web browser is executed, causing the web browser to display the user interface described herein to the user. In an embodiment, the executable code also causes the web browser to contact an online service. The online service maintains a database of looks, where each look includes a list of products that, when worn together, form the associated look. In an embodiment, each look is stored in association with a set of products. In another embodiment, each product in the set of products is characterized as a set of characteristics. For example, a particular look may include a shirt, a pair of pants, and a hat. The shirt, pants, and hat may be identified as particular products that can be purchased. Alternatively, each product may be described as a set of characteristics. For example, the hat may be described as short, brown, and tweed, and the shirt may be described as white, long-sleeved, V-neck, and cotton knit.

[0063] In an embodiment, the online service is provided with a particular article of clothing in the form of a SKU, a product identifier, a set of characteristics, or an image, and the online service identifies one or more looks that include the particular article of clothing. In some embodiments, the online service identifies one or more looks that include similar articles of clothing. The online service returns the look in the form of an image, and information regarding the individual products that are associated with the look. The online service may also include bounding box information indicating where each product is worn on the image.

[0064] FIG. 1 shows an illustrative example of a system 100 that presents product recommendations to a user, in an embodiment. In an embodiment, the system 100 includes a Web server 102 that hosts a website. In various examples, the Web server 102 may be a computer server, server cluster, virtual computer system, computer runtime, or web hosting service. The website is a set of hypertext markup language ("HTML") files, script files, multimedia files, extensible markup language ("XML") files, and other files stored on computer readable media that is accessible to the Web server 102. Executable instructions are stored on a memory of the Web server 102. The executable instructions, as a result of being executed by a processor of the Web server 102, cause the Web server 102 to serve the contents of the website over a network interface in accordance with the hypertext transfer protocol ("HTTP") or secure hypertext transfer protocol ("HTTPS"). In an embodiment, the Web server 102 includes a network interface connected to the Internet.

[0065] A client computer system 104 communicates with the Web server 102 using a web browser via a computer network. In an embodiment, the client computer system 104 may be a personal computer system, a laptop computer system, a tablet computer system, a cell phone, or handheld device that includes a processor, memory, and an interface for communicating with the Web server 102. In an embodiment, the interface may be an Ethernet interface, a Wi-Fi interface, cellular interface, a Bluetooth interface, a fiber-optic interface, or satellite interface that allows communication, either directly or indirectly, with the Web server 102. Using the client computer system 104, a user 106 is able to explore products for sale as well as looks that are presented by the Web server 102. In various examples, the Web server 102 recommends various products to the user 106 based on product linkages established through information maintained by the Web server 102.

[0066] In an embodiment, the Web server 102 maintains a database of style images 108, a database of product information 110, and a database of look information 112. In various examples, style images may include images or videos of celebrities, models, or persons demonstrating a particular look. The database of product information 110 may include information on where a product may be purchased, an associated designer or source, and various attributes of a product such as fabric type, color, texture, cost, and size. The database of look information 112 includes information that describes a set of articles that, when worn together, create a desired appearance. In some examples, the database of look information 112 may be used by the Web server 102 to identify articles of clothing that may be worn together to achieve a particular look, or to suggest additional products for purchase that may be combined with an already purchased product. In an embodiment, recommendations may be made by sending information describing the set of additional products from the Web server 102 to the client computer system 104 via a network.

[0067] FIG. 2 shows an illustrative example of a data record 200 for storing information associated with a look, in an embodiment. A data structure is an organization of data that specifies formatting, arrangement, and linkage between individual data fields such that a computer program is able to navigate and retrieve particular data structures and the various fields of individual data structures. A data record is a unit of data stored in accordance with a particular data structure. The data record 200 may be stored in semiconductor memory or on disk that is accessible to the computer system. In an embodiment, a look record 202 includes a look source data field 204, and an article set 206. The look source data field 204 may include a uniform resource locator ("URL"), image identifier, video segment identifier, website address, filename, or memory pointer that identifies an image, video segment, or look book used to generate the look record 202. For example, a look record may be generated based on an image of a celebrity, and the source of the image may be identified in the look source data field 204. In another example, a look record may be generated from entries in a look book provided by a clothing manufacturer, and the look source data field 204 may identify the look book.

[0068] The article set 206 is a linked list, array, hash table, or other container structure that holds the set of article records. Each article record in the article set 206 describes an article included in the look. An article can be an article of clothing such as a skirt, shirt, shoes, blouse, hat, jewelry, handbag, watch, or wearable item. In the example illustrated in FIG. 2, the article set 206 includes a first article 208 and a second article 220. In various examples, other numbers of articles may be present in the article set 206. The first article 208 includes an article position field 210 and a set of article attributes 212. The article position field 210 describes a position in which the article is worn. For example, an article may be worn as a top, as a bottom, as a hat, as gloves, as shoes, or carried as a handbag. The set of article attributes 212 describes characteristics of the article and in an example includes a texture field 214, a color field 216, and a pattern field 218. The texture field 214 may specify a fabric type, a texture, a level of translucence, or thickness. The color field 216 may indicate a named color, a color hue, a color intensity, a color saturation, a level of transparency, a reflectivity, or optical characteristic of the article. The pattern field 218 may describe a fabric pattern, a weave, a print design, or image present on the article. The second article 220 includes data fields similar to those in the first article 208 including an article position field 222 and an article attribute set 224.
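By way of illustration only, the look record of FIG. 2 could be sketched as a set of nested Python data classes. The class names, the choice of a list for the article set, and the example values (including the URL) are assumptions made for this sketch and do not appear in the figures.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ArticleAttributes:
    """Article attributes 212: texture 214, color 216, and pattern 218."""
    texture: Optional[str] = None
    color: Optional[str] = None
    pattern: Optional[str] = None


@dataclass
class Article:
    """An article record such as the first article 208 or second article 220."""
    position: str  # article position field 210, e.g. "top" or "hat"
    attributes: ArticleAttributes = field(default_factory=ArticleAttributes)


@dataclass
class LookRecord:
    """Look record 202: look source data field 204 plus article set 206."""
    source: str
    articles: List[Article] = field(default_factory=list)


# Hypothetical look with two articles, mirroring the example of FIG. 2.
look = LookRecord(
    source="https://example.com/lookbook/look-1.jpg",
    articles=[
        Article("top", ArticleAttributes("cotton knit", "white", "solid")),
        Article("hat", ArticleAttributes("tweed", "brown", "herringbone")),
    ],
)
```

A list is used here for the article set because it preserves insertion order; a hash table keyed by position would serve equally well when at most one article is worn in each position.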

[0069] FIG. 3 shows an illustrative example of a data record 300 for storing information associated with an image, in an embodiment. An image record 302 includes a set of image properties 304 and information that describes an article set 306. The image record 302 may be generated to describe the contents of a digital image or a video segment. For example, if the image record 302 describes a digital image, the set of image properties 304 includes an image source 308 that identifies the image file. If the image record 302 describes a video segment, the image source 308 identifies a segment of a video file. An image subject field 310 includes information describing the subject of the image. For example, the subject may be a model, an actor, or a celebrity.

[0070] In an embodiment, the article set 306 includes one or more article records that correspond to a set of articles found within the image. The article records may be stored as an array, linked lists, hash table, relational database, or other data structure. An article record 312 includes an article position 314 and a set of article attributes 316. The article position 314 describes the location of the article relative to the subject of the image. For example, the article position may indicate that the article is a hat, pants, shoes, blouse, dress, watch, or handbag. The set of article attributes 316 may include a texture, color, pattern, or other information associated with an article as described elsewhere in the present application (for example, in FIG. 2).

[0071] FIG. 4 shows an illustrative example of a data record 400 for storing information associated with a product, in an embodiment. A product is an article captured in an image or available for sale. For example, an article may be described as a large white T-shirt, and a particular product matching that article may be an ABC Corporation cotton large T sold by retailer XYZ. In an embodiment, a product record 402 includes a product position field 404, a set of product attributes 406, and a set of availability information 408. The product position field 404 indicates how the product (such as a hat, pants, shirt, dress, shoes, or handbag) is worn (on the head, legs, torso, whole body, feet, or hand). The set of product attributes 406 contains a variety of subfields that describe attributes of the product. In an example, the set of product attributes 406 includes a texture field 410, a color field 412, and a pattern field 414. In an embodiment, the product attributes may include some or all of the attributes of an article. In some examples, product attributes may include a superset or a subset of the attributes of an article. For example, product attributes may include characteristics that are not directly observable from an image such as a fabric blend, a fabric treatment, washing instructions, or country of origin.

[0072] In an embodiment, the set of availability information 408 includes information that describes how the product may be obtained by a user. In an embodiment, the set of availability information 408 includes a vendor field 416, a quantity field 418, a price field 420, and a URL field 422. The vendor field 416 identifies a vendor or vendors offering the product for sale. The vendor field 416 may include a vendor name, a vendor identifier, or a vendor website address. The quantity field 418 may include information describing the availability of the product including the quantity of the product available for sale, the quantity of the product available broken down by size (for example how many small, medium, and large), and whether the product is available for backorder. The price field 420 indicates the price of the product and may include quantity discount information, retail, and wholesale pricing. The URL field 422 may include a URL of a website at which the product may be purchased.
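As a non-limiting sketch, the product record of FIG. 4 might be represented as follows. The field names, the use of integer cents for the price, the can_order helper, and all example values are assumptions introduced for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Availability:
    """Availability information 408."""
    vendor: str                        # vendor field 416
    quantity_by_size: Dict[str, int]   # quantity field 418, broken down by size
    price_cents: int                   # price field 420 (retail price only)
    url: str                           # URL field 422
    backorder_allowed: bool = False    # part of quantity field 418

    def can_order(self, size: str) -> bool:
        """True if the size is in stock or the product can be backordered."""
        return self.quantity_by_size.get(size, 0) > 0 or self.backorder_allowed


@dataclass
class ProductRecord:
    """Product record 402."""
    position: str                # product position field 404
    attributes: Dict[str, str]   # product attributes 406
    availability: Availability


# Hypothetical product: the white cotton T-shirt used as an example above.
tee = ProductRecord(
    position="torso",
    attributes={
        "texture": "cotton",   # texture field 410
        "color": "white",      # color field 412
        "pattern": "solid",    # pattern field 414
        "washing": "machine wash cold",  # not observable from an image
    },
    availability=Availability(
        vendor="XYZ",
        quantity_by_size={"S": 0, "M": 4, "L": 12},
        price_cents=1999,
        url="https://example.com/products/tee",
    ),
)
```

Storing the attributes as a dictionary rather than fixed fields lets a product carry a superset of the attributes of an article, as described above.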

[0073] FIG. 5 shows an illustrative example of an association 500 between an image record and a look record, in an embodiment. An association between records may be established using a pointer, a linking record that references each of the linked records, or by establishing matching data values between the associated records. FIG. 5 illustrates an association between a set of articles detected in an image, and a set of articles that make up a look. In an embodiment, the system is provided with an image in the form of a URL, filename, image file, or video segment. The system processes the image to identify a set of articles worn by a subject. For example, a picture of a celebrity may be submitted to the system to identify a set of articles worn by the celebrity. Once the articles worn by the subject of the image are identified, an associated look record can be created.

[0074] In an embodiment, an image record 502 includes a set of image properties 506 and information that describes an article set 508. The image record 502 may be generated to describe the contents of a digital image or a video segment. For example, if the image record 502 describes a digital image, the set of image properties 506 includes an image source field that identifies the image file. If the image record 502 describes a video segment, the image properties 506 identify a segment of a video file. An image subject field may include information describing the subject of the image. For example, the subject may be a model, an actor, or a celebrity.

[0075] In an embodiment, the article set 508 includes one or more article records that correspond to a set of articles found within the image. The article records may be stored as an array, linked lists, hash table, relational database, or other data structure. An article record 510 includes an article position 512 and a set of article attributes 514. The article position 512 describes the location of the article relative to the subject of the image. For example, the article position (head, feet, torso etc.) may suggest that the article is a hat, pants, shoes, blouse, dress, watch, or handbag. The set of article attributes 514 may include a texture, color, pattern, or other information associated with an article as described elsewhere in the present application (for example, in FIG. 2).

[0076] In an embodiment, a look record 504 includes a look source data field 516, and an article set 518. The look source data field 516 may include a uniform resource locator ("URL"), image identifier, video segment identifier, website address, filename, or memory pointer that identifies an image, video segment, or look book used to generate the look record 504. For example, a look record may be generated based on an image of a celebrity, and the source of the image may be identified in the look source data field 516. In another example, a look record may be generated from entries in a look book provided by a clothing manufacturer, and the look source data field 516 may identify the look book.

[0077] The article set 518 is a linked list, array, hash table, or other container structure that holds the set of article records. Each article record in the article set 518 describes an article included in the look. An article can be an article of clothing such as a skirt, shirt, shoes, blouse, hat, jewelry, handbag, watch, or wearable item. In the example illustrated in FIG. 5, the article set 518 includes an article 520. In various examples, other numbers of articles may be present in the article set 518. The article 520 includes an article position field 522 and a set of article attributes 524. The article position field 522 describes a position in which the article is worn. For example, an article may be worn as a top, as a bottom, as a hat, as gloves, as shoes, or carried as a handbag. The set of article attributes 524 describes characteristics of the article and, for example, may include a texture field, a color field, and a pattern field.

[0078] In various embodiments, the look record 504 may be used by the system to make recommendations to a user by identifying particular products that match articles in the article set 518. By identifying particular products that match the articles in the article set 518, the system helps the user identify those products that, when worn together, achieve a look similar to that captured in the image.

[0079] FIG. 6 shows an illustrative example of a process 600 that, as a result of being performed by a computer system, generates a look record based on an image, in an embodiment. The process begins at block 602 with a computer system acquiring an image of the subject. In various examples, the image may be acquired by acquiring a file name, file identifier, a stream identifier, or a block of image data. In additional examples, the image may be acquired as a portion of a video stream or as a composite of a number of frames within a video stream. For example, the image may be specified as information that identifies a video file, and a position within the video file.

[0080] In an embodiment, at block 604, the computer system identifies a set of articles worn by a subject within the image. In some embodiments, the computer system identifies the particular subject as a particular celebrity or model. In some embodiments, the computer system identifies characteristics of the subject such as male, female, youth, or infant. In some examples, the computer system identifies a plurality of subjects present in the image. In an embodiment, for at least one of the subjects, the computer system identifies a set of articles worn by the subject. As described elsewhere in the current application, articles may be articles of clothing, accessories, jewelry, handbags, or items worn by the subject. The computer system identifies a position or way in which each article is worn by the subject. In an embodiment, the computer system identifies the article as a hat, pants, dress, top, watch, handbag, necklace, bracelet, earring, pin, brooch, sash, or belt.

[0081] In an embodiment, at block 606, the computer system identifies one or more attributes for each article worn by a subject. Attributes may be identified such as those identified elsewhere in the current document. In various embodiments, the computer system identifies a texture, color, material, or finish on the article. In additional embodiments, the computer system identifies a size of the article. The size of the article may be determined based at least in part on the identity of the subject.

[0082] At block 608, the computer system generates a record of a look in accordance with the items worn by a particular subject in the image. In some embodiments, the computer system generates a look record based on the articles worn by each subject identified in the image. The look record includes source information that identifies the image, and article information identified above. The look record may be constructed in accordance with the record structure shown in FIG. 2.
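The flow of process 600 can be sketched, under stated assumptions, as shown below. The detector is passed in as a function because the present paragraphs do not fix how articles and attributes are recognized; the fake_detector stand-in, the dictionary layout, and the file name are hypothetical and serve only to make the sketch runnable.

```python
from typing import Callable, Dict, List

# A detector takes an image reference and returns, for each subject found,
# a list of detected articles; each article is a dict with "position" and
# "attributes" keys. This interface is an assumption of the sketch.
Detector = Callable[[str], List[List[Dict]]]


def generate_look_records(image_ref: str, detect: Detector) -> List[Dict]:
    """Blocks 602-608: build one look record per subject found in the image."""
    records = []
    for articles in detect(image_ref):      # blocks 604 and 606
        if not articles:
            continue                        # subject with no detected articles
        records.append({                    # block 608
            "source": image_ref,
            "articles": [
                {"position": a["position"], "attributes": dict(a["attributes"])}
                for a in articles
            ],
        })
    return records


def fake_detector(image_ref: str) -> List[List[Dict]]:
    """Stand-in for a real vision model; returns canned data for one subject."""
    return [[
        {"position": "top", "attributes": {"color": "white", "texture": "cotton"}},
        {"position": "hat", "attributes": {"color": "brown", "texture": "tweed"}},
    ]]


looks = generate_look_records("celebrity.jpg", fake_detector)
```

Each returned record carries the source information and the article information in the manner of the record structure of FIG. 2.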

[0083] FIG. 7 shows an illustrative example of an association 700 between a look record and a set of product records, in an embodiment. In an embodiment, a look record can be used by the system to identify products that, when worn together, can reproduce an overall appearance or "look" associated with the look record. In an embodiment, a look record 702 includes a look source data field 710, and an article set 712. The look source data field 710 may include a uniform resource locator ("URL"), image identifier, video segment identifier, website address, filename, or memory pointer that identifies an image, video segment, or look book used to generate the look record 702. For example, a look record may be generated from entries in a look book provided by a clothing manufacturer, and the look source data field 710 may identify the source of the look book.

[0084] The article set 712 is a linked list, array, hash table, or other container structure that holds the set of article records. Each article record in the article set 712 describes an article included in the look. An article can be an article of clothing such as a skirt, shirt, shoes, blouse, hat, jewelry, handbag, watch, or wearable item. In the example illustrated in FIG. 7, the article set 712 includes a first article 714, a second article 720, and a third article 726. In various examples, other numbers of articles may be present in the article set 712. Each article includes information that describes an article position and article attributes. In the example shown, the first article 714 includes an article position field 716 and a set of article attributes 718. The second article 720 includes an article position field 722 and a set of article attributes 724. The third article 726 includes an article position field 728 and a set of article attributes 730. The article position fields describe a position in which the associated article is worn. The article attributes describe various aspects of each article as described elsewhere in the present document.

[0085] In an embodiment, the computer system identifies products matching various articles in the look record 702. In the example shown in FIG. 7, the computer system identifies a first product record 704 that matches the first article 714, a second product record 706 that matches the second article 720, and a third product record 708 that matches the third article 726. In some examples, the computer system may identify a plurality of products that match a particular article in the look record 702. Each product record includes an associated product position 732, 738, 744, product attributes 734, 740, 746, and product availability 736, 742, 748, as described elsewhere in the present document. In an embodiment, a product matches an article if the article position matches the product position and a threshold proportion of the product attributes match the attributes of the associated article. In some examples, all product attributes match all article attributes. In another example, a product matches an article when selected attributes, such as color and style, match. In yet another example, a measure of similarity is determined between a product and an article, and a match is determined when the measure of similarity exceeds a threshold value. By identifying a set of products that match a set of articles in a look, the system is able to recommend products to users that, when worn together, produce a similar look. In some examples, the system uses information in the product records to direct the user to websites or merchants from which the particular products can be purchased.
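One possible reading of the threshold-based matching rule is sketched below. The function names, the dictionary layout, and the default threshold of 0.5 are assumptions. The proportion is computed over the attributes of the article rather than of the product, which is a choice made for this sketch so that product-only attributes (such as washing instructions) do not lower the score.

```python
from typing import Dict


def attribute_match_fraction(article_attrs: Dict[str, str],
                             product_attrs: Dict[str, str]) -> float:
    """Fraction of the article's attributes that the product shares."""
    if not article_attrs:
        return 0.0  # nothing to compare
    matched = sum(1 for key, value in article_attrs.items()
                  if product_attrs.get(key) == value)
    return matched / len(article_attrs)


def product_matches_article(article: Dict, product: Dict,
                            threshold: float = 0.5) -> bool:
    """Match requires equal positions and enough shared attributes."""
    if article["position"] != product["position"]:
        return False
    fraction = attribute_match_fraction(article["attributes"],
                                        product["attributes"])
    return fraction >= threshold


# Hypothetical article and products.
article = {"position": "top",
           "attributes": {"color": "white", "texture": "cotton", "pattern": "solid"}}
shirt = {"position": "top",
         "attributes": {"color": "white", "texture": "cotton",
                        "pattern": "striped", "washing": "cold"}}
hat = {"position": "hat", "attributes": {"color": "white"}}
```

Here the shirt shares two of the three article attributes, so it matches at a threshold of 0.5 but not at 0.75. Setting the threshold to 1.0 corresponds to the example in which all attributes must match.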

[0086] FIG. 8 shows an illustrative example of a process 800 that, as a result of being performed by a computer system, identifies a set of products to achieve a desired look, in an embodiment. In an embodiment, the process begins at block 802 with the computer system identifying a look desired by a user. The look may be identified by selecting an image from which a look is generated, by selecting a look record from which a look has already been generated or otherwise acquired, or by supplying an image or video segment from which a look record can be generated.

[0087] At block 804, the computer system identifies the attributes of the articles present in the selected look. In various examples, the look may include a plurality of articles where each article has a set of attributes as described above. At block 806, the system searches a product database to identify products having attributes that match the articles in the selected look. In some embodiments, a product database is specified to limit the search to products from a given manufacturer or available from a particular merchant website. In some implementations, matching products have all of the attributes of an article in the look. In another implementation, matching products have a threshold percentage of the attributes of an article in the look.

[0088] At block 808, the computer system presents the identified products to the user. The products may be presented in the form of a webpage having graphical user interface elements as shown and described in the present document. In some examples, the user may be directed to similar looks to identify additional products.
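Blocks 804 and 806 of process 800 may be sketched as follows, assuming an in-memory list in place of a product database. The function names, the "merchant" and "sku" keys, and the example catalog are hypothetical. The merchant argument models the optional restriction of the search to a particular merchant, and the threshold argument models the choice between matching all attributes (1.0) and matching a threshold percentage.

```python
from typing import Dict, List, Optional


def attribute_overlap(article: Dict, product: Dict) -> float:
    """Fraction of the article's attributes that the product shares."""
    wanted = article["attributes"]
    if not wanted:
        return 0.0
    have = product["attributes"]
    return sum(have.get(k) == v for k, v in wanted.items()) / len(wanted)


def products_for_look(look: Dict, catalog: List[Dict],
                      merchant: Optional[str] = None,
                      threshold: float = 1.0) -> List[List[Dict]]:
    """Return one list of matching products per article, in article order."""
    results = []
    for article in look["articles"]:                   # block 804
        results.append([
            p for p in catalog                         # block 806
            if (merchant is None or p["merchant"] == merchant)
            and p["position"] == article["position"]
            and attribute_overlap(article, p) >= threshold
        ])
    return results


look = {"source": "lookbook-7", "articles": [
    {"position": "top", "attributes": {"color": "white", "pattern": "solid"}},
    {"position": "hat", "attributes": {"color": "brown"}},
]}
catalog = [
    {"sku": "T1", "merchant": "XYZ", "position": "top",
     "attributes": {"color": "white", "pattern": "solid", "fabric": "cotton"}},
    {"sku": "T2", "merchant": "XYZ", "position": "top",
     "attributes": {"color": "white", "pattern": "striped"}},
    {"sku": "H1", "merchant": "ABC", "position": "hat",
     "attributes": {"color": "brown"}},
]

exact = products_for_look(look, catalog)
loose = products_for_look(look, catalog, threshold=0.5)
xyz_only = products_for_look(look, catalog, merchant="XYZ")
```

The per-article result lists can then be rendered to the user at block 808; an empty list for an article indicates that no product in the searched catalog satisfied the chosen threshold.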

[0089] FIG. 9 shows an illustrative example of an association 900 between a product owned by a user, and a related product that may be worn with the user's product to achieve a look, in an embodiment. In an embodiment, a first product record 902 is used to identify a look record 904 which in turn is used to identify a second product record 906. The first product record 902 holds information that represents a product selected by the user. In some examples, the product is a product in a cart of a website. In another example, the product is a product previously purchased by the user. In yet another example, the product is a product currently owned by the user. The first product record includes a product position field 908, a set of product attributes 910, and product availability information 912. The product position field 908 and the set of product attributes 910 are used to identify the look record 904 based on the presence of an article that matches the attributes and position of the first product record 902. In some implementations a plurality of look records may be identified based on the presence of matching articles.

[0090] In an embodiment, the look record 904 includes a look source field 914, and a set of articles 916. In the example shown in FIG. 9, the set of articles 916 includes a first article 917, a second article 921, and a third article 925. The first article 917 includes an article position field 918 and a set of article attributes 920. The second article 921 includes an article position field 922 and a set of article attributes 924. The third article 925 includes an article position field 926 and a set of article attributes 928.

[0091] In the example illustrated in FIG. 9, the computer system identifies that the attributes in the first product record 902 match the article attributes 928 of the third article 925. As a result of the presence of the matching article, the computer system examines the other articles in the set of articles 916 and searches for products matching the attributes of each article in the set of articles 916. In the example shown in FIG. 9, the computer system identifies the second product record 906 which has a product position field 930, a set of product attributes 932, and a set of product availability information 934, and determines that the product attributes 932 and product position field 930 match the corresponding article position field 918 and article attributes 920 of the first article 917. In an embodiment, the computer system recommends the product represented by the second product record 906 as one that can be worn with the product associated with the first product record 902 to achieve the look represented by the look record 904.

[0092] FIG. 10 shows an illustrative example of a process 1000 that, as a result of being performed by a computer system, identifies a product that may be worn with an indicated product to achieve a particular look. In an embodiment, the process begins at block 1002 with the computer system identifying a product owned by a user. In some examples, the computer system searches a purchase history of the user and identifies the product as one that has previously been purchased by the user. In another implementation, the product may be a product in an electronic shopping cart of a website. At block 1004, the computer system determines the attributes of the identified product such as the color, texture, pattern, and position of the product when worn by the user. In some implementations, the attributes are determined based on an image of the product. In other implementations, the attributes are retrieved from a product database provided by the manufacturer or retailer.

[0093] In an embodiment, at block 1006, the computer system identifies a look that includes a product that matches the identified product. In some implementations, the computer system identifies look records from a database of look records that have a sufficient number of matching attributes with the identified product. In another implementation, the computer system identifies look records that contain a matching product. At block 1008, the computer system searches the identified look records and identifies additional articles in those look records. For each additional article in the identified look records, the computer system identifies the attributes of those articles, and at block 1010, identifies products from a product database containing a sufficient set of matching attributes of those articles. In this way, in some examples, the system identifies products that when worn with the identified product, "go together" or produce the "look" associated with the linking look record.

[0094] At block 1012, the system presents the identified products as recommendations to the user. In some implementations, the recommendations may be presented along with the look so that the user can visualize how the articles may be worn together to produce the linking look.
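Blocks 1006 through 1010 of process 1000 can be illustrated by the following sketch, in which a look record links the user's product to companion products. The function names, the min_shared parameter (standing in for "a sufficient number of matching attributes"), and the example records are assumptions made for illustration.

```python
from typing import Dict, List, Tuple


def is_match(article: Dict, product: Dict, min_shared: int = 1) -> bool:
    """Positions must agree and at least min_shared attributes must be equal."""
    if article["position"] != product["position"]:
        return False
    shared = sum(product["attributes"].get(k) == v
                 for k, v in article["attributes"].items())
    return shared >= min_shared


def recommend_companions(owned: Dict, looks: List[Dict], catalog: List[Dict],
                         min_shared: int = 1) -> List[Tuple[str, str]]:
    """Return (look source, product SKU) pairs that go with the owned product."""
    recommendations = []
    for look in looks:
        # Block 1006: find an article in the look that matches the owned product.
        anchor = next((a for a in look["articles"]
                       if is_match(a, owned, min_shared)), None)
        if anchor is None:
            continue
        for article in look["articles"]:               # block 1008
            if article is anchor:
                continue
            for product in catalog:                    # block 1010
                if product["sku"] != owned["sku"] and is_match(article, product, min_shared):
                    recommendations.append((look["source"], product["sku"]))
    return recommendations


owned = {"sku": "S1", "position": "top",
         "attributes": {"color": "white", "pattern": "solid"}}
looks = [
    {"source": "look-A", "articles": [
        {"position": "top", "attributes": {"color": "white"}},
        {"position": "bottom", "attributes": {"color": "blue", "texture": "denim"}},
    ]},
    {"source": "look-B", "articles": [
        {"position": "top", "attributes": {"color": "red"}},
        {"position": "hat", "attributes": {"color": "black"}},
    ]},
]
catalog = [
    {"sku": "J1", "position": "bottom",
     "attributes": {"color": "blue", "texture": "denim"}},
    {"sku": "H9", "position": "hat", "attributes": {"color": "black"}},
]

recs = recommend_companions(owned, looks, catalog)
```

Returning the look source alongside each product allows the recommendations to be presented together with the linking look at block 1012.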

[0095] FIG. 11 shows an illustrative example of a process that identifies, based at least in part on a specified article of clothing, a set of additional articles that, when worn in combination with the selected article of clothing, achieve a particular look, in an embodiment. While viewing a website, a user identifies a particular product such as a shirt as indicated in FIG. 11. In order to view looks that are relevant to the particular product, the user is able to click on an icon, button, or other UI element that signals the SDK to find related looks. Information identifying a product is sent from the user's web browser to an online service. In some embodiments, information is an image of the product. In other embodiments, information is a SKU, product identifier, or list of product characteristics.

[0096] The online service receives the identifying information, and uses the identifying information to identify one or more associated looks. In some embodiments, associated looks are identified as looks that include the identified product. In another embodiment, associated looks are identified as looks that include a product similar to the identified product. The online service returns look information to the web browser. The look information includes an image of the look, a list of products associated with a look, and a bounding box identifying each associated product in the image of the look.

[0097] Upon receiving the information identifying the look, the executable code running on the browser displays the look, and highlights the products that are associated with the look. In some examples, each product associated with a look is surrounded by a bounding box. By selecting a bounding box, the user is presented with an image of the associated product. In some examples, the user is presented with additional information about the associated product and may also be presented with an option to purchase the associated product. In some embodiments, the user interface allows the user to explore products similar to a selected product. In this way, users may be provided with the matching products that are associated with a look, as well as similar products that may be used to achieve a similar look.
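For illustration, the look information returned by the online service and the selection of a bounding box might be modeled as below. The present paragraphs do not specify a wire format, so the key names, the pixel-based box layout (top-left corner plus width and height), and the product_at helper are assumptions of this sketch.

```python
from typing import Dict, Optional

# Hypothetical look information: an image, its products, and one bounding
# box per product expressed in image pixels.
look_info = {
    "image": "look-123.jpg",
    "products": [
        {"sku": "HAT-1", "box": {"x": 40, "y": 10, "w": 80, "h": 50}},
        {"sku": "SHIRT-7", "box": {"x": 20, "y": 70, "w": 120, "h": 140}},
    ],
}


def product_at(info: Dict, px: int, py: int) -> Optional[Dict]:
    """Return the first product whose bounding box contains the point, if any."""
    for product in info["products"]:
        box = product["box"]
        inside_x = box["x"] <= px < box["x"] + box["w"]
        inside_y = box["y"] <= py < box["y"] + box["h"]
        if inside_x and inside_y:
            return product
    return None


clicked = product_at(look_info, 50, 20)
```

When the point falls inside a bounding box, the user interface can present the image and purchase option of the returned product; a result of None indicates that the selection fell outside every bounding box.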

[0098] In various embodiments, the system attempts to identify, from a specified set of catalogs, products that are present within a particular look, based at least in part on a set of identified characteristics of each product in the look. If the system is unable to find a product matching a particular set of product characteristics, the system will attempt to identify the most similar product from the set of catalogs. The system presents product images for the identified products to the user. If the user selects a product image, the system identifies one or more similar products from the available catalogs, and the similar products are presented to the user in order of their similarity to the selected product. In some embodiments, the available sources of product information may be limited to a particular set of catalogs selected by the user hosting the SDK. In some examples, results may be sorted so that similar products from a preferred catalog are presented higher in the search results.
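The matching and ordering behavior described above can be sketched as follows. This is an illustrative assumption, not the disclosed implementation: product characteristics are modeled as sets of strings, similarity is measured with the Jaccard index as a stand-in for whatever measure the system actually uses, and the function names and dictionary keys are hypothetical.

```python
from typing import Dict, FrozenSet, List, Optional, Set


def jaccard(a: Set[str], b: Set[str]) -> float:
    """Stand-in similarity: shared characteristics over all characteristics."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def best_match(query: Set[str], catalog: List[Dict]) -> Optional[Dict]:
    """Return an exact match if one exists, else the most similar product."""
    if not catalog:
        return None
    for product in catalog:
        if product["traits"] == query:
            return product
    return max(catalog, key=lambda p: jaccard(query, p["traits"]))


def rank_similar(
    query: Set[str],
    catalog: List[Dict],
    preferred: FrozenSet[str] = frozenset(),
) -> List[Dict]:
    """Order by similarity, listing preferred-catalog products first."""
    def key(product: Dict):
        return (product["catalog"] in preferred, jaccard(query, product["traits"]))
    return sorted(catalog, key=key, reverse=True)
```

Placing the preferred-catalog flag first in the sort key is one of several ways to present preferred results higher; a weighted boost to the similarity score would be an alternative.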

[0099] In an embodiment, the system may be adapted to identify articles of clothing that may be worn in combination with other articles of clothing to produce a desired look or overall appearance. In an embodiment, a user selects an article of clothing such as a shirt, dress, pants, shoes, watch, handbag, jewelry, or accessory. In various embodiments, the article may be selected from a web page, a digital image, or even a video stream. In an embodiment, the system identifies one or more looks that contain the selected article, or one or more looks that contain an article similar to the selected article. A look is a collection of articles that, when worn together, create a particular overall appearance. Looks may be ranked in accordance with a preference of the user, a score assigned by an influencer, a popularity measure, a style tag, a celebrity identity, or other measure. In some examples, the user interface allows the user to navigate a plurality of looks to identify a desired overall appearance. In some examples, the system allows the user to select a look, and in response, the user interface presents associated articles of clothing that, when worn together, produce the selected look. In some embodiments, the user interface identifies similar articles of clothing that may be combined to produce the selected look.

[0100] FIG. 12 shows an illustrative example of a user interface product search system displayed on a laptop computer and mobile device, in an embodiment. In various embodiments, the SDK may be applied to retailer websites, social media websites, and browser extensions. Platforms that implement the SDK may be accessed from mobile devices or desktop devices.

[0101] FIG. 13 shows an illustrative example of executable instructions that install a product search user interface on a website, in an embodiment. In one example, the SDK is installed by adding the lines of code shown to a webpage on a merchant website. The SDK may be served from a variety of locations including the merchant's website itself or from a third party. The SDK may be served from various websites including third-party Web platforms.

[0102] The website owner can customize the design completely using cascading style sheets ("CSS") within their own website code.

[0103] FIG. 14 shows an illustrative example of a user interface for identifying similar products using a pop-up dialog, in an embodiment. In an example shown in FIG. 14, an icon in the left-hand panel is clicked to bring up the pop-up dialog showing the product and similar products. Clicking on the icon generates a call to the application programming interface, and the identity of the product is communicated to an online service. In some embodiments, the identity of the product is communicated in the form of an image. In other embodiments, the identity of the product is communicated in the form of a product identifier, or list of product characteristics. The online service identifies similar products, and information describing the similar products including images of the similar products is returned to the SDK running on the browser. The SDK displays the center dialog showing the product and the similar products. In some embodiments, bounding boxes appear indicating an identified product. By swiping left on the returned products, the SDK presents a sequence of similar products. By scrolling up and down the user can see different categories of similar items. For example, by scrolling up and down the user can see similar tops, or similar shoes. In the example shown in FIG. 14, the bounding boxes have a color that matches the color bar underneath each similar product.
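The three ways of communicating the identity of the product (an image, a product identifier, or a list of product characteristics) can be sketched as a request payload. The function name, the JSON field names, and the choice of base64 encoding for the image are assumptions made for illustration; the disclosure does not specify a wire format.

```python
import base64
import json
from typing import List, Optional


def build_product_query(
    image_bytes: Optional[bytes] = None,
    product_id: Optional[str] = None,
    characteristics: Optional[List[str]] = None,
) -> str:
    """Serialize exactly one form of product identity as a JSON string."""
    supplied = [v is not None for v in (image_bytes, product_id, characteristics)]
    if sum(supplied) != 1:
        raise ValueError("supply exactly one form of product identity")

    if image_bytes is not None:
        # Binary image data is base64-encoded so it can travel inside JSON.
        payload = {
            "type": "image",
            "image": base64.b64encode(image_bytes).decode("ascii"),
        }
    elif product_id is not None:
        payload = {"type": "product_id", "product_id": product_id}
    else:
        payload = {"type": "characteristics", "characteristics": list(characteristics)}
    return json.dumps(payload)
```

For example, `build_product_query(product_id="SKU-1")` produces a payload that a hypothetical online service could use to look up similar products.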

[0104] FIG. 15 shows an illustrative example of a user interface for identifying similar products, in an embodiment. In an embodiment, when the user selects a product, information identifying the product is sent to an online service. The online service processes the image and identifies one or more products, each of which is surrounded by a colored bounding box. The image and information identifying the bounding boxes are returned to the client.

[0105] When the user clicks on a bounding box, other bounding boxes are muted to indicate selection of the bounding box. Products matching the selected product (that is associated with the selected bounding box) are highlighted in the bottom portion of the pop-up dialog.

[0106] In some examples, an arrow pointing to the right appears as indicated in the dialogue on the right half of FIG. 15. By swiping across the product image, the SDK receives information that identifies the product, and the online service identifies looks that are associated with the product. When a user selects a product on the similar products pop-up, the user is led to the product page of the product being clicked.

[0107] FIG. 16 shows an illustrative example of a user interface for identifying a look based on a selected article of clothing, in an embodiment. In one example, the user swipes over a search image or clicks on an arrow at the edge of the image to generate a signal that causes the SDK to provide looks that are associated with the item shown. In some embodiments, the SDK produces looks that are based on celebrity photos. In other embodiments, the SDK produces looks that are based on Instagram pages. In another embodiment, the SDK identifies looks from a stylebook or Instagram feed of a retailer or brand. In some implementations, the system produces a lookbook which is a collection of looks for a particular product.

[0108] When viewing a particular look, arrows at the edges of the look image allow the user to navigate back to the product page (by clicking left or swiping right) or forward to view additional looks (by clicking right or swiping left). In some examples, a thumbnail of the original product photo appears below the look, and clicking on the photo of the product will navigate back to the product page. In some examples, a similar product pop-up displays similar items to those detected in the current photo.

[0109] FIG. 17 shows an illustrative example of a user interface that allows the user to select a look from a plurality of looks, in an embodiment. For example, using the user interface illustrated in FIG. 17, the user is able to swipe on the picture to select between various looks. Clicking the right arrow or swiping left advances to the next look, and clicking the left arrow or swiping right returns to the previous look. In some implementations, the sequence of looks is transmitted to the browser from the online service, and the selection occurs between stored looks within the client software. In other implementations, swiping left or right requests a next look or previous look from the server, and the server provides information on the next or previous look as requested.
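The client-side variant, in which the full sequence of looks is already stored in the client software, can be sketched as a small navigator. The class name and gesture names are hypothetical, and clamping at either end of the sequence is an assumption; an implementation could instead wrap around or return to the product page.

```python
from typing import List


class LookNavigator:
    """Steps through a locally stored sequence of looks."""

    # Gesture-to-direction mapping as described for FIG. 17.
    NEXT_GESTURES = {"click_right", "swipe_left"}
    PREVIOUS_GESTURES = {"click_left", "swipe_right"}

    def __init__(self, looks: List[str]):
        if not looks:
            raise ValueError("at least one look is required")
        self.looks = list(looks)
        self.index = 0

    def handle(self, gesture: str) -> str:
        """Apply a gesture and return the look that should be displayed."""
        if gesture in self.NEXT_GESTURES:
            self.index = min(self.index + 1, len(self.looks) - 1)
        elif gesture in self.PREVIOUS_GESTURES:
            self.index = max(self.index - 1, 0)
        # Unrecognized gestures leave the current look unchanged.
        return self.looks[self.index]
```

In the server-driven variant, `handle` would instead issue a request for the next or previous look and display the response.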

[0110] In various implementations, the user interface provides a way for the user to view products associated with the current look. In the example shown in FIG. 17, the user scrolls up to see similar products that are detected and matched from the current look image.

[0111] In an embodiment, a thumbnail of the product used to identify the look is shown in the upper left corner of the look image. By selecting the thumbnail, the user is returned to the product screen for the product.

[0112] FIG. 18 shows an illustrative example of a user interface that allows the user to select a particular article of clothing from within a look, in an embodiment. In one example, the user is able to select individual products from the look photo. Individual products of the look photo are highlighted by a bounding box. By selecting a bounding box, information identifying a product is sent to the online service and the online service identifies a set of looks associated with the product.

[0113] Upon selecting the product's bounding box, the thumbnail associated with the previous product is removed, and an arrow pointing to the right appears. By clicking the arrow or swiping, information identifying the product is sent to the online service, and the online service returns a set of looks for the selected product (a lookbook). In this way, style recommendations can be acquired for any particular product present in a look.

[0114] FIG. 19 shows an illustrative example of a desktop user interface for navigating looks and related articles of clothing, in an embodiment. In the example shown in FIG. 19, a browser window displays a user interface for a particular look. An image of the look is shown on the left part of the page, and bounding boxes are placed around each product identified in the image. By selecting a particular bounding box, the user can be shown a set of similar products on the right side of the page.

[0115] In various examples, the application dialogs and the pop-up windows resize responsively to the browser window. The searched image is displayed on the left and the results on the right. The user can use the mouse to scroll up and down to explore the results.

[0116] The user can click a bounding box to start viewing a lookbook for that item.

[0117] FIG. 20 shows an illustrative example of a user interface for navigating looks implemented on a mobile device, in an embodiment. FIG. 20 illustrates a mobile device implementing the system. The mobile device may be a cellular phone, tablet computer, handheld device, or other mobile device. In one embodiment, the mobile device includes a camera. The user is able to take a picture with the camera, and the resulting image is displayed on the screen of the mobile device. An icon appears in the lower right corner of the image indicating that the image may be used to identify a product or look. By clicking on the icon, the image is uploaded to an online service that identifies one or more products in the image. The service identifies the particular products and characteristics of the products in the image. In an embodiment, the online service returns information to the mobile device that allows the application to create bounding boxes around each product in the image.

[0118] Once bounding boxes are added to the image, the user may select a bounding box to request additional information. In one embodiment, the selection information is returned to the online service, and the online service provides information that identifies the product and optionally similar products. Images of the product and similar products are transferred from the online service to the mobile device, where they are displayed to the user on the display screen. The user can either view a plurality of similar products, or select a particular product and explore additional looks that use that particular product.

[0119] In some examples, the user may start from an image on a retailer's website, from a social media site, or a photo sharing site or service.

[0120] FIG. 21 shows an illustrative example of a user interface for navigating looks implemented on a web browser, in an embodiment. In an embodiment, the SDK runs on a personal computer system running a browser. The embodiment shown in FIG. 21 may be implemented using a personal computer, a laptop computer, or tablet computer running a browser.

[0121] FIG. 22 shows an illustrative example of a generic object detector and a hierarchical detector, in an embodiment. The hierarchical detector predicts a tree of categories as output compared to the generic detector that outputs a single category for each bounding box. In an embodiment, clothing product detection from images and videos paves the way for visual fashion understanding. Clothing detection allows for retrieving similar clothing items, organizing fashion photos, artificial intelligence powered shopping assistants and automatic labeling of large catalogues. Training a deep learning based clothing detector requires predefined categories (dress, pants, etc.) and a high volume of annotated image data for each category. However, fashion evolves and new categories are constantly introduced in the marketplace. For example, consider the case of jeggings, which are a combination of jeans and leggings. To retrain a network to handle the jegging category may involve adding annotated data specific to the jegging class and subsequently relearning the weights for the deep network. In this paper, we propose a novel method that can handle novel category detection without the need of obtaining new labeled data or retraining the network. Our approach learns the visual similarities between various clothing categories and predicts a tree for categories. The resulting framework significantly improves the generalization capabilities of the detector to the novel clothing products.
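The difference between the two kinds of output can be illustrated with a toy example. The sketch below is not the disclosed detector: the category tree, the score threshold, and the function names are all hypothetical, and the per-category scores are assumed to come from some upstream model for a single bounding box. It only shows how a single-label output differs from a tree-shaped output for an item such as jeggings, which resembles both jeans and leggings.

```python
from typing import Dict, List, Optional

# Hypothetical category tree, stored as child -> parent (None marks the root).
PARENT: Dict[str, Optional[str]] = {
    "clothing": None,
    "bottoms": "clothing",
    "tops": "clothing",
    "jeans": "bottoms",
    "leggings": "bottoms",
    "shirt": "tops",
}


def generic_label(scores: Dict[str, float]) -> str:
    """Generic detector output: the single highest-scoring category."""
    return max(scores, key=scores.get)


def path_to_root(category: str) -> List[str]:
    """Return the root-to-category path through the tree."""
    path = []
    node: Optional[str] = category
    while node is not None:
        path.append(node)
        node = PARENT[node]
    return list(reversed(path))


def hierarchical_labels(
    scores: Dict[str, float], threshold: float = 0.3
) -> List[List[str]]:
    """Tree-shaped output: one root-to-leaf path per sufficiently likely category."""
    kept = sorted(
        (c for c, s in scores.items() if s >= threshold),
        key=lambda c: -scores[c],
    )
    return [path_to_root(c) for c in kept]
```

With scores such as `{"jeans": 0.45, "leggings": 0.40, "shirt": 0.15}`, the generic output names only one category, while the tree-shaped output keeps both the jeans and leggings branches under their shared parent, which is the kind of information that could describe an unseen category without retraining.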