Information Processing Method, System And Device Based On Contextual Signals And Prefrontal Cortex-like Network

ZENG; Guanxiong ; et al.

U.S. patent application number 16/971691 was filed with the patent office on 2021-02-25 for information processing method, system and device based on contextual signals and prefrontal cortex-like network. This patent application is currently assigned to INSTITUTE OF AUTOMATION, CHINESE ACADEMY OF SCIENCES. The applicant listed for this patent is INSTITUTE OF AUTOMATION, CHINESE ACADEMY OF SCIENCES. Invention is credited to Yang CHEN, Shan YU, Guanxiong ZENG.

| Field | Value |
|---|---|
| United States Patent Application | 20210056415 |
| Application Number | 16/971691 |
| Kind Code | A1 |
| Family ID | 1000005381769 |
| Filed Date | 2021-02-25 |
| ZENG; Guanxiong ; et al. | February 25, 2021 |

INFORMATION PROCESSING METHOD, SYSTEM AND DEVICE BASED ON CONTEXTUAL SIGNALS AND PREFRONTAL CORTEX-LIKE NETWORK

Abstract

An information processing method based on contextual signals and a prefrontal cortex-like network includes: selecting a feature vector extractor based on obtained information to perform feature extraction to obtain an information feature vector; inputting the information feature vector into the prefrontal cortex-like network, and performing dimensional matching between the information feature vector and each contextual signal in an input contextual signal set to obtain contextual feature vectors to constitute a contextual feature vector set; and classifying each feature vector in the contextual feature vector set by a feature vector classifier to obtain classification information of the each feature vector to constitute a classification information set. An information processing system based on contextual signals and a prefrontal cortex-like network includes an acquisition module, a feature extraction module, a dimensional matching module, a classification module and an output module.

| Field | Value |
|---|---|
| Inventors: | ZENG; Guanxiong (Beijing, CN); CHEN; Yang (Beijing, CN); YU; Shan (Beijing, CN) |
| Applicant: | INSTITUTE OF AUTOMATION, CHINESE ACADEMY OF SCIENCES |
| Assignee: | INSTITUTE OF AUTOMATION, CHINESE ACADEMY OF SCIENCES (Beijing, CN) |
| Family ID: | 1000005381769 |
| Appl. No.: | 16/971691 |
| Filed: | April 19, 2019 |
| PCT Filed: | April 19, 2019 |
| PCT NO: | PCT/CN2019/083356 |
| 371 Date: | August 21, 2020 |
| Current U.S. Class: | 1/1 |
| Current CPC Class: | G06K 9/6228 20130101; G06K 9/6232 20130101; G06K 9/6201 20130101; G06N 3/08 20130101; G06K 9/6268 20130101 |
| International Class: | G06N 3/08 20060101 G06N003/08; G06K 9/62 20060101 G06K009/62 |

Foreign Application Data

| Date | Code | Application Number |

|---|---|---|

| Jan 22, 2019 | CN | 201910058284.2 |

Claims

1. An information processing method based on contextual signals and a prefrontal cortex-like network, comprising: step S10: selecting a feature vector extractor based on obtained information to perform feature extraction to obtain an information feature vector; step S20: inputting the information feature vector into the prefrontal cortex-like network, and performing dimensional matching between the information feature vector and each contextual signal in an input contextual signal set to obtain contextual feature vectors, and constituting a contextual feature vector set according to the contextual feature vectors; and step S30: classifying each contextual feature vector of the contextual feature vectors in the contextual feature vector set by a feature vector classifier to obtain classification information of the each contextual feature vector, and constituting a classification information set according to the classification information of the each contextual feature vector, wherein the feature vector classifier is a mapping network of the contextual feature vectors and the classification information.

2. The information processing method based on the contextual signals and the prefrontal cortex-like network of claim 1, wherein, the step of selecting the feature vector extractor in step S10 comprises: based on a preset feature vector extractor library, selecting the feature vector extractor corresponding to a category of the obtained information.

3. The information processing method based on the contextual signals and the prefrontal cortex-like network of claim 2, wherein, a method for constructing the feature vector extractor comprises: based on a training data set, iteratively updating a weight of a parameter in a feature vector extraction network by an Adam algorithm to obtain a trained feature vector extraction network, wherein the feature vector extraction network is constructed based on a deep neural network; and using a network obtained after removing a last classification layer of the trained feature vector extraction network as the feature vector extractor.

4. The information processing method based on the contextual signals and the prefrontal cortex-like network of claim 1, wherein, the step of inputting the information feature vector into the prefrontal cortex-like network and performing the dimensional matching between the information feature vector and the each contextual signal in the input contextual signal set to obtain the contextual feature vectors in step S20 comprises: step S201: constructing a weight matrix based on the contextual signals and the prefrontal cortex-like network, and normalizing each column of the weight matrix; $W^{in}=[w_1^{in},w_2^{in},\ldots,w_m^{in}]\in\mathbb{R}^{k\times m}$, $\|w_i^{in}\|=1$, $i=1,2,\ldots,m$, where, $W^{in}$ denotes the weight matrix, $\|w_i^{in}\|$ denotes a normalized module of $w_i^{in}$, $i$ denotes a dimension index of a hidden layer, $k$ denotes a dimension of an input feature, and $m$ denotes a dimension of the hidden layer; step S202: based on the weight matrix, performing the dimensional matching between the each contextual signal and the information feature vector to obtain the contextual feature vectors; $Y^{out}=g([c_1\cos\theta_1,c_2\cos\theta_2,\ldots,c_m\cos\theta_m]^T)\|F\|$, where, $Y^{out}$ denotes the contextual feature vectors, and $Y^{out}=[y_1,y_2,\ldots,y_m]^T\in\mathbb{R}^m$; $F$ denotes the information feature vector, and $F=[f_1,f_2,\ldots,f_k]^T\in\mathbb{R}^k$; $C$ denotes the each contextual signal, and $C=[c_1,c_2,\ldots,c_m]^T\in\mathbb{R}^m$; $\theta_m$ denotes an angle between $w_m^{in}$ and $F$, and $g(x)=\max(0,x)$; $W^{in}$ denotes the weight matrix, and $W^{in}=[w_1^{in},w_2^{in},\ldots,w_m^{in}]\in\mathbb{R}^{k\times m}$; and step S203: constructing the contextual feature vector set by the contextual feature vectors obtained after performing the dimensional matching between each contextual signal in the contextual signal set and the information feature vector.

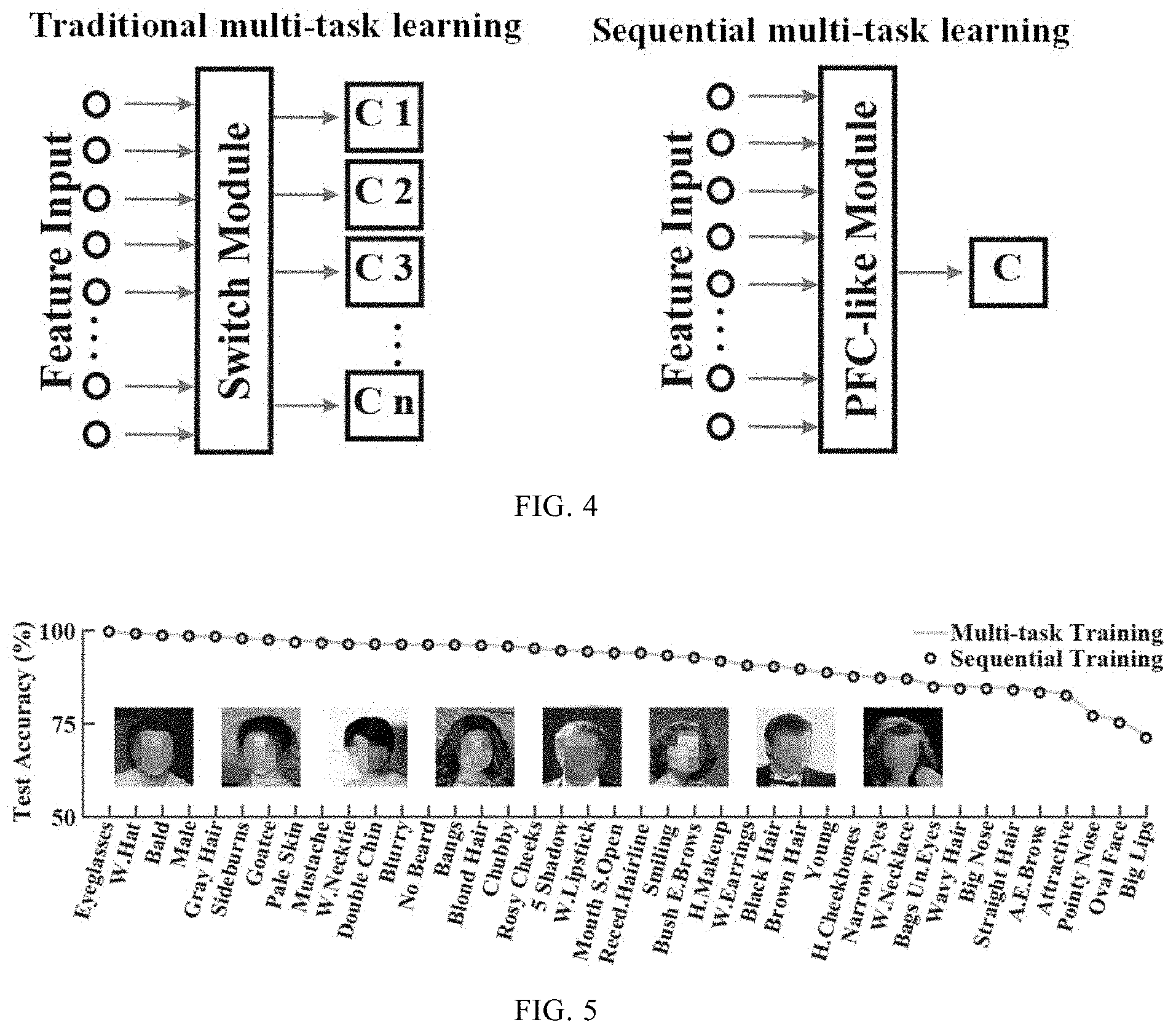

5. The information processing method based on the contextual signals and the prefrontal cortex-like network of claim 1, wherein, the feature vector classifier in step S30 is constructed based on the following equations: $Y^{Lable}=(W^{out})^T Y^{out}=\|W^{out}\|\,\|F\|\cos\phi$, $\phi=\arccos\left(\frac{\sum_{i=1}^{m} w_i^{out} c_i\, g(\cos\theta_i)}{\|W^{out}\|}\right)$, where, $Y^{Lable}$ denotes the classification information, $W^{out}$ denotes a classification weight of the classifier, $Y^{out}$ denotes an output feature of the prefrontal cortex-like network, and $F$ denotes the information feature vector.

6. The information processing method based on the contextual signals and the prefrontal cortex-like network of claim 3, wherein, configuration parameters of the Adam algorithm comprise: a learning rate of 0.1, a weight decay rate of 0.0001, and a batch size of 256.

7. The information processing method based on the contextual signals and the prefrontal cortex-like network of claim 3, wherein, the each contextual signal is a multi-dimensional word vector corresponding to a classification attribute, and a dimension of the multi-dimensional word vector is 200.

8. The information processing method based on the contextual signals and the prefrontal cortex-like network of claim 4, wherein, the weight matrix W.sup.in is: a matrix constructed based on a dimension of a word vector and a dimension of the prefrontal cortex-like network, wherein the matrix has a size of multiplying the dimension of the word vector by the dimension of the prefrontal cortex-like network.

9. An information processing system based on contextual signals and a prefrontal cortex-like network, comprising an acquisition module, a feature extraction module, a dimensional matching module, a classification module and an output module; wherein, the acquisition module is configured to obtain input information and an input contextual signal set, and to input the input information and the input contextual signal set; the feature extraction module is configured to extract features of the input information by using a feature vector extractor corresponding to the input information to obtain an information feature vector; the dimensional matching module is configured to input the information feature vector into the prefrontal cortex-like network, perform dimensional matching between the information feature vector and each contextual signal in the input contextual signal set to obtain contextual feature vectors, and constitute a contextual feature vector set according to the contextual feature vectors; the classification module is configured to classify each contextual feature vector of the contextual feature vectors in the contextual feature vector set by a pre-constructed feature vector classifier to obtain classification information of the each contextual feature vector, and to constitute a classification information set according to the classification information of the each contextual feature vector; and the output module is configured to output the classification information set.

10. A storage device, wherein a plurality of programs are stored in the storage device, and the plurality of programs are configured to be loaded and executed by a processor to implement the information processing method based on the contextual signals and the prefrontal cortex-like network of claim 1.

11. A processing device, comprising: a processor, and a storage device, wherein the processor is configured to execute a plurality of programs, and the storage device is configured to store the plurality of programs; the plurality of programs are configured to be loaded and executed by the processor to implement the information processing method based on the contextual signals and the prefrontal cortex-like network of claim 1.

12. The storage device of claim 10, wherein, the step of selecting the feature vector extractor in step S10 comprises: based on a preset feature vector extractor library, selecting the feature vector extractor corresponding to a category of the obtained information.

13. The storage device of claim 12, wherein, a method for constructing the feature vector extractor comprises: based on a training data set, iteratively updating a weight of a parameter in a feature vector extraction network by an Adam algorithm to obtain a trained feature vector extraction network, wherein the feature vector extraction network is constructed based on a deep neural network; and using a network obtained after removing a last classification layer of the trained feature vector extraction network as the feature vector extractor.

14. The storage device of claim 10, wherein, the step of inputting the information feature vector into the prefrontal cortex-like network and performing the dimensional matching between the information feature vector and the each contextual signal in the input contextual signal set to obtain the contextual feature vectors in step S20 comprises: step S201: constructing a weight matrix based on the contextual signals and the prefrontal cortex-like network, and normalizing each column of the weight matrix; $W^{in}=[w_1^{in},w_2^{in},\ldots,w_m^{in}]\in\mathbb{R}^{k\times m}$, $\|w_i^{in}\|=1$, $i=1,2,\ldots,m$, where, $W^{in}$ denotes the weight matrix, $\|w_i^{in}\|$ denotes a normalized module of $w_i^{in}$, $i$ denotes a dimension index of a hidden layer, $k$ denotes a dimension of an input feature, and $m$ denotes a dimension of the hidden layer; step S202: based on the weight matrix, performing the dimensional matching between the each contextual signal and the information feature vector to obtain the contextual feature vectors; $Y^{out}=g([c_1\cos\theta_1,c_2\cos\theta_2,\ldots,c_m\cos\theta_m]^T)\|F\|$, where, $Y^{out}$ denotes the contextual feature vectors, and $Y^{out}=[y_1,y_2,\ldots,y_m]^T\in\mathbb{R}^m$; $F$ denotes the information feature vector, and $F=[f_1,f_2,\ldots,f_k]^T\in\mathbb{R}^k$; $C$ denotes the each contextual signal, and $C=[c_1,c_2,\ldots,c_m]^T\in\mathbb{R}^m$; $\theta_m$ denotes an angle between $w_m^{in}$ and $F$, and $g(x)=\max(0,x)$; $W^{in}$ denotes the weight matrix, and $W^{in}=[w_1^{in},w_2^{in},\ldots,w_m^{in}]\in\mathbb{R}^{k\times m}$; and step S203: constructing the contextual feature vector set by the contextual feature vectors obtained after performing the dimensional matching between each contextual signal in the contextual signal set and the information feature vector.

15. The storage device of claim 10, wherein, the feature vector classifier in step S30 is constructed based on the following equations: $Y^{Lable}=(W^{out})^T Y^{out}=\|W^{out}\|\,\|F\|\cos\phi$, $\phi=\arccos\left(\frac{\sum_{i=1}^{m} w_i^{out} c_i\, g(\cos\theta_i)}{\|W^{out}\|}\right)$, where, $Y^{Lable}$ denotes the classification information, $W^{out}$ denotes a classification weight of the classifier, $Y^{out}$ denotes an output feature of the prefrontal cortex-like network, and $F$ denotes the information feature vector.

16. The storage device of claim 13, wherein, configuration parameters of the Adam algorithm comprise: a learning rate of 0.1, a weight decay rate of 0.0001, and a batch size of 256.

17. The storage device of claim 13, wherein, the each contextual signal is a multi-dimensional word vector corresponding to a classification attribute, and a dimension of the multi-dimensional word vector is 200.

18. The storage device of claim 14, wherein, the weight matrix W.sup.in is: a matrix constructed based on a dimension of a word vector and a dimension of the prefrontal cortex-like network, wherein the matrix has a size of multiplying the dimension of the word vector by the dimension of the prefrontal cortex-like network.

19. The processing device of claim 11, wherein, the step of selecting the feature vector extractor in step S10 comprises: based on a preset feature vector extractor library, selecting the feature vector extractor corresponding to a category of the obtained information.

20. The processing device of claim 19, wherein, a method for constructing the feature vector extractor comprises: based on a training data set, iteratively updating a weight of a parameter in a feature vector extraction network by an Adam algorithm to obtain a trained feature vector extraction network, wherein the feature vector extraction network is constructed based on a deep neural network; and using a network obtained after removing a last classification layer of the trained feature vector extraction network as the feature vector extractor.

Description

CROSS REFERENCE TO THE RELATED APPLICATIONS

[0001] This application is the national phase entry of International Application No. PCT/CN2019/083356, filed on Apr. 19, 2019, which is based upon and claims priority to Chinese Patent Application No. 201910058284.2, filed on Jan. 22, 2019, the entire contents of which are incorporated herein by reference.

TECHNICAL FIELD

[0002] The present invention belongs to the field of pattern recognition and machine learning, and more particularly, relates to an information processing method, system and device based on contextual signals and a prefrontal cortex-like network.

BACKGROUND

[0003] One of the hallmarks of advanced intelligence is flexibility. Humans can respond differently to the same stimulus according to different goals, environments, internal states and other situations. The prefrontal cortex, which is highly elaborated in primates, is pivotal for such an ability. The prefrontal cortex can quickly learn the "rules of the game" and dynamically apply them to map sensory inputs to different actions in a context-dependent manner. This process, named cognitive control, allows primates to behave appropriately in an unlimited number of situations.

[0004] Current artificial neural networks are powerful in extracting advanced features from the original data to perform pattern classification and learning sophisticated mapping rules. Their responses, however, are largely dictated by network inputs, exhibiting stereotyped input-output mappings. These mappings are usually fixed once the network training is completed.

[0005] Therefore, the current artificial neural networks lack enough flexibility to work in complex situations in which the mapping rules may change according to the context and these rules need to be learned "on the go" from a small number of training samples. This constitutes a significant ability gap between artificial neural networks and human brains.

SUMMARY

[0006] In order to solve the above-mentioned problems in the prior art, that is, to solve the problem that the system has a complex structure, poor flexibility, and requires massive training samples when performing complex multi-tasking, inspired by the function of the prefrontal cortex, the present invention provides an information processing method based on contextual signals and a prefrontal cortex-like network, including:

[0007] step S10: selecting a feature vector extractor based on obtained information to perform feature extraction to obtain an information feature vector;

[0008] step S20: inputting the information feature vector into the prefrontal cortex-like network, and performing dimensional matching between the information feature vector and each contextual signal in an input contextual signal set to obtain contextual feature vectors to constitute a contextual feature vector set; and step S30: classifying each feature vector in the contextual feature vector set by a feature vector classifier to obtain classification information of the each feature vector to constitute a classification information set, wherein the feature vector classifier is a mapping network of the contextual feature vectors and the classification information.

[0009] In some preferred embodiments, the step of "selecting the feature vector extractor" in step S10 includes:

[0010] based on a preset feature vector extractor library, selecting the feature vector extractor corresponding to a category of the obtained information.

[0011] In some preferred embodiments, a method for constructing the feature vector extractor includes:

[0012] based on a training data set, iteratively updating a weight of a parameter in a feature vector extraction network by an Adam algorithm, wherein the feature vector extraction network is constructed based on a deep neural network; and

[0013] using a network obtained after removing the last classification layer of the trained feature vector extraction network as the feature vector extractor.

[0014] In some preferred embodiments, the step of "inputting the information feature vector into the prefrontal cortex-like network and performing the dimensional matching between the information feature vector and the each contextual signal in the input contextual signal set to obtain the contextual feature vectors" in step S20 includes:

[0015] step S201: constructing a weight matrix based on the contextual signal and the prefrontal cortex-like network, and normalizing each column of the weight matrix;

$$W^{in}=[w_1^{in},w_2^{in},\ldots,w_m^{in}]\in\mathbb{R}^{k\times m},$$

$$\|w_i^{in}\|=1,\quad i=1,2,\ldots,m,$$

[0016] where, $W^{in}$ denotes the weight matrix, $\|w_i^{in}\|$ denotes a normalized module of $w_i^{in}$, $i$ denotes a dimension index of a hidden layer, $k$ denotes a dimension of the input feature, and $m$ denotes a dimension of the hidden layer;

[0017] step S202: based on the weight matrix, performing the dimensional matching between the contextual signal and the information feature vector to obtain the contextual feature vectors;

$$Y^{out}=g([c_1\cos\theta_1,c_2\cos\theta_2,\ldots,c_m\cos\theta_m]^T)\,\|F\|,$$

[0018] where, $Y^{out}$ denotes the contextual feature vectors, and $Y^{out}=[y_1,y_2,\ldots,y_m]^T\in\mathbb{R}^m$; $F$ denotes the information feature vector, and $F=[f_1,f_2,\ldots,f_k]^T\in\mathbb{R}^k$; $C$ denotes the contextual signal, and $C=[c_1,c_2,\ldots,c_m]^T\in\mathbb{R}^m$; $\odot$ denotes element-wise multiplication of vectors; $\theta_m$ denotes an angle between $w_m^{in}$ and $F$, and $g(x)=\max(0,x)$; $W^{in}$ denotes the weight matrix, and $W^{in}=[w_1^{in},w_2^{in},\ldots,w_m^{in}]\in\mathbb{R}^{k\times m}$; and

[0019] step S203: constructing the contextual feature vector set by the contextual feature vectors obtained after performing the dimensional matching between each contextual signal in the contextual signal set and the information feature vector.
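Steps S201 to S203 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the applicants' implementation: the random initialization of `W_in`, the toy dimensions, and the function names `normalize_columns` and `contextual_features` are all hypothetical. The sketch relies on the fact that, once every column of the weight matrix has unit norm, the inner product of column $w_i^{in}$ with $F$ equals $\|F\|\cos\theta_i$.

```python
import numpy as np

def normalize_columns(W):
    """Step S201: scale every column of W to unit Euclidean norm."""
    return W / np.linalg.norm(W, axis=0, keepdims=True)

def contextual_features(W_in, F, C):
    """Step S202: Y_out = g([c_i * cos(theta_i)]) * ||F||, with g(x) = max(0, x)."""
    norm_F = np.linalg.norm(F)
    cos_theta = (W_in.T @ F) / norm_F        # valid because columns have unit norm
    return np.maximum(0.0, C * cos_theta) * norm_F

rng = np.random.default_rng(0)
k, m = 8, 5                                   # toy sizes (assumed)
W_in = normalize_columns(rng.normal(size=(k, m)))
F = rng.normal(size=k)                        # stand-in information feature vector
context_set = [rng.normal(size=m) for _ in range(3)]   # hypothetical contextual signals

# Step S203: one contextual feature vector per contextual signal
Y_set = [contextual_features(W_in, F, C) for C in context_set]
```

Because the rectifier is positively homogeneous, the same result can be computed as `np.maximum(0.0, C * (W_in.T @ F))`, which avoids dividing by the norm of `F`.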

[0020] In some preferred embodiments, the feature vector classifier in step S30 is constructed based on the following equations:

$$Y^{Lable}=(W^{out})^T Y^{out}=\|W^{out}\|\,\|F\|\cos\phi,\qquad \phi=\arccos\left(\frac{\sum_{j=1}^{n} w_j^{out} c_j\, g(\cos\theta_j)}{\|W^{out}\|}\right),$$

[0021] where $Y^{Lable}$ denotes the classification information, $W^{out}$ denotes a classification weight of the classifier, $Y^{out}$ denotes an output feature of the prefrontal cortex-like network, $n$ denotes a dimension of an output weight of the prefrontal cortex-like network, and $F$ denotes the information feature vector.
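The two forms of the classifier output can be compared numerically. The sketch below is illustrative only: the dimensions, the random weights, and the choice of a single output unit are assumptions. It also assumes non-negative context entries, in which case $g(c_i\cos\theta_i)=c_i\,g(\cos\theta_i)$ and the linear readout matches the angular form. The sketch evaluates $\cos\phi$ directly rather than calling `arccos`.

```python
import numpy as np

rng = np.random.default_rng(1)
k, m = 6, 4                                   # toy sizes (assumed)
W_in = rng.normal(size=(k, m))
W_in /= np.linalg.norm(W_in, axis=0, keepdims=True)    # unit-norm columns
F = rng.normal(size=k)                        # stand-in information feature vector
C = rng.uniform(0.0, 1.0, size=m)             # non-negative contextual signal (assumed)
W_out = rng.normal(size=m)                    # weights of one output unit (assumed)

norm_F = np.linalg.norm(F)
cos_theta = (W_in.T @ F) / norm_F
Y_out = np.maximum(0.0, C * cos_theta) * norm_F

# Left-hand side: plain linear readout (W_out)^T Y_out
y_direct = W_out @ Y_out

# Right-hand side: ||W_out|| * ||F|| * cos(phi)
cos_phi = np.sum(W_out * C * np.maximum(0.0, cos_theta)) / np.linalg.norm(W_out)
y_angle = np.linalg.norm(W_out) * norm_F * cos_phi
```

Under these assumptions `y_direct` and `y_angle` agree up to floating-point error.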

[0022] In some preferred embodiments, configuration parameters of the Adam algorithm include:

[0023] a learning rate of 0.1, a weight decay rate of 0.0001, and a batch size of 256.
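For orientation, one Adam update with these settings might look like the NumPy sketch below. The learning rate, weight decay rate and batch size come from the text; the moment coefficients and epsilon are the commonly used Adam defaults and are assumptions here, as is the choice to apply weight decay as an L2 term added to the gradient. The least-squares problem is a toy stand-in for training the feature vector extraction network.

```python
import numpy as np

LEARNING_RATE = 0.1      # from the text
WEIGHT_DECAY = 0.0001    # from the text
BATCH_SIZE = 256         # from the text
BETA1, BETA2, EPS = 0.9, 0.999, 1e-8   # common Adam defaults (assumed)

def adam_step(w, grad, m, v, t):
    """One Adam update; weight decay is folded into the gradient as an L2 term (assumed)."""
    grad = grad + WEIGHT_DECAY * w
    m = BETA1 * m + (1 - BETA1) * grad            # first-moment estimate
    v = BETA2 * v + (1 - BETA2) * grad ** 2       # second-moment estimate
    m_hat = m / (1 - BETA1 ** t)                  # bias correction
    v_hat = v / (1 - BETA2 ** t)
    w = w - LEARNING_RATE * m_hat / (np.sqrt(v_hat) + EPS)
    return w, m, v

# Toy problem: fit y = X @ w_true using mini-batches of 256 samples
rng = np.random.default_rng(0)
X = rng.normal(size=(2048, 3))
w_true = np.array([1.0, -2.0, 0.5])
y = X @ w_true

w, m, v = np.zeros(3), np.zeros(3), np.zeros(3)
initial_loss = np.mean((X @ w - y) ** 2)
for t in range(1, 201):
    idx = rng.choice(len(X), size=BATCH_SIZE, replace=False)
    grad = 2 * X[idx].T @ (X[idx] @ w - y[idx]) / BATCH_SIZE
    w, m, v = adam_step(w, grad, m, v, t)
final_loss = np.mean((X @ w - y) ** 2)
```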

[0024] In some preferred embodiments, the contextual signal is a multi-dimensional word vector corresponding to a classification attribute, and a dimension of the word vector is 200.

[0025] In some preferred embodiments, the weight matrix W.sup.in is:

[0026] a matrix constructed based on a dimension of a word vector and a dimension of the prefrontal cortex-like network, wherein the matrix has a size of (the dimension of the word vector) × (the dimension of the prefrontal cortex-like network).

[0027] According to the second aspect of the present invention, an information processing system based on contextual signals and a prefrontal cortex-like network includes an acquisition module, a feature extraction module, a dimensional matching module, a classification module and an output module.

[0028] The acquisition module is configured to obtain input information and an input contextual signal set, and to input the input information and the input contextual signal set.

[0029] The feature extraction module is configured to extract features of the input information by using a feature vector extractor corresponding to the input information to obtain an information feature vector.

[0030] The dimensional matching module is configured to input the information feature vector into the prefrontal cortex-like network, and perform dimensional matching between the information feature vector and each contextual signal in the input contextual signal set to obtain contextual feature vectors to constitute a contextual feature vector set.

[0031] The classification module is configured to classify each feature vector in the contextual feature vector set by a pre-constructed feature vector classifier to obtain classification information of the each feature vector to constitute a classification information set.

[0032] The output module is configured to output the acquired classification information set.
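The way the five modules hand data to one another can be sketched as follows. This is a hypothetical wiring for illustration only: the class name `ContextualProcessingSystem`, the stand-in callables, the toy dimensions and the thresholded binary label are all assumptions and are not taken from the application.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence
import numpy as np

@dataclass
class ContextualProcessingSystem:
    extract: Callable[[np.ndarray], np.ndarray]              # feature extraction module
    match: Callable[[np.ndarray, np.ndarray], np.ndarray]    # dimensional matching module
    classify: Callable[[np.ndarray], int]                    # classification module

    def run(self, info: np.ndarray, context_set: Sequence[np.ndarray]) -> List[int]:
        # Acquisition module: `info` and `context_set` enter the system here.
        feature = self.extract(info)
        contextual_set = [self.match(feature, c) for c in context_set]
        # Output module: the classification information set is returned.
        return [self.classify(y) for y in contextual_set]

rng = np.random.default_rng(0)
k, m = 6, 4                                   # toy sizes (assumed)
W_in = rng.normal(size=(k, m))
W_in /= np.linalg.norm(W_in, axis=0, keepdims=True)
W_out = rng.normal(size=m)

system = ContextualProcessingSystem(
    extract=lambda x: np.asarray(x, dtype=float).ravel(),    # placeholder extractor
    match=lambda f, c: np.maximum(0.0, c * (W_in.T @ f)),    # rectified, context-gated projection
    classify=lambda y: int(W_out @ y > 0),                   # hypothetical binary decision
)
labels = system.run(rng.normal(size=k), [rng.normal(size=m) for _ in range(3)])
```

One label is produced per contextual signal, so the same input can receive different classifications under different contexts.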

[0033] According to the third aspect of the present invention, a storage device is provided, wherein a plurality of programs are stored in the storage device, and the programs are configured to be loaded and executed by a processor to implement the information processing method based on the contextual signals and the prefrontal cortex-like network described above.

[0034] According to the fourth aspect of the present invention, a processing device includes a processor and a storage device. The processor is configured to execute a plurality of programs. The storage device is configured to store the plurality of programs. The programs are configured to be loaded and executed by the processor to implement the information processing method based on the contextual signals and the prefrontal cortex-like network described above.

[0035] The present invention has the following advantages.

[0036] (1) The multi-task information processing method of the present invention based on the contextual signals uses a prefrontal cortex-like module to realize multi-task learning oriented to the scene information. When the contextual information cannot be determined in advance, the mapping that depends on the contextual information can be learned gradually. The data processed by the method of the present invention can be applied to multi-task learning or to sequential multi-task learning, which has higher requirements, whereby the network structure can be simplified, thus reducing the difficulty of multi-task learning and increasing the flexibility of the system.

[0037] (2) In the present invention, the deep neural network is used as the feature extractor, and the optimization method is designed on the linear layer. In this way, the deep neural network exerts its full role, and the design difficulty is reduced.

[0038] (3) In the method of the present invention, the contextual signals are designed and can be changed according to the change of the current working environment, which solves the disadvantage that the neural network cannot respond differently to the same stimulus according to different goals, environments, internal states and other situations.

BRIEF DESCRIPTION OF THE DRAWINGS

[0039] By reading the detailed description of the non-restrictive embodiment with reference to the following drawings, other features, objectives and advantages of the present invention will become better understood.

[0040] FIG. 1 is a schematic flowchart of the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention;

[0041] FIG. 2 is a schematic diagram showing the network structure of the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention;

[0042] FIG. 3 is a schematic diagram showing the three-dimensional space of an embodiment of the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention;

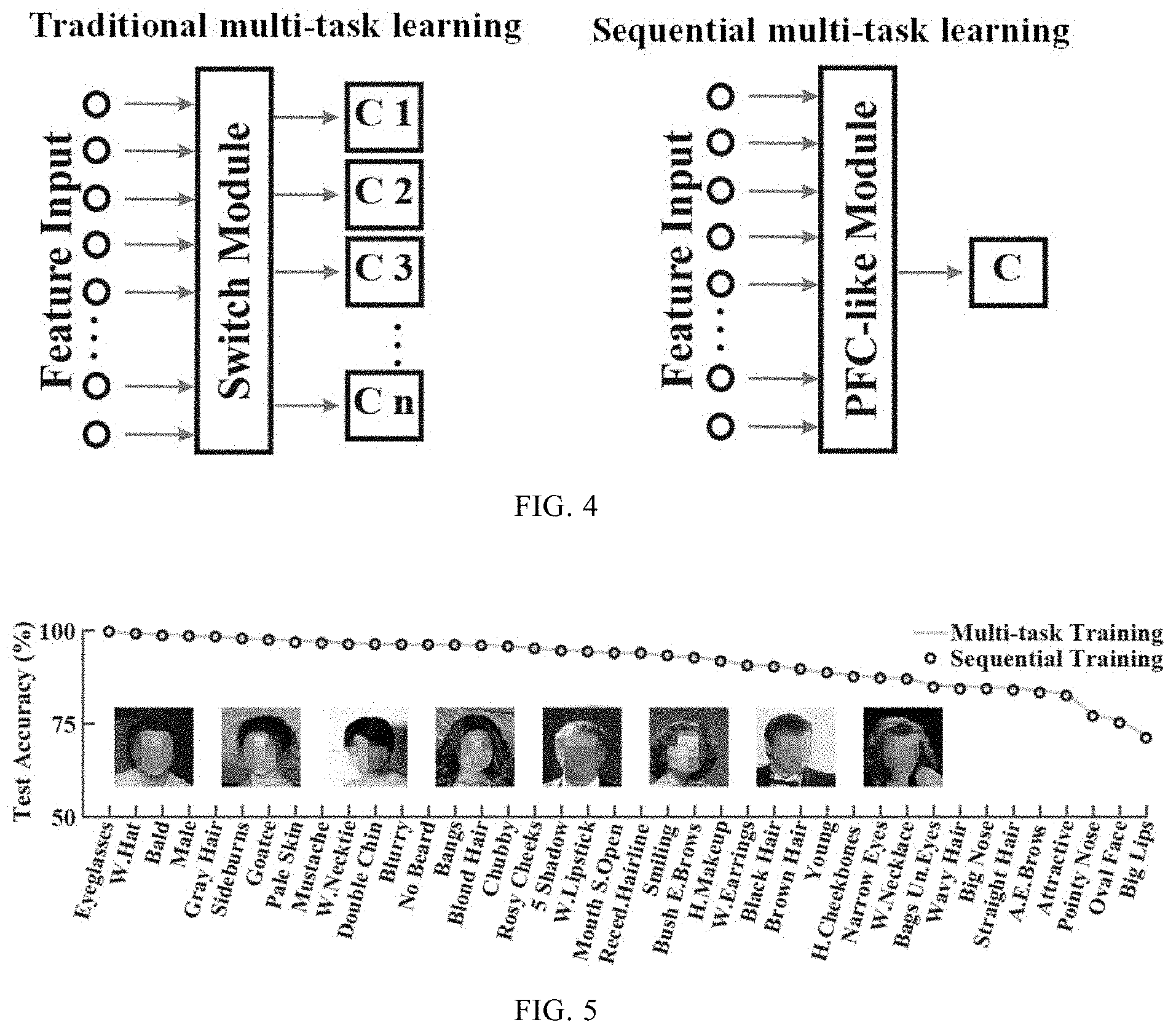

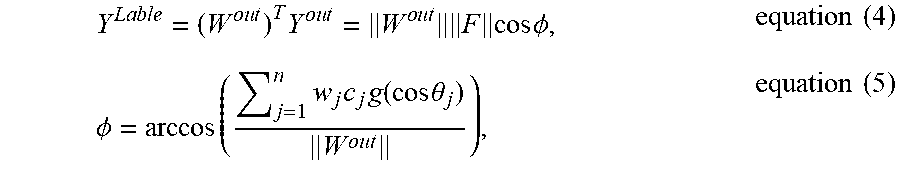

[0043] FIG. 4 is a schematic diagram showing the network architectures of the traditional multi-task learning network and the sequential multi-task learning network according to the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention; and

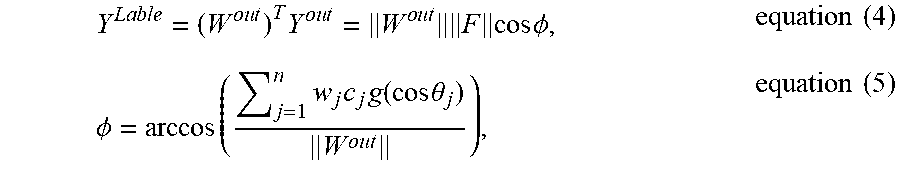

[0044] FIG. 5 is a schematic diagram showing the accuracy of the face recognition task of the multi-task training and the sequential multi-scene training according to the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention.

DETAILED DESCRIPTION OF THE EMBODIMENTS

[0045] The present invention is further described in detail hereinafter with reference to the drawings and embodiments. Understandably, the specific embodiments described herein are only used to explain the present invention rather than to limit the present invention. In addition, it should be noted that for the convenience of description, only the parts related to the present invention are shown in the drawings.

[0046] It should be noted that without conflict, the embodiments in the present invention and the features in the embodiments may be combined with each other. The present invention will be explained in detail with reference to the drawings and embodiments below.

[0047] An information processing method based on contextual signals and a prefrontal cortex-like network includes:

[0048] step S10: a feature vector extractor is selected based on obtained information to perform feature extraction to obtain an information feature vector;

[0049] step S20: the information feature vector is input into a prefrontal cortex-like network, and dimensional matching is performed between the information feature vector and each contextual signal in an input contextual signal set to obtain contextual feature vectors to constitute a contextual feature vector set; and

[0050] step S30: each feature vector in the contextual feature vector set is classified by a feature vector classifier to obtain classification information of the each feature vector to constitute a classification information set, wherein the feature vector classifier is a mapping network of the contextual feature vectors and the classification information.

[0051] In order to more clearly explain the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention, the steps in the embodiment of the method of the present invention are described in detail below with reference to FIG. 1.

[0052] An information processing method based on contextual signals and a prefrontal cortex-like network according to the first embodiment of the present invention includes steps S10 to S30, and each step is described in detail as follows.

[0053] Step S10: a feature vector extractor is selected based on obtained information to perform feature extraction to obtain an information feature vector.

[0054] Based on a preset feature vector extractor library, the feature vector extractor corresponding to the category of the obtained information is selected.

[0055] The feature vector extractor library includes at least one selected from the group consisting of an image feature vector extractor, a speech feature vector extractor and a text feature vector extractor. Alternatively, the feature vector extractor library can also include feature vector extractors of other common information categories, which are not enumerated in detail herein. In the present invention, the feature vector extractor can be constructed based on the deep neural network. For example, a deep neural network such as a residual neural network (ResNet) can be selected for the input image information. The deep neural network such as a convolutional neural network (CNN), long short-term memory (LSTM) neural network and gated recurrent unit (GRU) neural network can be selected for the input speech information. The deep neural network such as fastText, Text-convolutional neural network (TextCNN) and Text-recurrent neural network (TextRNN) can be used for the input text information.
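Selecting an extractor by information category amounts to a lookup in a registry. The sketch below is a hypothetical illustration: the names `EXTRACTOR_LIBRARY` and `select_extractor` are assumptions, and the two placeholder extractors stand in for trained deep networks such as those listed above.

```python
from typing import Callable, Dict
import numpy as np

FeatureExtractor = Callable[[object], np.ndarray]

def image_extractor(image) -> np.ndarray:
    """Placeholder: flatten pixel values (a trained image network would go here)."""
    return np.asarray(image, dtype=float).ravel()

def text_extractor(text) -> np.ndarray:
    """Placeholder: counts of the letters a-z (a trained text network would go here)."""
    counts = np.zeros(26)
    for ch in str(text).lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1
    return counts

# Preset library keyed by information category (hypothetical keys)
EXTRACTOR_LIBRARY: Dict[str, FeatureExtractor] = {
    "image": image_extractor,
    "text": text_extractor,
}

def select_extractor(category: str) -> FeatureExtractor:
    """Return the extractor registered for the given information category."""
    try:
        return EXTRACTOR_LIBRARY[category]
    except KeyError:
        raise ValueError(f"no feature vector extractor for category {category!r}") from None

features = select_extractor("text")("Smiling")   # 26-dimensional toy feature vector
```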

[0056] In a real environment, information is generally multimodal, and its features can be extracted by using several feature vector extractors in combination. This not only enriches the representation of the information, but also significantly reduces the dimension of the original information, which facilitates downstream processing.

[0057] A method for constructing each feature vector extractor in the feature vector extractor library includes:

[0058] based on the training data set, a weight of a parameter in a feature vector extraction network is iteratively updated by the Adam algorithm, wherein the feature vector extraction network is constructed based on a deep neural network; and

[0059] a network obtained after removing the last classification layer of the trained feature vector extraction network is used as the feature vector extractor.

[0060] In the preferred embodiment of the present invention, the Adam algorithm has a learning rate of 0.1, a weight decay rate of 0.0001, and a batch size of 256. The training data set used in the present invention is the face data set CelebFaces Attributes (CelebA). CelebA is an open data set from the Chinese University of Hong Kong containing 202599 face images of 10177 celebrities, each annotated with 40 attributes. Each attribute is used as a kind of scene, and different scenes correspond to different contextual signals.
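For reference, a single Adam update with the stated learning rate and weight decay can be sketched as follows. The sketch assumes the weight decay is applied as an L2 term added to the gradient and uses the commonly cited defaults for `beta1`, `beta2` and `eps`; the text specifies none of these details, and the quadratic objective is a toy example.

```python
import math
from typing import List, Tuple

def adam_step(params: List[float], grads: List[float],
              m: List[float], v: List[float], t: int,
              lr: float = 0.1, weight_decay: float = 1e-4,
              beta1: float = 0.9, beta2: float = 0.999,
              eps: float = 1e-8) -> Tuple[List[float], List[float], List[float]]:
    """One Adam update; t is the 1-based step count used for bias correction."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        g = g + weight_decay * p                 # L2 weight decay (assumed variant)
        m_i = beta1 * m_i + (1 - beta1) * g      # first-moment estimate
        v_i = beta2 * v_i + (1 - beta2) * g * g  # second-moment estimate
        m_hat = m_i / (1 - beta1 ** t)           # bias-corrected moments
        v_hat = v_i / (1 - beta2 ** t)
        new_params.append(p - lr * m_hat / (math.sqrt(v_hat) + eps))
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v

# Toy objective (hypothetical): f(w) = (w - 3)^2 with gradient 2 * (w - 3).
w, m, v = [0.0], [0.0], [0.0]
history = []
for t in range(1, 11):
    grads = [2.0 * (w[0] - 3.0)]
    w, m, v = adam_step(w, grads, m, v, t)
    history.append(w[0])
```

In actual training the gradients would come from backpropagation over mini-batches of 256 samples rather than from a closed-form derivative.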

[0061] The deep neural network ResNet50 is trained using the Adam algorithm, and the last classification layer of the ResNet50 network is removed. The output of the penultimate layer of the ResNet50 network is used as the feature of face data and has 2048 dimensions.
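The idea of dropping the last classification layer and using the remaining layers as the extractor can be sketched with a toy network. The layers below are hypothetical placeholders, not ResNet50; only the truncation pattern is the point.

```python
from typing import Callable, List

Layer = Callable[[List[float]], List[float]]

def double_layer(x: List[float]) -> List[float]:
    return [2.0 * value for value in x]

def relu_layer(x: List[float]) -> List[float]:
    return [max(0.0, value) for value in x]

def classification_layer(x: List[float]) -> List[float]:
    return [sum(x)]  # hypothetical classification head

# A "trained" network represented as an ordered list of layers.
trained_network: List[Layer] = [double_layer, relu_layer, classification_layer]

def make_extractor(layers: List[Layer]) -> Layer:
    """Build a feature extractor from every layer except the last one."""
    body = layers[:-1]

    def extractor(x: List[float]) -> List[float]:
        for layer in body:
            x = layer(x)
        return x

    return extractor

extractor = make_extractor(trained_network)
features = extractor([1.0, -2.0, 0.5])  # output of the penultimate layer
```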

[0062] Step S20: the information feature vector is input into the prefrontal cortex-like network, and dimensional matching is performed between the information feature vector and each contextual signal in the input contextual signal set to obtain the contextual feature vectors to constitute the contextual feature vector set. FIG. 2 is a schematic diagram of the prefrontal cortex-like network structure based on contextual signals according to an embodiment of the present invention.

[0063] In the preferred embodiment of the present invention, the English word vectors trained by the default parameters of the gensim toolkit are used as the contextual signals, and the contextual signals of 40 attribute classification tasks are the 200-dimensional word vectors of the corresponding attribute labels.

[0064] Step S201: a weight matrix is constructed based on the contextual signals and the prefrontal cortex-like network, and each column of the weight matrix is normalized.

[0065] The dimension of the contextual signals is 200, and the dimension of the contextual feature vector is 5000. The weight matrix with a size of 200×5000 is constructed. FIG. 3 schematically shows the three-dimensional space of the preferred embodiment of the present invention.

[0066] The weight matrix $W^{in}$ is constructed by equation (1):

$$W^{in} = [w_1^{in}, w_2^{in}, \ldots, w_m^{in}] \in \mathbb{R}^{k \times m}, \tag{1}$$

[0067] where, k denotes the dimension of the input feature, and m denotes the dimension of the hidden layer.

[0068] Each column of the weight matrix is normalized by equation (2):

$$\|w_i^{in}\| = 1, \quad i = 1, 2, \ldots, m, \tag{2}$$

[0069] where i denotes the column index of the weight matrix, which runs over the m dimensions of the hidden layer.
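Step S201 can be sketched as follows: build a k×m matrix and rescale each column to unit length, as equation (2) requires. The Gaussian random initialization and the small dimensions are assumptions made for illustration; the text does not state how the matrix entries are initialized.

```python
import math
import random
from typing import List

def build_weight_matrix(k: int, m: int, seed: int = 0) -> List[List[float]]:
    """Return a k x m matrix (list of rows) whose columns have unit L2 norm."""
    rng = random.Random(seed)
    # Assumed initialization: independent standard normal entries.
    w = [[rng.gauss(0.0, 1.0) for _ in range(m)] for _ in range(k)]
    for i in range(m):
        norm = math.sqrt(sum(w[r][i] ** 2 for r in range(k)))
        for r in range(k):
            w[r][i] /= norm  # enforce the unit-norm condition on column i
    return w

w_in = build_weight_matrix(k=4, m=6)
column_norms = [math.sqrt(sum(row[i] ** 2 for row in w_in)) for i in range(6)]
```

With unit-norm columns, each entry of the projection of a feature vector onto the matrix depends only on the length of the feature vector and the angle to that column, which is what the derivation in equation (3) relies on.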

[0070] Step S202: based on the weight matrix, dimensional matching is performed between the contextual signal and the information feature vector to obtain the contextual feature vectors, as shown in equation (3):

$$
\begin{aligned}
Y^{out} &= g\left((W^{in})^{T} F\right) \odot C \\
&= g\left([w_1^{in}, w_2^{in}, \ldots, w_m^{in}]^{T} F\right) \odot C \\
&= g\left([c_1 \|F\| \|w_1^{in}\| \cos\theta_1,\; c_2 \|F\| \|w_2^{in}\| \cos\theta_2,\; \ldots,\; c_m \|F\| \|w_m^{in}\| \cos\theta_m]^{T}\right) \\
&= g\left([c_1 \|w_1^{in}\| \cos\theta_1,\; c_2 \|w_2^{in}\| \cos\theta_2,\; \ldots,\; c_m \|w_m^{in}\| \cos\theta_m]^{T}\right) \|F\| \\
&= g\left([c_1 \cos\theta_1,\; c_2 \cos\theta_2,\; \ldots,\; c_m \cos\theta_m]^{T}\right) \|F\|,
\end{aligned}
\tag{3}
$$

[0071] where $Y^{out} = [y_1, y_2, \ldots, y_m]^{T} \in \mathbb{R}^{m}$ denotes the contextual feature vectors; $F = [f_1, f_2, \ldots, f_k]^{T} \in \mathbb{R}^{k}$ denotes the information feature vector; $C = [c_1, c_2, \ldots, c_m]^{T} \in \mathbb{R}^{m}$ denotes the contextual signal; $\odot$ denotes element-wise multiplication of vectors; $\theta_m$ denotes the angle between $w_m^{in}$ and $F$; $g(x) = \max(0, x)$; and $W^{in} = [w_1^{in}, w_2^{in}, \ldots, w_m^{in}] \in \mathbb{R}^{k \times m}$ denotes the weight matrix.
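The first and last forms of equation (3) can be compared numerically on a small example. This is a sketch under stated assumptions: the contextual signal is taken to be already in the hidden dimension m, as equation (3) requires, its entries are non-negative, and the columns of the weight matrix already have unit norm. The function names and the numbers are illustrative.

```python
import math
from typing import List

def relu(x: float) -> float:
    return max(0.0, x)

def contextual_features(w_in: List[List[float]], f: List[float],
                        c: List[float]) -> List[float]:
    """First form of equation (3): g((W_in)^T F) gated element-wise by C."""
    k, m = len(w_in), len(w_in[0])
    projection = [sum(w_in[r][i] * f[r] for r in range(k)) for i in range(m)]
    return [relu(p) * c_i for p, c_i in zip(projection, c)]

def contextual_features_cosine(w_in: List[List[float]], f: List[float],
                               c: List[float]) -> List[float]:
    """Last form of equation (3); valid for unit-norm columns and c_i >= 0."""
    k, m = len(w_in), len(w_in[0])
    f_norm = math.sqrt(sum(x * x for x in f))
    cosines = [sum(w_in[r][i] * f[r] for r in range(k)) / f_norm for i in range(m)]
    return [relu(c_i * cos_t) * f_norm for c_i, cos_t in zip(c, cosines)]

# k = 2, m = 3; the columns (1, 0), (0, 1), (-1, 0) have unit norm.
w_in = [[1.0, 0.0, -1.0],
        [0.0, 1.0, 0.0]]
f = [3.0, 4.0]       # information feature vector, length 5
c = [1.0, 0.5, 2.0]  # contextual signal in the hidden dimension

y_out = contextual_features(w_in, f, c)           # [3.0, 2.0, 0.0]
y_check = contextual_features_cosine(w_in, f, c)  # agrees up to rounding
```

The third hidden unit points away from the feature vector, so the rectifier sets it to zero regardless of how strongly the contextual signal weights it.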

[0072] Step S203: the contextual feature vector set is constructed by the contextual feature vectors obtained after performing the dimensional matching between each contextual signal in the contextual signal set and the input information feature vector.
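Step S203 amounts to repeating the matching of equation (3) once per contextual signal and collecting the results. In the sketch below the set is stored as a dictionary keyed by attribute name; the container type, the function names and the example attribute names are assumptions for illustration.

```python
from typing import Dict, List

def match(w_in: List[List[float]], f: List[float], c: List[float]) -> List[float]:
    """Equation (3): rectified projection gated element-wise by context c."""
    k, m = len(w_in), len(w_in[0])
    return [max(0.0, sum(w_in[r][i] * f[r] for r in range(k))) * c[i]
            for i in range(m)]

def build_contextual_feature_set(w_in: List[List[float]], f: List[float],
                                 contexts: Dict[str, List[float]]
                                 ) -> Dict[str, List[float]]:
    """One contextual feature vector per contextual signal."""
    return {name: match(w_in, f, c) for name, c in contexts.items()}

w_in = [[1.0, 0.0, -1.0],
        [0.0, 1.0, 0.0]]
f = [3.0, 4.0]
contexts = {
    "Smiling": [1.0, 0.0, 1.0],     # illustrative contextual signals
    "Eyeglasses": [0.0, 1.0, 1.0],
}
feature_set = build_contextual_feature_set(w_in, f, contexts)
```

The same information feature vector yields a different contextual feature vector for each contextual signal.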

[0073] Step S30: each feature vector in the contextual feature vector set is classified by a feature vector classifier to obtain classification information of each feature vector to constitute a classification information set, wherein the feature vector classifier is a mapping network between the contextual feature vectors and the classification information.

[0074] The feature vector classifier is constructed based on equation (4) and equation (5):

$$Y^{Label} = (W^{out})^{T} Y^{out} = \|W^{out}\| \|F\| \cos\phi, \tag{4}$$

$$\phi = \arccos\left(\frac{\sum_{j=1}^{n} w_j c_j\, g(\cos\theta_j)}{\|W^{out}\|}\right), \tag{5}$$

[0075] where $Y^{Label}$ denotes the classification information, $W^{out}$ denotes the classification weight of the classifier, $Y^{out}$ denotes the output feature of the prefrontal cortex-like network, $n$ denotes the dimension of the output weight of the prefrontal cortex-like network, and $F$ denotes the information feature vector.
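The relation between equations (4) and (5) can be checked numerically: the linear readout of the contextual feature vector equals the product of the two norms and the cosine defined through equation (5). The sketch assumes unit-norm columns in the weight matrix and non-negative contextual signal entries; all numbers are illustrative. It works with the cosine directly and does not call `arccos`, since the quotient in equation (5) is not guaranteed to lie in [-1, 1] for arbitrary contextual signals.

```python
import math
from typing import List

def norm(vector: List[float]) -> float:
    return math.sqrt(sum(x * x for x in vector))

# k = 2, m = n = 3; columns of w_in are unit vectors.
w_in = [[1.0, 0.0, -1.0],
        [0.0, 1.0, 0.0]]
f = [3.0, 4.0]
c = [1.0, 0.5, 2.0]
w_out = [0.5, -1.0, 2.0]  # hypothetical classifier weights

k, m = len(w_in), len(w_in[0])
columns = [[w_in[r][i] for r in range(k)] for i in range(m)]

# cos(theta_j): angle between column j of w_in and the feature vector f.
cos_theta = [sum(a * b for a, b in zip(col, f)) / (norm(col) * norm(f))
             for col in columns]

# Contextual feature vector from the last form of equation (3).
y_out = [norm(f) * c_j * max(0.0, ct) for c_j, ct in zip(c, cos_theta)]

# Left-hand side of equation (4): linear readout.
y_label = sum(w * y for w, y in zip(w_out, y_out))

# Right-hand side of equation (4), with cos(phi) taken from equation (5).
cos_phi = sum(w * c_j * max(0.0, ct)
              for w, c_j, ct in zip(w_out, c, cos_theta)) / norm(w_out)
rhs = norm(w_out) * norm(f) * cos_phi
```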

[0076] FIG. 4 is a schematic diagram showing the network architectures of the traditional multi-task learning network and the sequential multi-task learning network according to the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention, wherein C represents the classifier. In order to achieve context-dependent processing, a switch module and n classifiers are required in multitask training, wherein n represents the number of contextual signals.
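The architectural difference can be illustrated with a toy comparison. This is a loose sketch and not a reproduction of FIG. 4: `switched_classify` stands for a switch module that selects one of n classifiers, while `contextual_classify` stands for a single classifier whose input is gated by a contextual signal. The function names and values are hypothetical.

```python
from typing import List

def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))

def switched_classify(classifiers: List[List[float]], task_index: int,
                      hidden: List[float]) -> float:
    """Traditional multi-task setup: a switch picks one of n classifiers."""
    return dot(classifiers[task_index], hidden)

def contextual_classify(w_out: List[float], hidden: List[float],
                        context: List[float]) -> float:
    """Context-based setup: one classifier, hidden activations gated by context."""
    gated = [h * c for h, c in zip(hidden, context)]
    return dot(w_out, gated)

hidden = [1.0, 2.0]                     # rectified hidden activations
classifiers = [[1.0, 0.0], [0.0, 1.0]]  # n = 2 task-specific classifiers
score_switch = switched_classify(classifiers, 1, hidden)

w_out = [1.0, 1.0]                      # single shared classifier
score_context = contextual_classify(w_out, hidden, [0.0, 1.0])
```

In the switched setup the number of classifiers grows with the number of contexts, whereas in the gated setup only the contextual signal changes.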

[0077] FIG. 5 is a schematic diagram showing the accuracy of the face recognition task of the multi-task training and the sequential multi-scene training according to the information processing method based on the contextual signals and the prefrontal cortex-like network of the present invention. Each point represents one face attribute, and the number of points is 40 in total. Each attribute is associated with one contextual signal, so that sequential multi-task learning based on contextual signals can be performed to obtain the results of multi-task training.

[0078] An information processing system based on contextual signals and a prefrontal cortex-like network according to the second embodiment of the present invention includes an acquisition module, a feature extraction module, a dimensional matching module, a classification module and an output module.

[0079] The acquisition module is configured to obtain and input the information and the contextual signal set.

[0080] The feature extraction module is configured to extract the features of the input information by using a feature vector extractor corresponding to the input information to obtain an information feature vector.

[0081] The dimensional matching module is configured to input the information feature vector into the prefrontal cortex-like network, and perform dimensional matching between the information feature vector and each contextual signal in the input contextual signal set to obtain contextual feature vectors to constitute a contextual feature vector set.

[0082] The classification module is configured to classify each feature vector in the contextual feature vector set by a pre-constructed feature vector classifier to obtain classification information of each feature vector to constitute a classification information set.

[0083] The output module is configured to output the acquired classification information set.
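The five modules can be wired together as in the following sketch. The class name, method names and toy values are assumptions introduced for illustration; the extractor is a placeholder, the weight matrix is assumed to have unit-norm columns, and the contextual signals are assumed to be already in the hidden dimension.

```python
from typing import Callable, Dict, List

Vector = List[float]

class ContextualProcessingSystem:
    """Hypothetical wiring of the five modules; all names are illustrative."""

    def __init__(self, extractor: Callable[[object], Vector],
                 w_in: List[Vector], w_out: Vector) -> None:
        self.extractor = extractor
        self.w_in = w_in    # k x m matrix with unit-norm columns
        self.w_out = w_out  # classifier weights of length m

    def acquire(self, information, contexts: Dict[str, Vector]):
        # Acquisition module: obtain the information and the contextual signal set.
        return information, contexts

    def extract(self, information) -> Vector:
        # Feature extraction module.
        return self.extractor(information)

    def match(self, f: Vector, contexts: Dict[str, Vector]) -> Dict[str, Vector]:
        # Dimensional matching module: rectified projection gated by each context.
        k, m = len(self.w_in), len(self.w_in[0])
        hidden = [max(0.0, sum(self.w_in[r][i] * f[r] for r in range(k)))
                  for i in range(m)]
        return {name: [h * c_i for h, c_i in zip(hidden, c)]
                for name, c in contexts.items()}

    def classify(self, feature_set: Dict[str, Vector]) -> Dict[str, float]:
        # Classification module: linear readout per contextual feature vector.
        return {name: sum(w * y for w, y in zip(self.w_out, y_out))
                for name, y_out in feature_set.items()}

    def output(self, classification_set: Dict[str, float]) -> Dict[str, float]:
        # Output module.
        return classification_set

    def run(self, information, contexts: Dict[str, Vector]) -> Dict[str, float]:
        information, contexts = self.acquire(information, contexts)
        f = self.extract(information)
        return self.output(self.classify(self.match(f, contexts)))

system = ContextualProcessingSystem(
    extractor=lambda x: list(x),  # placeholder extractor: identity
    w_in=[[1.0, 0.0, -1.0], [0.0, 1.0, 0.0]],
    w_out=[1.0, 1.0, 1.0],
)
result = system.run([3.0, 4.0], {"Smiling": [1.0, 0.0, 1.0],
                                 "Eyeglasses": [0.0, 1.0, 1.0]})
```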

[0084] It can be clearly understood by those skilled in the art that for the convenience and brevity of the description, the specific working process and related description of the system described above can refer to the corresponding processes in the foregoing embodiments of the method of the present invention, which will not be repeatedly described here.

[0085] It should be noted that the information processing system based on the contextual signals and the prefrontal cortex-like network provided in the above embodiment is only exemplified by the division of the above functional modules. In practical applications, the above functions may be allocated to be completed by different functional modules as needed, that is, the modules or steps in the embodiments of the present invention are further decomposed or combined. For example, the modules in the above embodiments can be combined into one module, or can be further split into a plurality of sub-modules to complete all or a part of the functions of the above description. The designations of the modules and steps involved in the embodiments of the present invention are only intended to distinguish these modules or steps, and should not be construed as an improper limitation of the present invention.

[0086] The third embodiment of the present invention provides a storage device, wherein a plurality of programs are stored in the storage device. The programs are configured to be loaded and executed by a processor to implement the information processing method based on the contextual signals and the prefrontal cortex-like network described above.

[0087] A processing device according to the fourth embodiment of the present invention includes a processor and a storage device. The processor is configured to execute a plurality of programs, and the storage device is configured to store the plurality of programs. The programs are configured to be loaded and executed by the processor to implement the information processing method based on the contextual signals and the prefrontal cortex-like network described above.

[0088] It can be clearly understood by those skilled in the art that for the convenience and brevity of the description, the specific working process and related description of the storage device and the processing device described above can refer to the corresponding processes in the foregoing embodiments of the method of the present invention, which will not be repeatedly described here.

[0089] Those skilled in the art can realize that the exemplary modules and steps of methods described with reference to the embodiments disclosed herein can be implemented by electronic hardware, computer software or a combination of the electronic hardware and the computer software. The programs corresponding to modules of software and/or steps of methods can be stored in a random access memory (RAM), a memory, a read-only memory (ROM), an electrically programmable ROM, an electrically erasable programmable ROM, a register, a hard disk, a removable disk, a compact disc read-only memory (CD-ROM), or any other form of storage medium well known in the technical field. In order to clearly illustrate the interchangeability of electronic hardware and software, in the above description, the compositions and steps of each embodiment have been generally described functionally. Whether these functions are performed by electronic hardware or software depends on specific applications and design constraints of the technical solution. Those skilled in the art may use different methods to implement the described functions for each specific application, but such implementation should not be considered beyond the scope of the present invention.

[0090] The terminology "include/comprise" or any other similar terminologies are intended to cover non-exclusive inclusions, so that a process, method, article or apparatus/device including a series of elements not only includes those elements but also includes other elements that are not explicitly listed, or further includes elements inherent in the process, method, article or apparatus/device.

[0091] Hereto, the technical solutions of the present invention have been described in combination with the preferred implementations with reference to the drawings. However, it is easily understood by those skilled in the art that the scope of protection of the present invention is obviously not limited to these specific embodiments. Without departing from the principle of the present invention, those skilled in the art can make equivalent modifications or replacements to related technical features, and the technical solutions obtained through these modifications or replacements shall fall within the scope of protection of the present invention.

* * * * *
