Entering Of Human Face Information Into Database

Cai; Haijiao ; et al.

U.S. patent application number 16/678838 was filed with the patent office on 2021-02-04 for entering of human face information into database. This patent application is currently assigned to NEXTVPU (SHANGHAI) CO., LTD.. The applicant listed for this patent is NEXTVPU (SHANGHAI) CO., LTD.. Invention is credited to Haijiao Cai, Xinpeng Feng, Ji Zhou.

| Application Number | 20210034898 16/678838 |

| Document ID | / |

| Family ID | 1000004482907 |

| Filed Date | 2021-02-04 |

| United States Patent Application | 20210034898 |

| Kind Code | A1 |

| Cai; Haijiao ; et al. | February 4, 2021 |

ENTERING OF HUMAN FACE INFORMATION INTO DATABASE

Abstract

A processor chip circuit is provided, which is used for entering human face information into a database and includes a circuit unit configured to perform the steps of: videoing one or more videoed persons and extracting human face information of the one or more videoed persons from one or more video frames during the videoing; recording a voice of at least one of the one or more videoed persons during the videoing; performing semantic analysis on the recorded voice so as to extract respective information therefrom; and associating the extracted information with the human face information of the videoed person who has spoken the extracted information, and entering the associated information into the database.

| Inventors: | Cai; Haijiao; (Shanghai, CN) ; Feng; Xinpeng; (Shanghai, CN) ; Zhou; Ji; (Shanghai, CN) | ||||||||||

| Applicant: |

|

||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|

| Assignee: | NEXTVPU (SHANGHAI) CO.,

LTD. Shanghai CN |

||||||||||

| Family ID: | 1000004482907 | ||||||||||

| Appl. No.: | 16/678838 | ||||||||||

| Filed: | November 8, 2019 |

Related U.S. Patent Documents

| Application Number | Filing Date | Patent Number | ||

|---|---|---|---|---|

| PCT/CN2019/104108 | Sep 3, 2019 | |||

| 16678838 | ||||

| Current U.S. Class: | 1/1 |

| Current CPC Class: | G06K 9/00255 20130101; G06K 9/00288 20130101; G10L 17/06 20130101; G06K 9/00718 20130101; G10L 17/00 20130101; G06K 9/00926 20130101; G06F 16/784 20190101; G06F 16/7834 20190101 |

| International Class: | G06K 9/00 20060101 G06K009/00; G10L 17/00 20060101 G10L017/00; G10L 17/06 20060101 G10L017/06; G06F 16/783 20060101 G06F016/783 |

Foreign Application Data

| Date | Code | Application Number |

|---|---|---|

| Jul 29, 2019 | CN | 201910686122.3 |

Claims

1. A processor chip circuit for entering human face information into a database, comprising: a circuit unit coupled with an auxiliary wearable device configured for being worn by a visually impaired person, the circuit unit being configured to perform, from the auxiliary wearable device, the steps of: videoing one or more videoed persons and extracting human face information of the one or more videoed persons from one or more video frames during the videoing; recording a voice of at least one of the one or more videoed persons during the videoing, wherein the voice of the at least one videoed person comprises identity information of a speaker that is spoken by the speaker; performing semantic analysis on the recorded voice so as to extract respective information therefrom, wherein the extracted respective information comprises the identity information of the speaker; and associating the extracted information with the human face information of the videoed person who has spoken the extracted information, and entering the associated information into the database, wherein the circuit unit is further configured to perform, from the auxiliary wearable device, the step of: accessing, during a conversation participated by the visually impaired person and at least one of the one or more videoed persons, the database having the entered associated information to provide the visually impaired person with the identity information of the speaker.

2-3. (canceled)

4. The processor chip circuit according to claim 1, wherein associating the extracted information with the human face information of the videoed person who has spoken the extracted information comprises: analyzing the movement of lips of the one or more videoed persons from the one or more video frames during the videoing.

5. The processor chip circuit according to claim 4, wherein a start time of the movement of the lips is compared with a start time at which the voice is recorded.

6. A method for entering human face information into a database, comprising: videoing, from an auxiliary wearable device configured for being worn by a visually impaired person, one or more videoed persons and extracting human face information of the one or more videoed persons from one or more video frames during the videoing; recording, from the auxiliary wearable device, a voice of at least one of the one or more videoed persons during the videoing, wherein the voice of the at least one videoed person comprises identity information of a speaker that is spoken by the speaker; performing, from the auxiliary wearable device, semantic analysis on the recorded voice so as to extract respective information therefrom, wherein the extracted respective information comprises the identity information of the speaker; and associating, from the auxiliary wearable device, the extracted information with the human face information of the videoed person who has spoken the extracted information, and entering the associated information into the database, wherein the method further comprises: accessing, from the auxiliary wearable device, the database having the entered associated information to provide the visually impaired person with the identity information of the speaker, during a conversation participated by the visually impaired person and at least one of the one or more videoed persons.

7. The method according to claim 6, wherein the human face information comprises face feature information for identifying the one or more videoed persons.

8. The method according to claim 6, wherein the voice of the at least one videoed person comprises identity information of a speaker, and the extracted respective information comprises the identity information of the speaker.

9. The method according to claim 6, wherein the identity information of the speaker comprises a name of the speaker.

10. The method according to claim 6, wherein the voice of the at least one videoed person comprises information related to a scenario where a speaker is located, and the extracted respective information comprises the information related to the scenario where the speaker is located.

11-12. (canceled)

13. The method according to claim 6, wherein associating the extracted information with the human face information of the videoed person who has spoken the extracted information comprises: analyzing the movement of lips of the one or more videoed persons from the one or more video frames during the videoing.

14. The method according to claim 13, wherein a start time of the movement of the lips is compared with a start time at which the voice is recorded.

15. The method according to claim 6, wherein it is detected whether the human face information of the at least one videoed person is already stored in the database, and if the human face information of the at least one videoed person is not in the database, the recorded voice is analyzed.

16. The method according to claim 6, wherein it is detected whether the human face information of the at least one videoed person is already stored in the database, and if the human face information of the at least one videoed person has been stored in the database, the extracted information is used to supplement information associated with the human face information of the at least one videoed person that is already stored in the database.

17. The method according to claim 6, wherein the extracted information is stored in the database as text information.

18. A non-transitory computer readable storage medium storing a program which comprises instructions that, when executed by a processor of an electronic device, cause the electronic device to perform the steps of: videoing, from an auxiliary wearable device configured for being worn by a visually impaired person, one or more videoed persons and extracting human face information of the one or more videoed persons from one or more video frames during the videoing; recording, from the auxiliary wearable device, a voice of at least one of the one or more videoed persons during the videoing, wherein the voice of the at least one videoed person comprises identity information of a speaker that is spoken by the speaker; performing, from the auxiliary wearable device, semantic analysis on the recorded voice so as to extract respective information therefrom, wherein the extracted respective information comprises the identity information of the speaker; and associating, from the auxiliary wearable device, the extracted information with the human face information of the videoed person who has spoken the extracted information, and entering the associated information into the database, wherein the instructions, when executed by the processor, further cause the electronic device to perform the step of: accessing, from the auxiliary wearable device, the database having the entered associated information to provide the visually impaired person with the identity information of the speaker, during a conversation participated by the visually impaired person and at least one of the one or more videoed persons.

19. (canceled)

20. The non-transitory computer readable storage medium according to claim 18, wherein associating the extracted information with the human face information of the videoed person who has spoken the extracted information comprises: analyzing the movement of lips of the one or more videoed persons from the video frames during the videoing.

Description

CROSS-REFERENCES TO RELATED APPLICATIONS

[0001] This application claims priority to and is a continuation of International Patent Application No. PCT/CN2019/104108, filed Sep. 3, 2019; which claims priority from Chinese Patent Application No. CN 201910686122.3, filed Jul. 29, 2019. The entire contents of the PCT/CN2019/104108 application is incorporated by reference herein in its entirety for all purposes.

TECHNICAL FIELD

[0002] The present disclosure relates to human face recognition, and in particular to a method for entering human face information into a database, and a processor chip circuit and a non-transitory computer readable storage medium.

DESCRIPTION OF THE RELATED ART

[0003] Human face recognition is a biometric recognition technology for recognition based on human face feature information. The human face recognition technology uses a video camera or a camera to capture an image or a video stream containing a human face, and automatically detects the human face in the image, thereby performing human face recognition on the detected human face. Establishing a human face information database is a prerequisite for human face recognition. In the process of entering human face information into the database, the information corresponding to the captured human face information is usually entered by a user of an image and video capturing device.

BRIEF SUMMARY OF THE INVENTION

[0004] An objective of the present disclosure is to provide a method for entering human face information into a database, and a processor chip circuit and a non-transitory computer readable storage medium.

[0005] According to an aspect of the present disclosure, a method for entering human face information into a database is provided, the method including: videoing one or more videoed persons and extracting human face information of the one or more videoed persons from one or more video frames during the videoing; recording a voice of at least one of the one or more videoed persons during the videoing; performing semantic analysis on the recorded voice so as to extract respective information therefrom; and associating the extracted information with the human face information of the videoed person who has spoken the extracted information, and entering the associated information into the database.

[0006] According to another aspect of the present disclosure, a processor chip circuit is provided, which is used for entering human face information into a database and includes a circuit unit configured to perform the steps of the method above.

[0007] According to yet another aspect of the present disclosure, a non-transitory computer readable storage medium is provided, the storage medium having stored thereon a program which contains instructions that, when executed by a processor of an electronic device, cause the electronic device to perform the steps of the method above.

BRIEF DESCRIPTION OF THE DRAWINGS

[0008] The accompanying drawings exemplarily show the embodiments and constitute a part of the specification for interpreting the exemplary implementations of the embodiments together with the description of the specification. The embodiments shown are merely for illustrative purposes and do not limit the scope of the claims. In all the figures, the same reference signs refer to similar but not necessarily identical elements.

[0009] FIG. 1 shows a flow chart for associating human face information with information extracted from a voice according to a first implementation;

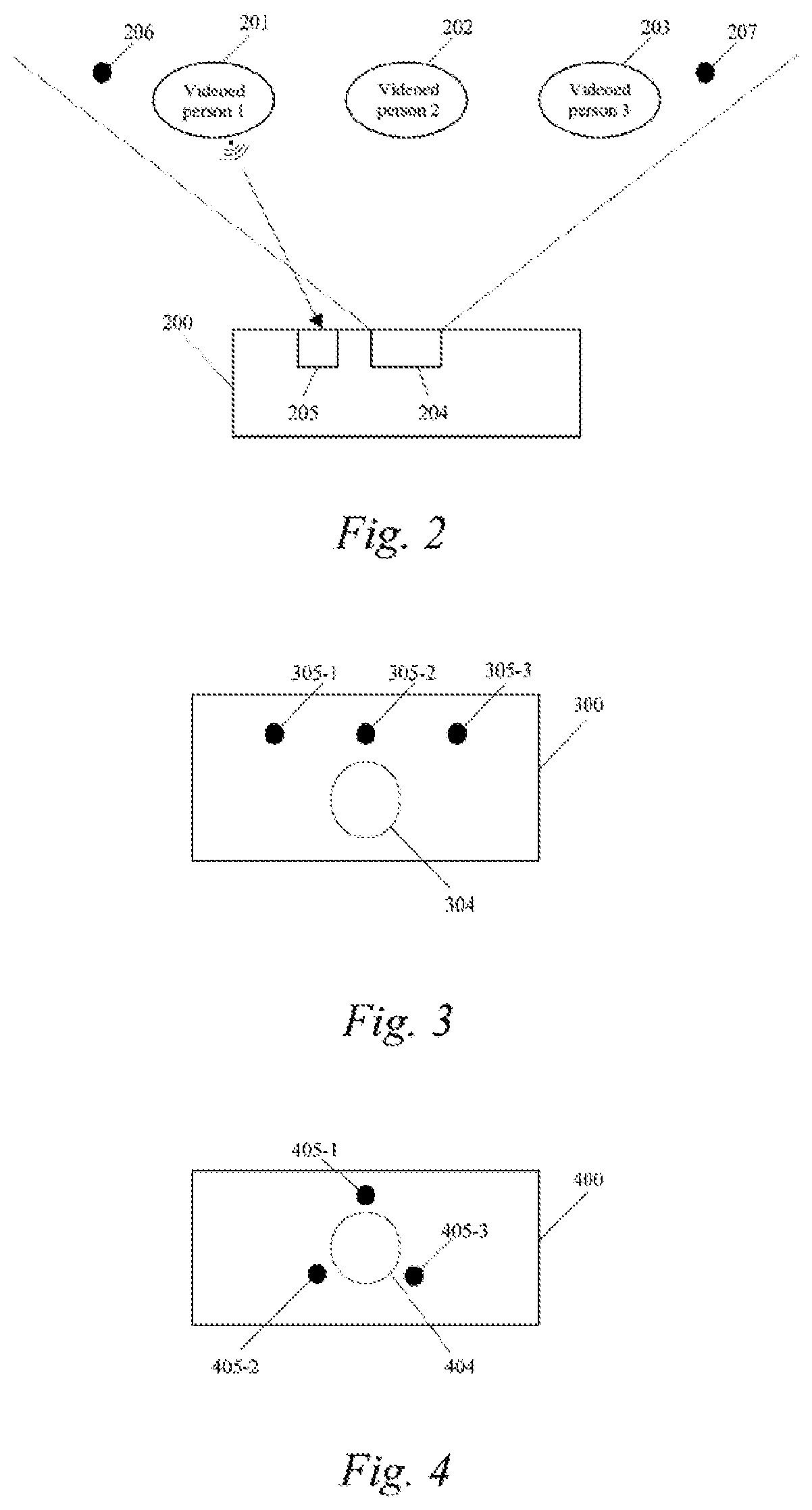

[0010] FIG. 2 exemplarily shows a scenario where human face information is entered for a plurality of videoed persons;

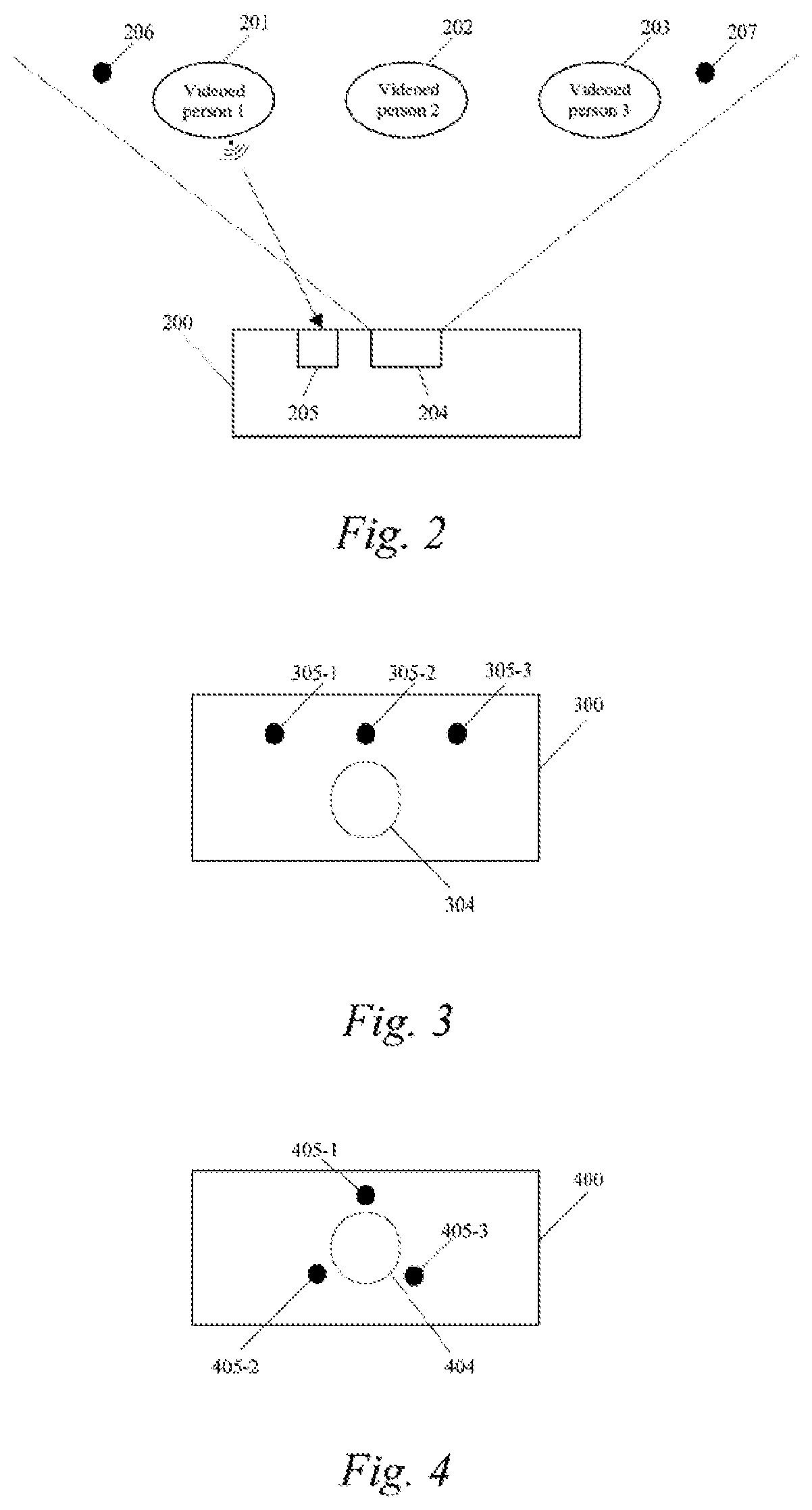

[0011] FIG. 3 shows a first arrangement of a microphone array and a camera;

[0012] FIG. 4 shows a second arrangement of the microphone array and the camera;

[0013] FIG. 5 exemplarily displays a video image in association with an audio waveform based on a common time axis;

[0014] FIG. 6 shows a flow chart for associating human face information with information extracted from a voice according to a second implementation;

[0015] FIG. 7 shows a flow chart for associating human face information with information extracted from a voice according to a third implementation; and

[0016] FIG. 8 shows a structural block diagram of an exemplary computing device that can be applied to an exemplary embodiment.

DETAILED DESCRIPTION OF THE INVENTION

[0017] In the present disclosure, unless otherwise stated, the terms "first", "second", etc., used to describe various elements are not intended to limit the positional, temporal or importance relationship of these elements, but rather only to distinguish one component from the other. In some examples, the first element and the second element may point to the same instance of the elements, and in some cases, based on contextual descriptions, they may also refer to different instances.

[0018] FIG. 1 shows a flow chart for associating human face information with information extracted from a voice according to a first implementation of the present disclosure.

[0019] Hereinafter, a scenario with only one videoed person is first described in accordance with the steps in FIG. 1. The scenario is, for example, a scenario where government department or bank staff needs to enter a human face and identity of a videoed person; or it is a scenario where a visually impaired person passively enters, by using an auxiliary wearable device, a human face and related information including the identity of the person speaking to him/her.

[0020] In step S101, one videoed person is videoed and human face information of the videoed person is extracted from one or more video frames during the videoing.

[0021] Videoing can be done with the aid of a video camera, a camera or other video capturing units with an image sensor. When the videoed person is within a videoing range of a video capturing unit, the video capturing unit can automatically search for the human face by means of the human face recognition technology, and then extract the human face information of the videoed person for human face recognition.

[0022] The human face information includes human face feature information that can be used to identify the videoed person. The features that can be used by a human face recognition system include visual features, pixel statistical features, human face image transform coefficient features, human face image algebra features, and the like. For example, the geometric description of a structural relationship among the parts such as the eyes, the nose, the mouth, the chin, etc. of the human face, and the iris can be both used as important features for recognizing the human face.

[0023] During human face recognition, the extracted human face information above is searched and matched with human face information templates stored in the database, and identity information of the human face is determined according to the degree of similarity. For example, the degree of similarity can be determined by training a neural network through deep learning.

[0024] In step S103, a voice of the videoed person during the videoing is recorded.

[0025] The voice can contain the speaker's own identity information; alternatively or additionally, the voice may also include information related to the scenario where the speaker himself/herself is located. For example, in a medical treatment scenario for a visually impaired person, the conversation content of a doctor may include not only the doctor's identity information, such as the name, his/her department and his/her position, but also effective voice information about a treatment mode, a medicine-taking way, etc.

[0026] The voice can be captured by an audio capturing unit such as a microphone. The videoed person actively spoke the information, e.g. his/her own identity information, "I am Wang Jun", etc. The identity information includes at least the name; however, depending on the purpose of the database, it may also include other information, such as age, place of birth, and the above-mentioned work unit and duty.

[0027] In step S105, semantic analysis is performed on the recorded voice, and corresponding information is extracted therefrom.

[0028] Extracting information from a voice can be realized with a voice recognition technology, and the extracted information can be stored in the form of texts. Based on a voice database for Chinese (including different dialects), English and other languages that is provided by a voice recognition technology provider, the information reported in multiple languages can be recognized. As described above, the extracted information may be the speaker's own identity information; alternatively or additionally, the extracted information may also include information related to the scenario where the speaker himself/herself is located. It should be noted that the identity information extracted by means of semantic analysis is different from voiceprint information of the speaker.

[0029] The degree of cooperation of the videoed person may affect the result of voice recognition. It can be understood that if the videoed person has clearly spoken the information at an appropriate speed, the result of the voice recognition will be more accurate.

[0030] In step S107, the extracted information is associated with the human face information of the videoed person who has spoken the extracted information, and the associated information is entered into the database.

[0031] In the scenario with only one videoed person, it can be determined that the extracted human face information and the extracted information belong to the same videoed person, and then the extracted human face information is stored in association with the extracted information in the database. The extracted information is stored in the database in the form of text information.

[0032] The above-mentioned human face information entry method automatically recognizes the information broadcast by the videoed person and associates same with the human face information thereof, thereby reducing the risk of a user of a video capturing unit erroneously entering information (especially identity information) of a videoed person, and improving the efficiency of human face information entry. Moreover, the method according to the present disclosure makes it possible to simultaneously enter other information related to the scenario, and thus can satisfy the user's usage requirements in different scenarios.

[0033] The steps in the flow chart of FIG. 1 can also be applied to the scenario with multiple videoed persons. The scenario is, for example, the one where a visually impaired person attends a multi-person conference or in a social occasion.

[0034] It should be understood that the human face recognition and voice recognition described above with respect to a single videoed person may be applied to each individual in the scenario including a plurality of videoed persons, respectively, and thus, related content will not be described again.

[0035] In step S101, a plurality of videoed persons are videoed and human face information of the videoed persons is extracted from one or more video frames during the videoing.

[0036] As shown in FIG. 2, within the videoing range of a video capturing unit 204 (a sector area defined by two dotted lines in FIG. 2), there are three videoed persons 201, 202, and 203 at the same time. The human face recognition technology is used to automatically search for the human faces of the plurality of videoed persons, and respective human face information is then extracted for all the videoed human faces.

[0037] In step S103, a voice of at least one of the plurality of videoed persons during the videoing is recorded.

[0038] The plurality of videoed persons can broadcast their own information in turn, and the recorded voices can be stored in a memory.

[0039] In step S105, semantic analysis is performed on each of the recorded voices, and respective information is extracted therefrom. It should be noted that, as described above, in addition to the identity information, the voice may also include information related to the scenario where the speaker is located, and such information may also be extracted by analyzing the voice, and stored in association with the human face information in the database. For the sake of brevity of explanation, the present disclosure will be illustrated below by taking the identity information in the voice as an example.

[0040] In step S107, the extracted information is associated with the human face information of the videoed person who has spoken the extracted information, and the associated information is entered into the database.

[0041] In the scenario including a plurality of videoed persons, it is possible to further distinguish between scenarios where only one person is speaking and where multiple persons are simultaneously speaking. In the case where the speaking of multiple persons causes serious interference with each other and cannot be distinguished, it is possible to choose to discard the voice recorded in the current scenario and perform voice recording again; the primary (or the only) sound in the recorded voice is analyzed to extract corresponding information when only one person is speaking, or when multiple persons are speaking but one sound can be distinguished from the others.

[0042] The association between the extracted respective information and the human face information can be implemented in the following two ways:

I. Sound Localization

[0043] In the scenario shown in a top view of FIG. 2, three videoed persons 201, 202 and 203 are located within the videoing range of the video capturing unit 204. Moreover, a device 200 for human face information entry further includes an audio capturing unit 205. It should be pointed out that FIG. 2 is not intended to define the relative positions between the audio capturing unit 205 and the video capturing unit 204.

[0044] The audio capturing unit 205 may be an array including three microphones, which are, for example, non-directional microphone elements that are highly sensitive to sound pressure.

[0045] In FIG. 3, three microphones 305-1, 305-2 and 305-3 are linearly arranged above a camera 304. In FIG. 4, three microphones 405-1, 405-2, and 405-3 form an equilateral triangle with a camera 404 as the centre.

[0046] The form of the array of microphones is not limited to the patterns in FIG. 3 and FIG. 4. It is important that the three microphones are respectively mounted at known and different positions on human face information entry devices 200, 300 and 400.

[0047] When one of the videoed persons 201, 202 and 203 broadcasts his/her own identity information, the sound waves from speaking are propagated to the three microphones 305-1, 305-2 and 305-3 of the audio capturing unit. Due to the different positions, there are phase differences among audio signals captured by the three microphones, and the direction of the sound source relative to the human face information entry device can be determined according to the information of the three phase differences. For example, as shown in FIG. 3, one microphone 305-2 of the three microphones 305-1, 305-2 and 305-3 may be disposed on the central axis of the human face information entry device 300 in a vertical direction, and the other two microphones 305-1 and 305-3 are symmetrically arranged with respect to the microphone 305-2, and the normal line passing through the microphone 305-2 and perpendicular to the plane in which they are located is used as a reference line to calibrate the specific direction of the sound source with an angle.

[0048] In the case shown in FIG. 2, the videoed person 1 is making a voice to broadcast his/her own identity information. By means of the audio capturing unit 205, the direction of the videoed person 1 with respect to the audio capturing unit 205 can be accurately determined. It should be understood that the accuracy of sound localization is related to the sensitivity of the microphone used by the audio capturing unit. If the spacing between videoed persons within the videoing range is large, the requirement for the accuracy of the sound localization is relatively low; on the contrary, if the spacing between the videoed persons within the videoing range is small, the requirement for the accuracy of the sound localization is relatively high. Based on the above teachings, during the implementation of the present disclosure, those skilled in the art can determine the performance of the audio capturing unit according to a specific application scenario (for example, according to the number of persons within the videoing range at the same time).

[0049] The video capturing units 304 and 404 can be used to map the real scenario where the videoed persons are located with the video scenario in terms of the location. This mapping can be achieved by pre-setting reference markers 206 and 207 in the real scenario (in this case, the distance from the video capturing unit to the reference marker is known), or by using a ranging function of the camera.

[0050] The use of camera ranging can be achieved in the following manner: [0051] 1) photographing a multi-view image: in the case where parameters of the camera of the video capturing units 304 and 404 are known, a sensor (such as a gyroscope) inside the device can be used to estimate the change of angle of view of the camera and the displacement of the video capturing unit, thereby inferring an actual spatial distance corresponding to the displacement of pixels in the image; or [0052] 2) photographing multiple images with different depths of focus by using a defocus (depth from focus) method, and then performing depth estimation by using the multiple images.

[0053] Based on the location mapping between the real scenario and the video scenario, a corresponding position of a certain location in the real scenario in the videoed video frame can be determined. For example, in the scenario of FIG. 2, in the case where the positions of the three videoed persons 201, 202 and 203 in the real scenario relative to the video capturing unit 204, the direction of the speaker 201 relative to the audio capturing unit 205, and the relative distance between the audio capturing unit 205 and the video capturing unit 204 are known, the position of the speaker (the videoed person 1) in the video frames can be derived, thereby associating the extracted identity information with the extracted human face information.

II. Capturing Lip Movements

[0054] The sound localization described above involves the association between audio and video in terms of spatial location, while the implementation of capturing lip movements involves the association between audio and video in terms of time.

[0055] It is beneficial to simultaneously start the video capturing unit and the audio capturing unit, and separately record video and audio.

[0056] In FIG. 5, the videoed video is displayed in association with the recorded audio waveform by a common time axis.

[0057] When the audio capturing unit detects that an audio signal is entered in a time interval from t1 to t2, and the identity information can be extracted therefrom effectively (excluding noise), the human face information entry devices 200, 300 and 400 retrieve the recorded video frames, and compare a frame 502 at time t1 and a frame 501 at a previous time (for example, before 100 ms). By comparison, it can be determined that the lip of the videoed person on the left side has an obvious opening action in the frame 502; similarly, a frame 503 at time t2 and a frame 504 at a later time (for example, after 100 ms) are compared; and by comparison, it can be determined that the videoed person on the left side ends the opening state of his/her lip in the frame 504.

[0058] Based on the high degree of time consistency, it can be determined that the identity information captured by the audio capturing unit within the time interval from t1 to t2 should be associated with the videoed person on the left side.

[0059] The above method for associating identity information with human face information by capturing lip movements can not only be used to reinforce the implementation of sound localization, but also can be separately used as an alternative to the sound localization.

[0060] By associating the identity information with the human face information, it is possible to enter information of a plurality of videoed persons during the same videoing, which further saves the time required for human face information entry, and can also assist a visually impaired person in quickly grasping identity information of persons present in a large conference or social occasion and storing the identity information of strangers in association with the corresponding human face information in the database. Once the database is established, during the following conversation, the position of the speaker in a video frame can be determined by the localization technique explained above, and the human face recognition can be performed to provide the visually impaired person with the identity information of the current speaker, for example, through a loudspeaker, thereby providing great convenience for the visually impaired person to participate normal social activities.

[0061] Moreover, in the scenario where multiple persons are speaking, it is also possible to accurately analyse corresponding semantics according to the videoed video of lip movements, split different sound sources by means of the audio capturing device, and compare the analyzed semantics on the video of lip movements with information of a single channel of sound source split by the audio capturing device for association.

[0062] FIG. 6 shows a flow chart for entering human face information in association with the extracted respective information into a database according to a second implementation of the present disclosure.

[0063] Unlike the implementation shown in FIG. 1, in the second implementation, it is determined whether the extracted human face information is already stored in the database before the respective information is extracted from the voice.

[0064] In step S601, one or more videoed persons are videoed and human face information of the one or more videoed persons is extracted from one or more video frames, and voices of the one or more videoed persons are recorded.

[0065] In step S602, the extracted human face information is compared with human face information templates that is already stored in the database.

[0066] If it is determined that the human face information has been stored in the database, the process proceeds to step S605 to exit a human face information entry mode.

[0067] If it is determined that the human face information has not been stored in the database, the process proceeds to S603 to start to perform semantic analysis on the voices of the one or more videoed persons that are recorded in step 601, and to extract respective information from the voices.

[0068] Preferably, when the name to be entered is already stored in the database (the corresponding human face information is different), the name to be entered may be distinguished and then entered into the database. For example, when "Wang Jun" is already in the database, "Wang Jun 2" is entered so as to distinguish from "Wang Jun" that has been entered into the database, so that during subsequent broadcast to a user, a different voice information code name is used to make the user to distinguish between different human face information.

[0069] In step S604, the extracted information is associated with the human face information and entered into the database. The manner in which the sound and the human face are associated as described above in connection with FIGS. 1 to 5 can also be applied to the second implementation.

[0070] According to the second implementation, the efficiency of entering the extracted information and the human face information can be further improved.

[0071] It should be noted that the respective information including the identity extracted according to the present disclosure is text information recognized from voice information of an audio format, and therefore, the above information is stored in the database as text information instead of voice information.

[0072] FIG. 7 shows a flow chart of entering human face information in association with identity information into a database according to a third implementation of the present disclosure.

[0073] In step S701, one or more videoed persons are videoed and human face information of the one or more videoed persons is extracted from one or more video frames during the videoing.

[0074] In step S703, semantic analysis is performed on a voice of a videoed person during the videoing, and the voice can contain the speaker's own identity information.

[0075] In step S705, it is determined whether the extracted human face information is already in the database.

[0076] If it is found, after determination, that the relevant human face information has not been stored in the database, the process proceeds to step S707, and the extracted information is stored in association with the human face information in the database. Here, the manner in which the sound and the human face are associated as described above in connection with FIGS. 1 to 5 can also be applied to the third implementation.

[0077] If it is found, after determination, that the relevant human face information is already stored in the database, the process proceeds to S710 to further determine whether the extracted information can supplement the information that is already in the database. For example, the name of the videoed person already exists in the database, and the extracted information further includes other information such as age and place of birth, or new information related to the scenario where the speaker is located.

[0078] If there is no other information that can be supplemented to the database, the process proceeds to S711 to exit the human face information entry mode.

[0079] If there is other information that can be supplemented to the database, the process proceeds to S712 to store the information that can be supplemented in the database.

[0080] According to the third implementation, a more comprehensive identity information database can be acquired with higher efficiency.

[0081] FIG. 8 is a computing device 2000 for implementing the methods or processes of the present disclosure, which is an example of a hardware device that can be applied to various aspects of the present disclosure. The computing device 2000 can be any machine configured to perform processing and/or computation. In particular, in the above-mentioned conference or social occasion where multiple persons are present, the computing device 2000 can be implemented as a wearable device, preferably as smart glasses. Moreover, the computing device 2000 can also be implemented as a tablet computer, a smart phone, or any combination thereof. The apparatus for human face information entry according to the present disclosure may be implemented, in whole or at least in part, by the computing device 2000 or a similar device or system.

[0082] The computing device 2000 may include elements in connection with a bus 2002 or in communication with a bus 2002 (possibly via one or more interfaces). For example, the computing device 2000 can include a bus 2002, one or more processors 2004, one or more input devices 2006, and one or more output devices 2008. The one or more processors 2004 may be any type of processors and may include, but are not limited to, one or more general purpose processors and/or one or more dedicated processors (e.g., special processing chips). The input device 2006 can be any type of devices capable of inputting information to the computing device 2000, and can include, but is not limited to, a camera. The output device 2008 can be any type of devices capable of presenting information, and can include, but is not limited to, a loudspeaker, an audio output terminal, a vibrator, or a display. The computing device 2000 may also include a non-transitory storage device 2010 or be connected to a non-transitory storage device 2010. The non-transitory storage device may be non-transitory and may be any storage device capable of implementing data storage, and may include, but is not limited to, a disk drive, an optical storage device, a solid-state memory, a floppy disk, a flexible disk, a hard disk, a magnetic tape, or any other magnetic medium, an optical disk or any other optical medium, a read-only memory (ROM), a random access memory (RAM), a cache memory and/or any other memory chip or cartridge, and/or any other medium from which a computer can read data, instructions and/or codes. The non-transitory storage device 2010 can be detached from an interface. The non-transitory storage device 2010 may have data/programs (including instructions)/codes for implementing the methods and steps. The computing device 2000 can also include a communication device 2012. The communication device 2012 may be any type of device or system that enables communication with an external device and/or a network, and may include, but is not limited to, a wireless communication device and/or a chipset, e.g., a Bluetooth device, a 1302.11 device, a WiFi device, a WiMax device, a cellular communication device and/or the like.

[0083] The computing device 2000 may also include a working memory 2014, which may be any type of working memory that stores programs (including instructions) and/or data useful to the working of the processor 2004, and may include, but is not limited to, a random access memory and/or a read-only memory.

[0084] Software elements (programs) may be located in the working memory 2014, and may include, but is not limited to, an operating system 2016, one or more applications 2018, drivers, and/or other data and codes. The instructions for executing the methods and steps may be included in the one or more applications 2018.

[0085] When the computing device 2000 shown in FIG. 8 is applied to an embodiment of the present disclosure, the memory 2014 may store program codes, and the videoed video and/or audio files for performing the flow charts shown in FIGS. 1, 6 and 7, in which the application 2018 may include a human face recognition application, a voice recognition application, a camera ranging application, etc. provided by a third party. The input device 2006 may be a sensor, such as a camera and a microphone, for acquiring video and audio. The storage device 2010 is used, for example, to store a database such that the associated identity information and human face information can be written into the database. The processor 2004 is used for performing, according to the program codes in the working memory 2014, the steps of the methods according to various aspects of the present disclosure.

[0086] It should also be understood that the components of the computing device 2000 can be distributed over a network. For example, some processing may be executed by one processor while other processing may be executed by another processor away from the one processor.

[0087] Other components of the computing device 2000 may also be similarly distributed. As such, the computing device 2000 can be interpreted as a distributed computing system that performs processing at multiple positions.

[0088] Although the embodiments or examples of the present disclosure have been described with reference to the accompanying drawings, it should be understood that the methods, systems and devices described above are merely exemplary embodiments or examples, and the scope of the present disclosure is not limited by the embodiments or examples, but only defined by the claims and equivalent scopes thereof. Various elements in the embodiments or examples may be omitted or substituted by equivalent elements thereof. Moreover, the steps may be executed in an order different from that described in the present disclosure. Further, various elements in the embodiments or examples may be combined in various ways. It is important that, as the technology evolves, many elements described herein may be replaced with equivalent elements that appear after the present disclosure.

* * * * *

D00000

D00001

D00002

D00003

D00004

D00005

D00006

XML

uspto.report is an independent third-party trademark research tool that is not affiliated, endorsed, or sponsored by the United States Patent and Trademark Office (USPTO) or any other governmental organization. The information provided by uspto.report is based on publicly available data at the time of writing and is intended for informational purposes only.

While we strive to provide accurate and up-to-date information, we do not guarantee the accuracy, completeness, reliability, or suitability of the information displayed on this site. The use of this site is at your own risk. Any reliance you place on such information is therefore strictly at your own risk.

All official trademark data, including owner information, should be verified by visiting the official USPTO website at www.uspto.gov. This site is not intended to replace professional legal advice and should not be used as a substitute for consulting with a legal professional who is knowledgeable about trademark law.