Methods And Devices For Encoding And Reconstructing A Point Cloud

CAI; Kangying ; et al.

U.S. patent application number 16/616491 was filed with the patent office on 2020-03-19 for methods and devices for encoding and reconstructing a point cloud. The applicant listed for this patent is INTERDIGITAL VC HOLDINGS, INC. Invention is credited to Kangying CAI, Wei HU, Sebastien LASSERRE.

| Application Number | 20200092584 16/616491 |

| Document ID | / |

| Family ID | 59061945 |

| Filed Date | 2020-03-19 |

| United States Patent Application | 20200092584 |

| Kind Code | A1 |

| CAI; Kangying ; et al. | March 19, 2020 |

METHODS AND DEVICES FOR ENCODING AND RECONSTRUCTING A POINT CLOUD

Abstract

This method comprises: accessing (2) a point cloud (PC) comprising a plurality of points defined by attributes, said attributes including a spatial position of a point in a 3D space and at least one feature of the point; segmenting (2) the point cloud into one or more clusters ($C_i$) of points on the basis of the attributes of the points; and, for at least one cluster ($C_i$): constructing (4) a similarity graph having a plurality of vertices and at least one edge, the similarity graph representing a similarity among neighboring points of the cluster ($C_i$) in terms of the attributes, the plurality of vertices including vertices $P_i$ and $P_j$ corresponding to points of the cluster ($C_i$); assigning one or more weights $w_{i,j}$ to one or more edges connecting vertices $P_i$ and $P_j$ of the graph; computing (6) a transform using the one or more assigned weights, said transform being characterized by coefficients; and quantizing (8) and encoding (10) the transform coefficients.

| Inventors: | CAI; Kangying (Rennes, FR); HU; Wei (Rennes, FR); LASSERRE; Sebastien (Thorigne Fouillard, FR) |
|---|---|
| Applicant: | INTERDIGITAL VC HOLDINGS, INC. |
| Family ID: | 59061945 |
| Appl. No.: | 16/616491 |
| Filed: | April 12, 2018 |
| PCT Filed: | April 12, 2018 |
| PCT NO: | PCT/EP2018/059420 |
| 371 Date: | November 24, 2019 |
| Current U.S. Class: | 1/1 |
| Current CPC Class: | H04N 19/18 20141101; H04N 19/597 20141101; H04N 19/60 20141101; H04N 19/62 20141101 |
| International Class: | H04N 19/62 20060101 H04N019/62; H04N 19/18 20060101 H04N019/18 |

Foreign Application Data

| Date | Code | Application Number |

|---|---|---|

| May 24, 2017 | EP | 17305610.2 |

Claims

1. A method comprising: segmenting a point cloud comprising a plurality of points into multiple clusters of points on the basis of spatial positions of the points in a 3D space and at least one feature of the points; obtaining a graph-based representation of each cluster of points, said graph-based representation having a plurality of vertices and at least one edge connecting two vertices, an edge comprising an indicator capturing similarities of multiple attributes of two neighboring points of a cluster; encoding transform coefficients based on said at least one indicator.

2. (canceled)

3. The method of claim 1, wherein, for at least one cluster, the method further comprises a step of or the device comprises an interface configured to transmitting the encoded transform coefficients and identifiers of the clusters.

4. (canceled)

5. The method of claim 1, wherein if a weight is less than a threshold, said weight is set to 0 and corresponding vertices are disconnected.

6. The method of claim 1, wherein the transform coefficients are coefficients of a Graph Fourier Transform.

7. The method of claim 1, wherein the at least one feature comprises color information.

8-15. (canceled)

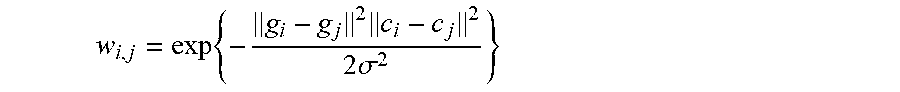

16. The method of claim 1, wherein an indicator of an edge connecting two vertices $P_i$ and $P_j$ is a weight $w_{i,j}$ defined by:

$$w_{i,j} = \exp\left\{-\frac{\|g_i - g_j\|^2 \, \|c_i - c_j\|^2}{2\sigma^2}\right\}$$

where $g_i$ and $g_j$ are vectors representing, respectively, spatial position and another feature of points $P_i$ and $P_j$ and $\sigma$ is a parameter used to control the weight value.

17. A device comprising: a segmentation module configured to segment a point cloud comprising a plurality of points into multiple clusters of points on the base of spatial positions of the points in a 3D space and at least one feature of the points; a construction module configured to obtain a graph-based representation of each cluster of points, said graph-based representation having a plurality of vertices and at least one edge connecting two vertices, an edge comprising an indicator capturing similarities of multiple attributes of two neighboring points of a cluster; a coding module configured to encode transform coefficients based on said at least one indicator.

18. The device of claim 17, wherein, for at least one cluster, the method further comprises a step of or the device comprises an interface configured to transmitting the encoded transform coefficients and identifiers of the clusters.

19. The device of claim 17, wherein if a weight is less than a threshold, said weight is set to 0 and corresponding vertices are disconnected.

20. The device of claim 17, wherein the transform coefficients are coefficients of a Graph Fourier Transform.

21. The device of claim 17, wherein the at least one feature comprises color information.

22. The device of claim 17, wherein an indicator of an edge connecting two vertices $P_i$ and $P_j$ is a weight $w_{i,j}$ defined by:

$$w_{i,j} = \exp\left\{-\frac{\|g_i - g_j\|^2 \, \|c_i - c_j\|^2}{2\sigma^2}\right\}$$

where $g_i$ and $g_j$ are vectors representing, respectively, spatial position and another feature of points $P_i$ and $P_j$ and $\sigma$ is a parameter used to control the weight value.

23. A method comprising: receiving data including transform coefficients associated with a cluster of points and identifiers of multiple clusters of points of a point cloud; decoding the received transform coefficients associated with each cluster of points; and reconstructing the spatial positions in a 3D space of points of the clusters and at least one feature of said points from the decoded transform coefficients and identifiers of clusters of points.

24. The method of claim 23, wherein the transform is a Graph Fourier Transform.

25. The method of claim 23, wherein the at least one feature comprises color information.

26. A device for reconstructing a point cloud comprising: a receiver configured to receive data including transform coefficients associated with a cluster of points and identifiers of multiple clusters of points of a point cloud; a decoding module configured to decode the received transform coefficients associated with each cluster of points; and a reconstruction module configured to reconstruct the spatial positions in a 3D space of points of the clusters and at least one feature of said points from the decoded transform coefficients and identifiers of clusters of points.

27. The device of claim 26, wherein the transform is a Graph Fourier Transform.

28. The device of claim 26, wherein the at least one feature comprises color information.

29. A computer readable storage medium having program instructions stored thereon which are executable by a processor to cause the processor to implement the method according to claim 1.

Description

FIELD OF THE INVENTION

[0001] The present disclosure generally relates to the field of point cloud data sources.

[0002] More particularly, it deals with point cloud compression (PCC).

[0003] Thus, the disclosure concerns a method for encoding a point cloud and a corresponding encoder.

[0004] It also concerns a method for reconstructing a point cloud and a corresponding decoder. It further concerns computer programs implementing the encoding and reconstructing methods of the invention.

BACKGROUND OF THE INVENTION

[0005] The approaches described in this section could be pursued, but are not necessarily approaches that have been previously conceived or pursued. Therefore, unless otherwise indicated herein, the approaches described in this section are not prior art to the claims in this application and are not admitted to be prior art by inclusion in this section.

[0006] A point cloud consists of a set of points. Each point is defined by its spatial location (x, y, z), i.e. geometry information, and different attributes, which typically include the color information in (R, G, B) or (Y, U, V) or any other color coordinate system. Geometry can be regarded as one of the attribute data. In the rest of this disclosure, both geometry and other attribute data are considered as attributes of points.

[0007] Point cloud data sources are found in many applications. Important applications relying on huge point cloud data sources can be found in geographic information systems, robotics, medical tomography and scientific visualization.

[0008] Beyond these applications that are more industrial and scientifically oriented, the rise in popularity of inexpensive 3D scanners based on time of flight or other depth sensing technologies, 3D capturing on mobile devices and the rise of cloud based 3D printing are creating a huge demand for large scale interoperable compressed 3D point cloud storage and transmission data formats in the consumer market.

[0009] Scanned 3D point clouds often have thousands of points and occupy large amounts of storage space. Additionally, they can be generated at a high rate when captured live from 3D scanners, increasing the data rate even further. Therefore, point cloud compression is critical for efficient networked distribution and storage.

[0010] While there has been considerable work on point cloud compression in the 3D graphics and robotics domains, existing methods generally do not address some of the requirements for building efficient, interoperable and complete end-to-end 3D multimedia systems.

[0011] For example, the coding of color and other attribute values, which are important for high quality rendering, is often ignored or dealt with inefficiently, for example by performing a simple one-way predictive coding of colors. Also, compression of time-varying point clouds, i.e. sequences of clouds captured at a fast rate over time, is limited. Furthermore, methods with low complexity and lossy encoding are of interest for systems where hardware/bandwidth limitations apply or where a low delay is critical. Further research in point cloud compression is needed to address these challenges.

[0012] Different coding methods of the point clouds have been proposed in the prior art.

[0013] The first coding approach is based on the octree based point-cloud representation. It is described, for instance, in J. Peng and C.-C. Jay Kuo, "Geometry-guided progressive lossless 3D mesh coding with octree (OT) decomposition," ACM Trans. Graph, vol. 21, no. 2, pp. 609-616, July 2005 and in Yan Huang, Jingliang Peng, C.-C. Jay Kuo and M. Gopi, "A Generic Scheme for Progressive Point Cloud Coding," IEEE Transactions on Visualization and Computer Graphics, vol. 14, no. 2, pp. 440-453, 2008.

[0014] An octree is a tree data structure where every branch node represents a certain cube or cuboid bounding volume in space. Starting at the root, every branch has up to eight children, one for each sub-octant of the node's bounding box.

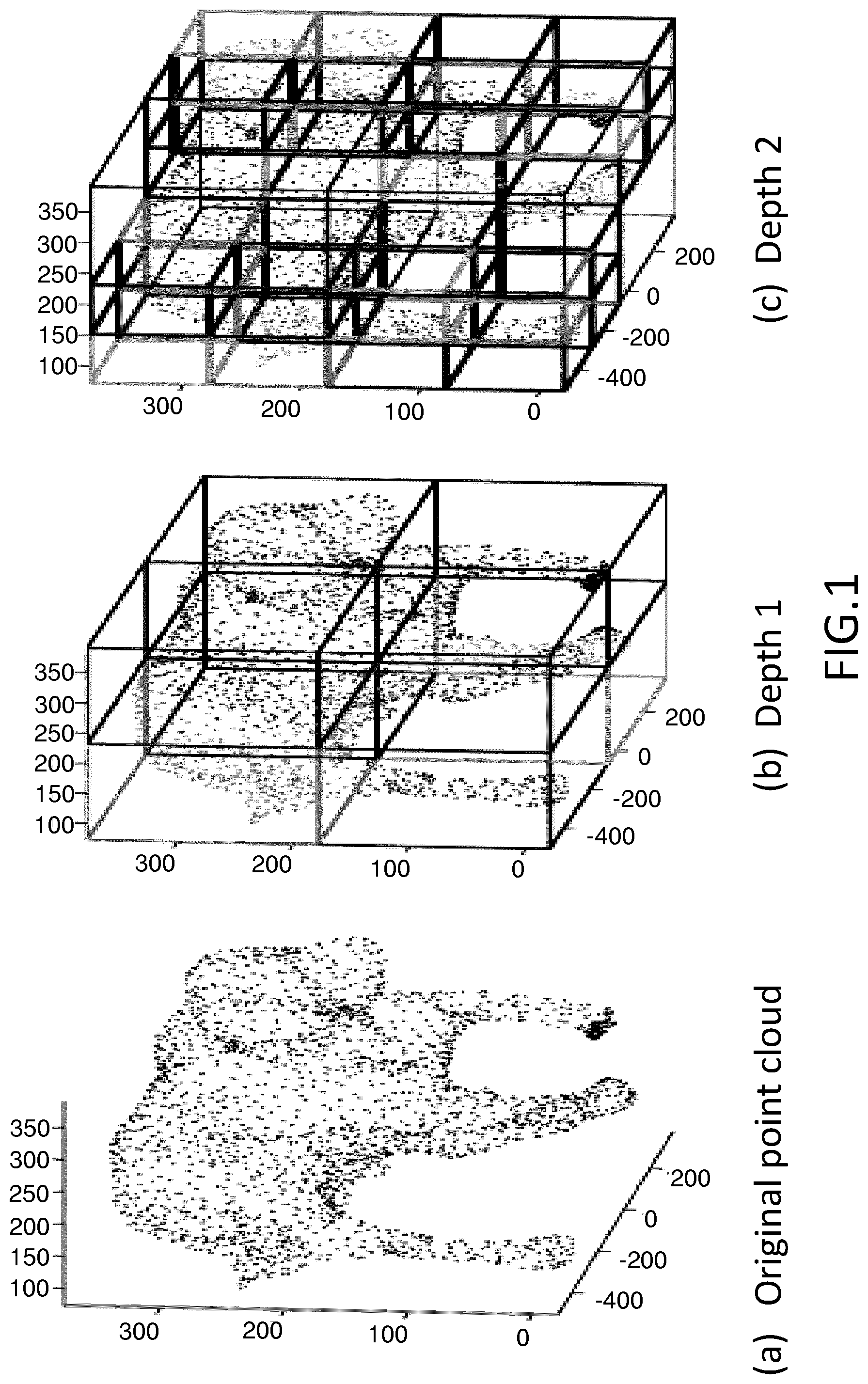

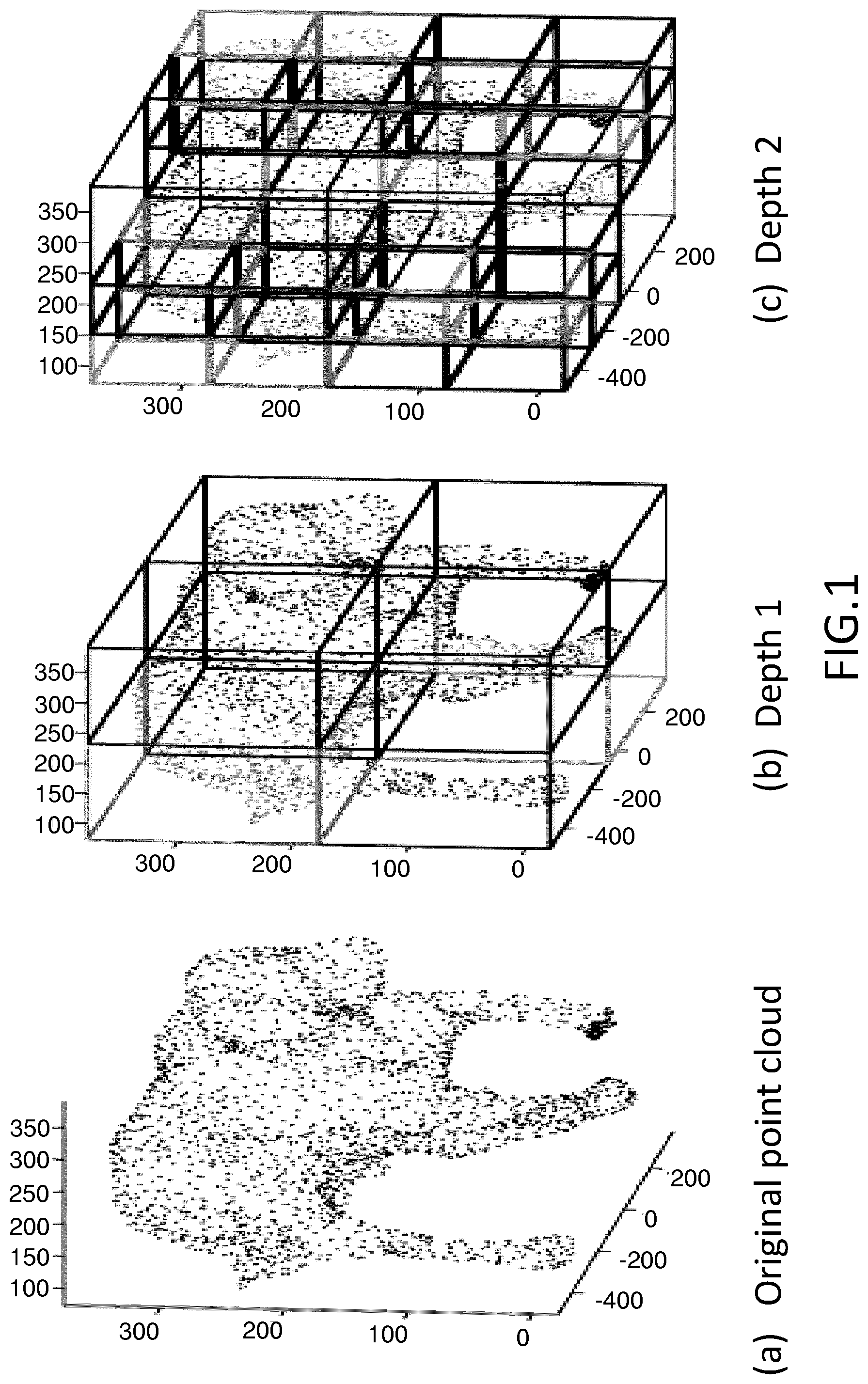

[0015] An example is shown in FIG. 1 and the left half of FIG. 2.

[0016] For compression, a single bit is used to mark whether each child of a branch node is empty, so the configuration of a branch node can be efficiently represented in a single byte, assuming some consistent ordering of the eight octants. By traversing the tree in breadth-first order and outputting every child node configuration byte encountered, the point distribution in space can be efficiently encoded. Upon reading the encoded byte stream, the number of bits set in the first byte tells the decoder the number of consecutive bytes that are direct children. The bit positions specify the voxel/child in the octree they occupy. The right half of FIG. 2 illustrates this byte stream representation for the octree represented in the left half.
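The byte-stream idea described above can be sketched as follows. This is a minimal illustration, not the encoder of the cited works: the function name `octree_occupancy_bytes`, the octant ordering (bit 0 for x, bit 1 for y, bit 2 for z) and the fixed `max_depth` stopping rule are all assumptions made for the example.

```python
from collections import deque

def octree_occupancy_bytes(points, origin, size, max_depth):
    """Emit one occupancy byte per branch node, in breadth-first order.

    points: iterable of (x, y, z) tuples inside the cube starting at
    `origin` with edge length `size`. Bit k of a byte is set when child
    octant k contains at least one point. The octant ordering used here
    is an assumption, not something fixed by the text.
    """
    stream = []
    queue = deque([(list(points), origin, size, 0)])
    while queue:
        pts, (ox, oy, oz), s, depth = queue.popleft()
        if depth == max_depth:
            continue  # leaf voxel: no further subdivision, no byte emitted
        half = s / 2.0
        children = [[] for _ in range(8)]
        for (x, y, z) in pts:
            k = (int(x >= ox + half)
                 | (int(y >= oy + half) << 1)
                 | (int(z >= oz + half) << 2))
            children[k].append((x, y, z))
        byte = 0
        for k, child in enumerate(children):
            if child:
                byte |= 1 << k
                child_origin = (ox + half * (k & 1),
                                oy + half * ((k >> 1) & 1),
                                oz + half * ((k >> 2) & 1))
                queue.append((child, child_origin, half, depth + 1))
        stream.append(byte)
    return bytes(stream)
```

With two points in opposite corners of a unit cube and `max_depth=2`, the root byte has two bits set, and it is followed by exactly two child bytes, which matches the decoding rule stated in the paragraph.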

[0017] Due to its hierarchical nature, the octree representation is very efficient in exploiting the sparsity of point clouds. Thus, octree decomposition based strategy is very efficient for compressing sparse point clouds. However, octree-based representation is inefficient for representing and compressing watertight dense point clouds. Moreover, most of the existing octree-based point cloud representations independently represent geometry and other attributes of points.

[0018] The second coding approach is based on the segmentation based point cloud representation. It is described, for instance, in T. Ochotta and D. Saupe, "Compression of point-based 3d models by shape-adaptive wavelet coding of multi-heightfields," in Proc. Eurographics Symp. on Point-Based Graphics, 2004, and in J. Digne, R. Chaine, S. Valette, et al, "Self-similarity for accurate compression of point sampled surfaces," Computer Graphics Forum, vol. 33, p. 155-164, 2014.

[0019] The segmentation based point cloud representation comprises three steps: plane-like decomposition, plane projection and coding. In the first step, the point cloud data is segmented into plane-like patches. Then, the resultant patches are projected onto one or several planes. In the last step, the projected image(s) are compressed. Efficient image/video compression techniques can be used for compressing the projected image(s).

[0020] Plane-like segmentation based PCC has proven to be very efficient at coding dense point clouds which represent approximately piecewise linear surfaces. However, segmentation-based PCC has several disadvantages. This method requires resampling onto a regular grid, which introduces approximation errors. Also, it is necessary to generate a large number of patches for complex shapes, which implies a high computational complexity for complicated textures. Moreover, this method processes geometry and other properties independently.

[0021] There are works trying to combine the advantages of the above two representations of point clouds such as J. K. N. Blodow, R. Rusu, S. Gedikli and E. S. M Beetz, "Real-time compression of point cloud streams," in Robotics and Automation (ICRA), 2012 IEEE International Conference on, pp. 778, 785, 14-18 May 2012.

[0022] These works first decompose the input point clouds in the manner of octree decomposition. After each cuboid division operation, it is checked whether the points falling into the same newly generated leaf cuboid can be approximated by a plane. If the answer is yes, the division of the corresponding cuboid is stopped and the related points are encoded by projecting on the corresponding plane. Then, the positions of the plane-like patches can be efficiently represented and compressed by the octree method.

[0023] However, all these known methods do not provide an efficient representation and encoding of the attribute data of point clouds.

[0024] In the article of Collet et al. "High-quality streamable free-viewpoint video", ACM Transactions on Graphics, vol. 34, no. 4, 2015, point clouds are converted into 3D meshes and then a state-of-the-art 3D mesh compression technique is used to encode the point clouds. However, this method, which requires the conversion of the point cloud into a mesh, is usually computationally expensive and extra bits are required to encode the connectivity of 3D meshes.

[0025] Recently graph-based methods have been proposed to encode point clouds. The point cloud attributes are compressed using graph transforms in Zhang et al.: "Point cloud attribute compression with graph transform", IEEE International Conference on Image Processing, Paris, 2014. The attributes are treated as signals over the graph and the graph transform is employed to approximately de-correlate the signal. However, the point cloud representation is still based on the octree representation by constructing graphs upon the octree structure. Also, only the compression of attributes except the geometry is considered in this article.

[0026] The problem of compression of 3D point cloud sequences is addressed in Thanou et al.: "Graph-based compression of dynamic 3D point cloud sequences", IEEE Transactions on Image Processing, vol. 25, no 4, pages 1765-1778, 2016, with focus on intra coding with graph-based methods. Nevertheless, this approach still adopts the octree representation. In Queiroz et al. "Compression of 3D point clouds using a region-adaptive hierarchical transform", IEEE Transactions on Image Processing, Volume: 25, Issue: 8, August 2016, a method is designed to compress the colors in point clouds based on a hierarchical transform. Meanwhile, the geometry of the point cloud is again encoded using the octree representation.

[0027] The aforementioned works are all based on the octree representation and consider the geometry and other attributes separately. This is disadvantageous because the octree representation limits how far the piecewise linearity of the point cloud can be exploited for coding efficiency. Also, the redundancy between the geometry and the attributes is not taken into account, which precludes further improvement of the coding performance.

SUMMARY OF THE INVENTION

[0028] The present disclosure proposes a solution for improving the situation.

[0029] Thus, the disclosure concerns a method for encoding a point cloud according to claim 1 and a corresponding encoder according to claim 2. It also concerns a method for reconstructing a point cloud according to claim 8 and a corresponding decoder according to claim 9. It further concerns computer programs implementing the encoding and reconstructing methods of the invention. It also concerns a signal according to claim 15.

[0030] Accordingly, the present disclosure provides a method for encoding a point cloud comprising a plurality of points, in which each point is defined by attributes, the attributes including the spatial position of the point in a 3D space and at least one feature of the point, wherein the method comprises:

[0031] segmenting the point cloud into clusters of points on the basis of the attributes of the points; and for each cluster:

[0032] constructing a similarity graph representing the similarity among neighboring points of the cluster in terms of the attributes;

[0033] computing a Graph Fourier Transform, GFT, based on the constructed similarity graph, the GFT being characterized by its coefficients; and

[0034] quantizing and encoding the GFT coefficients.

[0035] Thus, the coding method of the invention jointly compresses the attributes, which include the geometry i.e. the spatial position of the points in the 3D space and at least one other attribute i.e. a feature such as the color or the texture, and exploits the redundancy among them based on the GFT.

[0036] By segmenting the point cloud into clusters, the graph transform operation is performed within each cluster and thus it is more efficient in the sense of processing time.

[0037] Advantageously, the method comprises, for each cluster, transmitting to a decoder the encoded GFT coefficients and identifiers of the cluster and of the computed GFT.

[0038] These identifiers may consist of cluster indices and GFT indices in order to indicate to the decoder which GFT is used for which cluster so that the decoder can perform the correct inverse transform for the final reconstruction of the point cloud.

[0039] According to a preferred embodiment, the encoding of the GFT coefficients is an entropy encoding.

[0040] Advantageously, the segmentation of the point cloud into clusters uses a normalized cuts technique.

[0041] This technique is described, for instance, in the article of Malik et al.: "Normalized cuts and image segmentation" in IEEE Trans. Pattern Anal. Mach. Intell., Vol. 22, No. 8, pages 888-905, 2000.

[0042] Preferably, vertices of the constructed similarity graph consist of the points of the corresponding cluster and the method comprises assigning a weight $w_{i,j}$ between any vertices $P_i$ and $P_j$ of the graph.

[0043] Advantageously, the weight $w_{i,j}$ between vertices $P_i$ and $P_j$ is set to 0 if said weight is less than a threshold.

[0044] In this way, the small weights are not encoded so as to reduce the overall coding bits while not degrading the coding quality as a small weight means that the corresponding connected vertices are dissimilar to some extent.

[0045] Advantageously, constructing the similarity graph comprises adding an edge $e_{i,j}$ between vertices $P_i$ and $P_j$ if the weight $w_{i,j}$ is equal to or greater than the threshold.

[0046] The present disclosure also provides an encoder for encoding a point cloud comprising a plurality of points, in which each point is defined by attributes, the attributes including the spatial position of the point in a 3D space and at least one feature of the point, wherein the encoder comprises:

[0047] a segmentation module configured to segment the point cloud into clusters of points on the basis of the attributes of the points;

[0048] a construction module configured to construct, for each cluster, a similarity graph representing the similarity among neighboring points of the cluster in terms of the attributes;

[0049] a computation module configured to compute, for each cluster, a Graph Fourier Transform, GFT, based on the constructed similarity graph, the GFT being characterized by its coefficients; and

[0050] a coding module configured to encode the GFT coefficients.

[0051] Advantageously, the encoder comprises a transmitter configured to transmit to a decoder the encoded GFT coefficients and identifiers of the clusters and of the computed GFTs.

[0052] The present disclosure also provides a method for reconstructing a point cloud comprising a plurality of points, in which each point is defined by attributes, the attributes including the spatial position of the point in a 3D space and at least one feature of the point, wherein the method comprises:

[0053] receiving data including GFT coefficients associated with cluster indices and GFT indices;

[0054] decoding the received data; and

[0055] reconstructing clusters by performing for each cluster identified by a received cluster index an inverse GFT.

[0056] Advantageously, the decoding includes an entropy decoding.

[0057] The present disclosure also provides a decoder for reconstructing a point cloud comprising a plurality of points, in which each point is defined by attributes, the attributes including the spatial position of the point in a 3D space and at least one feature of the point, wherein the decoder comprises:

[0058] a receiver configured to receive encoded data including GFT coefficients associated with cluster indices and GFT indices;

[0059] a decoding module configured to decode the received data; and

[0060] a reconstruction module configured to reconstruct clusters by performing for each cluster identified by a received cluster index an inverse GFT.

[0061] The methods according to the disclosure may be implemented in software on a programmable apparatus. They may be implemented solely in hardware or in software, or in a combination thereof.

[0062] Since these methods can be implemented in software, they can be embodied as computer readable code for provision to a programmable apparatus on any suitable carrier medium. A carrier medium may comprise a storage medium such as a floppy disk, a CD-ROM, a hard disk drive, a magnetic tape device or a solid state memory device and the like.

[0063] The disclosure thus provides a computer-readable program comprising computer-executable instructions to enable a computer to perform the encoding method of the invention.

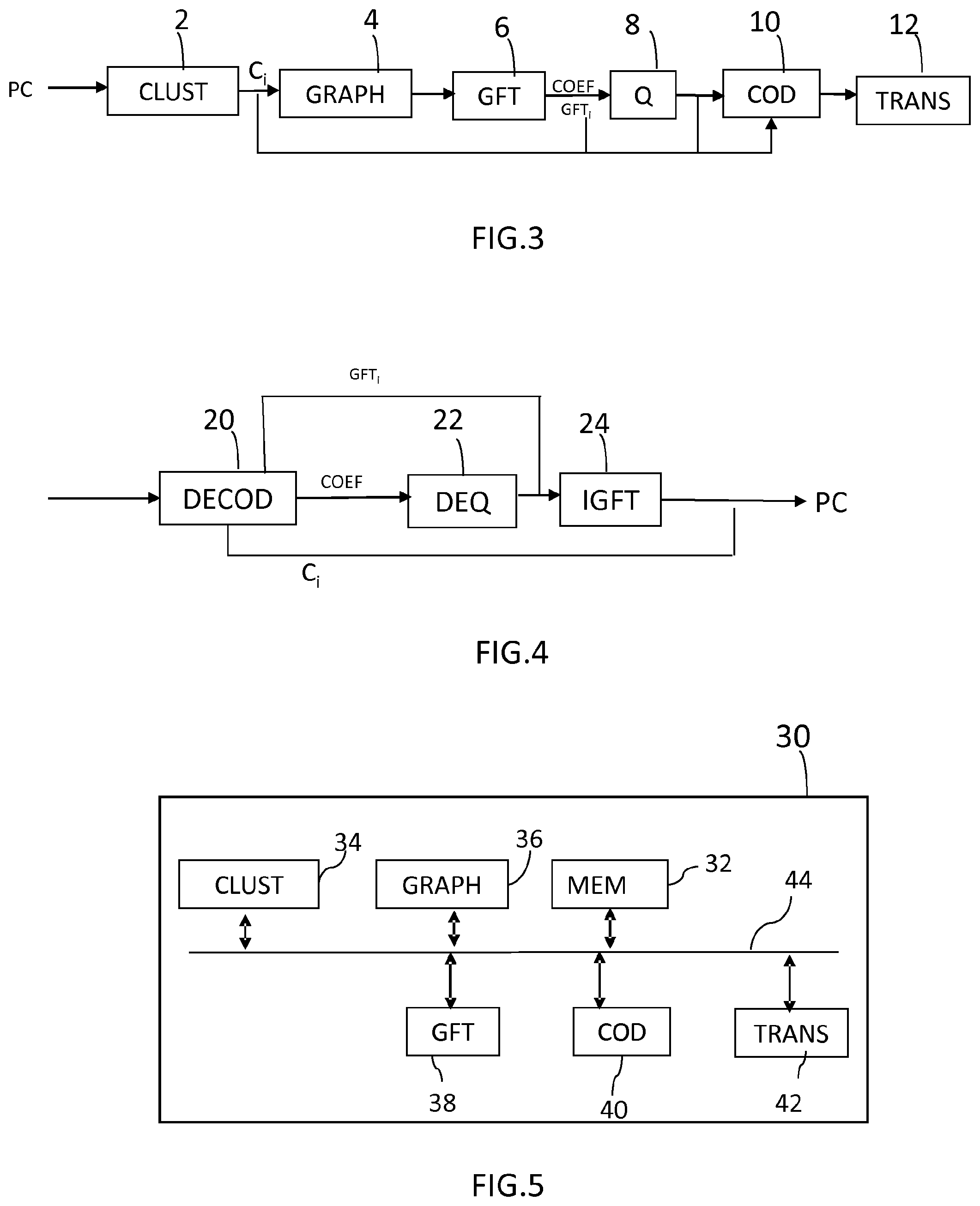

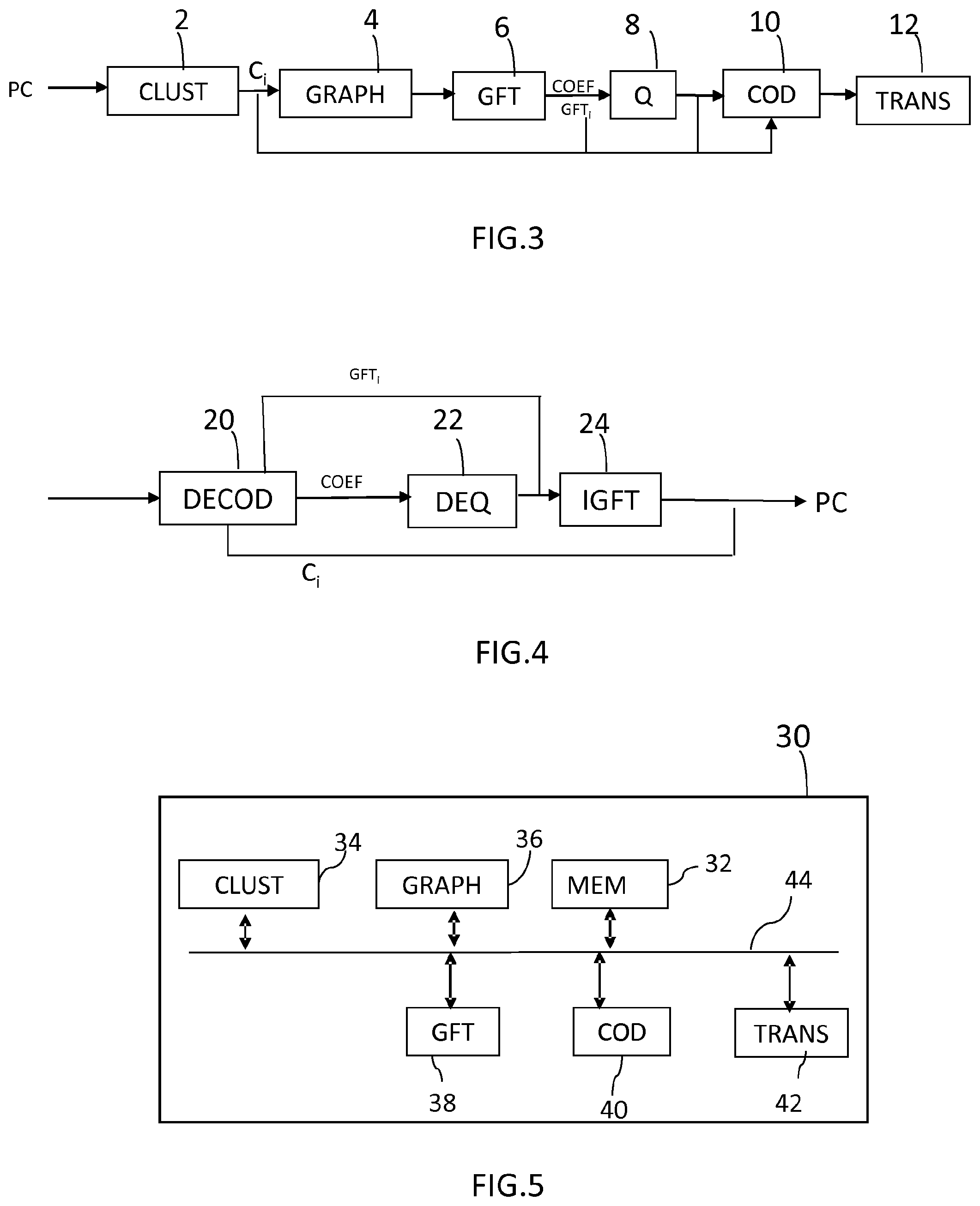

[0064] The diagram of FIG. 3 illustrates an example of the general algorithm for such a computer program.

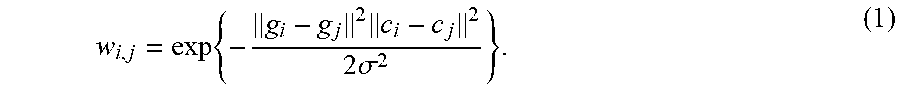

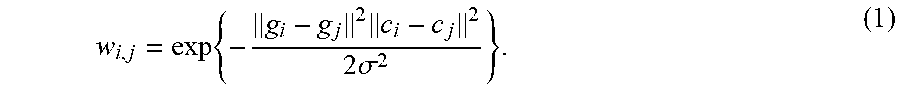

[0065] The disclosure also provides a computer-readable program comprising computer-executable instructions to enable a computer to perform the reconstructing method of the invention.

[0066] The diagram of FIG. 4 illustrates an example of the general algorithm for such a computer program.

BRIEF DESCRIPTION OF THE DRAWINGS

[0067] The present invention is illustrated by way of examples, and not by way of limitation, in the figures of the accompanying drawings, in which like reference numerals refer to similar elements and in which:

[0068] FIG. 1, already described, is a schematic view illustrating an octree-based point cloud representation according to the prior art;

[0069] FIG. 2, already described, is a schematic view illustrating an overview of an octree data structure;

[0070] FIG. 3 is a flowchart showing the steps of encoding a point cloud, according to an embodiment of the present invention;

[0071] FIG. 4 is a flowchart showing the steps of reconstructing a point cloud, according to an embodiment of the present invention;

[0072] FIG. 5 is a schematic view illustrating an encoder, according to an embodiment of the invention; and

[0073] FIG. 6 is a schematic view illustrating a decoder, according to an embodiment of the invention.

DETAILED DESCRIPTION OF PREFERRED EMBODIMENTS

[0074] The method of encoding a point cloud according to an embodiment of the present invention is illustrated in the flowchart of FIG. 3.

[0075] The points of the point cloud PC are characterized by their attributes, which include the geometry, i.e. the spatial position of each point, and at least one other attribute of the point, such as the color. We will assume in the following description that each point of the point cloud has two attributes: the geometry g in the 3D space and the color c in the RGB space.

[0076] The point cloud data is classified into several clusters at step 2. As a result of this clustering, the point cloud data in each cluster can be approximated by a linear function as it has generally been observed that most point cloud data has a piecewise linear behavior.

[0077] This clustering may be performed by any prior art clustering method.

[0078] Advantageously, the clustering is performed using the normalized cuts technique modified, according to the present embodiment, in order to consider similarities between all the attributes of the points of the point cloud.

[0079] Thus, when performing clustering, multi-lateral relations are considered. That is, similarities both in the geometry and in the other attributes, such as the color, are taken into account to segment the point cloud in order to achieve a clustering result that exploits the piecewise linear property in an optimal way.
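A two-way normalized cut that takes both geometry and color into account might look like the sketch below. The text does not specify how the normalized cuts technique is modified, so the similarity used here (a Gaussian of the squared distance between concatenated geometry and color vectors), the function name `normalized_cut_bipartition`, the parameter `sigma` and the split at zero are all assumptions for illustration.

```python
import numpy as np

def normalized_cut_bipartition(geometry, color, sigma=1.0):
    """Split points into two clusters with a spectral normalized cut.

    Assumed similarity: Gaussian of the squared distance between the
    concatenated (geometry, color) vectors of two points.
    """
    features = np.hstack([np.asarray(geometry, dtype=float),
                          np.asarray(color, dtype=float)])
    diff = features[:, None, :] - features[None, :, :]
    sq_dist = (diff ** 2).sum(axis=-1)
    W = np.exp(-sq_dist / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)  # no self-similarity

    d = W.sum(axis=1)
    d_inv_sqrt = 1.0 / np.sqrt(d)
    # Symmetric normalized Laplacian: I - D^{-1/2} W D^{-1/2}
    L_sym = np.eye(len(d)) - d_inv_sqrt[:, None] * W * d_inv_sqrt[None, :]
    _, eigvecs = np.linalg.eigh(L_sym)  # eigenvalues in ascending order

    # Second-smallest generalized eigenvector of (D - W) y = lambda D y
    y = d_inv_sqrt * eigvecs[:, 1]
    return (y > 0).astype(int)

# Toy data: two small groups that differ in both position and color.
geometry = [[0, 0, 0], [0.1, 0, 0], [0, 0.1, 0],
            [3, 0, 0], [3.1, 0, 0], [3, 0.1, 0]]
color = [[1, 0, 0]] * 3 + [[0, 0, 1]] * 3
labels = normalized_cut_bipartition(geometry, color, sigma=1.0)
```

Applying the split recursively to each part would yield more than two clusters; which label (0 or 1) a group receives depends on the sign of the eigenvector and is arbitrary.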

[0080] At the end of step 2, the point cloud PC is clustered into a plurality of clusters identified by corresponding indices $C_i$, wherein $1 \le i \le N$, where N is the obtained number of clusters.

[0081] The cluster indices are assigned randomly, while ensuring that a unique index is assigned for each cluster.

[0082] Then, at step 4, a similarity graph is constructed for each cluster. This similarity graph represents the similarity among neighboring vertices, i.e. points, of the cluster and makes it possible to obtain a compact representation of the cluster.

[0083] A graph $G = \{V, E, W\}$ consists of a finite set of vertices $V$ with cardinality $|V| = N$, a set of edges $E$ connecting vertices, and a weighted adjacency matrix $W$. $W$ is a real $N \times N$ matrix, where $w_{i,j}$ is the weight assigned to the edge $(i, j)$ connecting vertices $P_i$ and $P_j$. Only undirected graphs are considered here, which correspond to symmetric weighted adjacency matrices, i.e., $w_{i,j} = w_{j,i}$. Weights are assumed non-negative, i.e., $w_{i,j} \ge 0$.

[0084] In the similarity graph constructed at step 4, the weight $w_{i,j}$ between vertex $P_i$ and vertex $P_j$ is assigned as

$$w_{i,j} = \exp\left\{-\frac{\|g_i - g_j\|^2 \, \|c_i - c_j\|^2}{2\sigma^2}\right\}, \qquad (1)$$

where $g_i$ and $g_j$ are the coordinate vectors of $P_i$ and $P_j$ respectively, i.e., $g_i = (x_i, y_i, z_i)^T$ and $g_j = (x_j, y_j, z_j)^T$; $c_i$ and $c_j$ are the color vectors respectively, i.e., $c_i = (r_i, g_i, b_i)^T$ and $c_j = (r_j, g_j, b_j)^T$. In this formula, $\|g_i - g_j\|^2$ describes the similarity in the geometry and $\|c_i - c_j\|^2$ describes the similarity in the color.

[0085] $\sigma$ is a parameter used to control the weight value and is often set empirically.
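The weight assignment of equation (1) can be sketched with NumPy as below. The sketch assumes that equation (1) is the exponential of minus the product of the squared geometry distance and the squared color distance, divided by 2σ²; the function name `edge_weights` and the choice to zero the diagonal (no self-loops) are assumptions of this example.

```python
import numpy as np

def edge_weights(geometry, color, sigma):
    """Pairwise weights w[i, j] for one cluster, following equation (1).

    geometry: (N, 3) array of (x, y, z); color: (N, 3) array of (r, g, b).
    Assumes the exponent is the product of the two squared distances
    divided by 2 * sigma**2.
    """
    g = np.asarray(geometry, dtype=float)
    c = np.asarray(color, dtype=float)
    g_sq = ((g[:, None, :] - g[None, :, :]) ** 2).sum(axis=-1)
    c_sq = ((c[:, None, :] - c[None, :, :]) ** 2).sum(axis=-1)
    W = np.exp(-(g_sq * c_sq) / (2.0 * sigma ** 2))
    np.fill_diagonal(W, 0.0)  # assumption: a point has no edge to itself
    return W
```

Under this reading, two points with identical color receive weight 1 whatever their distance, so in practice the weights would only be evaluated among neighboring points of a cluster, as the text indicates.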

[0086] In this way, both the geometry and color information are taken into consideration in the constructed graph, which is different from the usual definition of edge weights, which only considers the similarity in one attribute.

[0087] According to a preferred embodiment and in order to reduce the number of connected vertices for sparse representation, a threshold t is used to set small weights to 0 as follows:

$$w_{i,j} = 0 \quad \text{if } w_{i,j} < t. \qquad (2)$$

[0088] In this way, small weights are not encoded, which saves coding bits. Also, because a small weight means that the connected vertices are dissimilar to some extent, setting these weights to 0 hardly degrades the coding quality.

[0089] Thus, the graph is constructed as follows.

[0090] For each point $P_i$ of the cluster, the possible edge weight between it and any other point $P_j$ in the cluster is calculated according to equation (1). If $w_{i,j} \ge t$, an edge $e_{i,j}$ between $P_i$ and $P_j$ is added and the calculated weight $w_{i,j}$ is attached to the edge; otherwise, $P_i$ and $P_j$ are disconnected, as a small weight means that there is a large discrepancy between them.
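The thresholding rule of equation (2) and the edge construction just described can be sketched as follows. The function name `build_similarity_graph` and the edge-list output format are illustrative choices, not part of the disclosure.

```python
import numpy as np

def build_similarity_graph(W, t):
    """Keep edges whose weight is at least t; disconnect the rest.

    W: symmetric (N, N) array of candidate weights.
    Returns the thresholded adjacency matrix and a list of
    (i, j, weight) tuples with i < j, one per undirected edge.
    """
    W = np.asarray(W, dtype=float)
    W_sparse = np.where(W >= t, W, 0.0)   # equation (2): small weights become 0
    np.fill_diagonal(W_sparse, 0.0)       # no self-loops
    i_idx, j_idx = np.nonzero(np.triu(W_sparse, k=1))
    edges = [(int(i), int(j), float(W_sparse[i, j]))
             for i, j in zip(i_idx, j_idx)]
    return W_sparse, edges

# Illustrative candidate weights for a 3-point cluster.
W_example = np.array([[0.00, 0.90, 0.05],
                      [0.90, 0.00, 0.40],
                      [0.05, 0.40, 0.00]])
W_sparse, edges = build_similarity_graph(W_example, t=0.1)
```

Here the weight 0.05 between points 0 and 2 falls below the threshold, so those two points are not directly connected.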

[0091] Then, at step 6, the GFT is calculated for each cluster based on the constructed similarity graph.

[0092] At this step, the weighted adjacency matrix is obtained from the weights of the graph.

[0093] Then, the graph Laplacian matrix is computed.

[0094] There exist different variants of Laplacian matrices.

[0095] In one embodiment, the unnormalized combinatorial graph Laplacian is deployed, which is defined as $L := D - W$, where $D$ is the degree matrix defined as a diagonal matrix whose $i$-th diagonal element is the sum of all elements in the $i$-th row of $W$, i.e., $d_{i,i} = \sum_{j=1}^{N} w_{i,j}$. Since the Laplacian matrix is a real symmetric matrix, it admits a set of real eigenvalues $\{\lambda_l\}_{l=0,1,\ldots,N-1}$ with a complete set of orthonormal eigenvectors $\{\psi_l\}_{l=0,1,\ldots,N-1}$, i.e., $L\psi_l = \lambda_l\psi_l$, for $l = 0, 1, \ldots, N-1$.
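A minimal NumPy sketch of this construction is given below. The function name `graph_laplacian_basis` is hypothetical; `numpy.linalg.eigh` is used because it is intended for symmetric matrices and returns eigenvalues in ascending order with orthonormal eigenvectors as columns.

```python
import numpy as np

def graph_laplacian_basis(W):
    """Return L = D - W with its eigenvalues and eigenvectors.

    W: symmetric (N, N) weighted adjacency matrix.
    eigvecs[:, l] is the eigenvector psi_l for eigenvalue eigvals[l].
    """
    W = np.asarray(W, dtype=float)
    D = np.diag(W.sum(axis=1))   # degree matrix: row sums on the diagonal
    L = D - W
    eigvals, eigvecs = np.linalg.eigh(L)
    return L, eigvals, eigvecs

# Illustrative 3-vertex path graph with unit weights.
W_path = np.array([[0.0, 1.0, 0.0],
                   [1.0, 0.0, 1.0],
                   [0.0, 1.0, 0.0]])
L, eigvals, eigvecs = graph_laplacian_basis(W_path)
```

For this connected toy graph the smallest eigenvalue is 0 (up to rounding) and its eigenvector is constant across vertices.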

[0096] This Laplacian matrix is employed in one embodiment for two reasons.

[0097] First, as the elements in each row of $L$ sum to zero by construction, 0 is guaranteed to be an eigenvalue with $[1 \ldots 1]^T$ as the corresponding eigenvector. This allows a frequency interpretation of the GFT, where the eigenvalues $\lambda_l$ are the graph frequencies and always include a DC component, which is beneficial for the compression of point cloud data consisting of many smooth regions. By connecting similar points and disconnecting dissimilar ones, high-frequency coefficients are reduced, which leads to a compact representation of the point cloud in the GFT domain.

[0098] Second, GFT defaults to the well-known DCT when defined for a line graph (corresponding to the 1D DCT) or a 4-connectivity graph (2D DCT) with all edge weights equal to 1. That means that the GFT is at least as good as the DCT in sparse signal representation if the weights are chosen in this way. Due to the above two desirable properties, the unnormalized Laplacian matrix is used for the definition of the GFT as described in the following paragraph.
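The line-graph case can be checked numerically. The sketch below builds the unnormalized Laplacian of an unweighted path graph and compares its eigenvectors with the DCT-II basis vectors $\cos(\pi k (n + 1/2) / N)$; the size `N = 8` is an arbitrary choice, and the comparison ignores sign because eigenvectors are only defined up to sign.

```python
import numpy as np

N = 8  # arbitrary length for the illustration

# Path (line) graph with all edge weights equal to 1.
W = np.zeros((N, N))
for n in range(N - 1):
    W[n, n + 1] = W[n + 1, n] = 1.0
L = np.diag(W.sum(axis=1)) - W
eigvals, eigvecs = np.linalg.eigh(L)  # ascending eigenvalues

# DCT-II basis vectors as columns, normalized to unit length.
n = np.arange(N)
dct_basis = np.array([np.cos(np.pi * k * (n + 0.5) / N) for k in range(N)]).T
dct_basis /= np.linalg.norm(dct_basis, axis=0)

# |<psi_k, dct_k>| is 1 when the two vectors agree up to sign.
alignment = np.abs(np.sum(eigvecs * dct_basis, axis=0))
expected_eigvals = 2.0 - 2.0 * np.cos(np.pi * np.arange(N) / N)
```

Each entry of `alignment` comes out as 1 up to rounding, and the eigenvalues match $2 - 2\cos(\pi k / N)$, which is consistent with the statement that the GFT of a unit-weight line graph coincides with the 1D DCT.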

[0099] The eigenvectors $\{\psi_l\}_{l=0,1,\ldots,N-1}$ of the Laplacian matrix are used to define the GFT. Formally, for any signal $x \in \mathbb{R}^N$ residing on the vertices of $G$, its GFT $\hat{x} \in \mathbb{R}^N$ is defined as

$$\hat{x}(l) = \langle \psi_l, x \rangle = \sum_{n=1}^{N} \psi_l^*(n)\, x(n), \quad l = 0, 1, \ldots, N-1, \qquad (3)$$

where $\hat{x}(l)$ is the $l$-th GFT coefficient, and where $x(n)$ refers here to the attributes of the point $n$ of the point cloud.

[0100] For each cluster, the obtained GFT is identified by a GFT index.

[0101] Thus, step 6 results in the GFT coefficients and the GFT indices.

[0102] Then, at step 8, the GFT coefficients are quantized.

[0103] At step 10, the quantized GFT coefficients are entropy coded, for example by using the CABAC method described in Marpe: "Context-Based Adaptive Binary Arithmetic Coding in the H.264/AVC Video Compression Standard", IEEE Trans. Cir. and Sys. For Video Technol., vol. 13, no. 7, pages 620-636, 2003.

[0104] Also, the overhead, constituted by the cluster indices and the GFT indices, is entropy coded at step 10 to indicate which GFT is used for which cluster. The encoded GFT coefficients, cluster indices and GFT indices are then transmitted, at step 12, to a decoder.

[0105] FIG. 4 shows the steps of reconstructing a point cloud, i.e. the decoding steps implemented by the decoder after receiving the encoded GFT coefficients, cluster indices and GFT indices, according to an embodiment of the present disclosure.

[0106] At step 20, the received GFT coefficients, cluster indices and GFT indices are entropy decoded.

[0107] Then, at step 22, the decoded GFT coefficients are dequantized.
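The disclosure does not specify the quantizer used at step 8 or the dequantizer used at step 22. As a minimal sketch, assuming a uniform scalar quantizer with a hypothetical step size `step`, the two operations could look as follows. The coefficient values are made up for illustration.

```python
def quantize(coeffs, step):
    """Map each coefficient to the integer index of the nearest multiple of step."""
    return [int(round(c / step)) for c in coeffs]

def dequantize(indices, step):
    """Reconstruct approximate coefficients from the quantization indices."""
    return [q * step for q in indices]

# Hypothetical GFT coefficients of one cluster: a large DC term followed by
# small high-frequency terms, as expected for a smooth region.
gft_coeffs = [20.23, -0.61, 0.07, 0.02]
step = 0.1  # assumed step size, not taken from the disclosure

indices = quantize(gft_coeffs, step)      # integers passed to the entropy coder
recovered = dequantize(indices, step)     # values used by the inverse GFT

# The reconstruction error of a uniform quantizer is at most half a step.
max_error = max(abs(c - r) for c, r in zip(gft_coeffs, recovered))
```

A larger step size gives fewer distinct indices and a lower bit rate, at the cost of a larger reconstruction error.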

[0108] At step 24, an inverse GFT is performed using the dequantized GFT coefficients and the decoded GFT indices, which indicate the applied GFT.

[0109] The inverse GFT is given by:

$$x(n) = \sum_{l=0}^{N-1} \hat{x}(l)\, \psi_l(n), \quad n = 1, 2, \ldots, N, \tag{4}$$

where x is the recovered signal representing the point cloud data of each cluster.
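Equations (3) and (4) can be exercised end to end on a small graph. The following sketch uses only the Python standard library; the cyclic Jacobi eigen-solver, the 4-vertex graph and the signal values are illustrative assumptions, and a production codec would normally rely on a numerical library for the eigen-decomposition.

```python
import math

def jacobi_eigh(A, sweeps=100, tol=1e-20):
    """Eigen-decomposition of a symmetric matrix by cyclic Jacobi rotations.

    Returns (eigenvalues, V); column k of V is the eigenvector of
    eigenvalue k, with eigenvalues sorted in increasing order.
    """
    n = len(A)
    a = [row[:] for row in A]
    v = [[float(i == j) for j in range(n)] for i in range(n)]
    for _ in range(sweeps):
        off = sum(a[i][j] ** 2 for i in range(n) for j in range(n) if i != j)
        if off < tol:
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                diff = a[q][q] - a[p][p]
                # Rotation angle that zeroes a[p][q], kept within [-pi/4, pi/4].
                theta = math.pi / 4 if diff == 0.0 else 0.5 * math.atan(2.0 * a[p][q] / diff)
                c, s = math.cos(theta), math.sin(theta)
                for k in range(n):  # rotate columns p and q of a and v
                    a[k][p], a[k][q] = c * a[k][p] - s * a[k][q], s * a[k][p] + c * a[k][q]
                    v[k][p], v[k][q] = c * v[k][p] - s * v[k][q], s * v[k][p] + c * v[k][q]
                for k in range(n):  # rotate rows p and q of a
                    a[p][k], a[q][k] = c * a[p][k] - s * a[q][k], s * a[p][k] + c * a[q][k]
    order = sorted(range(n), key=lambda k: a[k][k])
    return [a[k][k] for k in order], [[v[i][k] for k in order] for i in range(n)]

def gft(x, V):
    """Forward GFT, equation (3): x_hat[l] = <psi_l, x> (real eigenvectors)."""
    n = len(x)
    return [sum(V[i][l] * x[i] for i in range(n)) for l in range(n)]

def igft(x_hat, V):
    """Inverse GFT, equation (4): x[i] = sum over l of x_hat[l] * psi_l[i]."""
    n = len(x_hat)
    return [sum(x_hat[l] * V[i][l] for l in range(n)) for i in range(n)]

# Hypothetical cluster: 4 points connected in a cycle with unit weights.
W = [[0.0, 1.0, 1.0, 0.0],
     [1.0, 0.0, 0.0, 1.0],
     [1.0, 0.0, 0.0, 1.0],
     [0.0, 1.0, 1.0, 0.0]]
n = len(W)
L = [[(sum(W[i]) if i == j else 0.0) - W[i][j] for j in range(n)] for i in range(n)]

eigvals, V = jacobi_eigh(L)
x = [10.0, 10.5, 9.8, 10.2]   # one attribute value per point (made up)
x_hat = gft(x, V)             # most energy lands in the DC coefficient x_hat[0]
x_rec = igft(x_hat, V)        # matches x up to rounding error
```

Without quantization the round trip is lossless up to floating-point error, because the eigenvector matrix is orthonormal.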

[0110] According to an embodiment, the graph Fourier transform is calculated as follows, where each point in the point cloud is treated as a vertex in a graph.

[0111] Firstly, each point is connected to its neighbors as long as they are similar. Two points are disconnected if there is a large discrepancy between them.

[0112] Secondly, given the connectivity graph, the adjacency matrix $W$ is defined, where $w_{i,j} = w_{j,i} = 1$ if vertices $i$ and $j$ are connected, and 0 otherwise. The degree matrix $D$ is then computed.

[0113] In a third step, using computed matrices W and D, the graph Laplacian matrix is computed as L=D-W. The eigenvectors U of L are the basis vectors of the GFT. Finally, the attributes of the points of the point cloud are stacked into a column vector, and the GFT and inverse GFT are computed according to equations 3 and 4.

[0114] By connecting similar points and disconnecting dissimilar ones, high-frequency coefficients are reduced, thus leading to compact representation of point clouds in the GFT domain.
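The graph construction steps of this embodiment can be sketched as follows. The neighborhood radius, the similarity tolerance, the use of a single scalar attribute per point, and all function names are assumptions made for illustration; the paragraphs above only state that similar neighbors are connected and dissimilar points are disconnected.

```python
import math

def build_adjacency(positions, values, radius, value_tol):
    """0/1 adjacency matrix: i and j are connected when they are spatial
    neighbors (distance <= radius) and similar (|value gap| <= value_tol)."""
    n = len(positions)
    W = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            near = math.dist(positions[i], positions[j]) <= radius
            similar = abs(values[i] - values[j]) <= value_tol
            if near and similar:
                W[i][j] = W[j][i] = 1
    return W

def laplacian(W):
    """Graph Laplacian L = D - W, with D the diagonal matrix of row sums."""
    n = len(W)
    return [[(sum(W[i]) if i == j else 0) - W[i][j] for j in range(n)]
            for i in range(n)]

# Hypothetical data: four points on a line with a sharp attribute edge
# between the second and third point.
positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0), (3.0, 0.0, 0.0)]
values = [100, 102, 200, 198]

W = build_adjacency(positions, values, radius=1.5, value_tol=10)
L = laplacian(W)
```

In this example points 1 and 2 are spatial neighbors but are left disconnected because their attribute values differ strongly, so the sharp edge does not produce large high-frequency coefficients.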

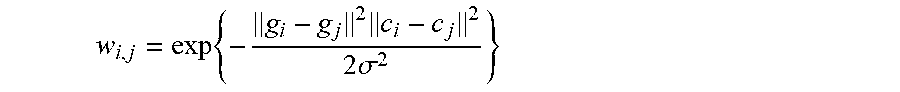

[0115] FIG. 5 is a block diagram of an exemplary embodiment of an encoder 30 implementing the encoding method of the present disclosure.

[0116] Advantageously, the encoder 30 includes one or more processors and a memory 32.

[0117] The encoder 30 comprises:

[0118] a segmentation module 34 configured to segment the point cloud into clusters of points on the basis of the attributes of the points;

[0119] a construction module 36 configured to construct, for each cluster, a similarity graph representing the similarity among neighboring points of the cluster in terms of the attributes;

[0120] a computation module 38 configured to compute, for each cluster, a Graph Fourier Transform (GFT) based on the constructed similarity graph, the GFT being characterized by its coefficients; and

[0121] a coding module 40 configured to encode the GFT coefficients.

[0122] The encoder 30 also comprises a transmitter 42 configured to transmit to a decoder the encoded GFT coefficients and identifiers of the clusters and of the computed GFTs.

[0123] According to the represented embodiment, a bus 44 provides a communication path between various elements of the encoder 30. Other point-to-point interconnection options (e.g. non-bus architecture) are also feasible.

[0124] FIG. 6 is a block diagram of an exemplary embodiment of a decoder 50 implementing the reconstructing method of the present disclosure.

[0125] Advantageously, the decoder 50 includes one or more processors and a memory 52.

[0126] The decoder 50 comprises:

[0127] a receiver 54 configured to receive encoded data including GFT coefficients associated with cluster indices and GFT indices;

[0128] a decoding module 66 configured to decode the received data; and

[0129] a reconstruction module 58 configured to reconstruct the clusters by performing for each cluster an inverse GFT.

[0130] According to the represented embodiment, a bus 60 provides a communication path between various elements of the decoder 50. Other point-to-point interconnection options (e.g. non-bus architecture) are also feasible.

[0131] While there has been illustrated and described what are presently considered to be the preferred embodiments of the present invention, it will be understood by those skilled in the art that various other modifications may be made, and equivalents may be substituted, without departing from the true scope of the present invention. Additionally, many modifications may be made to adapt a particular situation to the teachings of the present invention without departing from the central inventive concept described herein. Furthermore, an embodiment of the present invention may not include all of the features described above. Therefore, it is intended that the present invention is not limited to the particular embodiments disclosed, but that the invention includes all embodiments falling within the scope of the appended claims.

[0132] Expressions such as "comprise", "include", "incorporate", "contain", "is" and "have" are to be construed in a non-exclusive manner when interpreting the description and its associated claims, namely construed to allow for other items or components which are not explicitly defined also to be present. Reference to the singular is also to be construed to be a reference to the plural and vice versa.

[0133] A person skilled in the art will readily appreciate that various parameters disclosed in the description may be modified and that various embodiments disclosed and/or claimed may be combined without departing from the scope of the invention.

[0134] For instance, even if the described graph transform is a GFT, other graph transforms may also be used such as, for example, wavelets on graphs, as described in D. Hammond, P. Vandergheynst, and R. Gribonval, "Wavelets on graphs via spectral graph theory" in Elsevier Applied and Computational Harmonic Analysis, vol. 30, April 2010, pp. 129-150, and lifting transforms on graphs, as described in G. Shen, "Lifting transforms on graphs: Theory and applications" in Ph.D. dissertation, University of Southern California, 2010.

* * * * *
