Multi-stage Coding Block Partition Search

Han; Jingning; et al.

U.S. patent application number 16/016980, for multi-stage coding block partition search, was filed with the patent office on June 25, 2018 and published on December 26, 2019. The applicant listed for this patent is GOOGLE LLC. The invention is credited to Jingning Han and Yaowu Xu.

| Field | Value |
|---|---|
| Publication Number | 20190394491 (United States Patent Application) |
| Kind Code | A1 |
| Application Number | 16/016980 |
| Family ID | 68980925 |
| Publication Date | December 26, 2019 |

MULTI-STAGE CODING BLOCK PARTITION SEARCH

Abstract

Multi-stage coding block partition search is disclosed. A method includes selecting a partition-none partition type and a partition-split partition type for predicting the block, determining a first cost of predicting the block using the partition-none partition type, and determining a second cost of predicting the block using the partition-split partition type. The partition-none partition type and the partition-split partition type are selected from a set of partition types that includes the partition-none partition type, the partition-split partition type, and third partition types. The method also includes determining a result of comparing the first cost and the second cost and, on condition that the result meets a criterion, determining a respective encoding cost corresponding to at least some of the third partition types; selecting the partition type corresponding to a minimal cost amongst the partition-none partition type and the at least some of the third partition types; and encoding, in a compressed bitstream, the selected partition type.

| Field | Value |
|---|---|
| Inventors | Han; Jingning (Santa Clara, CA); Xu; Yaowu (Saratoga, CA) |
| Applicant | GOOGLE LLC |
| Filed | June 25, 2018 |
| Current U.S. Class | 1/1 |
| Current CPC Class | H04N 19/176 20141101; H04N 19/147 20141101; H04N 19/119 20141101; H04N 19/96 20141101; H04N 19/66 20141101; H04N 19/192 20141101; H04N 19/50 20141101 |
| International Class | H04N 19/66 20060101 H04N019/66; H04N 19/119 20060101 H04N019/119; H04N 19/50 20060101 H04N019/50; H04N 19/96 20060101 H04N019/96; H04N 19/192 20060101 H04N019/192; H04N 19/176 20060101 H04N019/176 |

Claims

1. A method for predicting a block of size N×N of a video frame, comprising: selecting a partition-none partition type and a partition-split partition type for predicting the block, wherein the partition-none partition type and the partition-split partition type are selected from a set of partition types comprising the partition-none partition type, the partition-split partition type, and third partition types, the partition types being same-level partitions, wherein the partition-none partition type includes one prediction unit of size N×N corresponding to the block of size N×N, and wherein the partition-split partition type partitions the block into equally sized square sub-blocks, each of the square sub-blocks having a size of N/2×N/2; determining a first cost of predicting the block using the partition-none partition type; determining a second cost of predicting the block using the partition-split partition type; determining a result of comparing the first cost and the second cost; on condition that the result meets a criterion indicating that the partition-none partition type is preferred over the partition-split partition type: determining a respective encoding cost corresponding to at least some of the third partition types; and selecting a selected partition type corresponding to a minimal cost amongst the partition-none partition type and the at least some of the third partition types; and encoding, in a compressed bitstream, the selected partition type.

2. The method of claim 1, further comprising: on condition that the result does not meet the criterion: selecting the partition-split partition type as the selected partition type.

3. The method of claim 1, wherein determining the second cost of predicting the block using the partition-split partition type comprises: recursively partitioning, based on a quad-tree partitioning, the block into sub-blocks using the partition-split partition type.

4. The method of claim 1, wherein the third partition types comprise a partition_vert partition type and a partition_horz partition type.

5. The method of claim 4, wherein the third partition types further comprise a partition_horz_a partition type, a partition_horz_b partition type, a partition_vert_a partition type, a partition_vert_b partition type, a partition_horz_4 partition type, and a partition_vert_4 partition type.

6. The method of claim 1, wherein the block has a size of 128×128.

7. The method of claim 1, wherein the block has a size of 64×64.

8. The method of claim 1, wherein the criterion comprises the first cost being less than the second cost.

9. The method of claim 1, wherein the criterion comprises the first cost being within a predefined range of the second cost.

10. An apparatus for predicting a block of size N×N of a video frame, comprising: a memory; and a processor, the processor configured to execute instructions stored in the memory to: select a partition-none partition type and a partition-split partition type for predicting the block, wherein the partition-none partition type and the partition-split partition type are selected from a set of partition types comprising the partition-none partition type, the partition-split partition type, and third partition types, wherein the partition-none partition type includes one prediction unit of size N×N corresponding to the block of size N×N, and wherein the partition-split partition type partitions the block into equally sized square sub-blocks, each of the square sub-blocks having a size of N/2×N/2; determine a first cost of predicting the block using the partition-none partition type; determine a second cost of predicting the block using the partition-split partition type; determine a result of comparing the first cost and the second cost; on condition that the result meets a criterion indicating that the partition-none partition type is preferred over the partition-split partition type: determine a respective encoding cost corresponding to at least some of the third partition types; and select a selected partition type corresponding to a minimal cost amongst the partition-none partition type and the at least some of the third partition types.

11. The apparatus of claim 10, wherein the instructions further comprise: on condition that the result does not meet the criterion: selecting the partition-split partition type as the selected partition type.

12. The apparatus of claim 10, wherein determining the second cost of predicting the block using the partition-split partition type comprises: recursively partitioning, based on a quad-tree partitioning, the block into sub-blocks using the partition-split partition type.

13. The apparatus of claim 10, wherein the third partition types comprise a partition_vert partition type and a partition_horz partition type.

14. The apparatus of claim 10, wherein the third partition types further comprise a partition_horz_a partition type, a partition_horz_b partition type, a partition_vert_a partition type, a partition_vert_b partition type, a partition_horz_4 partition type, and a partition_vert_4 partition type.

15. The apparatus of claim 10, wherein the block has a size of 128×128.

16. The apparatus of claim 10, wherein the block has a size of 64×64.

17. The apparatus of claim 10, wherein the criterion comprises the first cost being less than the second cost.

18. The apparatus of claim 10, wherein the criterion comprises the first cost being within a predefined range of the second cost.

19. An apparatus for predicting a block of size N×N of a video frame, comprising: a memory; and a processor, the processor configured to execute instructions stored in the memory, the instructions comprising: determining a partition type, from partition types comprising a partition-none partition type, a partition-split partition type, and third partition types, for predicting the block by operations comprising: determining a first coding cost of the block associated with the partition-none partition type, wherein the partition-none partition type includes one prediction unit of size N×N corresponding to the block of size N×N; determining a second coding cost of the block associated with a skip-level recursive partitioning, wherein the skip-level recursive partitioning partitions the block into square sub-blocks, and wherein each sub-block has a size that is less than N/2×N/2; on condition that the first coding cost is smaller than the second coding cost, indicating that the partition-none partition type is preferred over the skip-level recursive partitioning: determining respective coding costs of encoding the block using at least some of the third partition types and the partition-split partition type; and selecting the partition type corresponding to a minimal coding cost from among the first coding cost and the respective coding costs; and encoding, in a compressed bitstream, the partition type.

20. The apparatus of claim 19, wherein the third partition types comprise a partition_vert partition type and a partition_horz partition type.

Description

BACKGROUND

[0001] Digital video streams may represent video using a sequence of frames or still images. Digital video can be used for various applications, including, for example, video conferencing, high-definition video entertainment, video advertisements, or sharing of user-generated videos. A digital video stream can contain a large amount of data and consume a significant amount of computing or communication resources of a computing device for processing, transmission, or storage of the video data. Various approaches have been proposed to reduce the amount of data in video streams, including compression and other encoding techniques.

SUMMARY

[0002] One aspect of the disclosed implementations is a method for predicting a block of a video frame. The method includes selecting a partition-none partition type and a partition-split partition type for predicting the block, determining a first cost of predicting the block using the partition-none partition type, and determining a second cost of predicting the block using the partition-split partition type. The partition-none partition type and the partition-split partition type are selected from a set of partition types that includes the partition-none partition type, the partition-split partition type, and third partition types. The partition-split partition type partitions the block into equally sized square sub-blocks. The method also includes determining a result of comparing the first cost and the second cost and, on condition that the result meets a criterion, determining a respective encoding cost corresponding to at least some of the third partition types; selecting a selected partition type corresponding to a minimal cost amongst the partition-none partition type and the at least some of the third partition types; and encoding, in a compressed bitstream, the selected partition type.

[0003] Another aspect is an apparatus for predicting a block of a video frame. The apparatus includes a memory and a processor. The processor is configured to execute instructions stored in the memory to select a partition-none partition type and a partition-split partition type for predicting the block; determine a first cost of predicting the block using the partition-none partition type; and determine a second cost of predicting the block using the partition-split partition type. The partition-none partition type and the partition-split partition type are selected from a set of partition types that includes the partition-none partition type, the partition-split partition type, and third partition types. The partition-split partition type partitions the block into equally sized square sub-blocks. The processor is also configured to execute instructions stored in the memory to determine a result of comparing the first cost and the second cost; and on condition that the result meets a criterion, determine a respective encoding cost corresponding to at least some of the third partition types, and select a selected partition type corresponding to a minimal cost amongst the partition-none partition type and the at least some of the third partition types.

[0004] Another aspect is a method for predicting a block of a video frame. The method includes determining a partition type, from partition types including a partition-none partition type, a partition-split partition type, and third partition types, for predicting the block; and encoding, in a compressed bitstream, the partition type. Determining a partition type includes determining a first coding cost of the block associated with the partition-none partition type; determining a second coding cost of the block associated with a skip-level recursive partitioning; and, on condition that the first coding cost is smaller than the second coding cost, determining respective coding costs of encoding the block using at least some of the third partition types and the partition-split partition type, and selecting the partition type corresponding to a minimal coding cost from among the first coding cost and the respective coding costs.

[0005] These and other aspects of the present disclosure are disclosed in the following detailed description of the embodiments, the appended claims, and the accompanying figures.

BRIEF DESCRIPTION OF THE DRAWINGS

[0006] The description herein makes reference to the accompanying drawings, wherein like reference numerals refer to like parts throughout the several views.

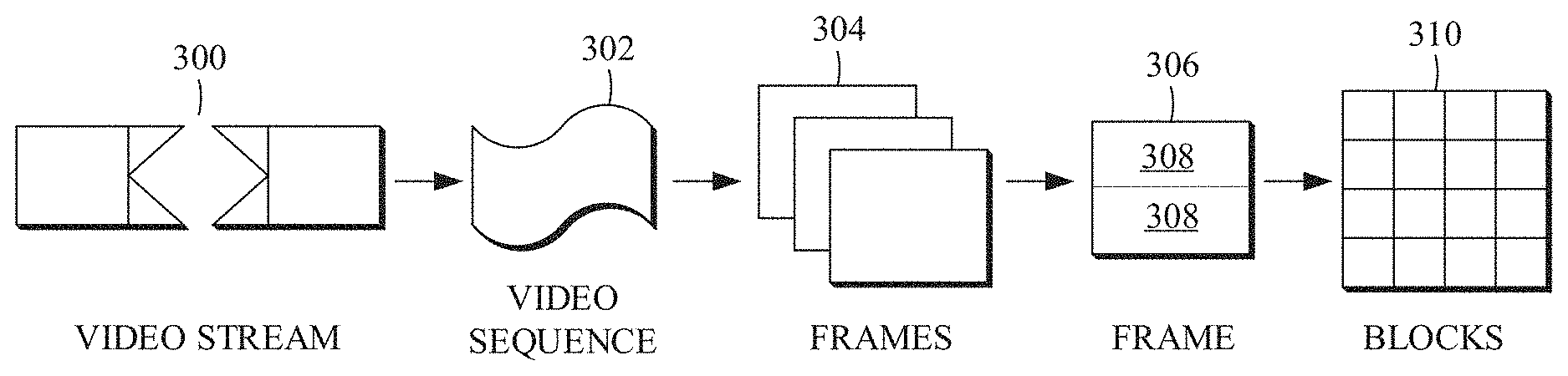

[0007] FIG. 1 is a schematic of a video encoding and decoding system.

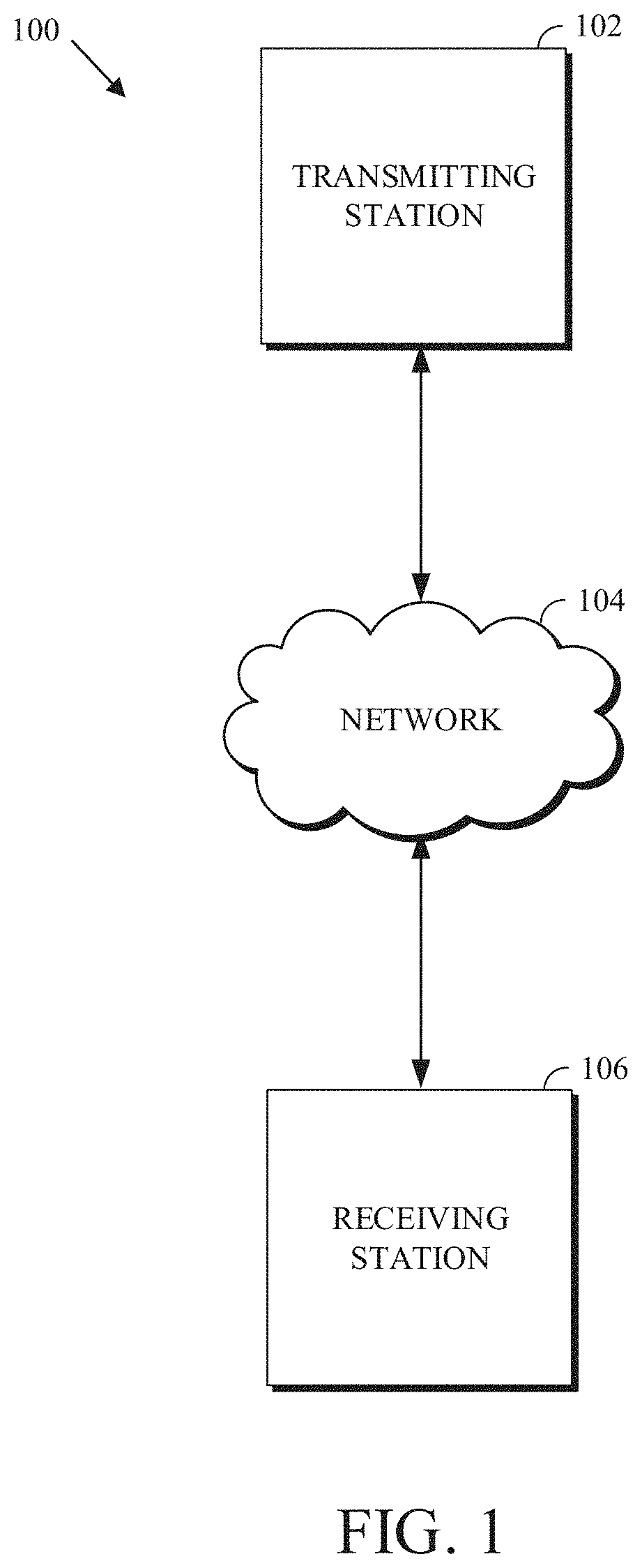

[0008] FIG. 2 is a block diagram of an example of a computing device that can implement a transmitting station or a receiving station.

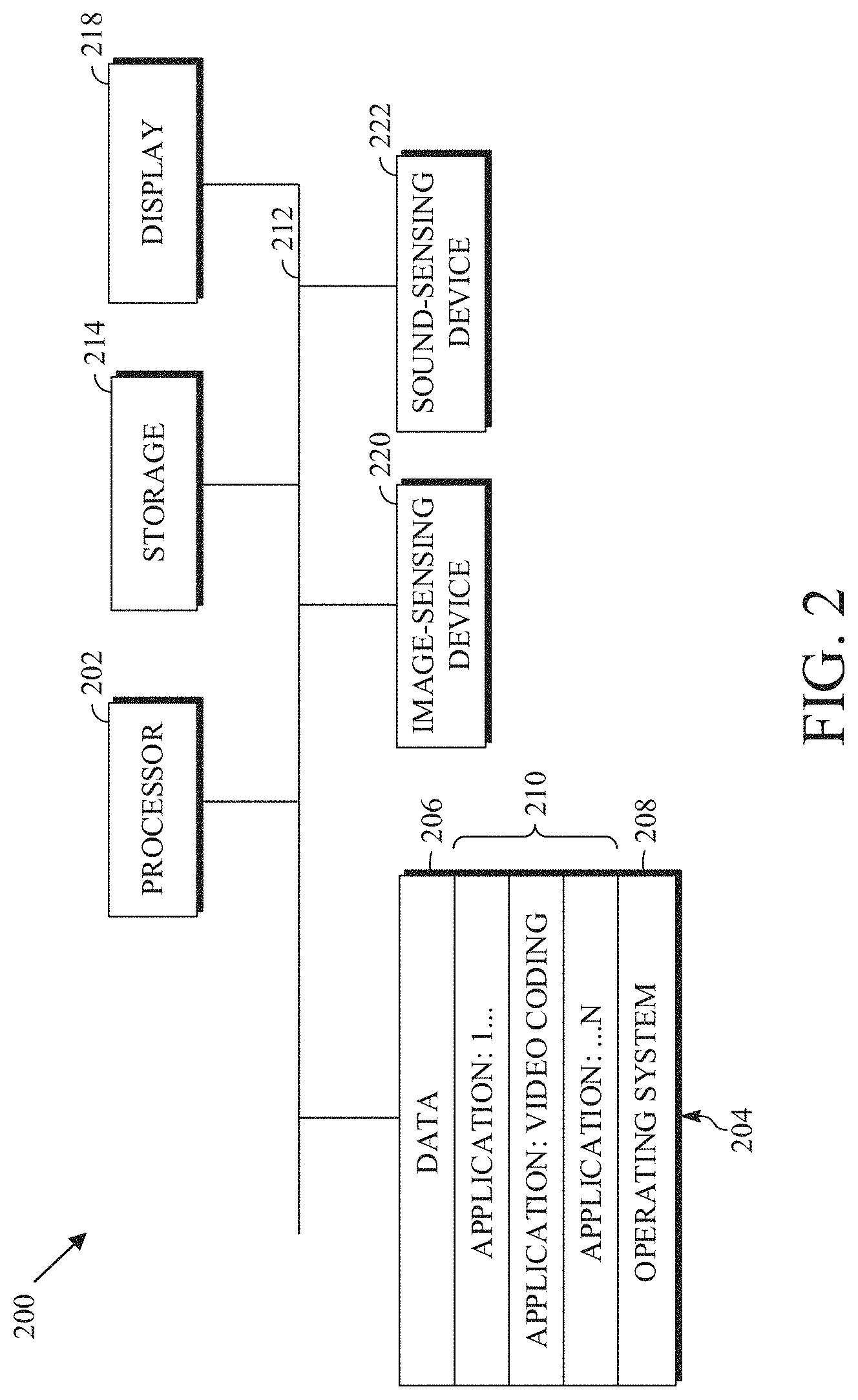

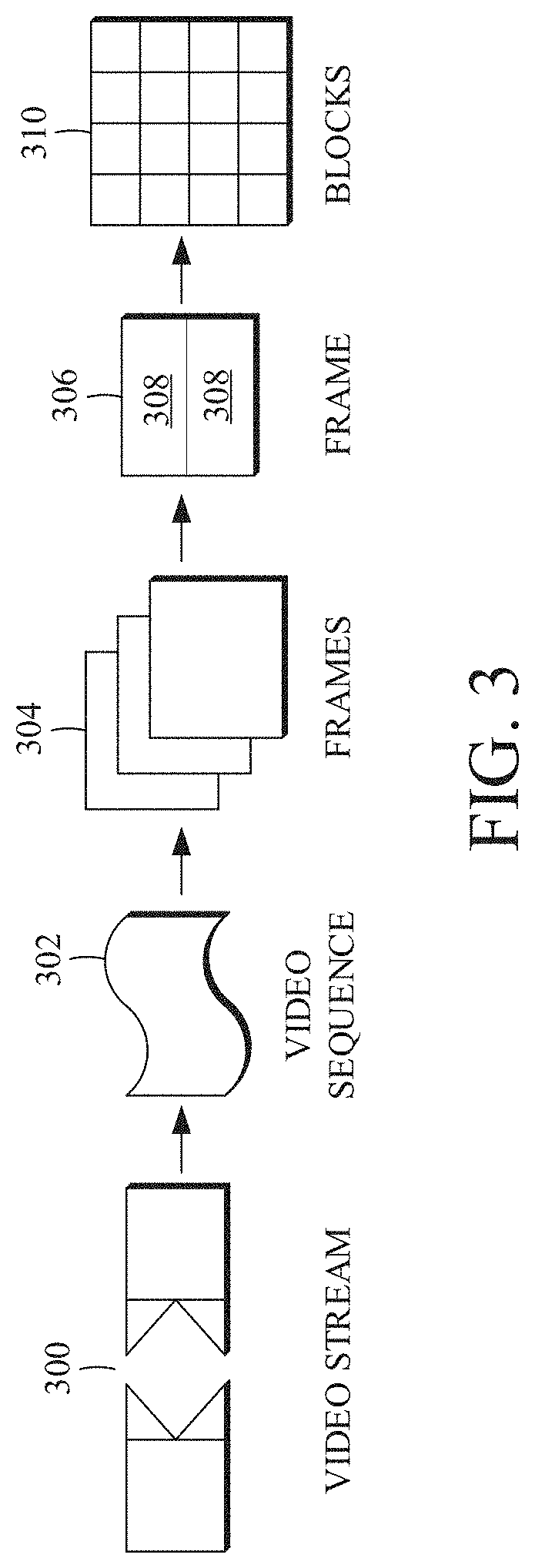

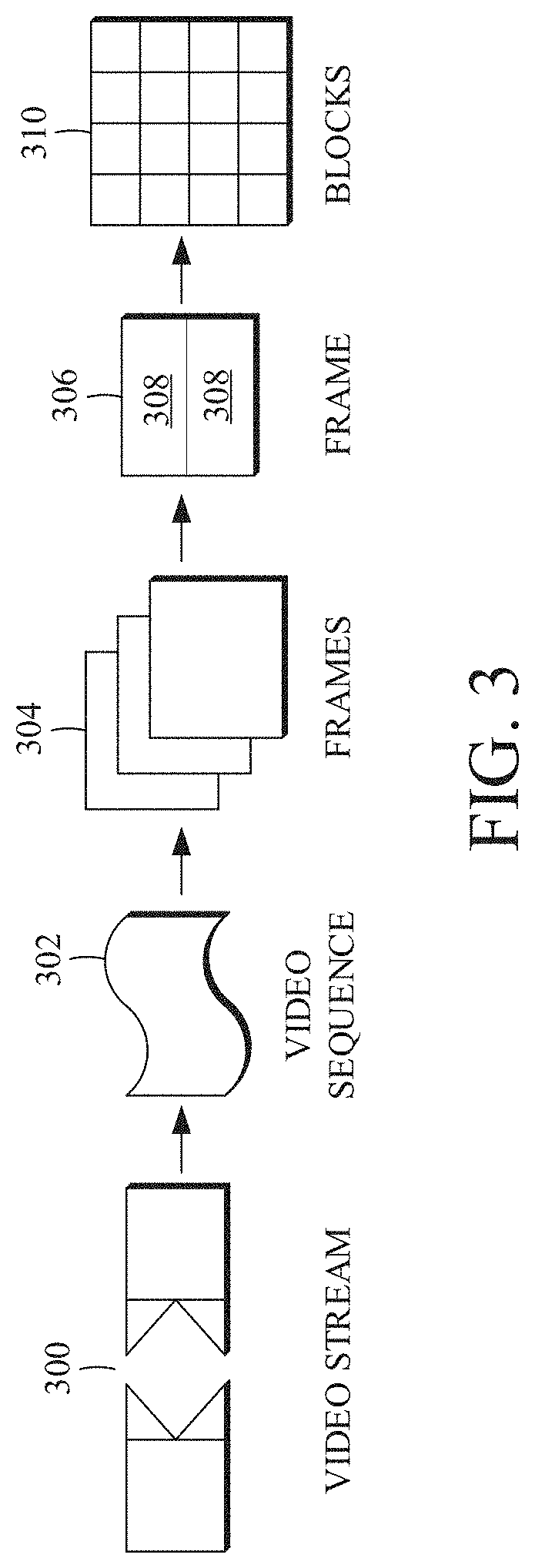

[0009] FIG. 3 is a diagram of a video stream to be encoded and subsequently decoded.

[0010] FIG. 4 is a block diagram of an encoder according to implementations of this disclosure.

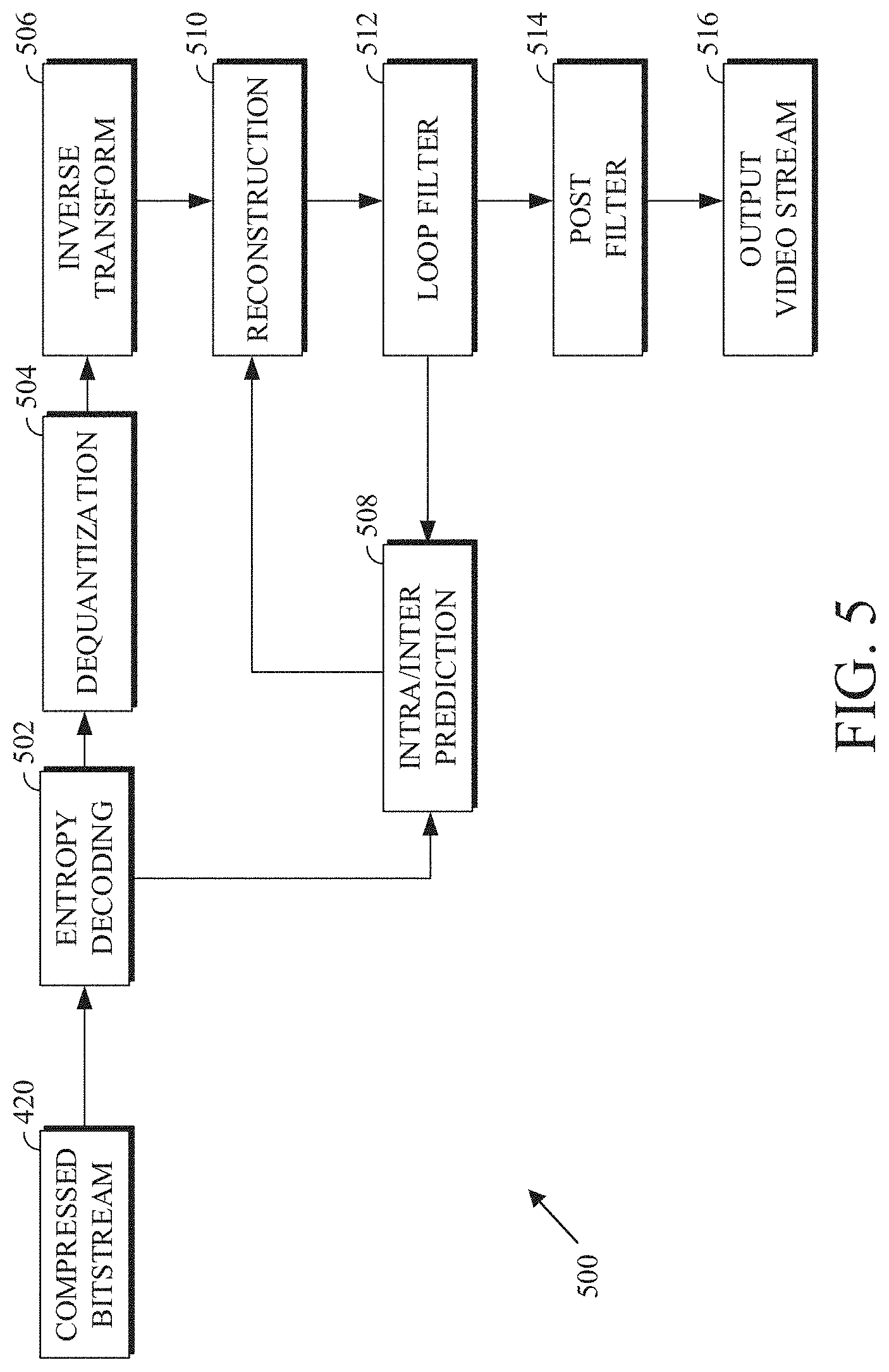

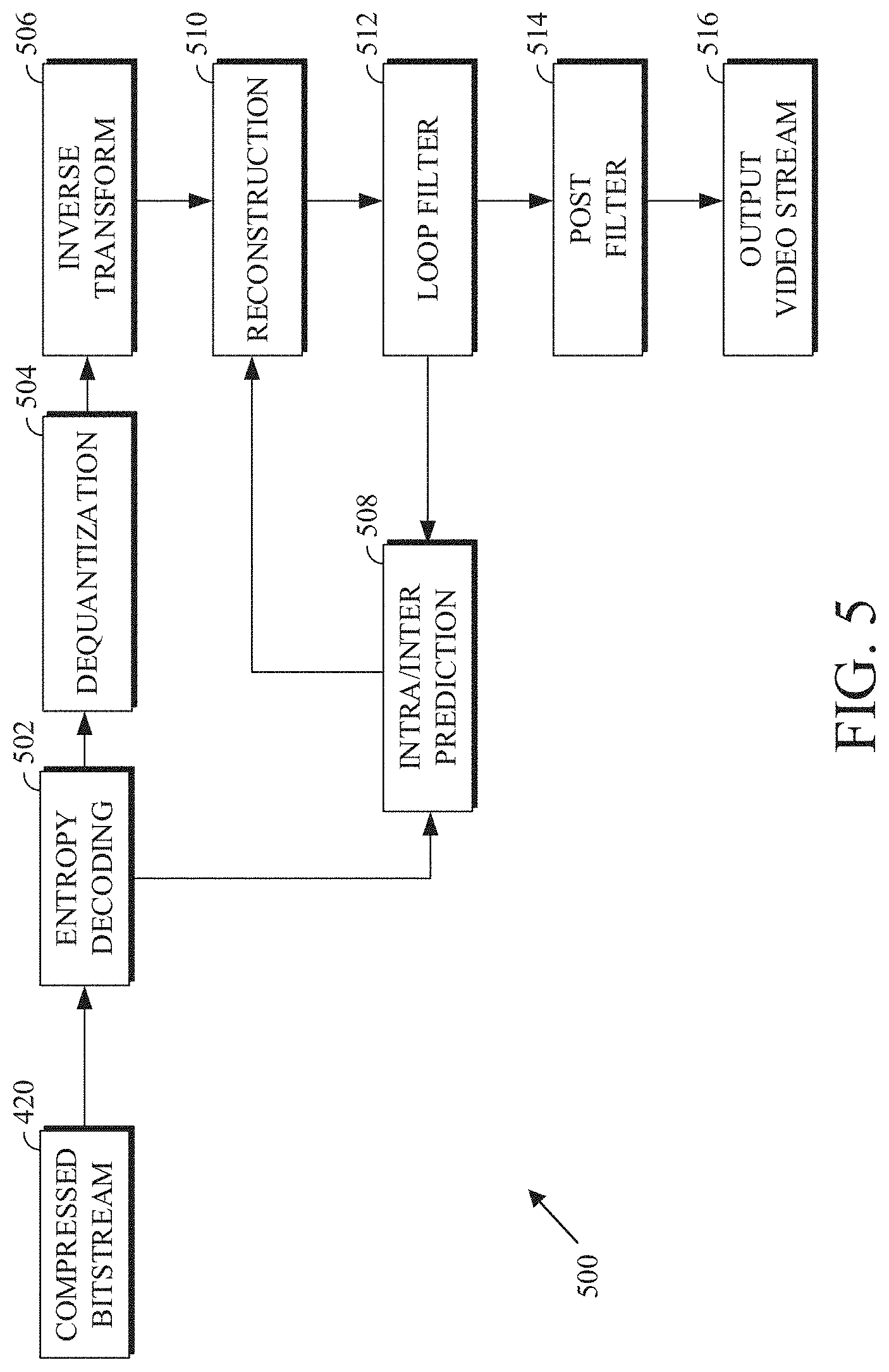

[0011] FIG. 5 is a block diagram of a decoder according to implementations of this disclosure.

[0012] FIG. 6 is a block diagram of a representation of a portion of a frame according to implementations of this disclosure.

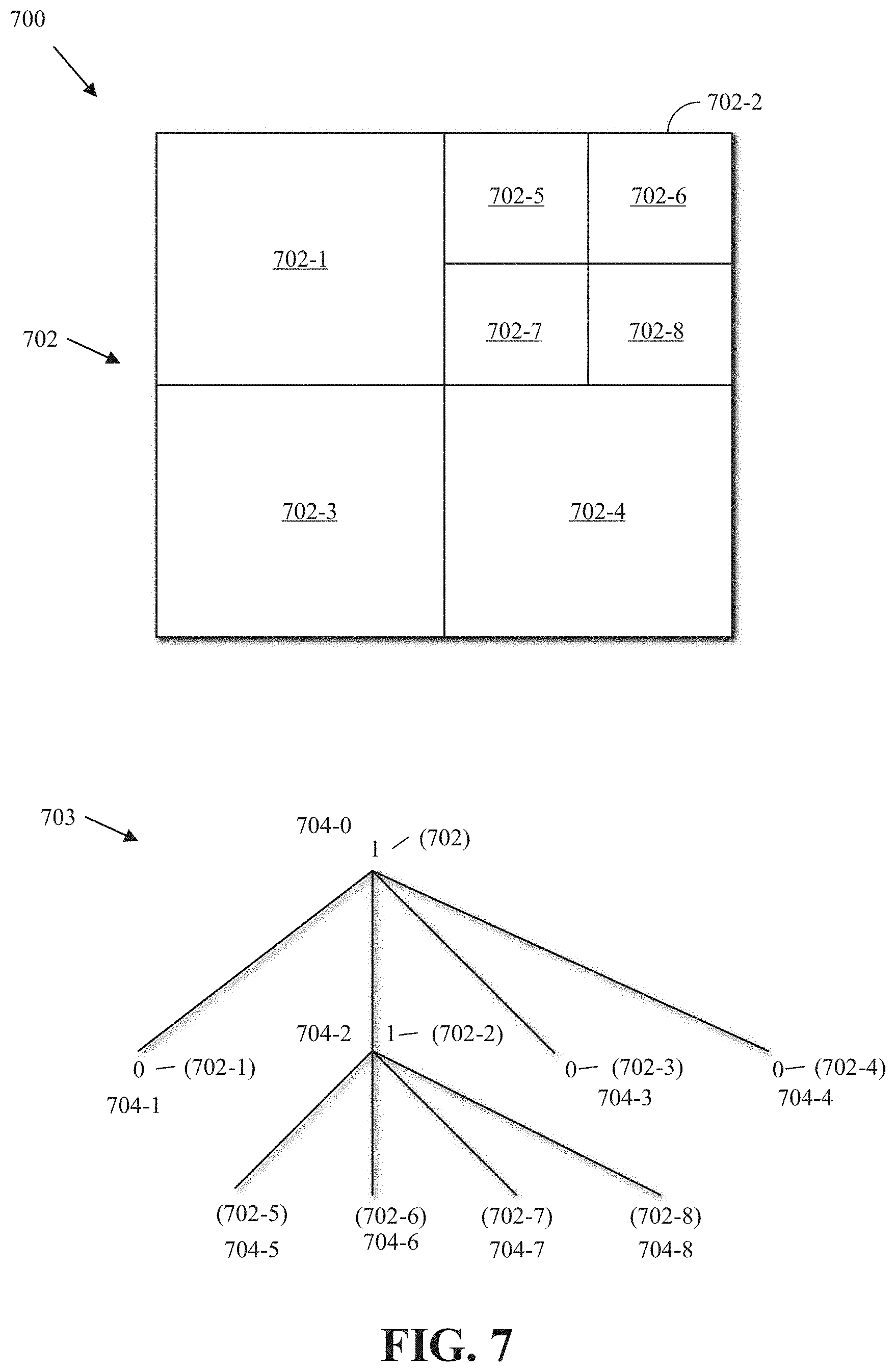

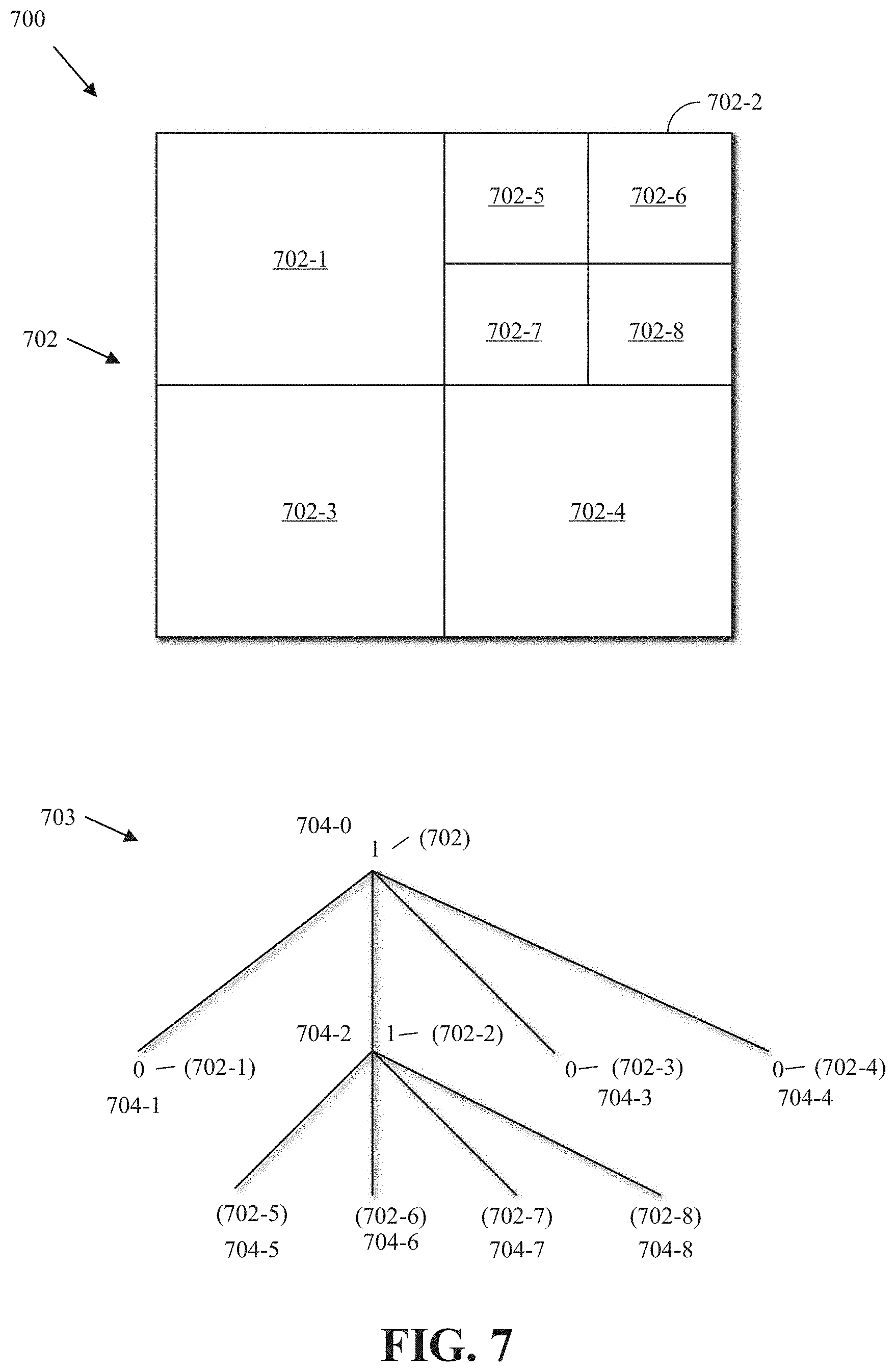

[0013] FIG. 7 is a block diagram of an example of a quad-tree representation of a block according to implementations of this disclosure.

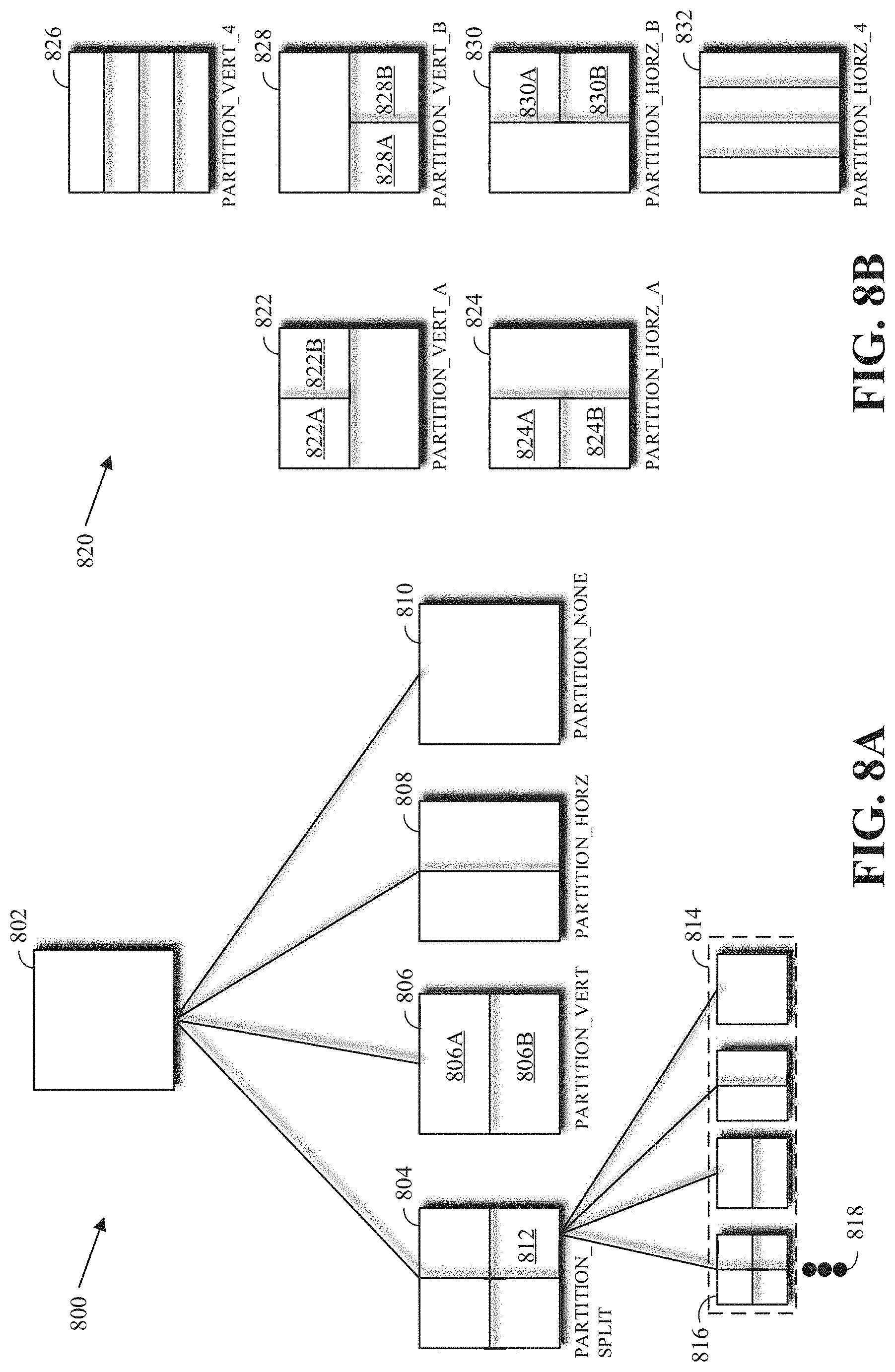

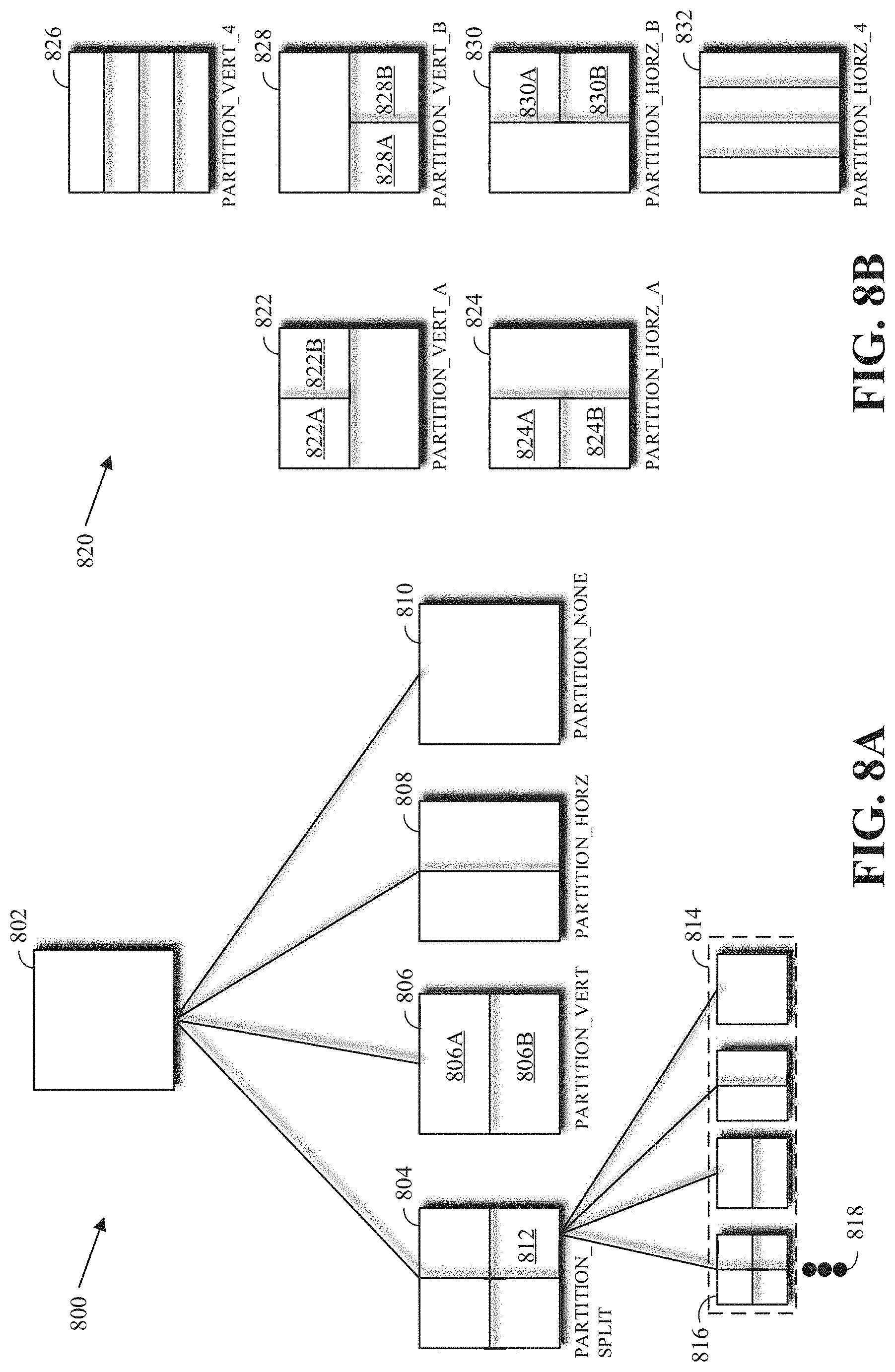

[0014] FIG. 8A is a block diagram of an example of recursive partitioning of a coding block into prediction blocks.

[0015] FIG. 8B is a block diagram of an example of extended partition types of a coding block according to implementations of this disclosure.

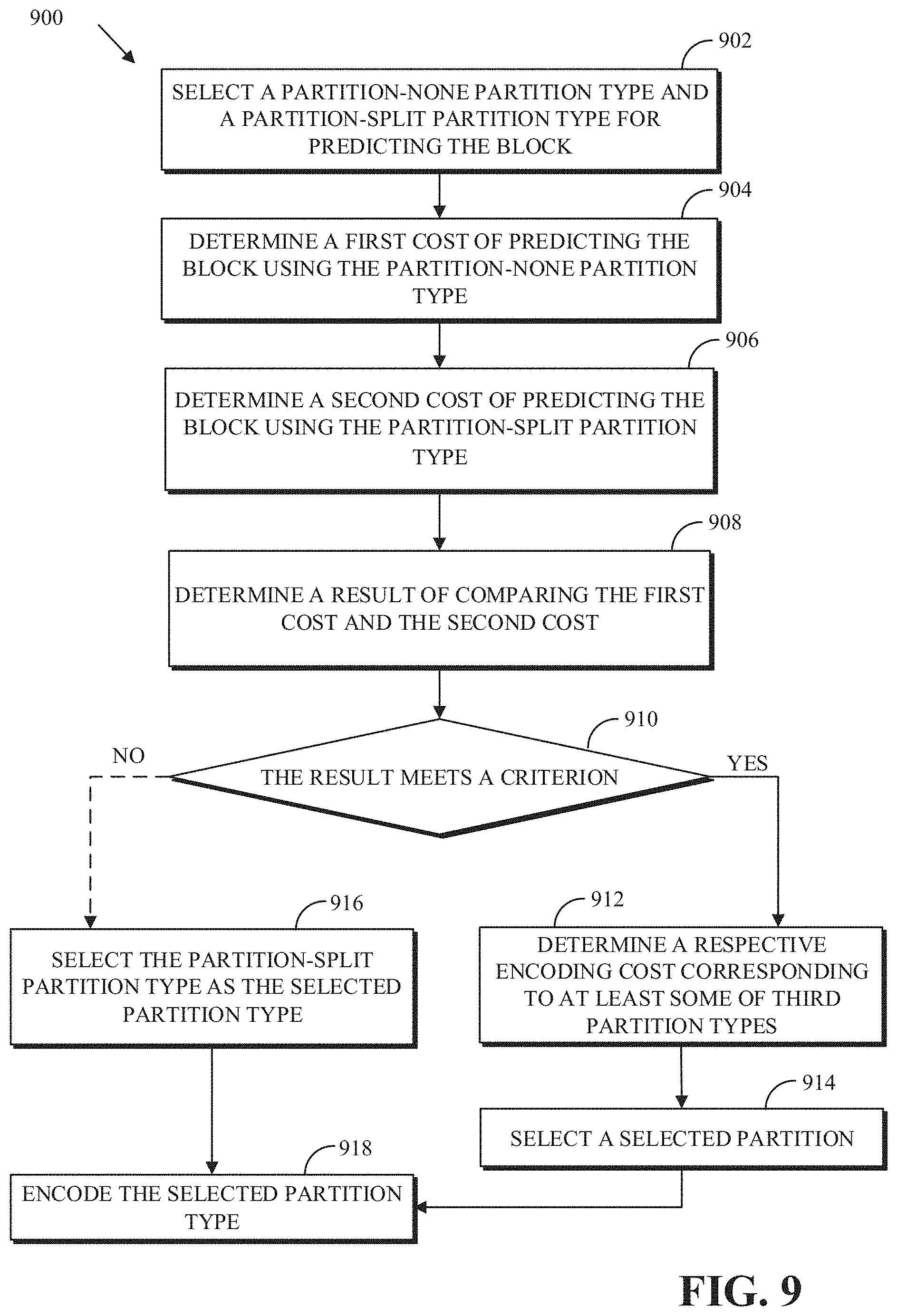

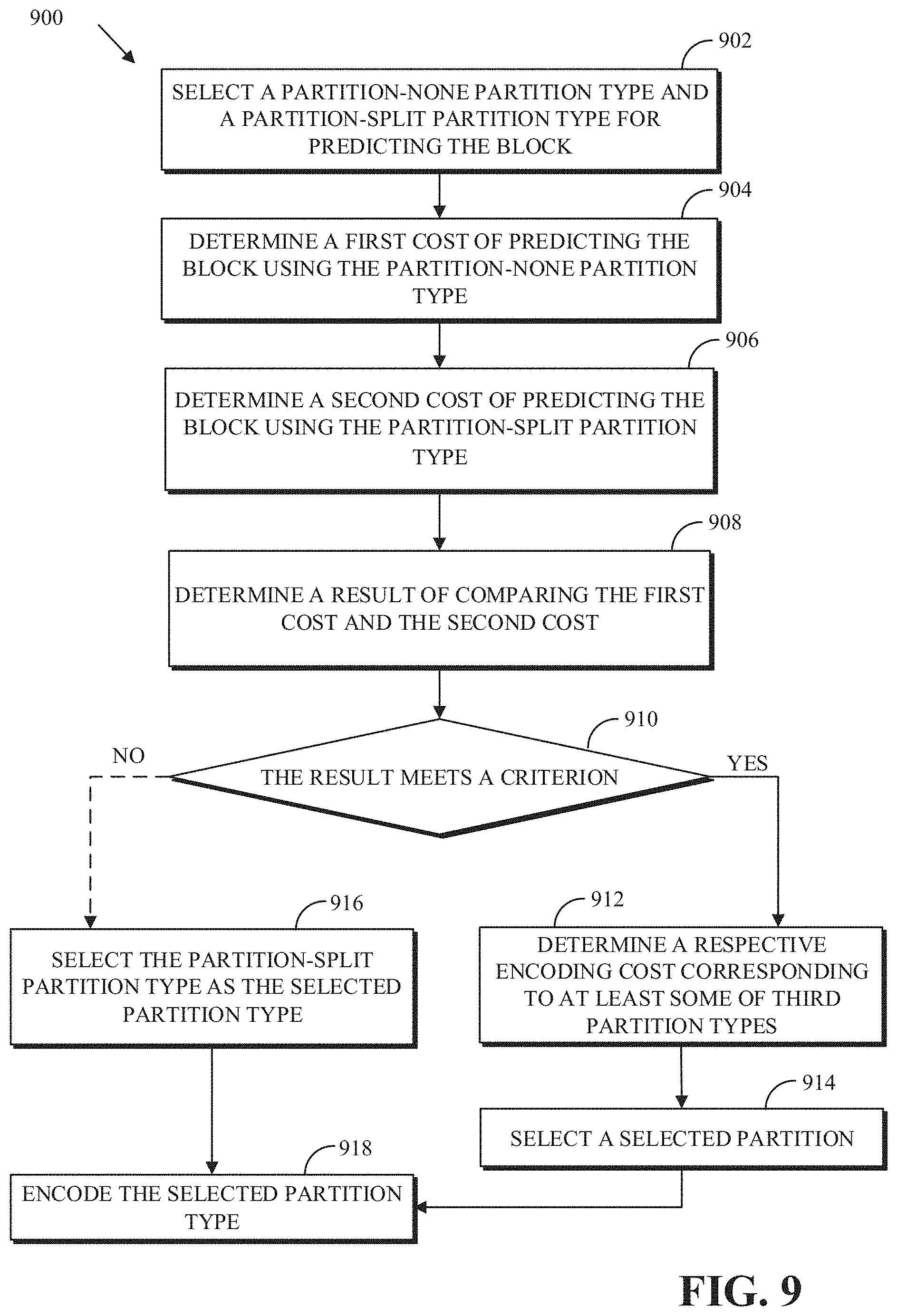

[0016] FIG. 9 is a flowchart of a process for predicting a coding block of a video frame according to implementations of this disclosure.

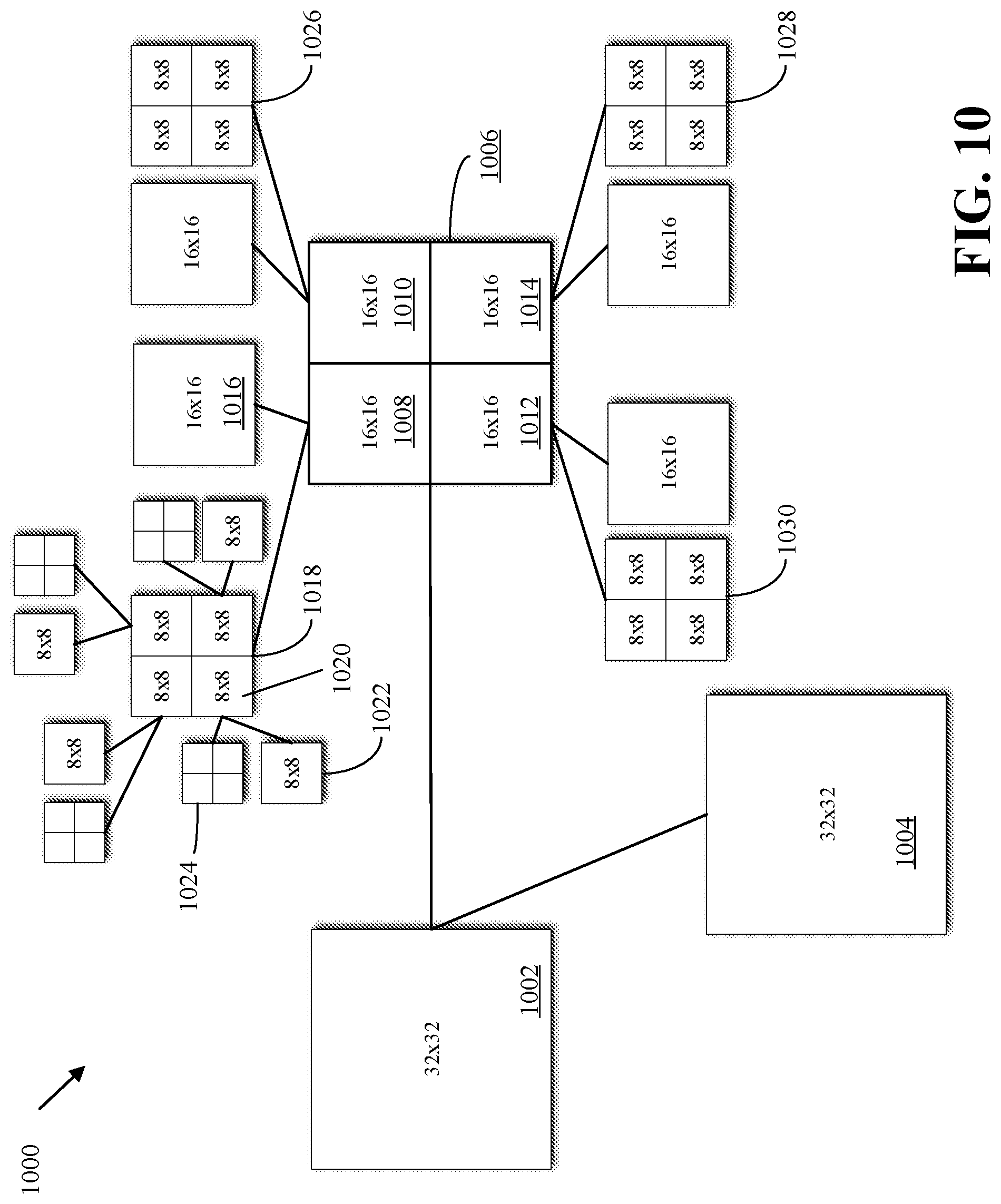

[0017] FIG. 10 is a block diagram of an example of a first stage of determining a partition type of a coding block according to implementations of this disclosure.

[0018] FIG. 11 is a block diagram of an example of a result of a first stage of determining a partition type of a coding block according to implementations of this disclosure.

[0019] FIG. 12 is a flowchart of a process for predicting a coding block of a video frame according to implementations of this disclosure.

DETAILED DESCRIPTION

[0020] As mentioned above, compression schemes related to coding video streams can include breaking images into blocks and generating a digital video output bitstream (i.e., an encoded bitstream) using one or more techniques to limit the information included in the output bitstream. A received bitstream can be decoded to re-create the blocks and the source images from the limited information. Encoding a video stream, or a portion thereof, such as a frame or a block, can include using temporal or spatial similarities in the video stream to improve coding efficiency. For example, a current block of a video stream may be encoded based on identifying a difference (residual) between the previously coded pixel values, or between a combination of previously coded pixel values, and those in the current block.
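As a minimal illustration of the residual described above, the Python sketch below computes a residual as the element-wise difference between a current block and a prediction block, and shows that adding the residual back to the prediction recovers the block. Blocks are assumed to be lists of rows of integer pixel values; the function names are hypothetical and not taken from any codec.

```python
from typing import List

Block = List[List[int]]


def compute_residual(current: Block, prediction: Block) -> Block:
    """Residual = current - prediction, element by element."""
    return [[c - p for c, p in zip(cur_row, pred_row)]
            for cur_row, pred_row in zip(current, prediction)]


def reconstruct(prediction: Block, residual: Block) -> Block:
    """Inverse of compute_residual: prediction + residual."""
    return [[p + r for p, r in zip(pred_row, res_row)]
            for pred_row, res_row in zip(prediction, residual)]


current = [[52, 55], [61, 59]]
prediction = [[50, 50], [60, 60]]
residual = compute_residual(current, prediction)
print(residual)  # [[2, 5], [1, -1]]
assert reconstruct(prediction, residual) == current
```

A good prediction leaves a residual made of small values, which is what makes it cheaper to code than the block itself.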

[0021] Encoding using spatial similarities is referred to as intra-prediction. Intra-prediction techniques exploit spatial redundancy within a video frame for compression. Specifically, an image block (e.g., a block of a still image or a block of a frame of a video) can be predicted using neighboring coded pixels. An image block being coded is referred to as a current block. The neighboring pixels are typically reconstructed pixels of previously coded blocks, that is, blocks that precede the current block in a scan order of blocks of the image. For example, in a raster scan order, the reconstructed blocks are located above and to the left of the current block, outside its boundary.
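One simple intra-prediction mode that uses those above and left neighbors is DC prediction, which fills the current block with the average of the neighboring reconstructed pixels. The sketch below illustrates that single idea under simplifying assumptions: all neighbors are available (no frame-edge handling) and the rounding rule is chosen for illustration. It is not the mode set of any particular codec, and `dc_predict` is a hypothetical name.

```python
from typing import List, Sequence


def dc_predict(above: Sequence[int], left: Sequence[int], size: int) -> List[List[int]]:
    """Fill a size x size block with the rounded mean of its neighbors.

    above: reconstructed pixels in the row just above the block.
    left:  reconstructed pixels in the column just left of the block.
    """
    neighbors = list(above) + list(left)
    # Integer mean, rounding halves up (pixel values are non-negative).
    dc = (sum(neighbors) + len(neighbors) // 2) // len(neighbors)
    return [[dc] * size for _ in range(size)]


pred = dc_predict(above=[100, 102, 104, 106], left=[98, 98, 99, 101], size=4)
print(pred[0])  # [101, 101, 101, 101]
```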

[0022] Encoding using temporal similarities is referred to as inter-prediction. Inter-prediction attempts to predict the pixel values of a block using a possibly displaced block or blocks from a temporally nearby frame (i.e., reference frame) or frames. A temporally nearby frame is a frame that appears earlier or later in time in the video stream than the frame of the block being encoded. A prediction block resulting from inter-prediction is referred to herein as an inter-predictor or an inter-predictor block.
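The "possibly displaced block" used by inter-prediction is usually described by a motion vector. The sketch below shows one simple way an encoder might find it: a brute-force, integer-pixel search that keeps the displacement minimizing the sum of absolute differences (SAD). It assumes the undisplaced block lies inside the reference frame; practical encoders add sub-pixel interpolation and faster search patterns. All names are hypothetical.

```python
from typing import List, Tuple

Block = List[List[int]]


def fetch_block(ref: Block, row: int, col: int, size: int) -> Block:
    """Copy the size x size block whose top-left pixel is ref[row][col]."""
    return [r[col:col + size] for r in ref[row:row + size]]


def sad(a: Block, b: Block) -> int:
    """Sum of absolute differences between two same-sized blocks."""
    return sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))


def full_search(ref: Block, current: Block, row: int, col: int,
                search_range: int) -> Tuple[Tuple[int, int], Block]:
    """Integer-pel full search; returns ((dy, dx), inter-predictor block)."""
    size = len(current)
    best = None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            r, c = row + dy, col + dx
            # Skip displacements that would read outside the reference frame.
            if r < 0 or c < 0 or r + size > len(ref) or c + size > len(ref[0]):
                continue
            cand = fetch_block(ref, r, c, size)
            cost = sad(current, cand)
            if best is None or cost < best[0]:
                best = (cost, (dy, dx), cand)
    return best[1], best[2]


ref = [[10 * r + c for c in range(6)] for r in range(6)]  # toy reference frame
current = [[23, 24], [33, 34]]  # current block at (row 2, col 2)
mv, predictor = full_search(ref, current, row=2, col=2, search_range=1)
print(mv)  # (0, 1)
```

Here the content has moved one pixel to the left between frames, so the best inter-predictor is found one column to the right in the reference frame.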

[0023] As mentioned above, a current block of a video stream may be encoded based on identifying a difference (residual) between the previously coded pixel values and those in the current block. In this way, only the residual and parameters used to generate the residual need be added to the encoded bitstream. The residual may be encoded using a lossy quantization operation.
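The lossy step can be illustrated with uniform scalar quantization: each residual value is divided by a step size and rounded, and only the integer levels are written to the bitstream. The step size and the round-half-away-from-zero rule below are illustrative assumptions, not the quantizer of any specific codec.

```python
from typing import List

Block = List[List[int]]


def quantize(residual: Block, step: int) -> Block:
    """Map each value to an integer level, rounding halves away from zero."""
    def q(v: int) -> int:
        level = (abs(v) + step // 2) // step
        return level if v >= 0 else -level
    return [[q(v) for v in row] for row in residual]


def dequantize(levels: Block, step: int) -> Block:
    """Scale integer levels back to approximate residual values."""
    return [[lv * step for lv in row] for row in levels]


residual = [[2, 5], [1, -7]]
levels = quantize(residual, step=4)
print(levels)                 # [[1, 1], [0, -2]]
print(dequantize(levels, 4))  # [[4, 4], [0, -8]]
```

The dequantized values differ from the original residual; that difference is the information lost in exchange for fewer bits.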

[0024] Identifying the residual involves prediction (as further described below) using, for example, intra-prediction and/or inter-prediction. As further described below with respect to FIG. 7, prediction is performed at a coding block level. A coding block can be partitioned (e.g., split) into one or more prediction blocks according to a partition type. A partitioning (according to a partition type) that optimally captures the content (e.g., signal) characteristics of the coding block is used. An optimal partitioning is a partitioning that can result in a best (i.e., minimal) rate-distortion value for the coding block.

[0025] As further explained below, a frame of video (or an image) can be divided into blocks (referred to as superblocks or coding block trees) of the largest possible coding block size. The largest possible coding block size can be 128×128, 64×64, or another size. Each superblock is processed (e.g., encoded) separately from the other superblocks.

[0026] A superblock is encoded based on a partitioning (i.e., into coding blocks) that results in an optimal rate-distortion value for the superblock. A rate-distortion value refers to a measure that balances an amount of distortion (e.g., a loss in video quality) against a rate (e.g., a number of bits) for encoding a coding block of the superblock; it is commonly computed as a weighted sum of the distortion and the rate. Accordingly, the superblock may be recursively partitioned into coding blocks to determine the optimal rate-distortion value of the superblock. The rate-distortion value of the superblock can be the sum of the rate-distortion values of the constituent coding blocks of the superblock. A partitioning can include only one coding block that corresponds to the superblock itself (i.e., no further partitioning of the superblock). Partitioning of a superblock into coding blocks is illustrated with respect to FIG. 7.
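The recursive search can be sketched as a function that compares the cost of coding a block whole against the summed cost of its four quadrants, recursing down to a minimum block size. This is a minimal sketch assuming a caller-supplied cost function (hypothetical `cost_fn`, which in a real encoder would come from actually predicting and coding the block) and an assumed minimum size of 8; it models only the coded-whole versus four-way-split choice of a quad-tree.

```python
from typing import Callable, List, Tuple, Union

# cost_fn(x, y, size) -> RD cost of coding the square block at (x, y) whole.
CostFn = Callable[[int, int, int], float]
Tree = Union[int, List["Tree"]]


def best_quadtree(x: int, y: int, size: int, cost_fn: CostFn,
                  min_size: int = 8) -> Tuple[float, Tree]:
    """Return (cost, tree) for the cheapest quad-tree partitioning of a block.

    A leaf is the block size (block coded whole); an inner node is a list of
    the four quadrants' subtrees in raster order.
    """
    whole = cost_fn(x, y, size)
    if size <= min_size:
        return whole, size
    half = size // 2
    children = [best_quadtree(x + dx, y + dy, half, cost_fn, min_size)
                for dy in (0, half) for dx in (0, half)]
    # The RD value of a split block is the sum of its constituent blocks' values.
    split = sum(cost for cost, _ in children)
    if split < whole:
        return split, [tree for _, tree in children]
    return whole, size


def toy_cost(x: int, y: int, size: int) -> float:
    # Hypothetical costs: coding 64x64 whole is expensive, and so is the
    # detailed top-left 32x32 quadrant; every other block costs 10.
    return 10 + (100 if size == 64 else 0) + (45 if (x, y, size) == (0, 0, 32) else 0)


cost, tree = best_quadtree(0, 0, 64, toy_cost)
print(cost, tree)  # 70 [[16, 16, 16, 16], 32, 32, 32]
```

Because the cost of a partitioning is the sum of its parts, each quadrant can be optimized independently, which is what makes the recursion valid.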

[0027] A coding block can be partitioned into one or more prediction blocks. The coding block can be partitioned into prediction blocks according to a partition type. Several partition types may be available. Examples of partition types are described with respect to FIGS. 8A-8B.

[0028] To determine an optimal partition (e.g., a partition that results in the minimum rate-distortion value) of a coding block, an encoder can perform a partition search. For example, for each available partition type, the encoder can partition the coding block, according to the partition type, into respective prediction blocks. For each partition type, each prediction block is encoded according to all possible intra-prediction modes and inter-prediction modes to determine the optimal prediction mode for the prediction block.
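A brute-force version of this partition search can be sketched as follows: for every available partition type, the coding block is split into prediction blocks, each prediction block takes its cheapest mode, and the partition type with the lowest total cost is selected. The sketch covers only four basic partition types, and the cost function, mode names, and helper names are hypothetical stand-ins for a real encoder's RD evaluation.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height)


def split_block(x: int, y: int, n: int, ptype: str) -> List[Rect]:
    """Prediction blocks for a few basic partition types of an n x n block."""
    h = n // 2
    if ptype == "NONE":
        return [(x, y, n, n)]
    if ptype == "HORZ":   # two n x n/2 blocks, one above the other
        return [(x, y, n, h), (x, y + h, n, h)]
    if ptype == "VERT":   # two n/2 x n blocks, side by side
        return [(x, y, h, n), (x + h, y, h, n)]
    if ptype == "SPLIT":  # four n/2 x n/2 blocks
        return [(x, y, h, h), (x + h, y, h, h), (x, y + h, h, h), (x + h, y + h, h, h)]
    raise ValueError(f"unsupported partition type: {ptype}")


def partition_search(x: int, y: int, n: int, ptypes: Sequence[str],
                     modes: Sequence[str],
                     rd_cost: Callable[[Rect, str], float]) -> Tuple[str, float]:
    """Return the partition type with minimal total RD cost, and that cost."""
    totals: Dict[str, float] = {}
    for ptype in ptypes:
        # Each prediction block uses its own best mode; block costs add up.
        totals[ptype] = sum(min(rd_cost(rect, m) for m in modes)
                            for rect in split_block(x, y, n, ptype))
    best = min(totals, key=totals.get)
    return best, totals[best]


def toy_rd_cost(rect: Rect, mode: str) -> float:
    # Hypothetical 16x16 block whose left and right halves differ: any
    # prediction block straddling column 8 pays a large distortion penalty.
    x, y, w, h = rect
    straddles = x < 8 < x + w
    return 4 + (20 if straddles else 0) + (1 if mode == "DC" else 2)


best, cost = partition_search(0, 0, 16, ["NONE", "HORZ", "VERT", "SPLIT"],
                              ["DC", "V"], toy_rd_cost)
print(best, cost)  # VERT 10
```

Every partition type triggers a full mode search for each of its prediction blocks, which is why the search time grows with the number of partition types.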

[0029] As an illustrative example, assume that only two partition types are available, namely, a non-partition type and a vertical-partition type, and that the superblock is of size N×N (e.g., 128×128). The non-partition type corresponds to using a prediction block of size N×N (i.e., 128×128). The vertical-partition type corresponds to splitting the superblock into two prediction blocks, each of size N/2×N (i.e., 64×128).

[0030] A first minimum rate-distortion value corresponding to the non-partition type is determined. The first minimum rate-distortion value can be the minimum rate-distortion value that results from predicting the superblock using each of the possible intra- and inter-prediction modes. A second minimum rate-distortion value corresponding to the vertical-partition type is determined. The second minimum rate-distortion value can be the sum of the minimum rate-distortion values corresponding to each of the N/2×N prediction blocks. The optimal partition type of the superblock is the partition type that results in the smaller of the first minimum rate-distortion value and the second minimum rate-distortion value.
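In symbols, the two-type example can be written as below. The notation is introduced here for illustration only: B is the N×N superblock, B_1 and B_2 are its two N/2×N halves, M is the set of candidate intra- and inter-prediction modes, and J(·, m) is the rate-distortion value of coding a block with mode m.

```latex
J_{\text{none}} = \min_{m \in \mathcal{M}} J(B, m), \qquad
J_{\text{vert}} = \sum_{i=1}^{2} \min_{m \in \mathcal{M}} J(B_i, m), \qquad
P^{*} = \operatorname*{arg\,min}_{P \in \{\text{none},\, \text{vert}\}} J_{P}
```

Each half in J_vert chooses its own best mode independently, so the cost of a partition type is the sum of the per-block minima.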

[0031] A codec (e.g., an encoder) can have multiple partition types available. More partition types can be used to better fit the characteristics of the block signal. For example, using the partition types described with respect to FIG. 8B (herein referred to as "extended partitioning types") can provide compression performance gains over using only the partition types described with respect to FIG. 8A. Specifically, 3% performance gains have been observed when partition types that partition a coding block into two square sub-blocks and one rectangular sub-block are used, and an additional 1% performance gain has been observed when additionally using partition types that partition a coding block into four rectangular prediction units.

[0032] The improved compression gains, however, come at the cost of higher encoder complexity. Encoder complexity is a function of the number of partition types: the more partition types are available, the more extensive the partition search. For example, an encoder that has 10 partition types available may take 2.5 times longer to determine (e.g., by performing a partition search) an optimal partition type than an encoder that has four partition types.
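The 2.5 times figure is consistent with a simple linear model in which search time is proportional to the number of partition types evaluated. The sketch below assumes that model. It lists the ten partition types named in claims 1, 4, and 5 (upper-case spellings chosen for illustration), and it assumes, since this section does not say so, that the four-type encoder uses the none, horizontal, vertical, and split types.

```python
from typing import List

# Ten partition types named in the claims: partition-none, partition-split,
# and the eight "third" partition types of claims 4 and 5.
EXTENDED_TYPES: List[str] = [
    "PARTITION_NONE", "PARTITION_SPLIT",
    "PARTITION_HORZ", "PARTITION_VERT",
    "PARTITION_HORZ_A", "PARTITION_HORZ_B",
    "PARTITION_VERT_A", "PARTITION_VERT_B",
    "PARTITION_HORZ_4", "PARTITION_VERT_4",
]
# Assumed four-type baseline (hypothetical; not specified in this section).
BASIC_TYPES: List[str] = ["PARTITION_NONE", "PARTITION_HORZ",
                          "PARTITION_VERT", "PARTITION_SPLIT"]


def relative_search_time(num_types: int, baseline_types: int) -> float:
    """Search-time ratio under a linear model: one full evaluation per type."""
    return num_types / baseline_types


print(relative_search_time(len(EXTENDED_TYPES), len(BASIC_TYPES)))  # 2.5
```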

[0033] Implementations according to this disclosure can balance the performance gains associated with an increased number of partition types against the time required (i.e., by an encoder) to perform a partition search. A multi-stage coding block partition search can be used to reduce the search space (i.e., the number of partition types to be checked) while retaining the compression gains associated with the increased number of partition types. At each stage, some of the partition types are eliminated (i.e., not checked) and, accordingly, predictions based on the eliminated partition types are not performed. The multi-stage coding block partition search has been observed to improve encoder speed (i.e., to reduce the computation time associated with the partition search) by 40% at a minor compression loss of 0.15%.
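The two-stage decision recited in claims 1, 2, 8, and 9 can be sketched as follows. Stage one evaluates only the partition-none and partition-split types. If partition-none is preferred, stage two evaluates the third partition types and selects the minimum among partition-none and those types; otherwise partition-split is selected and the third types are never evaluated. This is a sketch under stated assumptions: `multi_stage_search`, `cost_of`, and `margin` are hypothetical names, per-type costs would come from a real RD search, and modeling "within a predefined range" as "NONE cost below SPLIT cost plus a margin" is an assumption.

```python
from typing import Callable, Dict, Sequence, Tuple

NONE, SPLIT = "PARTITION_NONE", "PARTITION_SPLIT"


def multi_stage_search(cost_of: Callable[[str], float],
                       third_types: Sequence[str],
                       margin: float = 0.0) -> Tuple[str, Dict[str, float]]:
    """Two-stage partition decision.

    Returns (selected partition type, {partition type: cost} for every type
    actually evaluated). cost_of(ptype) is a hypothetical callback returning
    the RD cost of coding the block with ptype.
    """
    # Stage 1: evaluate only the two square partition types.
    costs = {NONE: cost_of(NONE), SPLIT: cost_of(SPLIT)}
    # Criterion: margin == 0 means "NONE cost < SPLIT cost"; margin > 0 also
    # accepts a NONE cost that is within `margin` above the SPLIT cost.
    if costs[NONE] < costs[SPLIT] + margin:
        # Stage 2: check the third partition types; the selection is made
        # among NONE and those types (SPLIT is not a candidate here).
        candidates = {NONE: costs[NONE]}
        for ptype in third_types:
            cost = cost_of(ptype)
            costs[ptype] = cost
            candidates[ptype] = cost
        return min(candidates, key=candidates.get), costs
    # SPLIT is preferred: the third partition types are never evaluated.
    return SPLIT, costs


table = {NONE: 120.0, SPLIT: 150.0,
         "PARTITION_HORZ": 110.0, "PARTITION_VERT": 130.0}
third = ["PARTITION_HORZ", "PARTITION_VERT"]
selected, evaluated = multi_stage_search(table.__getitem__, third)
print(selected, len(evaluated))  # PARTITION_HORZ 4
```

The saving comes from the second branch: whenever splitting wins in stage one, the comparatively expensive evaluations of the third partition types are skipped entirely.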

[0034] Multi-stage coding block partition search is described herein first with reference to a system in which the teachings may be incorporated.

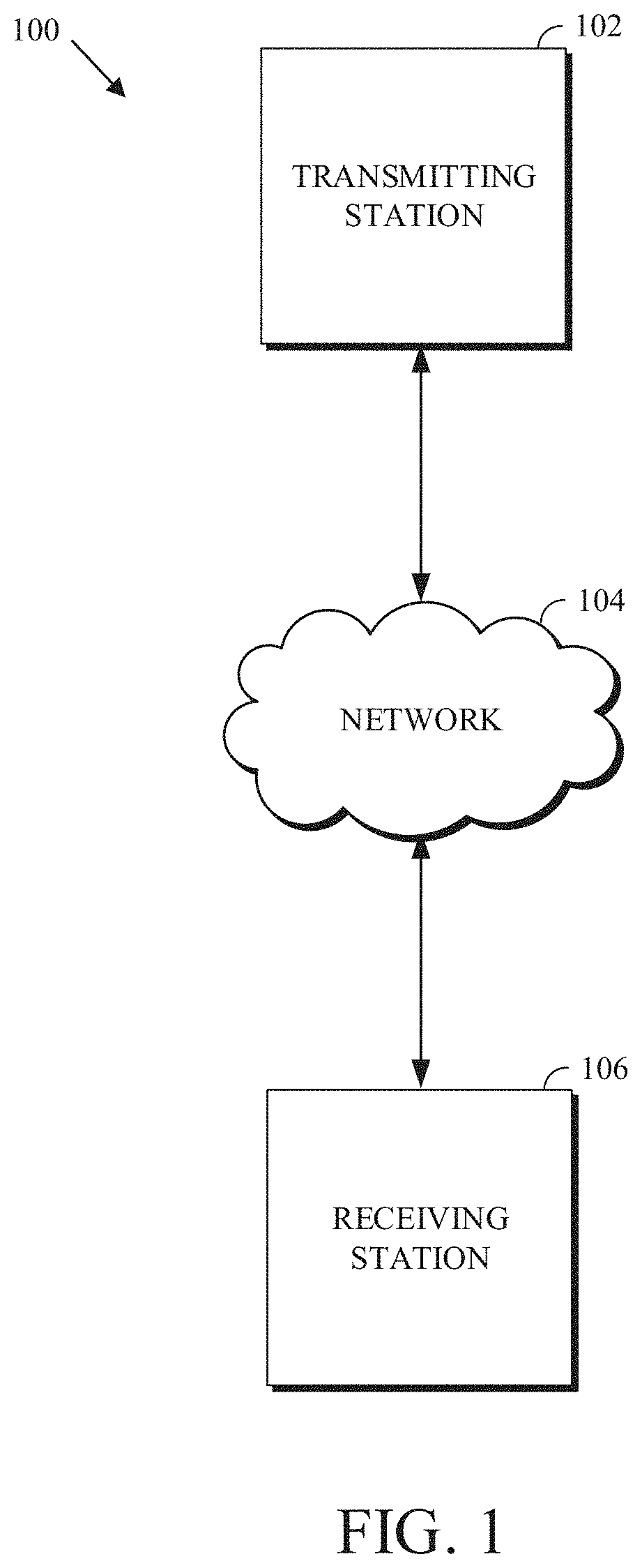

[0035] FIG. 1 is a schematic of a video encoding and decoding system 100. A transmitting station 102 can be, for example, a computer having an internal configuration of hardware, such as that described with respect to FIG. 2. However, other suitable implementations of the transmitting station 102 are possible. For example, the processing of the transmitting station 102 can be distributed among multiple devices.

[0036] A network 104 can connect the transmitting station 102 and a receiving station 106 for encoding and decoding of the video stream. Specifically, the video stream can be encoded in the transmitting station 102, and the encoded video stream can be decoded in the receiving station 106. The network 104 can be, for example, the Internet. The network 104 can also be a local area network (LAN), wide area network (WAN), virtual private network (VPN), cellular telephone network, or any other means of transferring the video stream from the transmitting station 102 to, in this example, the receiving station 106.

[0037] In one example, the receiving station 106 can be a computer having an internal configuration of hardware, such as that described with respect to FIG. 2. However, other suitable implementations of the receiving station 106 are possible. For example, the processing of the receiving station 106 can be distributed among multiple devices.

[0038] Other implementations of the video encoding and decoding system 100 are possible. For example, an implementation can omit the network 104. In another implementation, a video stream can be encoded and then stored for transmission at a later time to the receiving station 106 or any other device having memory. In one implementation, the receiving station 106 receives (e.g., via the network 104, a computer bus, and/or some communication pathway) the encoded video stream and stores the video stream for later decoding. In an example implementation, a real-time transport protocol (RTP) is used for transmission of the encoded video over the network 104. In another implementation, a transport protocol other than RTP (e.g., an HTTP-based video streaming protocol) may be used.

[0039] When used in a video conferencing system, for example, the transmitting station 102 and/or the receiving station 106 may include the ability to both encode and decode a video stream as described below. For example, the receiving station 106 could be a video conference participant who receives an encoded video bitstream from a video conference server (e.g., the transmitting station 102) to decode and view, and who further encodes and transmits its own video bitstream to the video conference server for decoding and viewing by other participants.

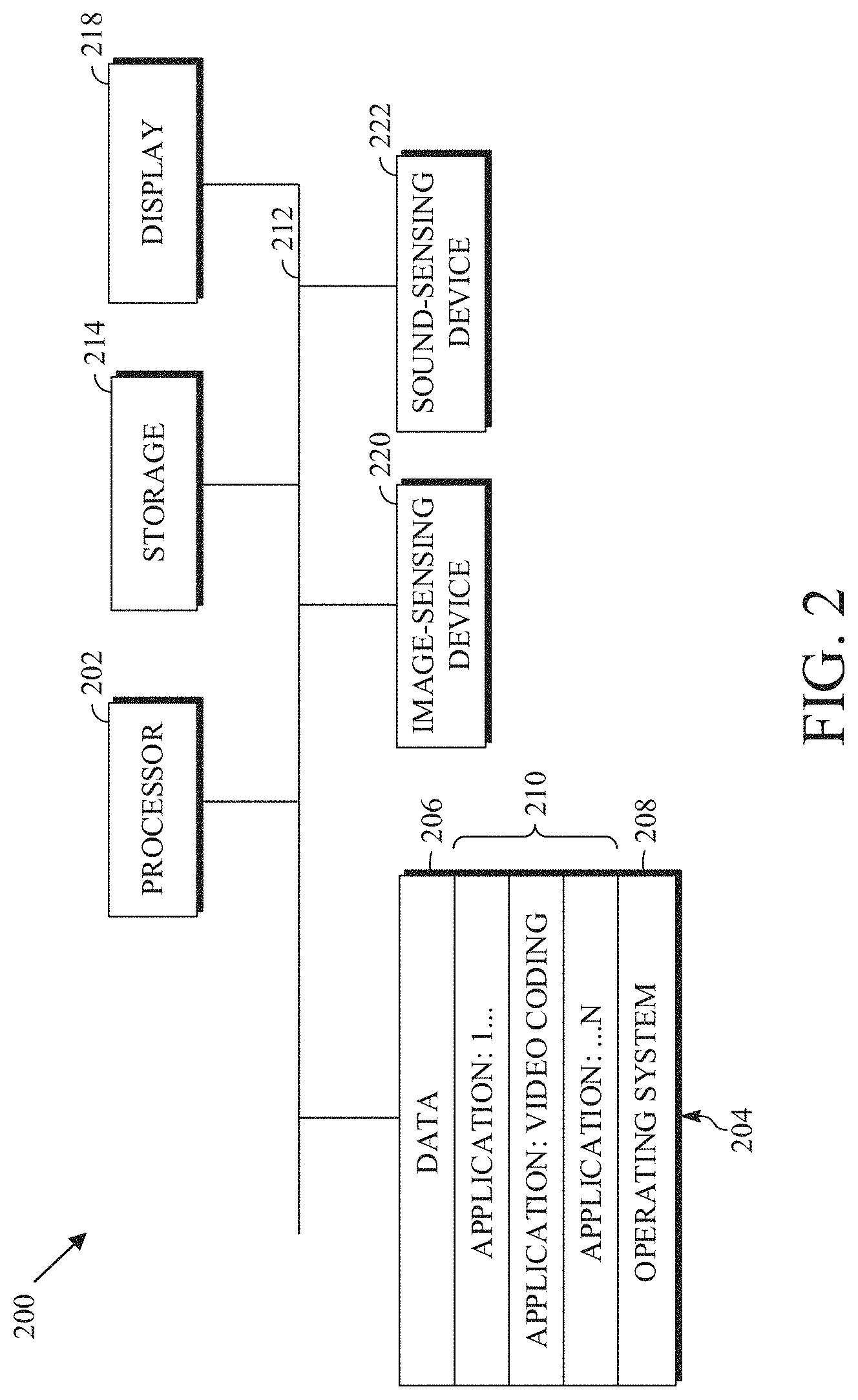

[0040] FIG. 2 is a block diagram of an example of a computing device 200 that can implement a transmitting station or a receiving station. For example, the computing device 200 can implement one or both of the transmitting station 102 and the receiving station 106 of FIG. 1. The computing device 200 can be in the form of a computing system including multiple computing devices, or in the form of a single computing device, for example, a mobile phone, a tablet computer, a laptop computer, a notebook computer, a desktop computer, and the like.

[0041] A CPU 202 in the computing device 200 can be a central processing unit. Alternatively, the CPU 202 can be any other type of device, or multiple devices, now-existing or hereafter developed, capable of manipulating or processing information. Although the disclosed implementations can be practiced with a single processor as shown (e.g., the CPU 202), advantages in speed and efficiency can be achieved by using more than one processor.

[0042] In an implementation, a memory 204 in the computing device 200 can be a read-only memory (ROM) device or a random-access memory (RAM) device. Any other suitable type of storage device can be used as the memory 204. The memory 204 can include code and data 206 that is accessed by the CPU 202 using a bus 212. The memory 204 can further include an operating system 208 and application programs 210, the application programs 210 including at least one program that permits the CPU 202 to perform the methods described herein. For example, the application programs 210 can include applications 1 through N, which further include a video coding application that performs the methods described herein. The computing device 200 can also include a secondary storage 214, which can, for example, be a memory card used with a computing device 200 that is mobile. Because the video communication sessions may contain a significant amount of information, they can be stored in whole or in part in the secondary storage 214 and loaded into the memory 204 as needed for processing.

[0043] The computing device 200 can also include one or more output devices, such as a display 218. The display 218 may be, in one example, a touch-sensitive display that combines a display with a touch-sensitive element that is operable to sense touch inputs. The display 218 can be coupled to the CPU 202 via the bus 212. Other output devices that permit a user to program or otherwise use the computing device 200 can be provided in addition to or as an alternative to the display 218. When the output device is or includes a display, the display can be implemented in various ways, including as a liquid crystal display (LCD); a cathode-ray tube (CRT) display; or a light-emitting diode (LED) display, such as an organic LED (OLED) display.

[0044] The computing device 200 can also include or be in communication with an image-sensing device 220, for example, a camera, or any other image-sensing device, now existing or hereafter developed, that can sense an image, such as the image of a user operating the computing device 200. The image-sensing device 220 can be positioned such that it is directed toward the user operating the computing device 200. In an example, the position and optical axis of the image-sensing device 220 can be configured such that the field of vision includes an area that is directly adjacent to the display 218 and from which the display 218 is visible.

[0045] The computing device 200 can also include or be in communication with a sound-sensing device 222, for example, a microphone, or any other sound-sensing device, now existing or hereafter developed, that can sense sounds near the computing device 200. The sound-sensing device 222 can be positioned such that it is directed toward the user operating the computing device 200 and can be configured to receive sounds, for example, speech or other utterances, made by the user while the user operates the computing device 200.

[0046] Although FIG. 2 depicts the CPU 202 and the memory 204 of the computing device 200 as being integrated into a single unit, other configurations can be utilized. The operations of the CPU 202 can be distributed across multiple machines (each machine having one or more processors) that can be coupled directly or across a local area or other network. The memory 204 can be distributed across multiple machines, such as a network-based memory or memory in multiple machines performing the operations of the computing device 200. Although depicted here as a single bus, the bus 212 of the computing device 200 can be composed of multiple buses. Further, the secondary storage 214 can be directly coupled to the other components of the computing device 200 or can be accessed via a network and can comprise a single integrated unit, such as a memory card, or multiple units, such as multiple memory cards. The computing device 200 can thus be implemented in a wide variety of configurations.

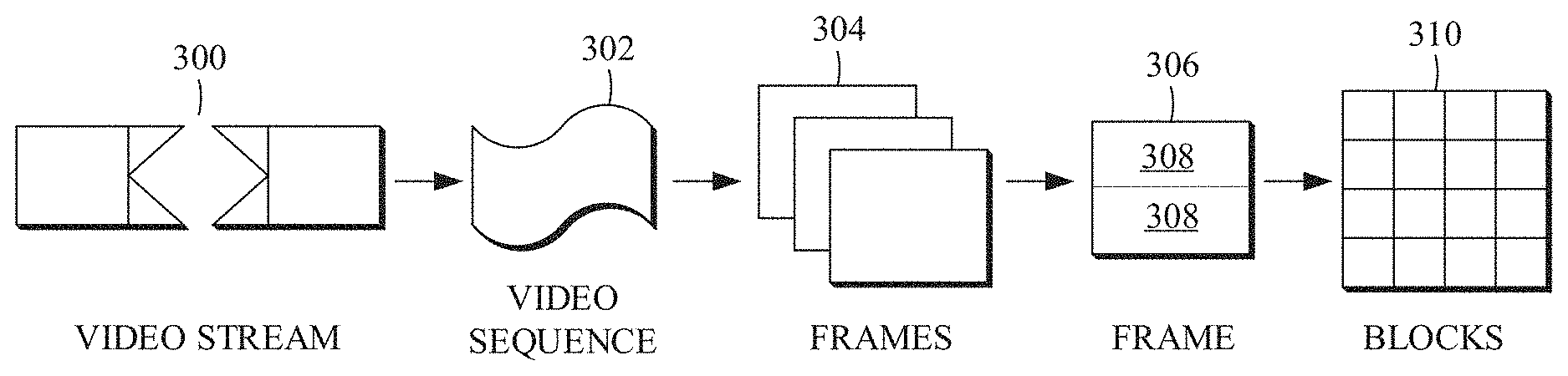

[0047] FIG. 3 is a diagram of an example of a video stream 300 to be encoded and subsequently decoded. The video stream 300 includes a video sequence 302. At the next level, the video sequence 302 includes a number of adjacent frames 304. While three frames are depicted as the adjacent frames 304, the video sequence 302 can include any number of adjacent frames 304. The adjacent frames 304 can then be further subdivided into individual frames, for example, a frame 306. At the next level, the frame 306 can be divided into a series of segments 308 or planes. The segments 308 can be subsets of frames that permit parallel processing, for example. The segments 308 can also be subsets of frames that can separate the video data into separate colors. For example, the frame 306 of color video data can include a luminance plane and two chrominance planes. The segments 308 may be sampled at different resolutions.

[0048] Whether or not the frame 306 is divided into the segments 308, the frame 306 may be further subdivided into blocks 310, which can contain data corresponding to, for example, 16×16 pixels in the frame 306. The blocks 310 can also be arranged to include data from one or more segments 308 of pixel data. The blocks 310 can also be of any other suitable size, such as 4×4 pixels, 8×8 pixels, 16×8 pixels, 8×16 pixels, 16×16 pixels, or larger.

[0049] FIG. 4 is a block diagram of an encoder 400 in accordance with implementations of this disclosure. The encoder 400 can be implemented, as described above, in the transmitting station 102, such as by providing a computer software program stored in memory, for example, the memory 204. The computer software program can include machine instructions that, when executed by a processor, such as the CPU 202, cause the transmitting station 102 to encode video data in manners described herein. The encoder 400 can also be implemented as specialized hardware included in, for example, the transmitting station 102. The encoder 400 has the following stages to perform the various functions in a forward path (shown by the solid connection lines) to produce an encoded or compressed bitstream 420 using the video stream 300 as input: an intra/inter-prediction stage 402, a transform stage 404, a quantization stage 406, and an entropy encoding stage 408. The encoder 400 may also include a reconstruction path (shown by the dotted connection lines) to reconstruct a frame for encoding of future blocks. In FIG. 4, the encoder 400 has the following stages to perform the various functions in the reconstruction path: a dequantization stage 410, an inverse transform stage 412, a reconstruction stage 414, and a loop filtering stage 416. Other structural variations of the encoder 400 can be used to encode the video stream 300.

[0050] When the video stream 300 is presented for encoding, the frame 306 can be processed in units of blocks. At the intra/inter-prediction stage 402, a block can be encoded using intra-frame prediction (also called intra-prediction) or inter-frame prediction (also called inter-prediction), or a combination of both. In any case, a prediction block can be formed. In the case of intra-prediction, all or part of a prediction block may be formed from samples in the current frame that have been previously encoded and reconstructed. In the case of inter-prediction, all or part of a prediction block may be formed from samples in one or more previously reconstructed reference frames determined using motion vectors.

[0051] Next, still referring to FIG. 4, the prediction block can be subtracted from the current block at the intra/inter-prediction stage 402 to produce a residual block (also called a residual). The transform stage 404 transforms the residual into transform coefficients in, for example, the frequency domain using block-based transforms. Such block-based transforms (i.e., transform types) include, for example, the Discrete Cosine Transform (DCT) and the Asymmetric Discrete Sine Transform (ADST). Other block-based transforms are possible. Further, combinations of different transforms may be applied to a single residual. In one example of application of a transform, the DCT transforms the residual block into the frequency domain where the transform coefficient values are based on spatial frequency. The lowest frequency (DC) coefficient is at the top-left of the matrix, and the highest frequency coefficient is at the bottom-right of the matrix. It is worth noting that the size of a prediction block, and hence the resulting residual block, may be different from the size of the transform block. For example, the prediction block may be split into smaller blocks to which separate transforms are applied.
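To illustrate how the DCT places the lowest-frequency (DC) coefficient at the top-left of the coefficient matrix, the following is a minimal Python sketch. It assumes an orthonormal, floating-point 2D DCT-II evaluated directly from its definition; the function name `dct_2d` is hypothetical, and practical codecs instead use fast integer approximations.

```python
import math


def dct_2d(block):
    """Orthonormal 2D DCT-II of a square block given as a list of rows."""
    n = len(block)

    def scale(k):
        # Orthonormal scaling: sqrt(1/n) for the DC term, sqrt(2/n) otherwise.
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    out = [[0.0] * n for _ in range(n)]
    for u in range(n):          # vertical frequency (output row)
        for v in range(n):      # horizontal frequency (output column)
            acc = 0.0
            for y in range(n):
                for x in range(n):
                    acc += (block[y][x]
                            * math.cos((2 * y + 1) * u * math.pi / (2 * n))
                            * math.cos((2 * x + 1) * v * math.pi / (2 * n)))
            out[u][v] = scale(u) * scale(v) * acc
    return out


flat = [[10] * 4 for _ in range(4)]
coeffs = dct_2d(flat)
```

A flat 4×4 residual of value 10 yields a single DC coefficient of 40 at `coeffs[0][0]`, with all other coefficients numerically zero. A residual that varies only from left to right keeps all of its energy in the top row of coefficients.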

[0052] The quantization stage 406 converts the transform coefficients into discrete quantum values, which are referred to as quantized transform coefficients, using a quantizer value or a quantization level. For example, the transform coefficients may be divided by the quantizer value and truncated. The quantized transform coefficients are then entropy encoded by the entropy encoding stage 408. Entropy coding may be performed using any number of techniques, including token and binary trees. The entropy-encoded coefficients, together with other information used to decode the block (which may include, for example, the type of prediction used, transform type, motion vectors, and quantizer value), are then output to the compressed bitstream 420. The information to decode the block may be entropy coded into block, frame, slice, and/or section headers within the compressed bitstream 420. The compressed bitstream 420 can also be referred to as an encoded video stream or encoded video bitstream; these terms will be used interchangeably herein.
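The divide-and-truncate quantizer described above, together with the multiply-by-quantizer dequantization that is later performed at the decoder, can be sketched as follows. The function names and the single scalar quantizer value `q` are illustrative assumptions; real quantizers may use per-coefficient step sizes and rounding offsets.

```python
def quantize(coeffs, q):
    """Divide each transform coefficient by the quantizer value and truncate toward zero."""
    return [int(c / q) for c in coeffs]


def dequantize(levels, q):
    """Approximate the original coefficients by multiplying by the quantizer value."""
    return [level * q for level in levels]


levels = quantize([40.0, -13.0, 7.5, 2.0], 8)
restored = dequantize(levels, 8)
```

Here `levels` is `[5, -1, 0, 0]` and `restored` is `[40, -8, 0, 0]`. Truncation discards small coefficients entirely, which is where both the loss and much of the compression come from.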

[0053] The reconstruction path in FIG. 4 (shown by the dotted connection lines) can be used to ensure that both the encoder 400 and a decoder 500 (described below) use the same reference frames and blocks to decode the compressed bitstream 420. The reconstruction path performs functions that are similar to functions that take place during the decoding process and that are discussed in more detail below, including dequantizing the quantized transform coefficients at the dequantization stage 410 and inverse transforming the dequantized transform coefficients at the inverse transform stage 412 to produce a derivative residual block (also called a derivative residual). At the reconstruction stage 414, the prediction block that was predicted at the intra/inter-prediction stage 402 can be added to the derivative residual to create a reconstructed block. The loop filtering stage 416 can be applied to the reconstructed block to reduce distortion, such as blocking artifacts.

[0054] Other variations of the encoder 400 can be used to encode the compressed bitstream 420. For example, a non-transform based encoder 400 can quantize the residual signal directly without the transform stage 404 for certain blocks or frames. In another implementation, an encoder 400 can have the quantization stage 406 and the dequantization stage 410 combined into a single stage.
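The purpose of the reconstruction path is that the encoder rebuilds each block from exactly the data the decoder will receive. A minimal sketch of that idea follows. It uses the non-transform variant mentioned above, in which the residual is quantized directly, and hypothetical function names operating on flat lists of pixel values.

```python
def encode_block(current, prediction, q):
    """Forward path without a transform: residual, then truncating quantization."""
    residual = [c - p for c, p in zip(current, prediction)]
    return [int(r / q) for r in residual]


def reconstruct(levels, prediction, q):
    """Reconstruction path, identical at the encoder and the decoder."""
    derivative_residual = [level * q for level in levels]
    return [p + r for p, r in zip(prediction, derivative_residual)]


prediction = [96, 96, 96, 96]
levels = encode_block([100, 104, 98, 90], prediction, 4)
recon = reconstruct(levels, prediction, 4)
```

The reconstructed block `[100, 104, 96, 92]` differs from the source `[100, 104, 98, 90]`, but because both sides compute it from the same quantized levels and prediction, later predictions at the encoder and decoder stay in sync.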

[0055] FIG. 5 is a block diagram of a decoder 500 in accordance with implementations of this disclosure. The decoder 500 can be implemented in the receiving station 106, for example, by providing a computer software program stored in the memory 204. The computer software program can include machine instructions that, when executed by a processor, such as the CPU 202, cause the receiving station 106 to decode video data in the manners described below. The decoder 500 can also be implemented in hardware included in, for example, the transmitting station 102 or the receiving station 106.

[0056] The decoder 500, similar to the reconstruction path of the encoder 400 discussed above, includes in one example the following stages to perform various functions to produce an output video stream 516 from the compressed bitstream 420: an entropy decoding stage 502, a dequantization stage 504, an inverse transform stage 506, an intra/inter-prediction stage 508, a reconstruction stage 510, a loop filtering stage 512, and a post filtering stage 514. Other structural variations of the decoder 500 can be used to decode the compressed bitstream 420.

[0057] When the compressed bitstream 420 is presented for decoding, the data elements within the compressed bitstream 420 can be decoded by the entropy decoding stage 502 to produce a set of quantized transform coefficients. The dequantization stage 504 dequantizes the quantized transform coefficients (e.g., by multiplying the quantized transform coefficients by the quantizer value), and the inverse transform stage 506 inverse transforms the dequantized transform coefficients using the selected transform type to produce a derivative residual that can be identical to that created by the inverse transform stage 412 in the encoder 400. Using header information decoded from the compressed bitstream 420, the decoder 500 can use the intra/inter-prediction stage 508 to create the same prediction block as was created in the encoder 400, for example, at the intra/inter-prediction stage 402. At the reconstruction stage 510, the prediction block can be added to the derivative residual to create a reconstructed block. The loop filtering stage 512 can be applied to the reconstructed block to reduce blocking artifacts. Other filtering can be applied to the reconstructed block. In an example, the post filtering stage 514 is applied to the reconstructed block to reduce blocking distortion, and the result is output as an output video stream 516. The output video stream 516 can also be referred to as a decoded video stream; these terms will be used interchangeably herein.

[0058] Other variations of the decoder 500 can be used to decode the compressed bitstream 420. For example, the decoder 500 can produce the output video stream 516 without the post filtering stage 514. In some implementations of the decoder 500, the post filtering stage 514 is applied after the loop filtering stage 512. The loop filtering stage 512 can include an optional deblocking filtering stage. Additionally, or alternatively, the encoder 400 includes an optional deblocking filtering stage in the loop filtering stage 416.

[0059] A codec can use multiple transform types. For example, a transform type can be the transform type used by the transform stage 404 of FIG. 4 to generate the transform block. Correspondingly, the transform type (i.e., an inverse transform type) can be the transform type to be used by the inverse transform stage 506 of FIG. 5. Available transform types can include a one-dimensional Discrete Cosine Transform (1D DCT) or its approximation, a one-dimensional Discrete Sine Transform (1D DST) or its approximation, a two-dimensional DCT (2D DCT) or its approximation, a two-dimensional DST (2D DST) or its approximation, and an identity transform. Other transform types can be available. In an example, a one-dimensional transform (1D DCT or 1D DST) can be applied in one dimension (e.g., row or column), and the identity transform can be applied in the other dimension.
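As an illustration of applying a one-dimensional transform in one direction and the identity transform in the other, the sketch below runs an orthonormal 1D DCT-II down each column and leaves the row direction untransformed. The function names are hypothetical, and floating-point arithmetic is assumed for clarity.

```python
import math


def dct_1d(vec):
    """Orthonormal 1D DCT-II of a list of numbers."""
    n = len(vec)
    out = []
    for k in range(n):
        s = sum(vec[i] * math.cos((2 * i + 1) * k * math.pi / (2 * n)) for i in range(n))
        out.append(s * (math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)))
    return out


def dct_columns_identity_rows(block):
    """Apply the 1D DCT to each column; the identity transform is applied along rows."""
    n_rows, n_cols = len(block), len(block[0])
    cols = [dct_1d([block[r][c] for r in range(n_rows)]) for c in range(n_cols)]
    # Reassemble so that out[k][c] is frequency k of column c.
    return [[cols[c][k] for c in range(n_cols)] for k in range(n_rows)]


out = dct_columns_identity_rows([[1, 2, 3, 4]] * 4)
```

Because each column of that block is constant, all of its energy lands in the first row of coefficients (approximately `[2, 4, 6, 8]`), while the identity direction keeps the four columns distinct.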

[0060] In the cases where a 1D transform (e.g., 1D DCT, 1D DST) is used (e.g., 1D DCT is applied to columns (or rows, respectively) of a transform block), the quantized coefficients can be coded by using a row-by-row (i.e., raster) scanning order or a column-by-column scanning order. In the cases where 2D transforms (e.g., 2D DCT) are used, a different scanning order may be used to code the quantized coefficients. Different templates can be used to derive contexts for coding the non-zero flags of a non-zero map based on the types of transforms used. As such, in an implementation, the template can be selected based on the transform type used to generate the transform block. As indicated above, examples of a transform type include: 1D DCT applied to rows (or columns) and an identity transform applied to columns (or rows); 1D DST applied to rows (or columns) and an identity transform applied to columns (or rows); 1D DCT applied to rows (or columns) and 1D DST applied to columns (or rows); a 2D DCT; and a 2D DST. Other combinations of transforms can comprise a transform type.
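The scanning orders mentioned above can be expressed as lists of (row, column) positions. In the sketch below, the raster and column-by-column orders follow the text directly; the zig-zag order is only an assumed example of "a different scanning order" for 2D transforms and is not necessarily the order used by any particular codec. All function names are hypothetical.

```python
def raster_scan(n):
    """Row-by-row order of (row, column) positions in an n x n block."""
    return [(r, c) for r in range(n) for c in range(n)]


def column_scan(n):
    """Column-by-column order of (row, column) positions."""
    return [(r, c) for c in range(n) for r in range(n)]


def zigzag_scan(n):
    """Assumed example of a 2D scan: zig-zag over the anti-diagonals."""
    order = []
    for d in range(2 * n - 1):
        diagonal = [(r, d - r) for r in range(n) if 0 <= d - r < n]
        # Alternate the traversal direction on successive anti-diagonals.
        order.extend(diagonal if d % 2 else reversed(diagonal))
    return order


def scan(block, order):
    """Serialize the quantized coefficients of a 2D block in the given order."""
    return [block[r][c] for r, c in order]
```

For example, `scan([[1, 2], [3, 4]], column_scan(2))` yields `[1, 3, 2, 4]`, whereas the raster order yields `[1, 2, 3, 4]`.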

[0061] FIG. 6 is a block diagram of a representation of a portion 600 of a frame, such as the frame 306 of FIG. 3, according to implementations of this disclosure. As shown, the portion 600 of the frame includes four 64×64 blocks 610, which may be referred to as superblocks, in two rows and two columns in a matrix or Cartesian plane. A superblock can have a larger or a smaller size. For example, a superblock can be 128×128. A superblock can also be referred to as a coding tree block (CTB). While FIG. 6 is explained with respect to a superblock of size 64×64, the description is easily extendable to larger (e.g., 128×128) or smaller superblock sizes.

[0062] In an example, a superblock can be a basic or maximum coding unit (CU). Each superblock can include four 32×32 blocks 620. Each 32×32 block 620 can include four 16×16 blocks 630. Each 16×16 block 630 can include four 8×8 blocks 640. Each 8×8 block 640 can include four 4×4 blocks 650. Each 4×4 block 650 can include 16 pixels, which can be represented in four rows and four columns in each respective block in the Cartesian plane or matrix. The pixels can include information representing an image captured in the frame, such as luminance information, color information, and location information. In an example, a block, such as a 16×16-pixel block as shown, can include a luminance block 660, which can include luminance pixels 662; and two chrominance blocks 670/680, such as a U or Cb chrominance block 670, and a V or Cr chrominance block 680. The chrominance blocks 670/680 can include chrominance pixels 690. For example, the luminance block 660 can include 16×16 luminance pixels 662, and each chrominance block 670/680 can include 8×8 chrominance pixels 690, as shown. Although one arrangement of blocks is shown, any arrangement can be used. Although FIG. 6 shows N×N blocks, in some implementations, N×M blocks, where N≠M, can be used. For example, 32×64 blocks, 64×32 blocks, 16×32 blocks, 32×16 blocks, or any other size blocks can be used. In some implementations, N×2N blocks, 2N×N blocks, or a combination thereof can be used.

[0063] In some implementations, video coding can include ordered block-level coding. Ordered block-level coding can include coding blocks of a frame in an order, such as raster-scan order, wherein blocks can be identified and processed starting with a block in the upper left corner of the frame, or a portion of the frame, and proceeding along rows from left to right and from the top row to the bottom row, identifying each block in turn for processing. For example, the superblock in the top row and left column of a frame can be the first block coded, and the superblock immediately to the right of the first block can be the second block coded. The second row from the top can be the second row coded, such that the superblock in the left column of the second row can be coded after the superblock in the rightmost column of the first row.
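The raster-scan order of superblocks can be sketched as follows. The sketch assumes 64×64 superblocks and, as an illustrative assumption not stated in the text, counts a partial superblock at the right or bottom edge of a frame as its own position in the order. The function name is hypothetical.

```python
def superblock_raster_order(frame_width, frame_height, sb_size=64):
    """Top-left (row, column) pixel positions of superblocks in raster-scan order."""
    cols = -(-frame_width // sb_size)   # ceiling division: partial superblocks count
    rows = -(-frame_height // sb_size)
    return [(r * sb_size, c * sb_size) for r in range(rows) for c in range(cols)]


order = superblock_raster_order(128, 128)
```

For a 128×128 frame, `order` is `[(0, 0), (0, 64), (64, 0), (64, 64)]`: the last superblock of the first row, at (0, 64), is followed by the first superblock of the second row, at (64, 0).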

[0064] In an example, coding a block can include using quad-tree coding, which can include coding smaller block units within a block in raster-scan order. The 64×64 superblock shown in the bottom-left corner of the portion of the frame shown in FIG. 6, for example, can be coded using quad-tree coding in which the top-left 32×32 block can be coded, then the top-right 32×32 block can be coded, then the bottom-left 32×32 block can be coded, and then the bottom-right 32×32 block can be coded. Each 32×32 block can be coded using quad-tree coding in which the top-left 16×16 block can be coded, then the top-right 16×16 block can be coded, then the bottom-left 16×16 block can be coded, and then the bottom-right 16×16 block can be coded. Each 16×16 block can be coded using quad-tree coding in which the top-left 8×8 block can be coded, then the top-right 8×8 block can be coded, then the bottom-left 8×8 block can be coded, and then the bottom-right 8×8 block can be coded. Each 8×8 block can be coded using quad-tree coding in which the top-left 4×4 block can be coded, then the top-right 4×4 block can be coded, then the bottom-left 4×4 block can be coded, and then the bottom-right 4×4 block can be coded. In some implementations, 8×8 blocks can be omitted for a 16×16 block, and the 16×16 block can be coded using quad-tree coding in which the top-left 4×4 block can be coded, and then the other 4×4 blocks in the 16×16 block can be coded in raster-scan order.
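The recursive top-left, top-right, bottom-left, bottom-right order can be sketched as a short recursion. The function name and the (x, y) coordinate convention are illustrative assumptions.

```python
def quadtree_order(x, y, size, min_size):
    """Return the top-left (x, y) positions of min_size blocks in quad-tree coding order."""
    if size == min_size:
        return [(x, y)]
    half = size // 2
    order = []
    # Visit the top-left, top-right, bottom-left, then bottom-right quadrants.
    for dx, dy in ((0, 0), (half, 0), (0, half), (half, half)):
        order.extend(quadtree_order(x + dx, y + dy, half, min_size))
    return order


order = quadtree_order(0, 0, 16, 4)
```

The first five positions are `(0, 0), (4, 0), (0, 4), (4, 4), (8, 0)`. Unlike a plain raster scan of the 4×4 blocks, which would visit (8, 0) third, the quad-tree order completes the whole top-left 8×8 block first.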

[0065] In an example, video coding can include compressing the information included in an original, or input, frame by omitting some of the information in the original frame from a corresponding encoded frame. For example, coding can include reducing spectral redundancy, reducing spatial redundancy, reducing temporal redundancy, or a combination thereof.

[0066] In an example, reducing spectral redundancy can include using a color model based on a luminance component (Y) and two chrominance components (U and V or Cb and Cr), which can be referred to as the YUV or YCbCr color model or color space. Using the YUV color model can include using a relatively large amount of information to represent the luminance component of a portion of a frame and using a relatively small amount of information to represent each corresponding chrominance component for the portion of the frame. For example, a portion of a frame can be represented by a high-resolution luminance component, which can include a 16×16 block of pixels, and by two lower resolution chrominance components, each of which represents the portion of the frame as an 8×8 block of pixels. A pixel can indicate a value (e.g., a value in the range from 0 to 255) and can be stored or transmitted using, for example, eight bits. Although this disclosure is described with reference to the YUV color model, any color model can be used.
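The saving from the lower-resolution chrominance components in the example above can be quantified directly. A minimal sketch, assuming 8-bit samples and chrominance components subsampled by two in each direction as in the 16×16 / 8×8 example; the function names are hypothetical.

```python
def yuv_region_bytes(luma_width, luma_height, bits_per_sample=8):
    """Bytes for one luminance block plus two half-resolution chrominance blocks."""
    luma = luma_width * luma_height
    chroma = 2 * (luma_width // 2) * (luma_height // 2)
    return (luma + chroma) * bits_per_sample // 8


def full_res_bytes(width, height, bits_per_sample=8):
    """Bytes if all three components were kept at full resolution."""
    return 3 * width * height * bits_per_sample // 8
```

For the 16×16 example, 256 luminance samples plus 2 × 64 chrominance samples give 384 bytes, half of the 768 bytes needed with three full-resolution components.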

[0067] Reducing spatial redundancy can include transforming a block into the frequency domain as described above. For example, a unit of an encoder, such as the transform stage 404 of FIG. 4, can perform a DCT using transform coefficient values based on spatial frequency.

[0068] Reducing temporal redundancy can include using similarities between frames to encode a frame using a relatively small amount of data based on one or more reference frames, which can be previously encoded, decoded, and reconstructed frames of the video stream. For example, a block or a pixel of a current frame can be similar to a spatially corresponding block or pixel of a reference frame. A block or a pixel of a current frame can be similar to a block or a pixel of a reference frame at a different spatial location. As such, reducing temporal redundancy can include generating motion information indicating the spatial difference (e.g., a translation between the location of the block or the pixel in the current frame and the corresponding location of the block or the pixel in the reference frame).

[0069] Reducing temporal redundancy can include identifying a block or a pixel in a reference frame, or a portion of the reference frame, that corresponds with a current block or pixel of a current frame. For example, a reference frame, or a portion of a reference frame, which can be stored in memory, can be searched for the best block or pixel to use for encoding a current block or pixel of the current frame. For example, the search may identify the block of the reference frame for which the difference in pixel values between the reference block and the current block is minimized; this search can be referred to as motion searching. The portion of the reference frame searched can be limited. For example, the portion of the reference frame searched, which can be referred to as the search area, can include a limited number of rows of the reference frame. In an example, identifying the reference block can include calculating a cost function, such as a sum of absolute differences (SAD), between the pixels of the blocks in the search area and the pixels of the current block.

[0070] The spatial difference between the location of the reference block in the reference frame and the current block in the current frame can be represented as a motion vector. The difference in pixel values between the reference block and the current block can be referred to as differential data, residual data, or as a residual block. In some implementations, generating motion vectors can be referred to as motion estimation, and a pixel of a current block can be indicated based on location using Cartesian coordinates such as $f_{x,y}$. Similarly, a pixel of the search area of the reference frame can be indicated based on a location using Cartesian coordinates such as $r_{x,y}$. A motion vector (MV) for the current block can be determined based on, for example, a SAD between the pixels of the current frame and the corresponding pixels of the reference frame.
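The SAD-based search described in the two preceding paragraphs can be sketched as an exhaustive (full) search over a square window. The window size, the (dx, dy) motion-vector convention, and the function names are assumptions for illustration; practical encoders typically use faster search patterns.

```python
def sad(cur, ref, bx, by, rx, ry, n):
    """SAD between the n x n block of cur at (bx, by) and the n x n block of ref at (rx, ry)."""
    return sum(abs(cur[by + j][bx + i] - ref[ry + j][rx + i])
               for j in range(n) for i in range(n))


def full_search(cur, ref, bx, by, n, search_range):
    """Exhaustive motion search within +/- search_range; returns ((dx, dy), best_sad)."""
    height, width = len(ref), len(ref[0])
    best = None
    for dy in range(-search_range, search_range + 1):
        for dx in range(-search_range, search_range + 1):
            rx, ry = bx + dx, by + dy
            # Only consider candidate blocks lying fully inside the reference frame.
            if 0 <= rx <= width - n and 0 <= ry <= height - n:
                cost = sad(cur, ref, bx, by, rx, ry, n)
                if best is None or cost < best[1]:
                    best = ((dx, dy), cost)
    return best


ref = [[8 * y + x for x in range(8)] for y in range(8)]
# The current frame is the reference content shifted by (2, 1).
cur = [[ref[y + 1][x + 2] if y + 1 < 8 and x + 2 < 8 else 0 for x in range(8)]
       for y in range(8)]
mv, best_sad = full_search(cur, ref, 2, 2, 3, 2)
```

For this synthetic example, the search recovers the motion vector `(2, 1)` with a SAD of 0.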

[0071] As mentioned above, a superblock can be coded using quad-tree coding. FIG. 7 is a block diagram of an example 700 of a quad-tree representation of a block according to implementations of this disclosure. The example 700 includes a block 702. As mentioned above, the block 702 can be referred to as a superblock or a CTB. The example 700 illustrates a partition of the block 702. However, the block 702 can be partitioned differently, such as by an encoder (e.g., the encoder 400 of FIG. 4).

[0072] The example 700 illustrates that the block 702 is partitioned into four blocks, namely, blocks 702-1, 702-2, 702-3, and 702-4. The block 702-2 is further partitioned into blocks 702-5, 702-6, 702-7, and 702-8. As such, if, for example, the size of the block 702 is N×N (e.g., 128×128), then the blocks 702-1, 702-2, 702-3, and 702-4 are each of size N/2×N/2 (e.g., 64×64), and the blocks 702-5, 702-6, 702-7, and 702-8 are each of size N/4×N/4 (e.g., 32×32). If a block is partitioned, it is partitioned into four equally sized, non-overlapping square sub-blocks.

[0073] A quad-tree data representation is used to describe how the block 702 is partitioned into sub-blocks, such as blocks 702-1, 702-2, 702-3, 702-4, 702-5, 702-6, 702-7, and 702-8. A quad-tree 703 of the partition of the block 702 is shown. Each node of the quad-tree 703 is assigned a flag of "1" if the node is further split into four sub-nodes and assigned a flag of "0" if the node is not split. The flag can be referred to as a split bit (e.g., 1) or a stop bit (e.g., 0) and is coded in a compressed bitstream. In a quad-tree, a node either has four child nodes or has no child nodes. A node that has no child nodes corresponds to a block that is not split further. Each of the child nodes of a split block corresponds to a sub-block.

[0074] In the quad-tree 703, each node corresponds to a sub-block of the block 702. The sub-block is shown between parentheses. For example, a node 704-1, which has a value of 0, corresponds to the block 702-1.

[0075] A root node 704-0 corresponds to the block 702. As the block 702 is split into four sub-blocks, the value of the root node 704-0 is the split bit (e.g., 1). At an intermediate level, the flags indicate whether a sub-block of the block 702 is further split into four sub-sub-blocks. In this case, a node 704-2 includes a flag of "1" because the block 702-2 has been split into the blocks 702-5, 702-6, 702-7, and 702-8. Each of nodes 704-1, 704-3, and 704-4 includes a flag of "0" because the corresponding blocks are not split. As nodes 704-5, 704-6, 704-7, and 704-8 are at the bottom level, no flag of "0" or "1" is necessary for those nodes because they correspond to CUs that are not split further. That the blocks 702-5, 702-6, 702-7, and 702-8 are not split further can thus be inferred from the absence of additional flags corresponding to these blocks.

[0076] The quad-tree data representation for the quad-tree 703 can be represented by the binary data of "10100," where each bit represents a node 704 of the quad-tree 703. The binary data indicates the partitioning of the block 702 to the encoder and decoder. The encoder can encode the binary data in a compressed bitstream, such as the compressed bitstream 420 of FIG. 4, in a case where the encoder needs to communicate the binary data to a decoder, such as the decoder 500 of FIG. 5.
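The split/stop flags of the quad-tree 703 can be produced and parsed with a short recursion. The sketch below assumes a depth-first (pre-order) traversal and assumes that nodes at a fixed maximum depth carry no flag, as for the bottom-level nodes in the example. The nested-list tree representation and the function names are hypothetical.

```python
def encode_quadtree(node, depth, max_depth):
    """Pre-order split (1) / stop (0) flags.

    node is None for an unsplit block, or a list of four child nodes.
    """
    if depth == max_depth:
        return ""  # bottom-level nodes cannot split, so no flag is coded
    if node is None:
        return "0"
    return "1" + "".join(encode_quadtree(child, depth + 1, max_depth) for child in node)


def decode_quadtree(bits, depth, max_depth, pos=0):
    """Inverse of encode_quadtree; returns (node, next_bit_position)."""
    if depth == max_depth:
        return None, pos
    if bits[pos] == "0":
        return None, pos + 1
    pos += 1
    children = []
    for _ in range(4):
        child, pos = decode_quadtree(bits, depth + 1, max_depth, pos)
        children.append(child)
    return children, pos


# Block 702: only the second sub-block (702-2) is split again.
tree_703 = [None, [None, None, None, None], None, None]
bits = encode_quadtree(tree_703, 0, 2)
```

With a maximum depth of 2, `bits` is `"10100"`, and decoding those five bits recovers the same tree.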

[0077] The blocks corresponding to the leaf nodes of the quad-tree 703 can be used as the bases for prediction. That is, prediction can be performed for each of the blocks 702-1, 702-5, 702-6, 702-7, 702-8, 702-3, and 702-4, referred to herein as coding blocks. As mentioned with respect to FIG. 6, the coding block can be a luminance block or a chrominance block. It is noted that, in an example, the superblock partitioning can be determined with respect to luminance blocks. The same partition can be used with the chrominance blocks.

[0078] A prediction type (e.g., intra- or inter-prediction) is determined at the coding block (e.g., a block 702-1, 702-5, 702-6, 702-7, 702-8, 702-3, or 702-4) level. That is, a coding block is the decision point for prediction.

[0079] FIG. 8A is a block diagram of an example 800 of recursive partitioning of a coding block. The example 800 includes a coding block 802. Inter- or intra-prediction is performed with respect to the coding block 802. That is, the coding block 802 can be partitioned (e.g., divided, split, or otherwise partitioned) into one or more prediction units (PUs) according to a partition type, such as one of the partition types described herein. Each PU can be predicted using inter- or intra-prediction. In an example, the process described with respect to the example 800 can be performed (e.g., implemented) by an intra/inter-prediction stage, such as the intra/inter-prediction stage 402 of the encoder 400 of FIG. 4.

[0080] The coding block 802 can be a chrominance block. The coding block 802 can be a luminance block. In an example, a partition is determined for a luminance block, and a corresponding chrominance block uses the same partition as that of the luminance block. In another example, a partition of a chrominance block can be determined independently of the partition of a luminance block.

[0081] The example 800 illustrates a recursive partition search of the coding block 802. The recursive search is performed to determine the partition that results in the optimal (i.e., minimal) rate-distortion (RD) cost. An RD cost can include the cost of encoding both the luminance and the chrominance blocks corresponding to a block.

[0082] The example 800 illustrates four partition types that may be available at an encoder. A partition type 804 (also referred to herein as the PARTITION_SPLIT partition type and partition-split partition type) splits the coding block 802 into four equally sized square sub-blocks. For example, if the coding block 802 is of size N×N, then each of the four sub-blocks of the PARTITION_SPLIT partition type is of size N/2×N/2. None of the four sub-blocks resulting from the partition type 804 is itself a prediction unit/block.

[0083] A partition type 806 (also referred to herein as the PARTITION_VERT partition type) splits the coding block 802 into two adjacent rectangular prediction units, each of size N×N/2. A partition type 808 (also referred to herein as the PARTITION_HORZ partition type) splits the coding block 802 into two adjacent rectangular prediction units, each of size N/2×N. A partition type 810 (also referred to herein as the PARTITION_NONE partition type and partition-none partition type) uses one prediction unit for the coding block 802 such that the prediction unit has the same size (i.e., N×N) as the coding block 802.

[0084] For brevity, a partition type may simply be referred to herein by its name only. For example, instead of using "the PARTITION_VERT partition type," "the PARTITION_VERT" may be used herein. As another example, instead of "the partition-none partition type," "the partition-none" may be used. Additionally, uppercase or lowercase letters may be used to refer to partition type names. As such, "PARTITION_VERT" and "partition-vert" refer to the same partition type.

[0085] Except for the partition type 804, none of the other partitions can be split further. As such, the partition types 806-810 can be considered end points. Each of the sub-blocks of a partition (according to a partition type) that is not an end point can be further partitioned using the available partition types. As such, partitioning can be further performed for square coding blocks. The sub-blocks of a partition type that is an end point are not partitioned further. As such, further partitioning is possible only for the sub-blocks of the PARTITION_SPLIT partition type.

[0086] As mentioned above, to determine the minimal RD cost for the coding block 802, the coding block is partitioned according to the available partition types, and a respective cost (e.g., an RD cost) of encoding the block based on each partition is determined. The partition type resulting in the smallest RD cost is selected as the partition type to be used for partitioning and encoding the coding block.

[0087] The RD cost of a partition is the sum of the RD costs of each of the sub-blocks of the partition. For example, the RD cost associated with the PARTITION_VERT (i.e., the partition type 806) is the sum of the RD cost of a sub-block 806A and the RD cost of a sub-block 806B. The sub-blocks 806A and 806B are prediction units. In an example, identifiers, such as identifiers 0, 1, 2, and 4, can be associated, respectively, with the PARTITION_NONE, PARTITION_HORZ, PARTITION_VERT, and PARTITION_SPLIT. Other identifiers are possible. Other ways of communicating the partition type to a decoder are possible.
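The additive structure of partition costs, and the selection of the cheapest partition type, can be sketched as follows. The text does not specify how an individual RD cost is formed; the sketch assumes the common Lagrangian form J = D + λ·R (distortion plus a multiplier times the rate in bits). The function names and the numbers in the example are made up for illustration.

```python
def rd_cost(distortion, rate_bits, lam):
    """Assumed Lagrangian RD cost: J = D + lambda * R."""
    return distortion + lam * rate_bits


def partition_rd_cost(sub_blocks, lam):
    """RD cost of a partition: the sum of the RD costs of its sub-blocks.

    sub_blocks is a list of (distortion, rate_bits) pairs, one per sub-block.
    """
    return sum(rd_cost(d, r, lam) for d, r in sub_blocks)


def select_partition(costs):
    """Return the partition type name with the smallest RD cost."""
    return min(costs, key=costs.get)


vert_cost = partition_rd_cost([(100, 20), (80, 30)], 2.0)  # e.g., sub-blocks 806A and 806B
best = select_partition({"PARTITION_NONE": 300.0, "PARTITION_VERT": vert_cost})
```

Here the two hypothetical sub-blocks cost 140 and 140, so `vert_cost` is 280.0 and PARTITION_VERT is selected over a PARTITION_NONE cost of 300.0.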

[0088] To determine an RD cost associated with a prediction block, an encoder can predict the prediction block using at least some of the available prediction modes (i.e., available inter- and intra-prediction modes). In an example, for each of the prediction modes, a corresponding residual is determined, transformed, and quantized to determine the distortion and the rate (in bits) associated with the prediction mode. As mentioned, the partition type resulting in the smallest RD cost can be selected. Selecting a partition type can mean, inter alia, encoding the partition type in a compressed bitstream, such as the compressed bitstream 420 of FIG. 4. Encoding the partition type can mean encoding an identifier corresponding to the partition type, for example, by entropy encoding the identifier using the entropy encoding stage 408 of FIG. 4.

[0089] To determine the RD cost corresponding to the PARTITION_SPLIT (i.e., the partition type 804), a respective RD cost corresponding to each of the sub-blocks, such as a sub-block 812, is determined. As the sub-block 812 is a square sub-block, the sub-block 812 is further partitioned according to the available partition types, as shown with respect to partitions 814, to determine a minimal RD cost for the sub-block 812. As the sub-blocks of a partition 816 (corresponding to the PARTITION_SPLIT) are square sub-blocks, the process repeats for each of the sub-blocks of the partition 816, as illustrated with an ellipsis 818, until the smallest square sub-block size is reached. The smallest square sub-block size corresponds to a block size that is not partitionable further. In an example, the smallest square sub-block size, for a luminance block, is 4×4.

[0090] As such, determining an RD cost of a square block can be regarded as a bottom-up search. That is, for example, to determine the RD cost of a PARTITION_SPLIT of a 16×16 coding block, the RD cost of each of the four 8×8 sub-blocks is determined; to determine the RD cost of a PARTITION_SPLIT of an 8×8 coding block, the RD cost of each of the four 4×4 sub-blocks is determined. As such, a square block can be recursively partitioned, based on a quad-tree partitioning, into sub-blocks using the partition-split partition type.

[0091] As mentioned above, more partition types than those described with respect to FIG. 8A can be available at a codec. FIG. 8B is a block diagram of an example 820 of extended partition types of a coding block according to implementations of this disclosure. The term "extended" in this context can mean "additional."

[0092] A partition type 822 (also referred to herein as the PARTITION_VERT_A) splits an N×N coding block into two horizontally adjacent square blocks, each of size N/2×N/2, and a rectangular prediction unit of size N×N/2. A partition type 828 (also referred to herein as the PARTITION_VERT_B) splits an N×N coding block into a rectangular prediction unit of size N×N/2 and two horizontally adjacent square blocks, each of size N/2×N/2.

[0093] A partition type 824 (also referred to herein as the PARTITION_HORZ_A) splits an N×N coding block into two vertically adjacent square blocks, each of size N/2×N/2, and a rectangular prediction unit of size N/2×N. A partition type 830 (also referred to herein as the PARTITION_HORZ_B) splits an N×N coding block into a rectangular prediction unit of size N/2×N and two vertically adjacent square blocks, each of size N/2×N/2.

[0094] A partition type 826 (also referred to herein as the PARTITION_VERT_4) splits an N×N coding block into four vertically adjacent rectangular blocks, each of size N×N/4. A partition type 832 (also referred to herein as the PARTITION_HORZ_4) splits an N×N coding block into four horizontally adjacent rectangular blocks, each of size N/4×N.
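As a quick consistency check on the sizes listed in [0092] through [0094], the sketch below records each extended partition type's sub-block dimensions exactly as written above (as (a, b) pairs in the text's "a×b" notation, without committing to which dimension is the width) and confirms that the sub-blocks always cover the full N×N area. The helper name is hypothetical:

```python
from typing import List, Tuple


def extended_sub_block_sizes(ptype: str, n: int) -> List[Tuple[int, int]]:
    """Sub-block dimensions of an n x n coding block, as (a, b) for an 'a x b' block."""
    if n % 4 != 0:
        raise ValueError("n must be a multiple of 4")
    h, q = n // 2, n // 4
    table = {
        "PARTITION_VERT_A": [(h, h), (h, h), (n, h)],
        "PARTITION_VERT_B": [(n, h), (h, h), (h, h)],
        "PARTITION_HORZ_A": [(h, h), (h, h), (h, n)],
        "PARTITION_HORZ_B": [(h, n), (h, h), (h, h)],
        "PARTITION_VERT_4": [(n, q)] * 4,
        "PARTITION_HORZ_4": [(q, n)] * 4,
    }
    return table[ptype]


EXTENDED_TYPES = ("PARTITION_VERT_A", "PARTITION_VERT_B", "PARTITION_HORZ_A",
                  "PARTITION_HORZ_B", "PARTITION_VERT_4", "PARTITION_HORZ_4")

for name in EXTENDED_TYPES:
    area = sum(a * b for a, b in extended_sub_block_sizes(name, 32))
    print(name, area == 32 * 32)  # True for every type
```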

[0095] As mentioned above, a recursive partition search (e.g., based on a quad-tree partitioning) can be applied to square sub-blocks, such as sub-blocks 822A, 822B, 824A, 824B, 828A, 828B, 830A, and 830B.

[0096] Identifiers can be associated with each of the partition types of the example 820. In an example, identifiers 4-9 can be associated, respectively, with the PARTITION_HORZ_A, PARTITION_HORZ_B, PARTITION_VERT_A, PARTITION_VERT_B, PARTITION_HORZ_4, and PARTITION_VERT_4. Other identifiers are possible.

[0097] As shown in the example 820, instead of the four possible partition types of the example 800, 10 possible partition types (the partition types of the example 800 and the partition types of the example 820) can be available at an encoder. The complexity of an encoder that uses the 10 partition types can be 2.5 times that of an encoder that uses only the four partition types.
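The example identifier assignment of [0096] and the 2.5× figure of [0097] can be reproduced with a short sketch. The ratio assumes, as a simplification, that the search cost per block scales linearly with the number of partition types evaluated; the class and constant names are illustrative:

```python
from enum import IntEnum


class ExtendedPartition(IntEnum):
    # Example identifiers 4-9 from [0096]; other assignments are possible.
    PARTITION_HORZ_A = 4
    PARTITION_HORZ_B = 5
    PARTITION_VERT_A = 6
    PARTITION_VERT_B = 7
    PARTITION_HORZ_4 = 8
    PARTITION_VERT_4 = 9


BASIC_TYPE_COUNT = 4  # PARTITION_NONE, PARTITION_HORZ, PARTITION_VERT, PARTITION_SPLIT
total_types = BASIC_TYPE_COUNT + len(ExtendedPartition)

# Assumption: per-block search cost grows linearly with the number of
# partition types evaluated.
relative_complexity = total_types / BASIC_TYPE_COUNT
print(total_types, relative_complexity)  # 10 2.5
```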

[0098] FIG. 9 is a flowchart of a process 900 for predicting a coding block of a video frame according to implementations of this disclosure. The coding block is a current block being encoded. The process 900 can be implemented by an encoder, such as the encoder 400 of FIG. 4. For example, the process 900 can be performed in whole or in part by the intra/inter-prediction stage 402 of the encoder 400.

[0099] The process 900 can be implemented, for example, as instructions stored in a memory, such as the memory 204 of the transmitting station 102, and executed by a processor, such as the CPU 202.

[0100] The process 900 can be implemented using specialized hardware or firmware. Some computing devices can have multiple memories, multiple processors, or both. The steps or operations of the process 900 can be distributed using different processors, memories, or both. For simplicity of explanation, the process 900 is depicted and described as a series of operations. However, the teachings in accordance with this disclosure can occur in various orders and/or concurrently. Additionally, operations in accordance with this disclosure may occur with other operations not presented and described herein. Furthermore, not all illustrated steps or operations may be used to implement a method in accordance with the disclosed subject matter.

[0101] The process 900 uses a two-stage partition search for a coding block, such as the block 802 of FIG. 8. Using the two-stage partitioning of a coding block, an encoder can adapt the processing unit (e.g., prediction unit) sizes according to the content characteristics of the coding block. Using the two-stage partition search, an encoder can advantageously narrow down the effective search range (over all available partition types) in a first pass and can conduct, in a second pass, an extensive search over only the most likely used partition range of the first pass. In the first pass, square partitions, as described below, are checked.

[0102] It is noted that even though a coding block can be partitioned according to four partition types (as described with respect to FIG. 8A) or 10 partition types (as described with respect to FIGS. 8A and 8B) to best fit the video signal of the coding block, the process 900 uses, in a first stage, a recursive quad-tree partition that goes through the square coding block sizes. That is, in the first stage, the process 900 determines encoding costs (e.g., RD costs) associated with square blocks only, namely, the costs associated with the PARTITION_SPLIT and the PARTITION_NONE partition types. The PARTITION_SPLIT partition type is applied recursively until the smallest square sub-block size is reached. Available partition types other than the PARTITION_SPLIT and the PARTITION_NONE partition types are referred to, collectively, as third partition types.

[0103] In an example, the third partition types include the PARTITION_VERT and the PARTITION_HORZ partition types. In an example, the third partition types include the PARTITION_VERT, the PARTITION_HORZ, the PARTITION_HORZ_A, the PARTITION_HORZ_B, the PARTITION_VERT_A, the PARTITION_VERT_B, the PARTITION_HORZ_4, and the PARTITION_VERT_4 partition types. In another example, the third partition types can include more, fewer, or other partition types.

[0104] The process 900 can be summarized as follows. In a first pass (i.e., a first stage), the process 900 determines encoding costs (e.g., RD costs) for square partitions only. In a second pass (i.e., a second stage), if the PARTITION_SPLIT of the first pass results in a better encoding cost than the PARTITION_NONE, then the encoder need not determine respective encoding costs for the third partition types. As such, the full partition search can be bypassed at the current block size and performed, instead, with respect to the smaller block sizes. As the first stage is an intermediate stage, the process 900 writes no data (e.g., bits) to a compressed bitstream, such as the compressed bitstream 420 of FIG. 4, during or at the end of the first stage. In an implementation, the encoding costs are determined in the first pass using limited encoding, such as limited prediction options, limited transform coding options, limited quantization options, other limitations on the coding options, or a combination thereof. Illustrative examples of limited encoding include using, in the first pass, only four representative intra-prediction modes out of ten available intra-prediction modes, using an inter-prediction search window of limited size, using only the DCT transform, and using predefined transform block sizes. Other limited encoding options can be used in the first pass. In another implementation, the encoding costs are determined without limiting the encoding options.
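The decision made at a single block across the two passes can be sketched as follows. The sketch hides cost computation behind a hypothetical `cost_of` callback (in the process 900, the PARTITION_SPLIT cost is itself computed recursively, and the first-pass costs may use limited encoding), and it treats a tie as PARTITION_NONE winning, which is an assumption:

```python
from typing import Callable, Iterable, List, Tuple

# Hypothetical callback: partition type name -> encoding cost for the current block.
CostFn = Callable[[str], float]


def two_stage_decision(cost_of: CostFn, third_types: Iterable[str]) -> Tuple[str, float]:
    # First pass: square partitions only; nothing is written to the bitstream.
    none_cost = cost_of("PARTITION_NONE")
    split_cost = cost_of("PARTITION_SPLIT")
    if split_cost < none_cost:
        # PARTITION_SPLIT is better: skip the third partition types at this
        # block size; the full search continues on the smaller sub-blocks.
        return "PARTITION_SPLIT", split_cost
    # Second pass: search the third partition types at this block size and keep
    # the minimum among PARTITION_NONE and those types.
    best_type, best_cost = "PARTITION_NONE", none_cost
    for ptype in third_types:
        cost = cost_of(ptype)
        if cost < best_cost:
            best_type, best_cost = ptype, cost
    return best_type, best_cost


# Usage with hypothetical costs; `evaluated` records which costs were computed.
costs = {"PARTITION_NONE": 100.0, "PARTITION_SPLIT": 120.0,
         "PARTITION_HORZ": 90.0, "PARTITION_VERT": 110.0}
evaluated: List[str] = []


def lookup(ptype: str) -> float:
    evaluated.append(ptype)
    return costs[ptype]


print(two_stage_decision(lookup, ["PARTITION_HORZ", "PARTITION_VERT"]))
# ('PARTITION_HORZ', 90.0)
```

When PARTITION_SPLIT wins, the third partition types are never looked up at that block size, which is where the saving comes from.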

[0105] The process 900 is further explained below with reference to FIGS. 10 and 11.

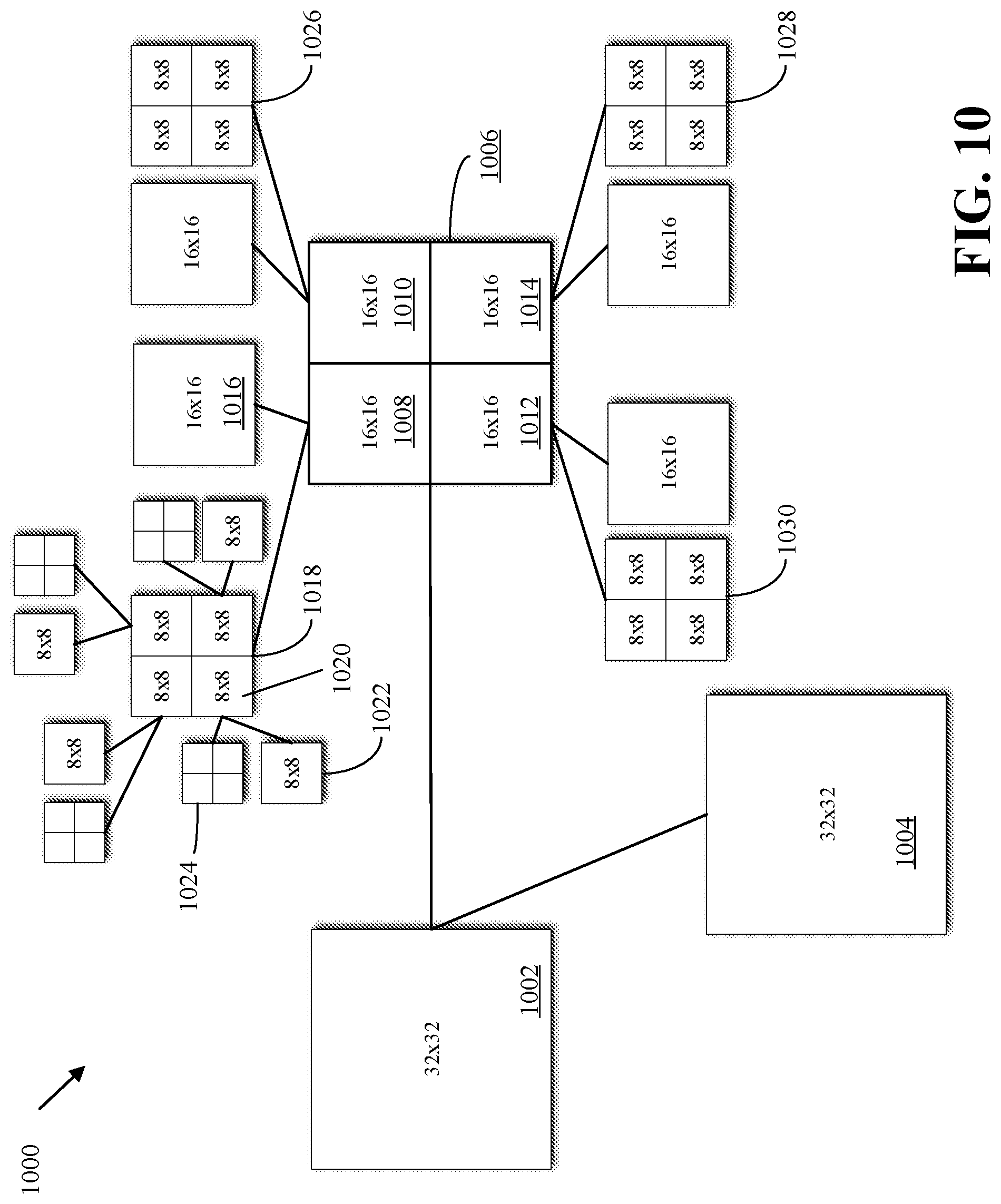

[0106] FIG. 10 is a block diagram of an example 1000 of a first stage of determining a partition type of a coding block according to implementations of this disclosure. The example 1000 includes a coding block 1002. The coding block 1002 can be the coding block 802 of FIG. 8; as such, in an example, the coding block 1002 can be of size 128×128. Alternatively, the coding block 1002 can be a sub-block that results from a partitioning corresponding to the PARTITION_SPLIT partition type, such as the sub-block 812 of FIG. 8. In the example 1000, the coding block 1002 is illustrated as a 32×32 coding block. As such, the coding block 1002 can be a coding block that is generated, based on the PARTITION_SPLIT partition type, during the recursive partitioning.

[0107] At operation 902, the process 900 selects a partition-none partition type and a partition-split partition type for predicting the block. As described above, the partition-none partition type and the partition-split partition type are selected from a set of partition types that includes the partition-none partition type, the partition-split partition type, and third partition types. The partition-split (i.e., PARTITION_SPLIT) partition type partitions the coding block into four equally sized square sub-blocks. Selecting a partition type can include partitioning the coding block according to the selected partition type.

[0108] At operation 904, the process 900 determines a first cost of predicting the block using the partition-none partition type. The first cost can be an RD cost of encoding the coding block using the partition-none partition type. At operation 906, the process 900 determines a second cost of predicting the block using the partition-split partition type. The second cost can be an RD cost of encoding the coding block using the partition-split partition type.

[0109] In another example, the first cost and the second cost need not be RD costs. For example, the first cost and the second cost can be based merely on the residual error, without considering the number of bits required to encode the residual. The residual error can be a mean square error (MSE) between the block and a predicted block of the block. Alternatively, the error can be a sum of absolute differences (SAD) between the block and the predicted block. Any other suitable error measure, or any other suitable cost function metric (e.g., one unrelated to error), can be used.
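The two error measures named above can be sketched as plain functions over 2-D sample arrays. The function names and the small 2×2 example are illustrative:

```python
from typing import Iterator, Sequence

Block = Sequence[Sequence[int]]


def _differences(block: Block, pred: Block) -> Iterator[int]:
    """Yield per-sample residuals (block - prediction); shapes must match."""
    if len(block) != len(pred) or any(len(b) != len(p) for b, p in zip(block, pred)):
        raise ValueError("block and predicted block must have the same shape")
    for row_b, row_p in zip(block, pred):
        for b, p in zip(row_b, row_p):
            yield b - p


def sad(block: Block, pred: Block) -> int:
    """Sum of absolute differences between the block and its prediction."""
    return sum(abs(d) for d in _differences(block, pred))


def mse(block: Block, pred: Block) -> float:
    """Mean square error between the block and its prediction."""
    diffs = list(_differences(block, pred))
    return sum(d * d for d in diffs) / len(diffs)


block = [[10, 12], [8, 6]]
pred = [[9, 12], [10, 6]]
print(sad(block, pred), mse(block, pred))  # 3 1.25
```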

[0110] Referring to FIG. 10, in the first stage, the operation 902 selects the partition-none partition type and the partition-split partition type. As such, the operation 902 partitions the coding block 1002 into a partition 1004 (corresponding to the partition-none partition type) and a partition 1006 (corresponding to the partition-split partition type). As such, the partition 1006 includes sub-blocks 1008-1014, each of size 16×16. At operation 904, the first cost of predicting the coding block 1002 using the partition-none partition type (i.e., the partition 1004) is determined. The partition 1004 corresponds to one prediction unit (PU) that is of the same size as the coding block itself.

[0111] At operation 906, the second cost of predicting the coding block 1002 using the partition-split partition type (i.e., the partition 1006) is determined. As mentioned above, to determine the second cost of the partition 1006, a cost corresponding to each of the sub-blocks 1008-1014 is determined and the four costs are summed. As such, to determine the cost of one of the sub-blocks 1008-1014, the operations of the process 900 are recursively applied to each sub-block, such as the sub-block 1008. To reduce the clutter in FIG. 10, only the recursive partition of the sub-block 1008 is shown. However, the same process described with respect to the sub-block 1008 is also performed with respect to each of the sub-blocks 1010-1014. As such, determining the second cost of predicting the block using the partition-split partition type can include recursively partitioning, based on a quad-tree partitioning, the block into sub-blocks using the partition-split partition type.

[0112] The sub-block 1008 is partitioned into a partition 1016 using the partition-none partition type and into a partition 1018 using the partition-split partition type. Each of the four sub-blocks of the partition 1018 is of size 8×8. A first cost of predicting the sub-block 1008 (i.e., a prediction unit corresponding to the partition 1016) is determined, and a second cost of predicting the sub-block 1008 (i.e., a total cost of predicting the sub-blocks of the partition 1018) is determined. As described above, to determine the second cost, a cost of each sub-block, such as a sub-block 1020, is determined. As described above, a first cost of predicting the sub-block 1020 is determined based on a partition-none partition type (i.e., a partition 1022), and a second cost is determined based on a partition-split partition type (i.e., a partition 1024). Each of the four blocks of the partition 1024 is of size 4×4. As mentioned above, a block of size 4×4 can be the smallest square sub-block size, if the block is a luminance block. As such, the sub-blocks of the partition 1024 are not split further. For a chrominance block, the smallest square sub-block size can be 2×2.
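To make the depth of the first-stage recursion concrete, the sketch below lists the square sizes visited from a starting block size down to the smallest square size (4×4 for luminance and 2×2 for chrominance, per the example above) and counts how many square blocks have a partition-none cost evaluated when every level is searched. It assumes power-of-two block sizes; the function names are hypothetical:

```python
from typing import List


def square_sizes(block_size: int, plane: str) -> List[int]:
    """Square block sizes visited by the recursion, largest first."""
    min_size = 4 if plane == "luma" else 2  # smallest square sub-block size
    sizes = []
    size = block_size
    while size >= min_size:
        sizes.append(size)
        size //= 2
    return sizes


def none_cost_evaluations(block_size: int, plane: str) -> int:
    """Level k of the quad-tree holds 4**k square blocks, each with a partition-none cost."""
    return sum(4 ** k for k in range(len(square_sizes(block_size, plane))))


print(square_sizes(32, "luma"))           # [32, 16, 8, 4]
print(none_cost_evaluations(32, "luma"))  # 85
```

For the 32×32 coding block 1002, that is 1 + 4 + 16 + 64 = 85 square blocks.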

[0113] At each level of the recursion, at operation 908, the process 900 determines a result of comparing the first cost and the second cost. The steps described above result in a decision, at each square block, of whether to split the square block. That is, whether the partition-none partition type or the partition-split partition type is selected is based on a comparison of the first cost to the second cost. Said differently, the recursive process of the first pass indicates the operating points (i.e., operating scales) of various regions of the coding block. An operating point (or operating scale) is indicative of the optimal sub-block sizes into which the coding block is to be split to minimize the encoding cost of the coding block.
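The first-pass recursion, together with the comparison at operation 908, can be sketched as a function that returns a quad-tree of split decisions whose leaves are the operating points. The cost model `cost_none` is a hypothetical stand-in for predicting a square block with the partition-none partition type, and ties keep the partition-none partition type (an assumption):

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# Hypothetical cost model: (x, y, size) -> cost of one size x size prediction unit.
CostFn = Callable[[int, int, int], float]


@dataclass
class Node:
    x: int
    y: int
    size: int
    cost: float
    split: bool
    children: List["Node"] = field(default_factory=list)


def first_pass(x: int, y: int, size: int, cost_none: CostFn, min_size: int = 4) -> Node:
    first_cost = cost_none(x, y, size)               # partition-none cost
    if size <= min_size:                             # smallest square size: no split
        return Node(x, y, size, first_cost, False)
    half = size // 2
    children = [first_pass(x + dx, y + dy, half, cost_none, min_size)
                for dy in (0, half) for dx in (0, half)]
    second_cost = sum(c.cost for c in children)      # partition-split cost
    if second_cost < first_cost:                     # operation 908: compare costs
        return Node(x, y, size, second_cost, True, children)
    return Node(x, y, size, first_cost, False)


def operating_points(node: Node) -> List[Tuple[int, int, int]]:
    """Leaves of the decision tree: (x, y, size) of each chosen square block."""
    if not node.split:
        return [(node.x, node.y, node.size)]
    points: List[Tuple[int, int, int]] = []
    for child in node.children:
        points.extend(operating_points(child))
    return points


# Hypothetical content: detail in the top-left 16x16 quadrant makes large
# blocks that overlap it expensive.
def cost_none(x: int, y: int, size: int) -> float:
    return size ** 3 if (x < 16 and y < 16) else size * size + 10


root = first_pass(0, 0, 32, cost_none)
points = operating_points(root)
print(root.cost, len(points))  # 1822 19
```

In this toy example, the detailed quadrant resolves to sixteen 4×4 blocks (a fine operating scale), while the other three quadrants stay at 16×16 (a coarse operating scale).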

[0114] The operating scale of a region of a coding block indicates whether smaller, finer coding blocks or larger, coarser prediction units are sufficient to predict the block. The smaller, finer coding blocks correspond to performing a finer partition search with respect to the coding blocks corresponding to the partition-split partition type. The larger, coarser prediction units are prediction units that are at the same scale as those corresponding to the partition-none partition type. As such, the third partition types result in prediction units of the same scale as those of the partition-none partition type.