Method For Tracking Movement Of A Mobile Robotic Device

Ebrahimi Afrouzi; Ali

U.S. patent application number 15/955480, filed on April 17, 2018, was published on 2019-10-17 for a method for tracking movement of a mobile robotic device. The applicant listed for this patent is Ali Ebrahimi Afrouzi. Invention is credited to Ali Ebrahimi Afrouzi.

| Application Number | 20190317121 15/955480 |

| Document ID | / |

| Family ID | 68101889 |

| Publication Date | 2019-10-17 |

| United States Patent Application | 20190317121 |

| Kind Code | A1 |

| Ebrahimi Afrouzi; Ali | October 17, 2019 |

METHOD FOR TRACKING MOVEMENT OF A MOBILE ROBOTIC DEVICE

Abstract

A method for tracking movement and turning angle of a mobile robotic device using two optoelectronic sensors positioned on the underside thereof. Digital image correlation is used to analyze images captured by the optoelectronic sensors and determine the amount of offset, and thereby amount of movement of the device. Trigonometric analysis of a triangle formed by lines between the positions of the optoelectronic sensors at different intervals may be used to determine turning angle of the mobile robotic device.

| Inventors: | Ebrahimi Afrouzi; Ali (San Jose, CA) |

| Applicant: | Ebrahimi Afrouzi; Ali |

| Family ID: | 68101889 |

| Appl. No.: | 15/955480 |

| Filed: | April 17, 2018 |

| Current U.S. Class: | 1/1 |

| Current CPC Class: | G06T 7/262 20170101; G01C 22/00 20130101; G06T 2207/30241 20130101; G06T 7/254 20170101; G01P 3/806 20130101; G01C 22/02 20130101; G06T 2207/30252 20130101; G01P 3/38 20130101; G01P 3/68 20130101 |

| International Class: | G01P 3/80 20060101 G01P003/80; G01P 3/38 20060101 G01P003/38; G06T 7/254 20060101 G06T007/254; G01C 22/00 20060101 G01C022/00 |

Claims

1. A method for tracking movement of a robotic device comprising: Capturing a plurality of images of a driving surface by each of at least two optoelectronic sensors of the robotic device moving within an environment; Obtaining, with one or more processors of the robotic device, the plurality of images by each of the at least two optoelectronic sensors; Determining, with one or more processors of the robotic device, based on the images captured by each of the at least two optoelectronic sensors, linear movement of each of the at least two optoelectronic sensors; and Determining, with one or more processors of the robotic device, based on the linear movement of each of the at least two optoelectronic sensors, rotational movement of the robotic device.

2. The method of claim 1, wherein the linear movement of the at least two optoelectronic sensors is equal to linear movement of the robotic device.

3. The method of claim 1, wherein the linear movement of each of the at least two optoelectronic sensors is determined by maximum cross correlation between successive images captured by each of the at least two optoelectronic sensors.

4. The method of claim 1, further comprising determining linear velocity of the robotic device, by the one or more processors of the robotic device, based on linear movement of the robotic device and the time between the captured images, the linear movement of the robotic device being estimated from the captured images.

5. The method of claim 1, further comprising determining angular velocity of the robotic device, by the one or more processors of the robotic device, based on linear movement of the at least two optoelectronic sensors and the time between the captured images, the linear movement of the at least two optoelectronic sensors being estimated from the captured images.

6. The method of claim 1, wherein images captured by each of the at least two optoelectronic sensors overlap with preceding images captured by each of the at least two optoelectronic sensors.

7. The method of claim 1, wherein the driving surface is illuminated by a light source.

8. The method of claim 7, wherein the light source is an LED.

9. A method for tracking velocity of a robotic device comprising: Capturing a plurality of images of a driving surface by each of at least two optoelectronic sensors of the robotic device moving within an environment; Obtaining, with one or more processors of the robotic device, the plurality of images by each of the at least two optoelectronic sensors; and Determining, with the one or more processors of the robotic device, based on images captured by each of the at least two optoelectronic sensors, linear movement of each of the at least two optoelectronic sensors.

10. The method of claim 9, further comprising determining linear velocity of the robotic device, by the one or more processors of the robotic device, based on the linear movement of each of the at least two optoelectronic sensors and time between the images captured.

11. The method of claim 9, further comprising determining angular velocity of the robotic device, by the one or more processors of the robotic device, based on the linear movement of each of the at least two optoelectronic sensors and time between the images captured.

12. The method of claim 9, wherein determining the linear movement of each of the at least two optoelectronic sensors further comprises determining maximum cross correlation between successive images captured by each of the at least two optoelectronic sensors.

13. The method of claim 9, wherein the plurality of images captured by each of the at least two optoelectronic sensors overlaps with preceding images captured by each of the at least two optoelectronic sensors.

14. The method of claim 9, wherein the driving surface is illuminated by a light source.

15. The method of claim 14, wherein the light source is an LED.

16. A method for tracking movement of a robotic device comprising: Capturing a plurality of images of a driving surface by at least one optoelectronic sensor of the robotic device moving within an environment; Obtaining, with one or more processors of the robotic device, the plurality of images by the at least one optoelectronic sensor; Determining, with one or more processors of the robotic device, based on the images captured by the at least one optoelectronic sensor, linear movement of the optoelectronic sensor; and Determining, with one or more processors of the robotic device, based on the linear movement of the at least one optoelectronic sensor, rotational movement of the robotic device.

17. The method of claim 16, wherein the linear movement of the at least one optoelectronic sensor is determined by maximum cross correlation between successive images captured by the at least one optoelectronic sensor.

18. The method of claim 16, wherein the linear movement of the at least one optoelectronic sensor is equal to linear movement of the robotic device.

19. The method of claim 16, further comprising determining linear velocity of the robotic device, by the one or more processors of the robotic device, based on the linear movement of the at least one optoelectronic sensor and the time between the captured images, the linear movement of the at least one optoelectronic sensor being estimated from the captured images.

20. The method of claim 16, further comprising determining angular velocity of the robotic device, by one or more processors of the robotic device, based on the linear movement of the at least one optoelectronic sensor and the time between the captured images, the linear movement of the at least one optoelectronic sensor being estimated from the captured images.

21. The method of claim 16, wherein images captured by the at least one optoelectronic sensor overlap with preceding images captured by the at least one optoelectronic sensor.

22. The method of claim 16, wherein the rotational movement is about an instantaneous center of rotation located at a center of the robotic device.

23. The method of claim 16, wherein the rotational movement is about an instantaneous center of rotation located at a wheel of the robotic device.

24. The method of claim 16, wherein the driving surface is illuminated by a light source.

25. The method of claim 24, wherein the light source is an LED.

Description

CROSS-REFERENCE TO RELATED APPLICATIONS

[0001] This is a continuation-in-part of U.S. patent application Ser. No. 15/425,130 filed Feb. 6, 2017 which is a Non-provisional Patent Application of U.S. Provisional Patent Application No. 62/299,701 filed Feb. 25, 2016 all of which are herein incorporated by reference in their entireties for all purposes.

FIELD OF THE INVENTION

[0002] The present invention relates to methods for tracking movement of mobile robotic devices.

BACKGROUND

[0003] Mobile robotic devices are being used with increasing frequency to carry out routine tasks, like vacuuming, mopping, cutting grass, painting, etc. It may be useful to track the position and orientation (the movement) of a mobile robotic device so that even and thorough coverage of a surface can be ensured. Many robotic devices utilize SLAM (simultaneous localization and mapping) to determine position and orientation; however, SLAM requires expensive technology that may increase the overall cost of the robotic device. Additionally, SLAM requires intensive processing, which takes extra time and processing power. A need exists for a simpler method to track the relative movement of a mobile robotic device.

SUMMARY

[0004] According to embodiments of the present invention, two (or more) optoelectronic sensors are positioned on the underside of a mobile robotic device to monitor the surface below the device. Successive images of the surface below the device are captured by the optoelectronic sensors and processed by an image processor using cross correlation to determine how much each successive image is offset from the last. From this, a device's relative position may be determined.

BRIEF DESCRIPTION OF THE DRAWINGS

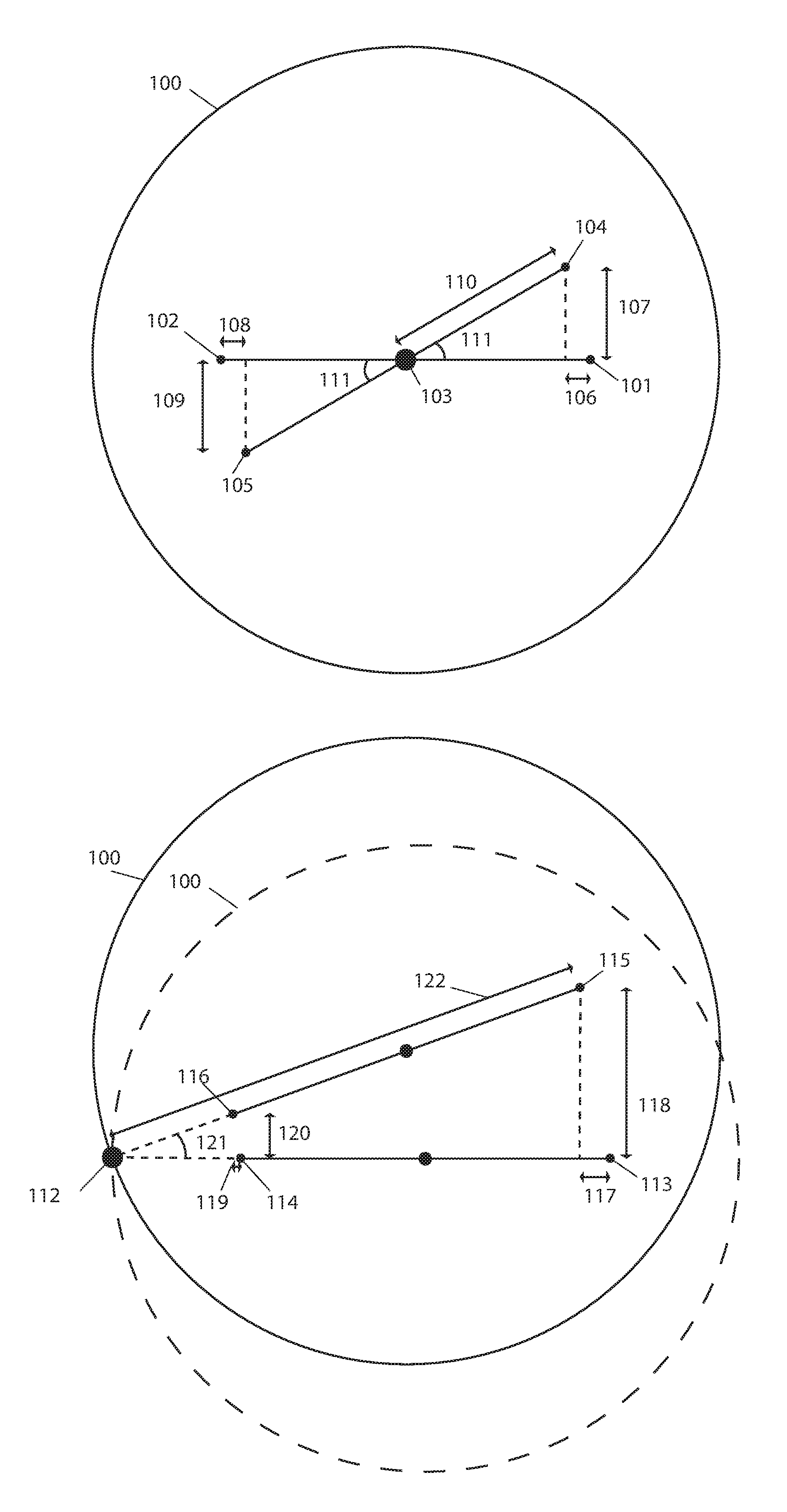

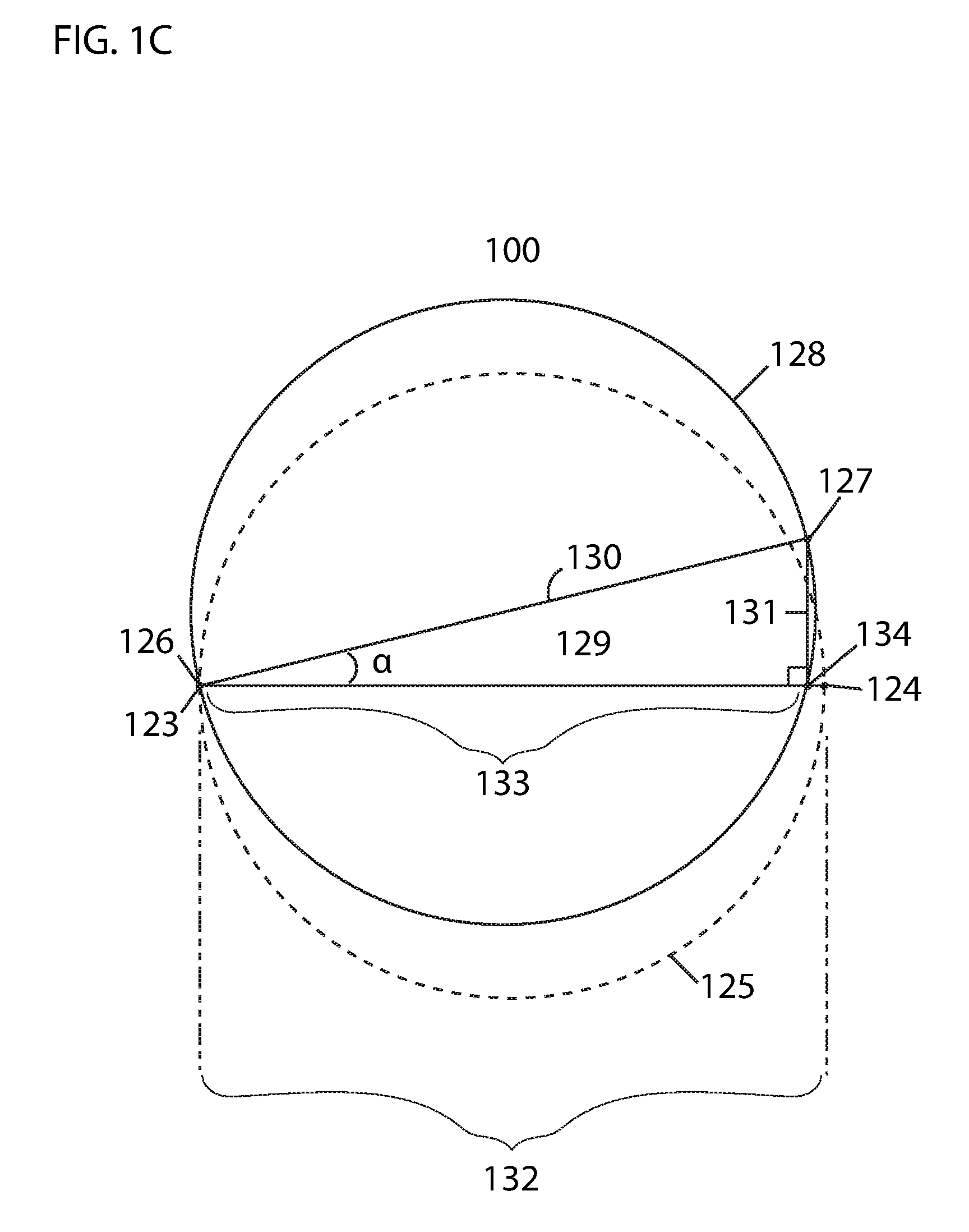

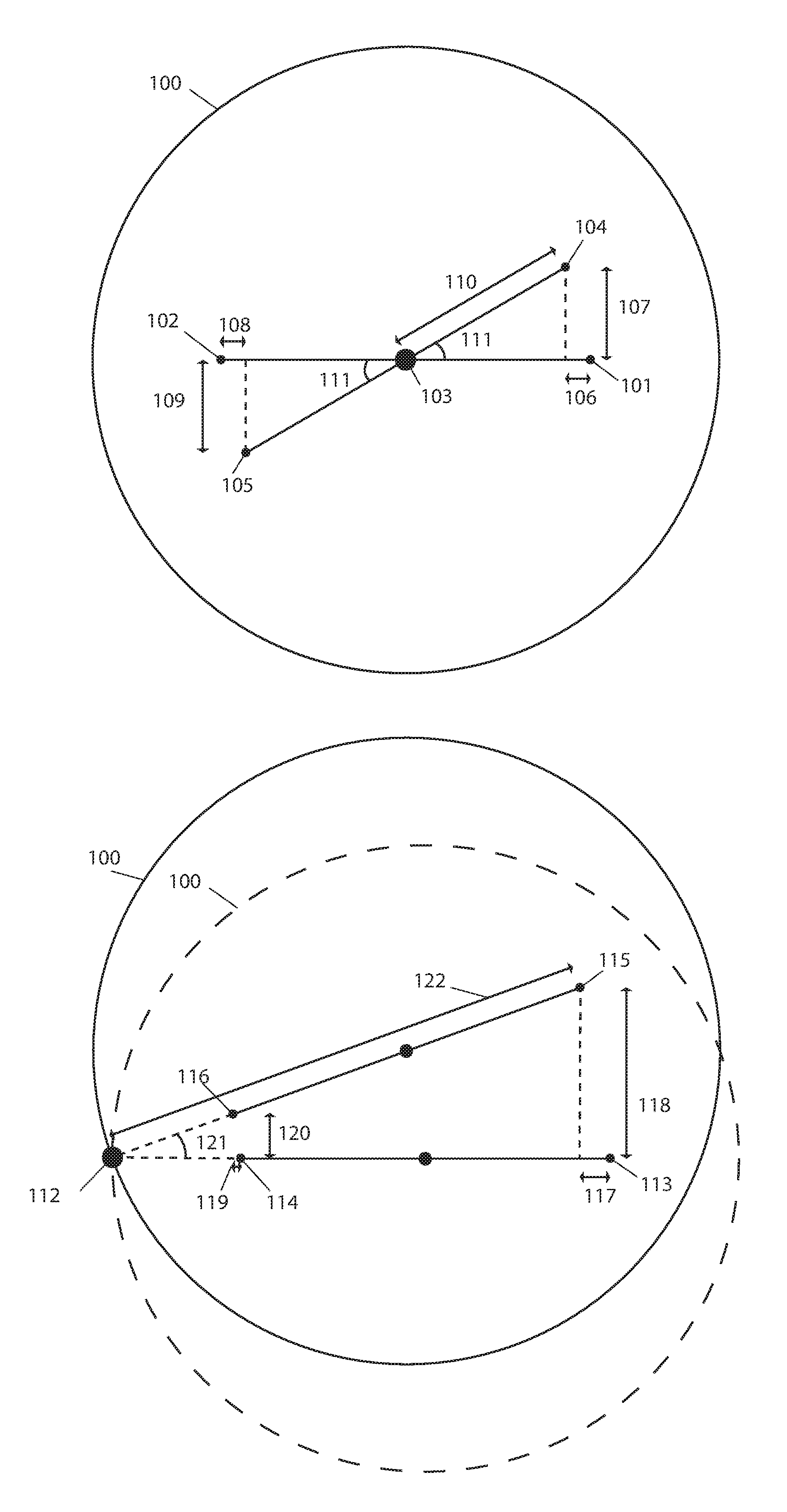

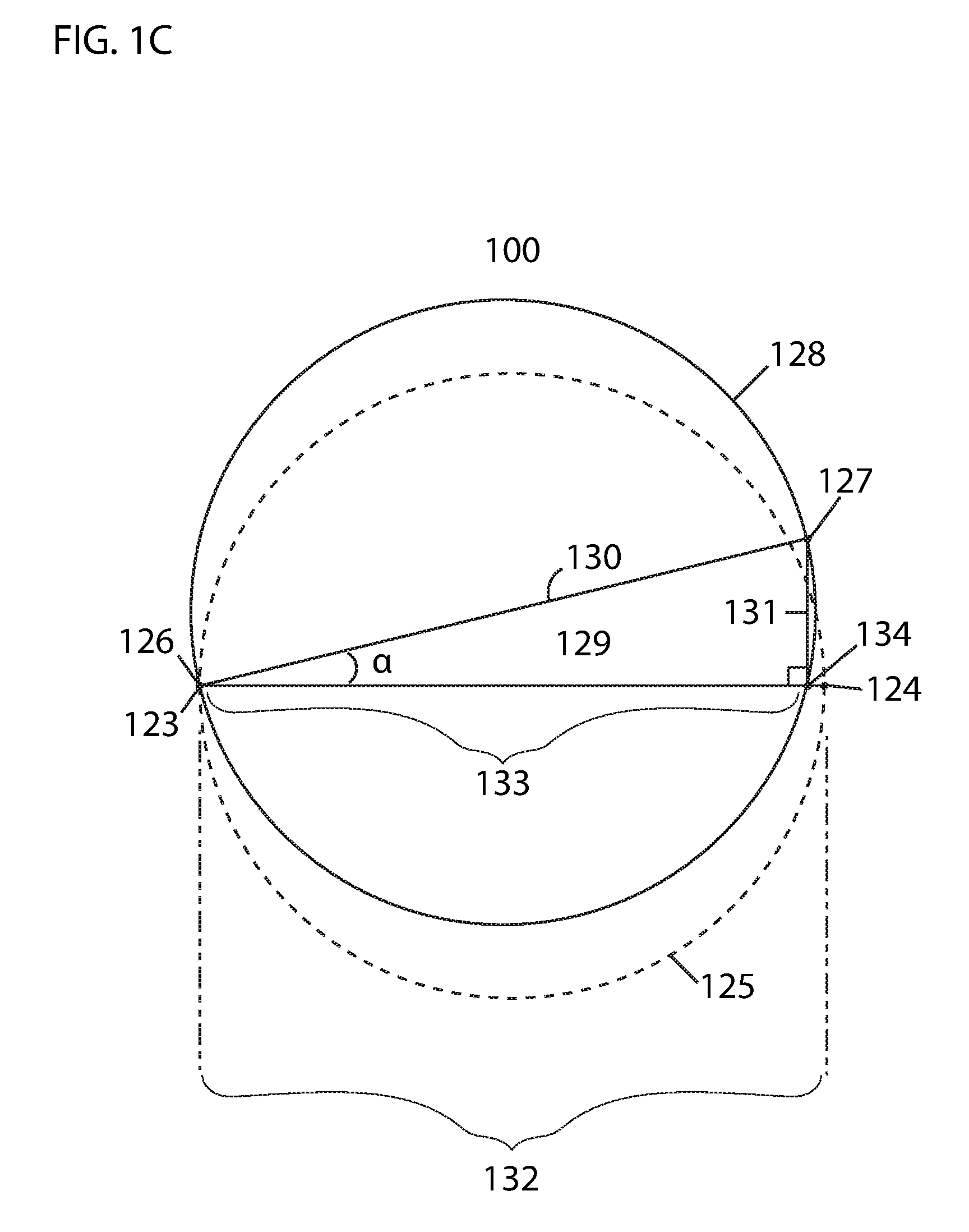

[0005] FIGS. 1A-1C illustrate a method for calculating rotation angle given linear movements of two optoelectronic sensors disposed on the underside of the robotic device embodying features of the present invention.

[0006] FIG. 2 illustrates a method for calculating rotation angle given linear movements of two optoelectronic sensors disposed on the underside of the robotic device embodying features of the present invention.

DETAILED DESCRIPTION OF THE INVENTION

[0007] The present invention proposes a method for tracking relative movement of a mobile robotic device through optoelectronic sensors.

[0008] According to embodiments of the proposed invention, at least two optoelectronic sensors and a light emitting diode (LED) are positioned on the underside of a mobile robotic device such that they monitor the surface upon which the device drives. In embodiments, an infrared laser diode or other types of light sources may be used. The at least two optoelectronic sensors may be positioned at some distance from one another on the underside of the mobile robotic device. For example, two optoelectronic sensors may be positioned along a line passing through the center of the robotic device, each on opposite sides and at an equal distance from the center of the robotic device. In other instances, the optoelectronic sensors may be positioned differently on the underside of the robotic device, where, for example, they may not be at equal distance from the center of the robotic device. The LED illuminates the surface upon which the robotic device drives and the optoelectronic sensors capture images of the driving surface as the robotic device moves through the environment. The images captured are sent to a processor and a technique such as digital image correlation (DIC) may be used to determine the linear movement of each of the optoelectronic sensors in the x and y directions. Each optoelectronic sensor has an initial starting location that can be identified with a pair of x and y coordinates and, using a technique such as DIC, a second location of each optoelectronic sensor can be identified by a second pair of x and y coordinates. In some embodiments, the processor detects patterns in images and is able to determine by how much the patterns have moved from one image to another, thereby providing the movement of each optoelectronic sensor in the x and y directions over a time from a first image being captured to a second image being captured. To detect these patterns and the movement of each sensor in the x and y directions, the processor mathematically processes these images using a technique such as cross correlation to calculate how much each successive image is offset from the previous one. In embodiments, finding the maximum of the correlation array between pixel intensities of two images may be used to determine the translational shift in the x-y plane or, in the case of a robotic device, its driving surface plane. Cross correlation may be defined in various ways. For example, two-dimensional discrete cross correlation $r_{ij}$ may be defined as:

$$r_{ij} = \frac{\sum_{k}\sum_{l}\left[s(k+i,\,l+j)-\bar{s}\right]\left[q(k,l)-\bar{q}\right]}{\sqrt{\sum_{k}\sum_{l}\left[s(k,l)-\bar{s}\right]^{2}\,\sum_{k}\sum_{l}\left[q(k,l)-\bar{q}\right]^{2}}}$$

where $s(k,l)$ is the pixel intensity at a point $(k,l)$ in a first image and $q(k,l)$ is the pixel intensity of a corresponding point in the translated image. $\bar{s}$ and $\bar{q}$ are the mean values of the respective pixel intensity matrices $s$ and $q$. The coordinates of the maximum $r_{ij}$ give the integer pixel shift:

$$(\Delta x, \Delta y) = \arg\max_{(i,j)} \{r\}$$

In some embodiments, the correlation array may be computed faster using Fourier Transform techniques or other mathematical methods.
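The correlation search described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the applicant's implementation: the function names `mean` and `correlation_shift`, the `max_shift` search window, and the choice to sum only over the region where the two images overlap are all hypothetical. Images are assumed to be equally sized, non-constant lists of rows of pixel intensities.

```python
import math

def mean(img):
    """Mean pixel intensity of an image given as a list of rows."""
    values = [v for row in img for v in row]
    return sum(values) / len(values)

def correlation_shift(s, q, max_shift):
    """Return the (i, j) that maximizes r_ij for |i|, |j| <= max_shift.

    Assumptions (not from the source): s and q have the same size, are not
    constant images, and the sums run only over the overlapping region.
    """
    rows, cols = len(q), len(q[0])
    s_bar, q_bar = mean(s), mean(q)
    # Denominator of r_ij: square root of the product of the two variances sums.
    norm = math.sqrt(
        sum((v - s_bar) ** 2 for row in s for v in row)
        * sum((v - q_bar) ** 2 for row in q for v in row)
    )
    best_r, best_shift = -math.inf, (0, 0)
    for i in range(-max_shift, max_shift + 1):
        for j in range(-max_shift, max_shift + 1):
            total = 0.0
            for k in range(rows):
                for l in range(cols):
                    # Skip pixels where s(k+i, l+j) falls outside the image.
                    if 0 <= k + i < rows and 0 <= l + j < cols:
                        total += (s[k + i][l + j] - s_bar) * (q[k][l] - q_bar)
            r = total / norm
            if r > best_r:
                best_r, best_shift = r, (i, j)
    return best_shift
```

The brute-force loop is quadratic in both the image size and the search window, which is why the text mentions Fourier Transform techniques as a faster alternative for larger images.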

[0009] Given the movement of each optoelectronic sensor in the x and y directions, the linear and rotational movement of the robotic device may be determined. For example, if the robotic device is only moving linearly without any rotation, the translations $(\Delta x, \Delta y)$ of each optoelectronic sensor over a time $\Delta t$ are the same in magnitude and sign and may be assumed to be the translation of the robotic device as well. If the robotic device rotates, the linear translations of each optoelectronic sensor determined from their respective images may be used to calculate the rotation angle of the robotic device. For example, in some instances the robotic device rotates in place, about an instantaneous center of rotation (ICR) located at its center. This may occur when the velocity of one wheel is equal and opposite to that of the other wheel (i.e., $v_r = -v_l$, wherein $r$ denotes the right wheel and $l$ the left wheel). In embodiments wherein two optoelectronic sensors, for example, are placed at equal distances from the center of the robotic device along a line passing through its center, the translations in the x and y directions of the two optoelectronic sensors are of the same magnitude and of opposite sign. The translations of either sensor may be used to determine the rotation angle of the robotic device about the ICR by applying right-triangle trigonometry, given that the distance of the sensor to the ICR is known. In embodiments wherein the optoelectronic sensors are placed along a line passing through the center of the robotic device and are not of equal distance from the center of the robotic device, the translations of each sensor will not be of the same magnitude; however, any set of translations corresponding to an optoelectronic sensor will result in the same angle of rotation when the same trigonometric relation is applied.
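To make the symmetry argument concrete, the following Python sketch rotates a sensor position about an ICR and returns the resulting translation. The function name `sensor_translation` and the coordinate convention (a counterclockwise rotation by `theta` radians in the driving-surface plane) are assumptions made for illustration only.

```python
import math

def sensor_translation(sensor, icr, theta):
    """Translation (dx, dy) of a sensor after rotating by theta about icr.

    sensor and icr are (x, y) tuples in the same plane; theta is in radians.
    """
    # Position of the sensor relative to the ICR before the rotation.
    x, y = sensor[0] - icr[0], sensor[1] - icr[1]
    # Standard 2D rotation of the relative position.
    new_x = x * math.cos(theta) - y * math.sin(theta)
    new_y = x * math.sin(theta) + y * math.cos(theta)
    return (new_x - x, new_y - y)
```

For two sensors placed symmetrically about an ICR at the center, for example at `(-0.1, 0.0)` and `(0.1, 0.0)` with the ICR at `(0.0, 0.0)`, the two returned translations have equal magnitude and opposite sign, as the paragraph above states.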

[0010] The offset amounts at each optoelectronic sensor location may be used to determine the amount that the mobile robotic device turned. FIG. 1A illustrates a top view of robotic device 100 with a first optoelectronic sensor initially positioned at 101 and a second optoelectronic sensor initially positioned at 102, both of equal distance from the center of robotic device 100. The initial and end positions of robotic device 100 are shown, wherein the initial position is denoted by the dashed lines. Robotic device 100 rotates in place about ICR 103, moving the first optoelectronic sensor to position 104 and the second optoelectronic sensor to position 105. As robotic device 100 rotates from its initial position to a new position, the optoelectronic sensors capture images of the surface illuminated by an LED (not shown) and send the images to a processor for DIC. After DIC of the images is complete, translation 106 in the x direction ($\Delta x$) and 107 in the y direction ($\Delta y$) are determined for the first optoelectronic sensor and translation 108 in the x direction and 109 in the y direction for the second sensor. Since rotation is in place and the optoelectronic sensors are positioned symmetrically about the center of robotic device 100, the translations for both optoelectronic sensors are of equal magnitude. The translations $(\Delta x, \Delta y)$ corresponding to either optoelectronic sensor together with the respective distance 110 of either sensor from ICR 103 of robotic device 100 may be used to calculate rotation angle 111 of robotic device 100 by forming a right-angle triangle as shown in FIG. 1A and applying the sine relation:

$$\sin\theta = \frac{\text{opposite}}{\text{hypotenuse}} = \frac{\Delta y}{d}$$

wherein $\theta$ is rotation angle 111 and $d$ is known distance 110 of the sensor from ICR 103 of robotic device 100.
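The relation above can be inverted to recover the rotation angle from a measured y translation. The sketch below is a hedged illustration: the function name `rotation_angle_from_dy` and the explicit range check are assumptions, and the result is in radians.

```python
import math

def rotation_angle_from_dy(dy, d):
    """Rotation angle (radians) from sin(theta) = dy / d.

    dy is the sensor's translation in the y direction and d is the known
    distance from the sensor to the ICR, in the same length unit.
    """
    if d <= 0:
        raise ValueError("distance to the ICR must be positive")
    ratio = dy / d
    if abs(ratio) > 1:
        # A translation larger than d is not consistent with this triangle.
        raise ValueError("|dy| cannot exceed d")
    return math.asin(ratio)
```

For instance, a y translation equal to half the sensor's distance from the ICR corresponds to a rotation of 30 degrees, since $\sin 30^\circ = 0.5$.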

[0011] In embodiments, the rotation of the robotic device may not be about its center but about an ICR located elsewhere, such as the right or left wheel of the robotic device. For example, if the velocity of one wheel is zero while the other is spinning, then rotation of the robotic device is about the wheel with zero velocity, which is the location of the ICR. The translations determined by images from each of the optoelectronic sensors may be used to estimate the rotation angle about the ICR. For example, FIG. 1B illustrates rotation of robotic device 100 about ICR 112. The initial and end positions of robotic device 100 are shown, wherein the initial position is denoted by the dashed lines. Initially, the first optoelectronic sensor is positioned at 113 and the second optoelectronic sensor is positioned at 114. Robotic device 100 rotates about ICR 112, moving the first optoelectronic sensor to position 115 and the second optoelectronic sensor to position 116. As robotic device 100 rotates from its initial position to a new position, the optoelectronic sensors capture images of the surface illuminated by an LED (not shown) and send the images to a processor for DIC. After DIC of the images is complete, translation 117 in the x direction ($\Delta x$) and 118 in the y direction ($\Delta y$) are determined for the first optoelectronic sensor and translation 119 in the x direction and 120 in the y direction for the second sensor. The translations $(\Delta x, \Delta y)$ corresponding to either optoelectronic sensor together with the respective distance of the sensor to the ICR, which in this case is the left wheel, may be used to calculate rotation angle 121 of robotic device 100 by forming a right-angle triangle, such as that shown in FIG. 1B. Translation 118 of the first optoelectronic sensor in the y direction and its distance 122 from ICR 112 of robotic device 100 may be used to calculate rotation angle 121 of robotic device 100 by the sine relation:

$$\sin\theta = \frac{\text{opposite}}{\text{hypotenuse}} = \frac{\Delta y}{d}$$

wherein $\theta$ is rotation angle 121 and $d$ is known distance 122 of the first sensor from ICR 112 located at the left wheel of robotic device 100. Rotation angle 121 may also be determined by forming a right-angled triangle with the second sensor and ICR 112 and using its respective translation in the y direction.

[0012] In another example, the initial position of robotic device 100 with two optoelectronic sensors 123 and 124 is shown by the dashed line 125 in FIG. 1C. A secondary position of the mobile robotic device 100 with two optoelectronic sensors 126 and 127 after having moved slightly is shown by solid line 128. Because the secondary position of optoelectronic sensor 126 is substantially in the same position 123 as before the move, no difference in position of this sensor is shown. In real time, analyses of movement may occur so rapidly that a mobile robotic device may only move a small distance in between analyses and only one of the two optoelectronic sensors may have moved substantially. The rotation angle of robotic device 100 may be represented by the angle $\alpha$ within triangle 129. Triangle 129 is formed by:

[0013] the straight line 130 between the secondary positions of the two optoelectronic sensors 126 and 127;

[0014] the line 131 from the second position 127 of the optoelectronic sensor with the greatest change in coordinates from its initial position to its secondary position to the line 132 between the initial positions of the two optoelectronic sensors that forms a right angle therewith;

[0015] and the line 133 from the vertex 134 formed by the intersection of line 131 with line 132 to the initial position 123 of the optoelectronic sensor with the least amount of (or no) change in coordinates from its initial position to its secondary position.

The length of side 130 is fixed because it is simply the distance between the two sensors, which does not change. The length of side 131 may be calculated by finding the difference of the y coordinates between the position of the optoelectronic sensor at position 127 and at position 124. It should be noted that the length of side 133 does not need to be known in order to find the angle $\alpha$. The trigonometric function:

$$\sin\alpha = \frac{\text{opposite}}{\text{hypotenuse}}$$

only requires that we know the lengths of sides 131 (opposite) and 130 (hypotenuse). After evaluating the above trigonometric function, we have the angle $\alpha$, which is the turning angle of the mobile robotic device.

[0016] In a further example, wherein the location of the ICR relative to each of the optoelectronic sensors is unknown, translations in the x and y directions of each optoelectronic sensor may be used together to determine the rotation angle about the ICR. For example, in FIG. 2 ICR 200 is located to the left of center 201 and is the point about which rotation occurs. The initial and end positions of robotic device 202 are shown, wherein the initial position is denoted by the dashed lines. While the distance of each optoelectronic sensor to center 201 or a wheel of robotic device 202 may be known, the distance between each sensor and an ICR, such as ICR 200, may be unknown. In these instances, translation 203 in the y direction of the first optoelectronic sensor initially positioned at 204 and translated to position 205 and translation 206 in the y direction of the second optoelectronic sensor initially positioned at 207 and translated to position 208, along with distance 209 between the two sensors, may be used to determine rotation angle 210 about ICR 200 using the following equation:

$$\sin\theta = \frac{\Delta y_1 + \Delta y_2}{b}$$

wherein $\theta$ is rotation angle 210, $\Delta y_1$ is translation 203 in the y direction of the first optoelectronic sensor, $\Delta y_2$ is translation 206 in the y direction of the second optoelectronic sensor and $b$ is distance 209 between the two sensors.
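A short Python sketch of this two-sensor relation follows. It assumes, as in FIG. 2, that the ICR lies on the line between the two sensors, so that the sensors move in opposite y directions and $\Delta y_1$ and $\Delta y_2$ are taken as magnitudes; that reading, and the function name `rotation_angle_two_sensors`, are assumptions for illustration rather than statements from the application.

```python
import math

def rotation_angle_two_sensors(dy1, dy2, b):
    """Rotation angle (radians) from sin(theta) = (dy1 + dy2) / b.

    dy1, dy2: magnitudes of the y translations of the two sensors
              (assumed to be in opposite directions, ICR between sensors).
    b:        distance between the two sensors, same length unit.
    """
    if b <= 0:
        raise ValueError("sensor separation must be positive")
    ratio = (abs(dy1) + abs(dy2)) / b
    if ratio > 1:
        raise ValueError("translations are too large for separation b")
    return math.asin(ratio)
```

Under this assumption the result does not depend on where between the sensors the ICR sits: if the sensors are at distances $d_1$ and $d_2$ from the ICR with $d_1 + d_2 = b$, then $\Delta y_1 + \Delta y_2 = (d_1 + d_2)\sin\theta = b\sin\theta$.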

[0017] The methods of tracking linear and rotational movement of a robotic device described herein may be supplemental to other methods used for tracking movement of a robotic device. For example, this method may be used with an optical encoder, gyroscope or both to improve accuracy of the estimated movement or may be used independently in other instances.

[0018] In embodiments, given that the time $\Delta t$ between captured images is known, the linear velocities in the x ($v_x$) and y ($v_y$) directions and angular velocity ($\omega$) of the robotic device may be estimated using the following equations:

$$v_x = \frac{\Delta x}{\Delta t} \qquad v_y = \frac{\Delta y}{\Delta t} \qquad \omega = \frac{\Delta\theta}{\Delta t}$$

wherein $\Delta x$ and $\Delta y$ are the translations in the x and y directions, respectively, that occur over time $\Delta t$ and $\Delta\theta$ is the rotation that occurs over time $\Delta t$.
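These three ratios translate directly into code. The following is a minimal sketch; the function name `estimate_velocities` and the units suggested in the comments are hypothetical choices, not taken from the application.

```python
def estimate_velocities(dx, dy, dtheta, dt):
    """Return (v_x, v_y, omega) from translations and rotation over time dt.

    dx, dy: translations in the x and y directions (e.g. meters)
    dtheta: rotation over the same interval (e.g. radians)
    dt:     time between the two captured images (e.g. seconds)
    """
    if dt <= 0:
        raise ValueError("time between images must be positive")
    return (dx / dt, dy / dt, dtheta / dt)
```

For example, translations of 0.02 and 0.01 length units and a rotation of 0.1 radians over 0.5 seconds give velocities of roughly 0.04 and 0.02 length units per second and an angular velocity of roughly 0.2 radians per second.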

[0019] In embodiments, one optoelectronic sensor may be used to determine linear and rotational movement of a robotic device using the same methods as described above. The use of at least two optoelectronic sensors is particularly useful when the location of the ICR is unknown or the distance between each sensor and the ICR is unknown. However, rotational movement of the robotic device may be determined using one optoelectronic sensor when the distance between the sensor and the ICR is known, such as in the case when the ICR is at the center of the robotic device and the robotic device rotates in place (illustrated in FIG. 1A) or the ICR is at a wheel of the robotic device and the robotic device rotates about the wheel (illustrated in FIGS. 1B and 1C).

[0020] In embodiments, the optoelectronic sensors and light source, such as an LED, may be combined into a single unit device with an embedded processor or may be connected to any other separate processor, such as that of a robotic device. In embodiments, each optoelectronic sensor has its own light source and in other embodiments optoelectronic sensors may share a light source. In embodiments, a dedicated image processor may be used to process images and in other embodiments a separate processor coupled to the optoelectronic sensor(s) may be used, such as a processor or control system of a robotic device. In embodiments, each optoelectronic sensor, light source and processor may be installed as separate units.

[0021] In embodiments, a light source, such as an LED, may not be used.

[0022] In embodiments, two-dimensional optoelectronic sensors may be used. In other embodiments, one-dimensional optoelectronic sensors may be used. In embodiments, one-dimensional optoelectronic sensors may be combined to achieve readings in more dimensions. For example, to achieve results similar to those of two-dimensional optoelectronic sensors, two one-dimensional optoelectronic sensors may be positioned perpendicularly to one another. In some instances, one-dimensional and two-dimensional optoelectronic sensors may be used together.

* * * * *
