Method Of Extracting Warehouse In Port From Hierarchically Screened Remote Sensing Image

QI; Yue; et al.

U.S. patent application number 16/205350, for a method of extracting a warehouse in a port from a hierarchically screened remote sensing image, was filed with the patent office on November 30, 2018 and published on June 6, 2019. This patent application is currently assigned to the Transport Planning and Research Institute Ministry of Transport, which is also the listed applicant. The inventors are Min DONG, Zhuo FANG, Rui LI, Lei MEI, Xiangjun NIE, Yue QI, Lu SUN, Jia TIAN, Dachuan WANG and Jing YANG.

| Field | Value |
|---|---|
| Application Number | 16/205350 |
| United States Patent Application (publication number) | 20190171879 |
| Kind Code | A1 |
| Family ID | 62209112 |
| Publication Date | June 6, 2019 |
| Inventors | QI; Yue; et al. |


Abstract

A method of extracting a warehouse in a port from a hierarchically screened remote sensing image includes the following steps: first, recognizing texture features of a remote sensing image and extracting the edge lines of the port coastline; then, selecting a sample from an arbitrarily selected irregular texture region and forming, through a canonical analysis (CA) transformation, principal component images of different hierarchies, using maximization of the ratio of between-class difference to intra-class difference as the optimization condition; next, extracting the correlation relationships of the warehouse in the port and forming a feature point set of the recognized warehouses to be analyzed; and finally, extracting features of a visually sensitive image through a scene image to obtain a feedback selection of a real scene image, so that the warehouse in the port is extracted accurately.

| Field | Value |
|---|---|
| Inventors | QI; Yue (Beijing, CN); NIE; Xiangjun (Beijing, CN); DONG; Min (Beijing, CN); WANG; Dachuan (Beijing, CN); SUN; Lu (Beijing, CN); TIAN; Jia (Beijing, CN); FANG; Zhuo (Beijing, CN); LI; Rui (Beijing, CN); MEI; Lei (Beijing, CN); YANG; Jing (Beijing, CN) |
| Applicant | Transport Planning and Research Institute Ministry of Transport |
| Assignee | Transport Planning and Research Institute Ministry of Transport, Beijing, CN |
| Family ID | 62209112 |
| Appl. No. | 16/205350 |
| Filed | November 30, 2018 |
| Current U.S. Class | 1/1 |
| Current CPC Class | G06T 2207/20016 20130101; G06K 9/00637 20130101; G06T 7/12 20170101; G06T 7/149 20170101; G06T 7/40 20130101; G06T 2207/10032 20130101; G06T 2207/30184 20130101; G06K 9/4676 20130101; G06T 2207/20116 20130101; G06K 9/6247 20130101; G06T 7/13 20170101; G06T 2207/20024 20130101 |
| International Class | G06K 9/00 20060101 G06K009/00; G06T 7/149 20060101 G06T007/149; G06T 7/40 20060101 G06T007/40; G06T 7/13 20060101 G06T007/13; G06K 9/62 20060101 G06K009/62; G06K 9/46 20060101 G06K009/46 |

Foreign Application Data

| Date | Code | Application Number |
|---|---|---|
| Dec 4, 2017 | CN | 201711256477.6 |

Claims

1. A method of extracting a warehouse in a port from a hierarchically screened remote sensing image, comprising the following steps: S100: extracting a coastline of the port based on an active contour model, successively performing texture feature recognition on any region of a remote sensing image to form a sea area texture region and an irregular texture region, and extracting edge lines of the coastline of the port; S200: extracting principal component images of a plurality of hierarchies using insufficient spectral features, selecting a sample of the irregular texture region, and forming, through a canonical analysis (CA) transformation, principal component images of different hierarchies with different difference values, using maximization of the ratio of a between-class difference to an intra-class difference as the optimization condition; S300: accurately recognizing the warehouse in the port using a spatial relationship feature, extracting a correlation relationship of the warehouse in the port from the principal component images, and forming a feature point set with recognized warehouses to be analyzed; and S400: extracting a feature of a visually sensitive image from the feature point set through a scene image based on winner-take-all (WTA) visual rapid adaptation selection to obtain a feedback selection of a real scene image to extract the warehouse in the port.

2. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 1, wherein in step S100, a gray level co-occurrence matrix method is used for the texture feature recognition, and the texture feature recognition comprises the following steps: S101: selecting a region of the remote sensing image having $L$ gray levels, so that the gray level co-occurrence matrix corresponding to the region is an $L \times L$ matrix; S102: selecting a position $(i, j)$ in the matrix, where $i, j = 1, 2, \ldots, L$, wherein the element at that position counts the pixel pairs in which a first pixel has gray level $i$ and a second pixel, at a fixed distance from the first pixel, has gray level $j$, the two pixels having the fixed positional relationship $\zeta = (DX, DY)$, where $\zeta$ is a displacement and $DX$ and $DY$ are the distances in two directions; S103: extracting, from the gray level co-occurrence matrix, texture feature quantities including an Angular Second Moment (ASM) and a contrast CON, wherein $\mathrm{ASM} = \sum_{i=1}^{L}\sum_{j=1}^{L} P^{2}(i, j)$ and $\mathrm{CON} = \sum_{n=1}^{L} n^{2}\left[\sum_{i=1}^{L}\sum_{j=1}^{L} P(i, j)\right]_{|i-j| = n}$, where $P(i, j)$ is the element of the gray level co-occurrence matrix at position $(i, j)$ and $n = |i - j|$ is the gray level difference.

3. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 1, wherein in step S100, extracting the edge lines of the coast of the port between the sea area texture region and the irregular texture region using a filter algorithm, and optimizing these edge lines using the filter algorithm, comprise the following steps: first, acquiring discrete data of a texture feature, and selecting the lowest center frequency when extracting an image feature in a filter by convolving a discretized Gabor template matrix with an image data matrix; and then carrying out a frequency spectrum superposition calculation to obtain a filtered image.

4. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 1, wherein in step S200, before the CA transformation is executed, the ratio of the between-class variance of an arbitrary data set to the intra-class variance of the data set is maximized according to the linear transformation $Y = TX$, where $T$ is an ideal transformation matrix, so as to ensure the maximum separability of the data set and provide optimized basic data for the CA transformation.

5. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 4, wherein the ideal transformation matrix is obtained as follows: S201: $\sigma_A$ is set as the between-class standard deviation of a class 1 and a class 2 obtained after the CA transformation, $\sigma_{w1}$ and $\sigma_{w2}$ are set as the intra-class standard deviations of the class 1 and the class 2, and $\sigma_w$ is set as the average of $\sigma_{w1}$ and $\sigma_{w2}$; S202: the relationship between the transformed variances and the untransformed scatter matrices is $\sigma_w^{2} = t^{T} S_w t$ and $\sigma_A^{2} = t^{T} S_A t$, where $S_w$ and $S_A$ are the intra-class scatter matrix and the between-class scatter matrix of a given sample, and $t$ is a mapping transformation vector; and S203: the mapping transformation vector $t$ is chosen as an eigenvector associated with the ratio of the between-class variance to the intra-class variance, $\lambda = \sigma_A^{2}/\sigma_w^{2} = (t^{T} S_A t)/(t^{T} S_w t)$; the ratio is extremal, and in particular maximal, when $(S_A - \lambda S_w)\,t = 0$, and collecting all such vectors gives $S_A T = S_w T \Lambda$, where $\Lambda$ is the diagonal matrix of all eigenvalues $\lambda$, and the matrix $T$ composed of all column vectors $t$ is the desired ideal transformation matrix.

6. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 1, wherein in step S300, the extracted correlation relationship of the warehouse in the port includes the point features, line features and plane features among the spatial features, and a hierarchical relationship feature of the correlation relationship is acquired by extracting a hierarchy attribute of the remote sensing image based on a spectral feature of the remote sensing image.

7. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 6, wherein the correlation relationship of the warehouse in the port includes a road relationship, a transshipment square relationship and an enclosure relationship of the warehouse in the port, and the overall attributes are extracted using the spatial correlation relationship of the warehouse in the port.

8. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 1, wherein in step S400, the features of the visually sensitive image include gray level, color, edges, texture and motion; a visual saliency map over the positions of a scene image is obtained from the synthesized features; and the focus of attention is shifted through mutual competition among a plurality of the visual saliency maps and inhibition of return.

9. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 8, wherein the mutual competition and the inhibition of the visual saliency map comprise the following steps: S401: selecting a plurality of parallel and separable feature maps from the feature point set, and recording a hierarchy attribute of each position in a feature dimension on a feature map to obtain the saliency of each position in different feature dimensions; S402: merging the saliencies of different feature maps to obtain a total saliency measure, and guiding a visual attention process; and S403: dynamically selecting, through a winner-take-all (WTA) network, the position with the highest saliency in the saliency map as the Focus Of Attention (FOA), and then repeating the process through inhibition of return until a real scene image is obtained.

10. The method of extracting the warehouse in the port from the hierarchically screened remote sensing image according to claim 1, further comprising a step S500 of tracking a nonlinear filtering feature, comprising: separately extracting the filtering feature obtained in step S100 using the hierarchical image attributes extracted through steps S100, S200, S300 and S400; performing tracking in a remote sensing analysis image according to a texture feature extracted through hierarchical analysis, so as to compensate, by tracking the texture feature, for attributes that cannot be extracted directly and thereby form an interpreted remote sensing image; and comparing the formed interpreted remote sensing image with the real scene image of step S400 to remove inaccurately tracked textures and keep rationally tracked textures.

Description

CROSS REFERENCE TO THE RELATED APPLICATIONS

[0001] The present application claims priority to Chinese Patent Application No. 201711256477.6 filed on Dec. 4, 2017, the entire content of which is incorporated herein by reference.

TECHNICAL FIELD

[0002] The present invention relates to the field of remote sensing technologies, and more particularly to a method of extracting a warehouse in a port from a hierarchically screened remote sensing image.

BACKGROUND

[0003] Remote sensing images are widely used in many fields, and their use will expand as remote sensing image recognition technologies develop. Remote sensing collects information without direct contact with the target, and the collected information can be interpreted, classified and recognized. With remote sensing technology, large quantities of earth observation data can be acquired rapidly, dynamically and accurately.

[0004] As a hub of marine transportation, a port plays an extremely important role and has therefore received increasing attention, becoming an important research direction in marine transportation planning. Establishing and planning a port first requires collecting port data, that is, acquiring the various ground objects in the port and their positions. Logistics warehouses behind the storage yards are important ground objects in a port and are also crucial for the port.

[0005] However, recognizing a warehouse in the rear area of a port from a remote sensing image is difficult. In the prior art, for example, Patent Application No. 201610847354.9 discloses a method of extracting an image of a logistics warehouse behind a storage yard in a port, which includes: (1) applying a Lee sigma edge extraction algorithm to a band of a remote sensing image, the algorithm using a specific edge filter to create two independent edge images from the original image, a bright-edge image and a dark-edge image; (2) performing multi-scale segmentation on the bright-edge image and the dark-edge image together with the remote sensing image to obtain image objects; (3) classifying the image objects having a high blue-band ratio into a class A, and removing from class A, using a brightness mean feature, the objects with relatively low brightness means; and (4) removing from class A, using the Normalized Difference Vegetation Index (NDVI), the objects below a specified threshold to obtain the category of warehouses with blue roofs. Based on the features of the data and of logistics warehouses behind storage yards in a port, that method extracts images accurately with high processing efficiency.

[0006] A review of the foregoing technical solution, the problems actually encountered, and the currently prevalent technical solutions reveals the following major defects:

[0007] (1) First, no method is currently dedicated to the remote sensing recognition of logistics warehouses in the rear area of a port: existing methods are designed to recognize other ground objects and cannot accurately recognize a warehouse in a port according to its features; moreover, because they are not targeted, they process large remote sensing data sets inefficiently;

[0008] (2) Second, most existing data processing methods extract directly from the remote sensing image. The biggest defect of this mode is the heavy workload of processing the original data; in addition, undesired data or data outside the scope of interest are usually included in the calculation, further increasing the complexity of data processing; and

[0009] (3) Last, in existing data processing, most feature processing operations are based on spectral features. Although full-color (panchromatic) remote sensing images are available, their spectral information is insufficient, so advanced computation and interpolation are needed for approximate recovery during recognition. This process usually triggers a correction algorithm; thus, obtaining recognized features close to reality requires a large amount of calculation, and because the correction is iterated during the process, the computation load grows further.

SUMMARY

[0010] A technical solution adopted by the present invention to solve the technical problems is a method of extracting a warehouse in a port from a hierarchically screened remote sensing image, comprising the following steps:

[0011] S100: extracting a coastline of the port based on an active contour model, successively performing texture feature recognition on any region in a remote sensing image to form a sea area texture region and an irregular texture region, and extracting edge lines of the coastline of the port;

[0012] S200: extracting principal component images of a plurality of hierarchies using insufficient spectral features, arbitrarily selecting a sample of the irregular texture region, and forming, through a canonical analysis (CA) transformation, principal component images of different hierarchies with different difference values, using maximization of the ratio of a between-class difference to an intra-class difference as the optimization condition;

[0013] S300: accurately recognizing the warehouse in the port using a spatial relationship feature, extracting a correlation relationship of the warehouse in the port from the principal component images, and forming a feature point set with recognized warehouses to be analyzed;

[0014] S400: extracting a feature of a visually sensitive image from the feature point set through a scene image based on winner-take-all (WTA) visual rapid adaptation selection to obtain a feedback selection of a real scene image to extract the warehouse in the port.

[0015] As a preferred technical solution of the present invention, in step S100, a gray level co-occurrence matrix is used to recognize a texture feature, and the recognition includes the following steps:

[0016] S101: arbitrarily selecting a region of the remote sensing image having $L$ gray levels, in which case the gray level co-occurrence matrix corresponding to the region is an $L \times L$ matrix;

[0017] S102: selecting an arbitrary position $(i, j)$ in the matrix, where $i, j = 1, 2, \ldots, L$; the element at this position counts the pixel pairs in which one pixel has gray level $i$ and the other pixel, at a fixed distance from it, has gray level $j$, the two pixels having the fixed positional relationship $\zeta = (DX, DY)$, where $\zeta$ is a displacement and $DX$ and $DY$ are the distances in two directions;

[0018] S103: extracting, from the gray level co-occurrence matrix, texture feature quantities such as the Angular Second Moment (ASM) and the contrast CON, wherein $\mathrm{ASM} = \sum_{i=1}^{L}\sum_{j=1}^{L} P^{2}(i, j)$ and $\mathrm{CON} = \sum_{n=1}^{L} n^{2}\left[\sum_{i=1}^{L}\sum_{j=1}^{L} P(i, j)\right]_{|i-j| = n}$, where $P(i, j)$ is the element of the gray level co-occurrence matrix at position $(i, j)$ and $n = |i - j|$ is the gray level difference.

[0019] As a preferred technical solution of the present invention, in step S100, extracting the edge lines of the coast of the port between the sea area texture region and the irregular texture region using a filter algorithm, and optimizing these edge lines using the filter algorithm, specifically include the following steps: first, acquiring discrete data of a texture feature, and selecting the lowest center frequency when extracting an image feature in a filter by convolving a discretized Gabor template matrix with an image data matrix; and then carrying out a frequency spectrum superposition calculation to obtain a filtered image.

[0020] As a preferred technical solution of the present invention, in step S200, before the CA transformation is executed, the ratio of the between-class variance of an arbitrary data set to the intra-class variance of the data set is maximized according to the linear transformation $Y = TX$, where $T$ is an ideal transformation matrix, so as to ensure the maximum separability of the data set and provide optimized basic data for the CA transformation.

[0021] As a preferred technical solution of the present invention, a specific algorithm of the ideal transformation matrix is as follows:

[0022] S201: $\sigma_A$ is set as the between-class standard deviation of a class 1 and a class 2 obtained after the transformation, $\sigma_{w1}$ and $\sigma_{w2}$ are set as the intra-class standard deviations of the class 1 and the class 2, and $\sigma_w$ is set as the average of $\sigma_{w1}$ and $\sigma_{w2}$;

[0023] S202: the relationship between the transformed variances and the untransformed scatter matrices is as follows:

[0024] $\sigma_w^{2} = t^{T} S_w t$, $\sigma_A^{2} = t^{T} S_A t$, where $S_w$ and $S_A$ are the intra-class scatter matrix and the between-class scatter matrix of a given sample, and $t$ is a mapping transformation vector; and

[0025] S203: the mapping transformation vector $t$ is chosen as an eigenvector associated with the ratio of the between-class variance to the intra-class variance, $\lambda = \sigma_A^{2}/\sigma_w^{2} = (t^{T} S_A t)/(t^{T} S_w t)$. The ratio is extremal, and in particular maximal, when $(S_A - \lambda S_w)\,t = 0$; collecting all such vectors gives $S_A T = S_w T \Lambda$, where $\Lambda$ is the diagonal matrix of all eigenvalues $\lambda$, and the matrix $T$ composed of all column vectors $t$ is the desired ideal transformation matrix.
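For the two-class case in S201 to S203, the maximizing vector has a well-known closed form (Fisher's linear discriminant): taking $S_A = (m_1 - m_2)(m_1 - m_2)^T$, which is the between-class scatter up to a constant factor that does not change the maximizing direction, the leading solution of $S_A t = \lambda S_w t$ is $t \propto S_w^{-1}(m_1 - m_2)$, where $m_1$ and $m_2$ are the class means. The Python sketch below assumes two-dimensional feature vectors held in plain lists; the function names are hypothetical, and it illustrates the eigenvalue condition rather than reproducing the patent's own implementation.

```python
def mean(vectors):
    """Class mean of 2D feature vectors."""
    n = len(vectors)
    return [sum(v[0] for v in vectors) / n, sum(v[1] for v in vectors) / n]


def scatter(vectors, m):
    """Scatter matrix sum((v - m)(v - m)^T) of one class."""
    s = [[0.0, 0.0], [0.0, 0.0]]
    for v in vectors:
        d = (v[0] - m[0], v[1] - m[1])
        for a in range(2):
            for b in range(2):
                s[a][b] += d[a] * d[b]
    return s


def fisher_direction(class1, class2):
    """Return t = S_w^{-1}(m1 - m2) together with S_w and the rank-one S_A."""
    m1, m2 = mean(class1), mean(class2)
    s1, s2 = scatter(class1, m1), scatter(class2, m2)
    sw = [[s1[a][b] + s2[a][b] for b in range(2)] for a in range(2)]
    diff = (m1[0] - m2[0], m1[1] - m2[1])
    sa = [[diff[a] * diff[b] for b in range(2)] for a in range(2)]
    det = sw[0][0] * sw[1][1] - sw[0][1] * sw[1][0]
    if det == 0:
        raise ValueError("intra-class scatter matrix S_w is singular")
    # Solve S_w t = (m1 - m2) with the explicit 2x2 inverse.
    t = ((sw[1][1] * diff[0] - sw[0][1] * diff[1]) / det,
         (-sw[1][0] * diff[0] + sw[0][0] * diff[1]) / det)
    return t, sw, sa


def variance_ratio(t, sa, sw):
    """lambda = (t^T S_A t) / (t^T S_w t)."""
    def quad(m):
        return sum(t[a] * m[a][b] * t[b] for a in range(2) for b in range(2))
    return quad(sa) / quad(sw)


if __name__ == "__main__":
    class1 = [(1, 2), (2, 3), (3, 3), (2, 1)]
    class2 = [(6, 5), (7, 8), (8, 6), (7, 7)]
    t, sw, sa = fisher_direction(class1, class2)
    print(t, variance_ratio(t, sa, sw))
```

Any other projection direction gives a variance ratio no larger than the one returned for `t`, which is the sense in which the text calls the resulting transformation "ideal".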

[0026] As a preferred technical solution of the present invention, in step S300, the extracted correlation relationship of the warehouse in the port includes the point features, line features and plane features among the spatial features, and a hierarchical relationship feature of the correlation relationship is acquired by extracting a hierarchy attribute of the remote sensing image based on a spectral feature of the remote sensing image.

[0027] As a preferred technical solution of the present invention, the correlation relationship of the warehouse in the port includes a road relationship, a transshipment square relationship and an enclosure relationship of the warehouse in the port, and the overall attributes are extracted using the spatial correlation relationship of the warehouse in the port.
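The text does not specify how the road, transshipment square and enclosure relationships are evaluated. The Python sketch below shows one hypothetical way to use them as a spatial screening rule, assuming every object is approximated by an axis-aligned bounding box and that a warehouse candidate is kept only when it lies inside an enclosure and within a buffer distance of both a road and a transshipment square. The `Box` type, the `keep_warehouses` function and the buffer value are illustrative assumptions, not part of the disclosed method.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Hypothetical axis-aligned bounding box of an extracted object."""
    x0: float
    y0: float
    x1: float
    y1: float


def gap(a, b):
    """Euclidean distance between two boxes (0 if they touch or overlap)."""
    dx = max(b.x0 - a.x1, a.x0 - b.x1, 0.0)
    dy = max(b.y0 - a.y1, a.y0 - b.y1, 0.0)
    return (dx * dx + dy * dy) ** 0.5


def contains(outer, inner):
    """True if inner lies completely inside outer."""
    return (outer.x0 <= inner.x0 and outer.y0 <= inner.y0
            and inner.x1 <= outer.x1 and inner.y1 <= outer.y1)


def keep_warehouses(candidates, roads, squares, enclosures, buffer):
    """Keep candidates satisfying road, transshipment square and enclosure relationships."""
    kept = []
    for c in candidates:
        near_road = any(gap(c, r) <= buffer for r in roads)
        near_square = any(gap(c, s) <= buffer for s in squares)
        enclosed = any(contains(e, c) for e in enclosures)
        if near_road and near_square and enclosed:
            kept.append(c)
    return kept
```

In practice the relationships would be computed on the extracted object outlines rather than on boxes; boxes keep the sketch short.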

[0028] As a preferred technical solution of the present invention, in step S400, the features of the visually sensitive image include gray level, color, edges, texture and motion; a visual saliency map over the positions of a scene image is obtained from the synthesized features; and the focus of attention is shifted through mutual competition among a plurality of the visual saliency maps and inhibition of return.

[0029] As a preferred technical solution of the present invention, the competition and inhibition of the visual saliency map include the following steps:

[0030] S401: selecting a plurality of parallel and separable feature maps from the feature point set, and recording a hierarchy attribute of each position in a feature dimension on a feature map to obtain the saliency of each position in different feature dimensions;

[0031] S402: merging saliencies of different feature maps to obtain a total saliency measure, and guiding a visual attention process; and

[0032] S403: dynamically selecting, through a winner-take-all (WTA) network, the position with the highest saliency in the saliency map as the Focus Of Attention (FOA), and then repeating the process through inhibition of return until a real scene image is obtained.
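Steps S401 to S403 follow the general pattern of saliency-based visual attention models. The Python sketch below is a simplified illustration under stated assumptions: each feature map is min-max normalized, the maps are merged by plain averaging (S402), the winner-take-all step picks the global maximum (S403), and inhibition of return suppresses a square neighborhood of a hypothetical `radius` around each selected focus. It works on a small grid of saliency values rather than on the patent's feature point set.

```python
def normalize(feature_map):
    """Min-max normalize one feature map to [0, 1]; a flat map becomes all zeros."""
    lo = min(min(row) for row in feature_map)
    hi = max(max(row) for row in feature_map)
    span = hi - lo
    return [[(v - lo) / span if span else 0.0 for v in row] for row in feature_map]


def saliency_map(feature_maps):
    """S402 (simplified): average the normalized feature maps into one saliency map."""
    norm = [normalize(m) for m in feature_maps]
    rows, cols = len(norm[0]), len(norm[0][0])
    return [[sum(m[y][x] for m in norm) / len(norm) for x in range(cols)]
            for y in range(rows)]


def attend(saliency, n_fixations, radius=1):
    """S403 (simplified): winner-take-all selection with inhibition of return."""
    s = [row[:] for row in saliency]
    rows, cols = len(s), len(s[0])
    fixations = []
    for _ in range(n_fixations):
        # Winner-take-all: the most salient position becomes the focus of attention.
        _, y, x = max((v, r, c) for r, row in enumerate(s) for c, v in enumerate(row))
        fixations.append((y, x))
        # Inhibition of return: suppress the neighborhood so the focus moves on.
        for yy in range(max(0, y - radius), min(rows, y + radius + 1)):
            for xx in range(max(0, x - radius), min(cols, x + radius + 1)):
                s[yy][xx] = float("-inf")
    return fixations


if __name__ == "__main__":
    intensity = [[0.0] * 5 for _ in range(5)]
    intensity[1][1], intensity[3][3] = 1.0, 0.5
    color = [[0.0] * 5 for _ in range(5)]
    color[3][3] = 0.8
    print(attend(saliency_map([intensity, color]), n_fixations=2))  # prints [(3, 3), (1, 1)]
```

In the example, position (3, 3) wins first because it is salient in both feature maps; once it is inhibited, the focus moves to (1, 1).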

[0033] As a preferred technical solution of the present invention, the method further includes a step S500 of tracking a nonlinear filtering feature, including: separately extracting the filtering feature obtained in step S100 using the hierarchical image attributes extracted by executing the foregoing four steps; performing tracking in a remote sensing analysis image according to a texture feature extracted through hierarchical analysis, so as to compensate, by tracking the texture feature, for attributes that cannot be extracted directly and thus form an interpreted remote sensing image; and comparing the formed interpreted image with the real scene image of step S400 to remove inaccurately tracked textures and keep rationally tracked textures.

[0034] Compared with the prior art, the present invention has the following beneficial effects. By extracting the original attributes using texture features and delineating the edge lines of the port coast with a filter, the present invention promptly removes data that do not need to be processed and thus reduces the amount of data processed in subsequent steps. After this processing, the present invention applies a CA transformation so that the remote sensing image data can be hierarchized, and then hierarchizes the data using the insufficient spectral information according to the spatial position features of a warehouse to obtain principal component images of different hierarchies; it obtains the attributes of individual entities from the spatial relationships among the principal component images and thereby obtains the overall attributes, so the method is highly targeted. Moreover, after forming a feature point set, the present invention accurately acquires the attributes of a warehouse in a port using a WTA visual rapid adaptation selection algorithm, and uses a track optimization algorithm to compensate for distorted data or data not acquired through remote sensing, forming a complete interpreted image; this avoids the use of a correction algorithm and the calculation of an approximate interpolation.

BRIEF DESCRIPTION OF THE DRAWINGS

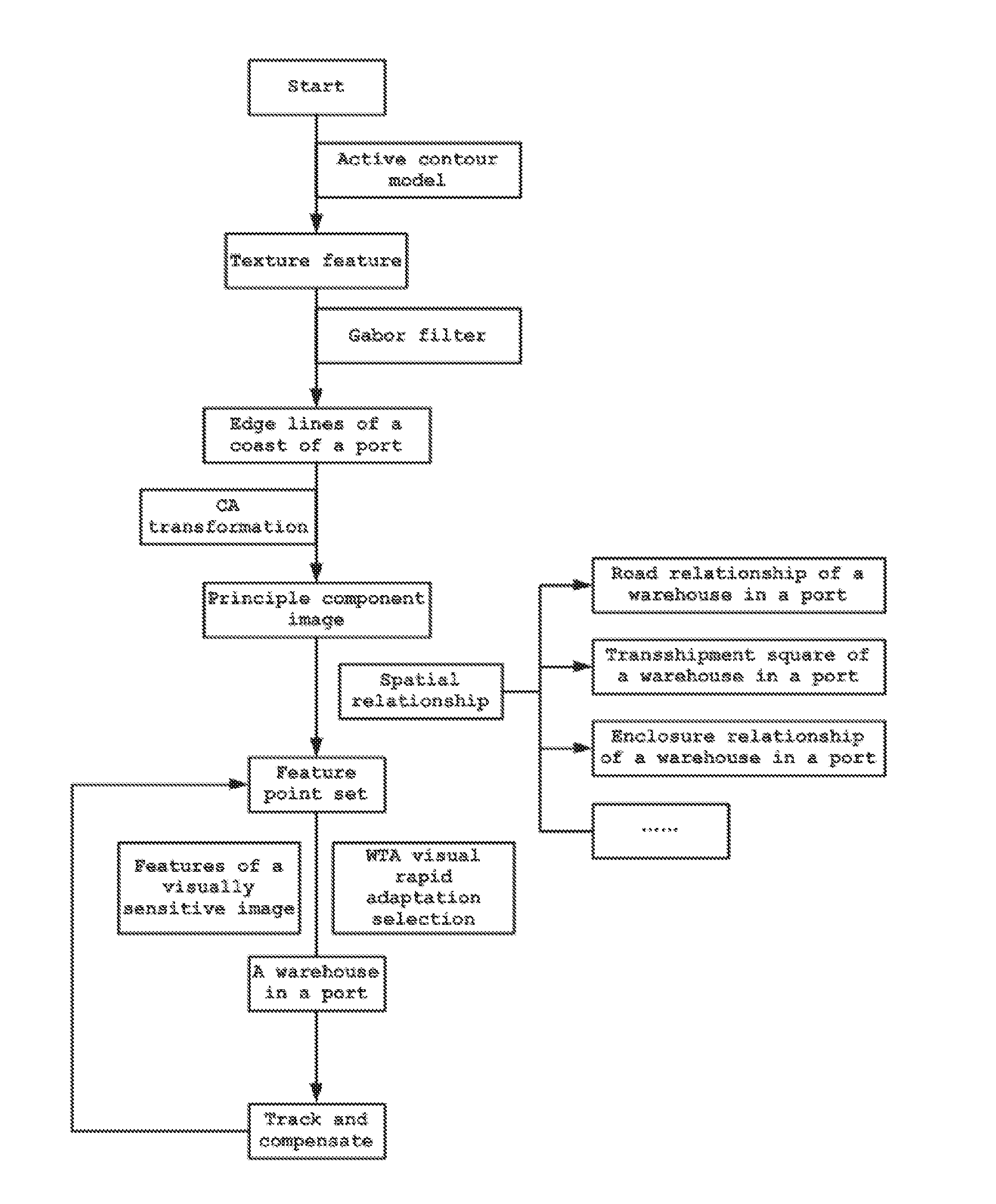

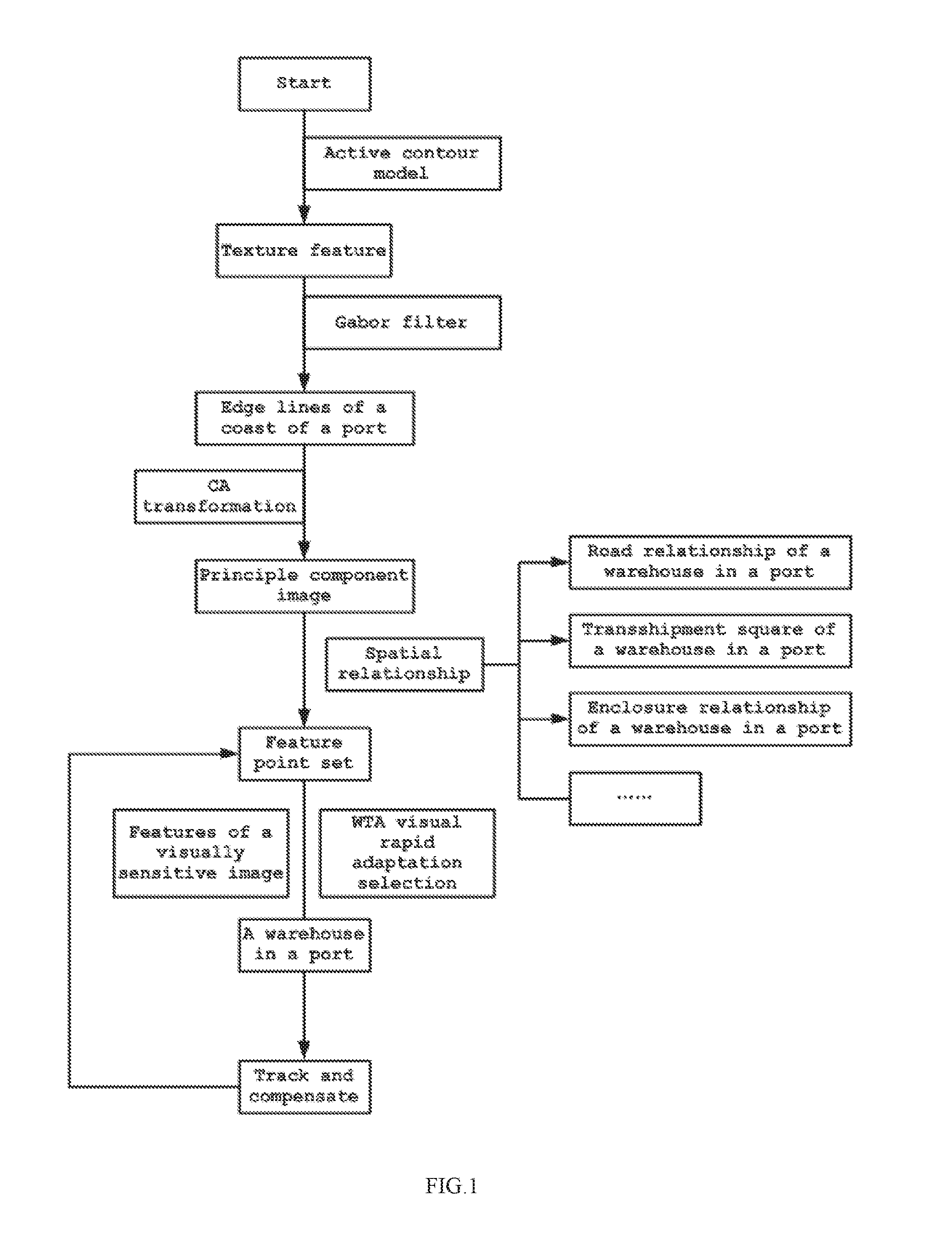

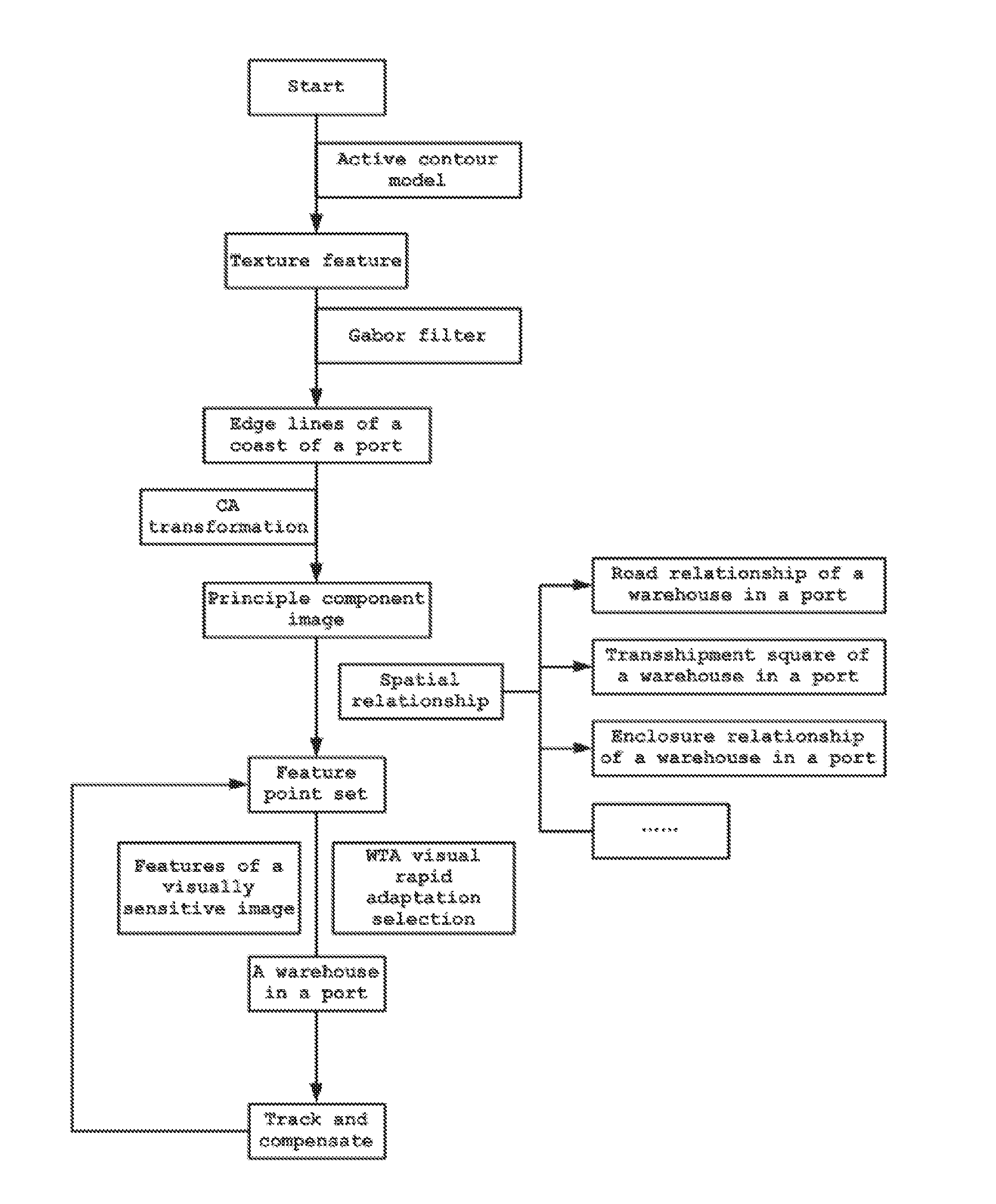

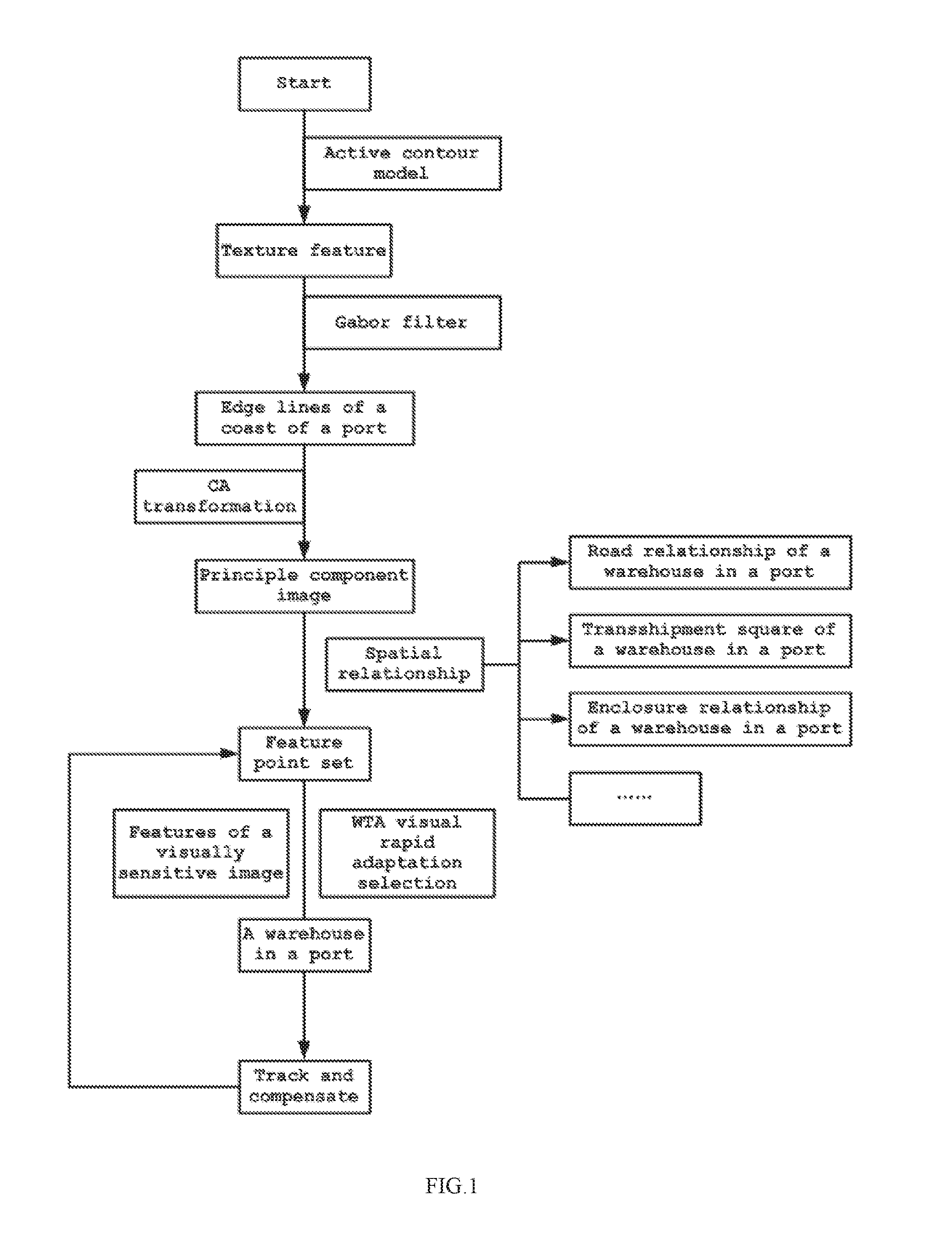

[0035] FIG. 1 is a schematic diagram illustrating a flow according to the present invention;

[0036] FIG. 2 is a diagram illustrating a flow of extracting a feature by a Gabor filter according to the present invention;

[0037] FIG. 3 is a schematic diagram illustrating a structure of a visual model according to the present invention; and

[0038] FIG. 4 is a schematic diagram illustrating a competition and inhibition structure of a visual saliency map according to the present invention.

DETAILED DESCRIPTION OF THE EMBODIMENTS

[0039] The technical solutions of the present invention are described clearly and completely below with reference to the accompanying drawings. Apparently, the embodiments described herein are merely some, rather than all, of the embodiments of the present invention. All other embodiments obtained by those of ordinary skill in the art based on the embodiments described herein without creative work shall fall within the scope of the present invention.

Embodiment

[0040] As shown in FIGS. 1 to 3, the present invention provides a method of extracting a warehouse in a port from a hierarchically screened remote sensing image, comprising the following steps:

[0041] S100: extracting a coastline of the port based on an active contour model, successively performing texture feature recognition on any region in a remote sensing image to form a sea area texture region and an irregular texture region, and extracting edge lines of a coastline of the port using a filter algorithm.

[0042] In step S100, a gray level co-occurrence matrix is used to recognize a texture feature, the specific recognition includes the following steps:

[0043] S101: arbitrarily selecting a region of the remote sensing image having $L$ gray levels, in which case the gray level co-occurrence matrix corresponding to the region is an $L \times L$ matrix;

[0044] S102: selecting an arbitrary position $(i, j)$ in the matrix, where $i, j = 1, 2, \ldots, L$; the element at this position counts the pixel pairs in which one pixel has gray level $i$ and the other pixel, at a fixed distance from it, has gray level $j$, the two pixels having the fixed positional relationship $\zeta = (DX, DY)$, where $\zeta$ is a displacement and $DX$ and $DY$ are the distances in two directions; and

[0045] S103: extracting, from the gray level co-occurrence matrix, texture feature quantities such as the Angular Second Moment (ASM) and the contrast CON, wherein $\mathrm{ASM} = \sum_{i=1}^{L}\sum_{j=1}^{L} P^{2}(i, j)$ and $\mathrm{CON} = \sum_{n=1}^{L} n^{2}\left[\sum_{i=1}^{L}\sum_{j=1}^{L} P(i, j)\right]_{|i-j| = n}$, where $P(i, j)$ is the element of the gray level co-occurrence matrix at position $(i, j)$ and $n = |i - j|$ is the gray level difference.
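The computation in S101 to S103 can be sketched in Python as follows. The sketch assumes gray levels are integers indexed from 0 (the text indexes them from 1), counts each ordered pixel pair once for the displacement $\zeta = (DX, DY)$, and normalizes the counts so that the entries $P(i, j)$ sum to 1; normalization is a common convention but an assumption here. Contrast groups the probabilities by the gray level difference $n = |i - j|$, following the standard co-occurrence definition.

```python
def glcm(image, dx, dy, levels):
    """Normalized gray level co-occurrence matrix for displacement zeta = (dx, dy).

    image is a list of rows of integer gray levels in range(levels).
    Element [i][j] is the fraction of pixel pairs where the first pixel has
    gray level i and the pixel offset by (dx, dy) from it has gray level j.
    """
    rows, cols = len(image), len(image[0])
    counts = [[0] * levels for _ in range(levels)]
    pairs = 0
    for y in range(rows):
        for x in range(cols):
            y2, x2 = y + dy, x + dx
            if 0 <= y2 < rows and 0 <= x2 < cols:
                counts[image[y][x]][image[y2][x2]] += 1
                pairs += 1
    if pairs == 0:
        raise ValueError("displacement leaves no pixel pairs inside the image")
    return [[c / pairs for c in row] for row in counts]


def angular_second_moment(p):
    """ASM = sum_i sum_j P(i, j)^2."""
    return sum(v * v for row in p for v in row)


def contrast(p):
    """CON = sum_n n^2 * (sum of P(i, j) over |i - j| = n).

    n runs over 1..L-1: the n = 0 term is zero and |i - j| never reaches L.
    """
    levels = len(p)
    total = 0.0
    for n in range(1, levels):
        mass = sum(p[i][j] for i in range(levels) for j in range(levels)
                   if abs(i - j) == n)
        total += n * n * mass
    return total


if __name__ == "__main__":
    image = [[0, 0, 1, 1],
             [0, 0, 1, 1],
             [0, 2, 2, 2],
             [2, 2, 3, 3]]
    p = glcm(image, dx=1, dy=0, levels=4)  # zeta = (1, 0): right-hand neighbor
    print(round(angular_second_moment(p), 4), round(contrast(p), 4))  # prints 0.1667 0.5833
```

A homogeneous region concentrates all mass in one cell (ASM = 1, CON = 0), while strongly varying texture spreads the mass and raises the contrast; this difference is what allows the sea area texture region to be separated from the irregular texture region.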

[0046] The gray level co-occurrence matrix mentioned in the foregoing steps is a common means for processing a texture feature of a remote sensing image, and a gray level co-occurrence matrix is used herein for processing a texture feature mainly for the following reasons:

[0047] 1: the gray level co-occurrence matrix computes texture statistics over local image regions, and the extraction method provided herein performs extraction repeatedly on local features; the co-occurrence statistics can therefore be computed on the original remote sensing image and the extracted information used directly in subsequent steps;

[0048] 2: more than one texture feature is generally extracted from a gray level co-occurrence matrix, so a plurality of texture features can serve herein as the basis for multi-hierarchical screening; moreover, some hierarchies commonly lose image information during multi-hierarchical screening, and extracting a plurality of texture features can compensate for the lost information to a certain extent, further improving the quality of image extraction;

[0049] 3: most importantly, most of the texture features extracted using a gray level co-occurrence matrix are correlated with each other; that is, they directly reflect spatial relationships, whose use is emphasized herein and which form the basis for accurately recognizing a warehouse in a port. Besides making subsequent operations easy to implement, avoiding unnecessary calculation and increasing the calculation speed, this pre-analysis of texture features has the further advantage that, although it visually embodies the spatial relationships of textures, it does not depend on a spatial relationship in actual operation. Therefore, as an early data processing stage, the pre-analysis, although only moderately accurate for texture feature recognition, reduces the amount of data processed, increases the operation speed, and lays a foundation for subsequent accurate recognition.

[0050] Regarding the filter algorithm used in step S100, it should also be noted that a common Gabor filter is selected as the filter algorithm in this step, so optimizing the edge lines of the port coast using the filter algorithm specifically means: first, obtaining the discrete data produced in the foregoing step; selecting, by convolving a discretized Gabor template matrix with the image data matrix, the lowest center frequency when extracting an image feature with the filter; and then performing a frequency spectrum superposition calculation to obtain a filtered image.
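A minimal sketch of a discretized Gabor template and its convolution with an image data matrix follows, in Python with plain lists. The kernel uses the real (cosine) part of the Gabor function with an isotropic Gaussian envelope; the kernel size, `sigma` and the orientations are hypothetical parameters, and the "frequency spectrum superposition" step is interpreted here, as an assumption, as summing response magnitudes over orientations at the lowest center frequency.

```python
import math


def gabor_kernel(size, frequency, theta, sigma):
    """Real part of a discretized Gabor template (odd size x size matrix)."""
    half = size // 2
    kernel = []
    for y in range(-half, half + 1):
        row = []
        for x in range(-half, half + 1):
            # Rotate coordinates so the carrier wave runs along orientation theta.
            xr = x * math.cos(theta) + y * math.sin(theta)
            yr = -x * math.sin(theta) + y * math.cos(theta)
            envelope = math.exp(-(xr * xr + yr * yr) / (2 * sigma * sigma))
            row.append(envelope * math.cos(2 * math.pi * frequency * xr))
        kernel.append(row)
    return kernel


def convolve_same(image, kernel):
    """Direct 2D convolution with zero padding; output has the image's size."""
    rows, cols = len(image), len(image[0])
    k = len(kernel)
    half = k // 2
    out = [[0.0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            acc = 0.0
            for ky in range(k):
                for kx in range(k):
                    iy, ix = y - (ky - half), x - (kx - half)
                    if 0 <= iy < rows and 0 <= ix < cols:
                        acc += kernel[ky][kx] * image[iy][ix]
            out[y][x] = acc
    return out


def lowest_frequency_response(image, frequencies, orientations, size=7, sigma=2.0):
    """Filter at the lowest center frequency and superimpose |response| over orientations."""
    f0 = min(frequencies)
    total = [[0.0] * len(image[0]) for _ in image]
    for theta in orientations:
        response = convolve_same(image, gabor_kernel(size, f0, theta, sigma))
        for y, row in enumerate(response):
            for x, v in enumerate(row):
                total[y][x] += abs(v)
    return total
```

The direct convolution loop costs one multiply-add per kernel element per pixel, which is the computational burden discussed in the next paragraph.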

[0051] In practical application of the Gabor filter algorithm, it is well known that a large convolution matrix sharply increases the computational burden, and the present invention faces this problem as well. The convolution therefore needs to be further optimized to overcome the heavy computational burden; as shown in FIG. 2, the optimization is specifically realized as follows:

[0052] first, let the Fourier transforms of two convolution matrices $f_1$ and $f_2$ be $F_1$ and $F_2$, so that $F_1 = \mathrm{fft}(f_1)$ and $F_2 = \mathrm{fft}(f_2)$;

[0053] according to the convolution theorem, $\mathrm{conv}(f_1, f_2) = \mathrm{ifft}(F_1 * F_2)$, where conv denotes convolution, fft denotes the Fourier transform, ifft denotes the inverse Fourier transform, and $F_1 * F_2$ denotes the element-wise product of corresponding elements of the two matrices $F_1$ and $F_2$.
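
The convolution theorem of [0052] and [0053] can be checked numerically with a short NumPy sketch. One detail the text leaves implicit: a plain FFT product gives a circular convolution, so both matrices are zero-padded here to the full output size to obtain the ordinary (linear) convolution. The function names are hypothetical.

```python
import numpy as np

def conv2_direct(f1, f2):
    """Full 2-D linear convolution by definition (reference implementation)."""
    h1, w1 = f1.shape
    h2, w2 = f2.shape
    out = np.zeros((h1 + h2 - 1, w1 + w2 - 1))
    for i in range(h2):
        for j in range(w2):
            # Each kernel tap adds a shifted, scaled copy of f1.
            out[i:i + h1, j:j + w1] += f2[i, j] * f1
    return out

def conv2_fft(f1, f2):
    """conv(f1, f2) = ifft(F1 * F2), zero-padding both inputs to avoid wrap-around."""
    shape = (f1.shape[0] + f2.shape[0] - 1, f1.shape[1] + f2.shape[1] - 1)
    F1 = np.fft.fft2(f1, s=shape)  # `s` zero-pads f1 to the output size
    F2 = np.fft.fft2(f2, s=shape)
    return np.real(np.fft.ifft2(F1 * F2))  # element-wise product, then inverse transform
```

The direct version costs on the order of one multiply-add per image pixel per kernel tap, whereas the FFT version costs on the order of $M \log M$ for $M$ output pixels regardless of kernel size, which is why it pays off for large convolution matrices.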

[0054] Performing the optimization through the foregoing steps markedly reduces the amount of calculation needed to extract multi-hierarchical data, because a convolution in the spatial domain becomes an element-wise product in the frequency domain. This significantly increases computational efficiency, improves the practical processing capacity, and avoids redundant, repeated calculation.

[0055] S200: extracting principal component images of a plurality of hierarchies from limited spectral features: selecting a sample of an arbitrary irregular texture region and forming, through a CA transformation, principal component images of different hierarchies with different difference values, taking maximization of the ratio of the between-class difference to the intra-class difference as the optimization condition.

[0056] The CA (canonical analysis) transformation is a discriminant-analysis method of feature extraction: it extracts features by maximizing the ratio of the between-class variance of a data set to its intra-class variance, thereby ensuring maximum separability of the data set.

[0057] Because the canonical analysis (CA) transformation is an orthogonal linear transformation based on class statistics obtained by analyzing samples, in step S200, before the CA transformation is executed, the maximized ratio of the between-class variance to the intra-class variance of an arbitrary data set is obtained with the linear transformation $Y = TX$, where $T$ is the ideal transformation matrix. This ensures maximum separability of the data set and provides optimized base data for the CA transformation.

[0058] The ideal transformation matrix is computed as follows:

[0059] S201: let $\sigma_A$ be the between-class standard deviation of class 1 and class 2 after the transformation, let $\sigma_{w1}$ and $\sigma_{w2}$ be the intra-class standard deviations of class 1 and class 2, and let $\sigma_w$ be the mean of $\sigma_{w1}$ and $\sigma_{w2}$;

[0060] S202: the variances after the transformation are related to the scatter matrices before the transformation as follows:

[0061] $\sigma_w^2 = t^T S_w t$ and $\sigma_A^2 = t^T S_A t$, where $S_w$ and $S_A$ are the intra-class scatter matrix and the between-class scatter matrix of the given samples, and $t$ is a mapping transformation vector; and

[0062] S203: the mapping transformation vector $t$ is chosen as an eigenvector of the ratio of the between-class variance to the intra-class variance, $\lambda = \sigma_A^2 / \sigma_w^2 = \dfrac{t^T S_A t}{t^T S_w t}$. The stationary points of this ratio, including its maximum, satisfy $(S_A - \lambda S_w)\, t = 0$; collecting all such vectors gives $S_A T = S_w T \Lambda$, where $\Lambda$ is the diagonal matrix of all eigenvalues $\lambda$, and the matrix $T$ whose columns are the vectors $t$ is the desired ideal transformation matrix.
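
Steps S201 to S203 amount to a generalized eigenvalue problem, sketched below with NumPy under two assumptions: the scatter matrices are the usual sums of squared deviations (class-size-weighted for $S_A$), and $S_w$ is positive definite so a Cholesky factor exists. Whitening by that factor turns $S_A t = \lambda S_w t$ into an ordinary symmetric eigenproblem. Function names are hypothetical; for samples stored as rows, the projection $Y = TX$ is computed as `X @ T`.

```python
import numpy as np

def scatter_matrices(X, y):
    """Intra-class S_w and between-class S_A scatter matrices of samples X (n, d) with labels y."""
    mean_all = X.mean(axis=0)
    d = X.shape[1]
    S_w = np.zeros((d, d))
    S_A = np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        S_w += (Xc - mc).T @ (Xc - mc)               # spread of each class around its own mean
        diff = (mc - mean_all)[:, None]
        S_A += len(Xc) * (diff @ diff.T)             # spread of class means around the global mean
    return S_w, S_A

def ca_transform_matrix(S_w, S_A):
    """Solve S_A t = lambda S_w t; return eigenvalues (descending) and T with eigenvectors as columns."""
    L = np.linalg.cholesky(S_w)                      # S_w = L L^T
    L_inv = np.linalg.inv(L)
    M = L_inv @ S_A @ L_inv.T                        # symmetric matrix with the same eigenvalues
    lam, V = np.linalg.eigh(M)
    order = np.argsort(lam)[::-1]                    # most separable axis first
    T = L_inv.T @ V[:, order]                        # map back: t = L^{-T} v
    return lam[order], T
```

With $c$ classes, $S_A$ has rank at most $c - 1$, so at most $c - 1$ eigenvalues are nonzero; several separable axes therefore require samples from several classes.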

[0063] To sum up, by taking maximization of the ratio of the between-class difference to the intra-class difference as the optimization condition, the CA transformation makes the first component, corresponding to the largest eigenvalue, carry the most separable information, the second component the next most, and so on. The CA transformation thus yields a plurality of separable information axes across several dimensions, so that principal component images of a plurality of hierarchies can be extracted from limited spectral features. Note also that the CA transformation reduces the dimensionality of the data space while increasing class separability, which lowers the complexity of the actual operation.

[0064] In the foregoing steps, the CA transformation makes the data contained in the remote sensing image separable. Because the transformed data are highly separable, they can be extracted hierarchically without loss in subsequent transformations, so the principal component images of the original remote sensing image can be extracted hierarchically.
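
Applying the transformation to a multiband image to obtain per-hierarchy component images is mostly a reshaping exercise. The sketch below assumes an (H, W, B) image cube and a transformation matrix `T` of shape (B, B') whose columns are sorted by decreasing eigenvalue, as S203 describes; `component_images` and its parameter `k` (how many hierarchies to keep) are hypothetical.

```python
import numpy as np

def component_images(cube, T, k):
    """Project an (H, W, B) image onto the first k columns of T, giving (H, W, k) component images."""
    if k > T.shape[1]:
        raise ValueError("k exceeds the number of transformation axes")
    h, w, b = cube.shape
    pixels = cube.reshape(-1, b).astype(np.float64)  # one row per pixel, one column per band
    Y = pixels @ T[:, :k]                            # band j of the output is t_j^T x for every pixel x
    return Y.reshape(h, w, k)                        # component image j is Y[..., j]
```

Because the columns of `T` are ordered by separability, `component_images(cube, T, 1)` returns the single most separable hierarchy and larger `k` adds progressively less separable ones.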

[0065] Step S300: accurately recognizing the warehouse in the port using spatial relationship features: extracting the correlation relationships of the warehouse in the port from the principal component images, where these relationships include a road relationship, a transshipment square relationship, and an enclosure relationship; extracting the attributes of the whole using these spatial correlation relationships; and forming a feature point set from the recognized warehouses to be analyzed.

[0066] Spatial features, which are seldom used in remote sensing image processing, mainly take the form of relationship patterns in a remote sensing image; that is, in a specific remote sensing image analysis, the final recognition of a target is achieved through correlated features. In a remote sensing image, the attributes of an entity that stands in a certain relationship are hard to recognize by observing the entity in isolation. Once the correlated spatial relationships are introduced, however, the attributes of the entity can be inferred from them, and even the attributes of a whole composed of several entities can be recognized from structural and relationship features. For example, a large roof adjacent to both a road and a transshipment square is more likely to be a port warehouse than the same roof seen in isolation.

[0067] In step S300, the extracted correlation relationships of the warehouse in the port comprise the point, line, and plane features among the spatial features; a hierarchical relationship feature of these relationships is obtained by extracting a hierarchy attribute of the remote sensing image from its spectral features.
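
The relationship test of S300 can be pictured with a heavily simplified, hypothetical sketch. It assumes that binary masks for one candidate building and for the road, transshipment-square, and enclosure (fence) classes are already available, and it treats "related" as "touching a ring of `radius` pixels around the footprint". The rule that a warehouse must border both a road and a square (`REQUIRED`) and the use of centroids as feature points are our assumptions; the document does not give its decision rule.

```python
import numpy as np

def dilate(mask, radius):
    """Binary dilation by a (2r+1) x (2r+1) square, built from shifted copies (no SciPy)."""
    m = mask.astype(bool)
    h, w = m.shape
    padded = np.pad(m, radius)  # constant padding with False
    out = np.zeros((h, w), dtype=bool)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= padded[dy:dy + h, dx:dx + w]
    return out

def relation_features(building, road, square, fence, radius=2):
    """Which context layers fall inside a ring of `radius` pixels around a candidate footprint."""
    b = building.astype(bool)
    ring = dilate(b, radius) & ~b
    return {
        "road": bool(np.any(ring & road.astype(bool))),
        "square": bool(np.any(ring & square.astype(bool))),
        "enclosure": bool(np.any(ring & fence.astype(bool))),
    }

REQUIRED = ("road", "square")  # hypothetical rule: adjacent to a road and a transshipment square

def feature_point_set(candidates, road, square, fence, radius=2):
    """Centroids (row, col) of candidates whose context satisfies every REQUIRED relation."""
    points = []
    for mask in candidates:
        rel = relation_features(mask, road, square, fence, radius)
        if all(rel[name] for name in REQUIRED):
            rows, cols = np.nonzero(mask)
            points.append((float(rows.mean()), float(cols.mean())))
    return points
```

Point, line, and plane features map naturally onto this setup: a candidate footprint is a plane, a road is a line, and the retained centroids form the point set.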

[0068] S400: extracting features of the visually sensitive image from a scene image based on winner-take-all (WTA) rapid adaptive visual selection, to obtain a feedback selection of the real scene image and thereby extract the warehouse in the port.

[0069] As shown in FIG. 4, in step S400 the visually sensitive features include gray level, color, edges, texture, and motion. A visual saliency map giving the saliency of each position in the scene image is obtained from these combined features, and the mutual competition of the visual saliency maps, together with inhibition of return, shifts the focus of attention.

[0070] The competition and inhibition of the visual saliency maps comprise the following steps:

[0071] S401: selecting a plurality of parallel, separable feature maps from the feature point set, and recording on each feature map the hierarchy attribute of every position in that feature dimension, to obtain the saliency of each position in the different feature dimensions;

[0072] S402: merging the saliencies of the different feature maps into a total saliency measure that guides the visual attention process; and

[0073] S403: dynamically selecting, through a WTA network, the position with the highest saliency in the saliency map as the focus of attention (FOA), and then repeating the process with inhibition of return until the real scene image is obtained.
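
Steps S401 to S403 follow the familiar winner-take-all and inhibition-of-return pattern, sketched below in NumPy. The min-max normalization, the plain mean used to merge feature maps, the circular inhibition region of radius `ior_radius`, and stopping after a fixed number of fixations `n_fix` are all assumptions; the document stops "until the real scene image is obtained" without defining a criterion.

```python
import numpy as np

def normalize(m):
    """Rescale a feature map to [0, 1] so that different features are comparable."""
    lo, hi = m.min(), m.max()
    return np.zeros_like(m, dtype=np.float64) if hi == lo else (m - lo) / (hi - lo)

def saliency_map(feature_maps):
    """S402: merge per-feature saliencies into one total saliency measure (plain mean)."""
    return np.mean([normalize(m) for m in feature_maps], axis=0)

def wta_fixations(saliency, n_fix, ior_radius):
    """S403: pick the most salient position (winner-take-all), inhibit it, and repeat."""
    s = saliency.astype(np.float64).copy()
    rows, cols = np.indices(s.shape)
    fixations = []
    for _ in range(n_fix):
        r, c = np.unravel_index(np.argmax(s), s.shape)
        fixations.append((int(r), int(c)))
        # Inhibition of return: set a disc around the winner to -inf so even
        # zero-saliency positions elsewhere outrank it and the focus moves on.
        s[(rows - r) ** 2 + (cols - c) ** 2 <= ior_radius ** 2] = -np.inf
    return fixations
```

With two feature maps that both peak at one position and weakly at another, the first fixation lands on the shared peak and, once that region is inhibited, the second fixation moves to the weaker peak.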

[0074] In addition, the method further includes a step S500 of tracking a nonlinear filtering feature. The filtering feature obtained in step S100 is extracted separately using the hierarchical image attributes produced by the foregoing four steps. Tracking is then performed in the remote sensing analysis image according to the texture features extracted by the hierarchical analysis, so that attributes that cannot be extracted directly are compensated by texture-feature tracking, forming an interpreted remote sensing image. The interpreted image is then compared with the real scene image of step S400 to remove inaccurately tracked textures and keep plausible ones.
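
The final comparison in S500 can be illustrated by a hypothetical screening rule: keep a tracked texture region when a sufficient fraction of its pixels is confirmed by a reference mask derived from the real scene image, and remove it otherwise. The binary-mask representation, the "support" measure, and the 0.5 threshold are assumptions for illustration, not the document's stated criterion.

```python
import numpy as np

def support(region, reference):
    """Fraction of a tracked region's pixels that the reference scene mask confirms."""
    region = region.astype(bool)
    n = region.sum()
    return 0.0 if n == 0 else float((region & reference.astype(bool)).sum() / n)

def screen_tracked_textures(tracked, reference, threshold=0.5):
    """Split tracked regions into kept (plausible) and removed (inaccurate) lists."""
    kept, removed = [], []
    for region in tracked:
        (kept if support(region, reference) >= threshold else removed).append(region)
    return kept, removed
```

Measuring support relative to the region's own area, rather than intersection over union, avoids penalizing a small region simply because the reference mask also covers many other objects.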

[0075] In conclusion, the main features of the present invention are as follows. By extracting the original attributes from texture features and delineating the edge lines of the coast of a port with a filter, the invention promptly removes data that need not be processed, reducing the amount of data handled downstream. It then applies a CA transformation so that the remote sensing image data can be hierarchized, hierarchizes the data from limited spectral information according to the spatial position features of warehouses to obtain principal component images of different hierarchies, and derives the attributes of single entities, and from them the attributes of the whole, from the spatial relationships among the principal component images; the invention is therefore highly targeted. Moreover, after forming the feature point set, the invention accurately acquires the attributes of the warehouse in the port with a WTA rapid adaptive visual selection algorithm, and uses a tracking optimization algorithm to compensate for distorted data or data not captured by remote sensing, forming a complete interpreted image without resorting to a correction algorithm or approximate interpolation.

[0076] It is apparent to those skilled in the art that the present invention is not limited to the details of the foregoing exemplary embodiments and can be realized in other specific forms without departing from its spirit or essential features. The embodiments should therefore be regarded in all respects as exemplary and not limiting; the scope of the present invention is defined by the appended claims rather than by the foregoing description, and the invention is intended to cover all variations falling within the meaning and range of equivalents of the claims. Any reference sign in the claims should not be construed as limiting the claim concerned.

* * * * *
