Method For Predicting A Motion Of An Object

Wuthishuwong; Chairit ; et al.

U.S. patent application number 16/089381, for a method for predicting a motion of an object, was published on 2019-04-18. The applicant listed for this patent is NEC Laboratories Europe GmbH. Invention is credited to Francesco Alesiani, Chairit Wuthishuwong.

| Application Number | 20190113603 16/089381 |
|---|---|
| Family ID | 55802334 |
| Publication Date | 2019-04-18 |
| United States Patent Application | 20190113603 |
| Kind Code | A1 |
| Wuthishuwong; Chairit ; et al. | April 18, 2019 |

METHOD FOR PREDICTING A MOTION OF AN OBJECT

Abstract

A method for predicting a motion of an object includes predicting the motion of the object based on predicted future sensor readings. The predicted future sensor readings are computed based on current measurement data of one or more range sensors.

| Inventors: | Wuthishuwong; Chairit; (Wuppertal, DE) ; Alesiani; Francesco; (Heidelberg, DE) |
|---|---|
| Applicant: | NEC Laboratories Europe GmbH |
| Family ID: | 55802334 |
| Appl. No.: | 16/089381 |
| Filed: | March 31, 2016 |
| PCT Filed: | March 31, 2016 |
| PCT NO: | PCT/EP2016/057153 |
| 371 Date: | September 28, 2018 |
| Current U.S. Class: | 1/1 |
| Current CPC Class: | G01C 21/34 20130101; G01S 13/726 20130101; G05D 1/024 20130101; G01S 17/931 20200101; G01S 7/4808 20130101 |
| International Class: | G01S 7/48 20060101 G01S007/48; G01S 17/93 20060101 G01S017/93 |

Claims

1: A method for predicting a motion of an object, the method comprising: predicting the motion of the object based on predicted future sensor readings, wherein the predicted future sensor readings are computed based on current measurement data of one or more range sensors.

2: The method according to claim 1, wherein the motion of the object is predicted based on historic measurement data of the one or more range sensors.

3: The method according to claim 1, wherein a point clustering is performed on the current measurement data and on the historic measurement data of the one or more range sensors and current object state data based on a distance between two points resulting in one or more clusters, and wherein future clusters are predicted and the object is identified in a predicted cluster to predict the motion of the object.

4: The method according to claim 1, wherein at least two different prediction methods are used to compute method-dependent predicted future sensor readings to obtain the predicted future sensor readings.

5: The method according to claim 4, wherein the method-dependent predicted future sensor readings of the at least two different prediction methods are weighted and combined to obtain the predicted future sensor readings.

6: The method according to claim 1, wherein the predicted future sensor readings are corrected during computing by using Gaussian Process Approximation.

7: The method according to claim 3, wherein a future cluster of the future clusters is predicted using a Kalman filter for a movement of a cluster of the clusters.

8: The method according to claim 1, wherein motion feedback of the object is included when computing the predicted motion of the object.

9: The method according to claim 1, wherein the range sensors are provided in form of LIDAR sensors.

10: A computing entity for predicting a motion of an object, the computing entity comprising: an input interface for receiving data of one or more range sensors; an output interface for outputting a predicted motion of the object; and a computation device comprising a processor and a memory being configured to predict the motion of the object based on predicted future sensor readings, wherein the predicted future sensor readings are computed based on current measurement data of the one or more range sensors.

11: A non-transitory computer readable medium storing a program configured to cause a computer to execute a method comprising predicting a motion of an object, wherein the motion of the object is predicted based on predicted sensor readings, wherein the predicted sensor readings are computed based on data of one or more range sensors at a current time.

Description

CROSS-REFERENCE TO PRIOR APPLICATION

[0001] This application is a U.S. National Stage Application under 35 U.S.C. § 371 of International Application No. PCT/EP2016/057153 filed on Mar. 31, 2016. The International Application was published in English on Oct. 5, 2017, as WO 2017/167387 A1 under PCT Article 21(2).

FIELD

[0002] The present invention relates to predicting a motion of an object.

BACKGROUND

[0003] Autonomous and automated driving requires defining a safe vehicle trajectory that accomplishes the driving task while avoiding static and dynamic obstacles. The prediction capability of the autonomous driving component is also important for meeting smoothness and comfort requirements.

[0004] Conventional prediction or estimation techniques account for inaccurate and noisy measurements. An example of such a technique is the Kalman-Bucy filter. The conventional technique for object motion prediction is to estimate the motion of the object directly from its current and historical motion after mapping the raw measurement data into local or global coordinates. Because most conventional techniques assume that the measurements are unreliable, they first attempt to improve the noisy measurements before determining the object motion, and then use only that outcome to predict the future object motion.

[0005] Conventional motion planning generates the trajectory in real time from real-time feedback data. However, knowing the object motion provides information for resolving potential conflicts that might occur in the near future. With this information, a robot, for example, can produce a continuous trajectory over a limited future time horizon.

SUMMARY

[0006] An embodiment of the present invention provides a method for predicting a motion of an object that includes predicting the motion of the object based on predicted future sensor readings. The predicted future sensor readings are computed based on current measurement data of one or more range sensors.

BRIEF DESCRIPTION OF THE DRAWINGS

[0007] The present invention will be described in even greater detail below based on the exemplary figures. The invention is not limited to the exemplary embodiments. All features described and/or illustrated herein can be used alone or combined in different combinations in embodiments of the invention. The features and advantages of various embodiments of the present invention will become apparent by reading the following detailed description with reference to the attached drawings which illustrate the following:

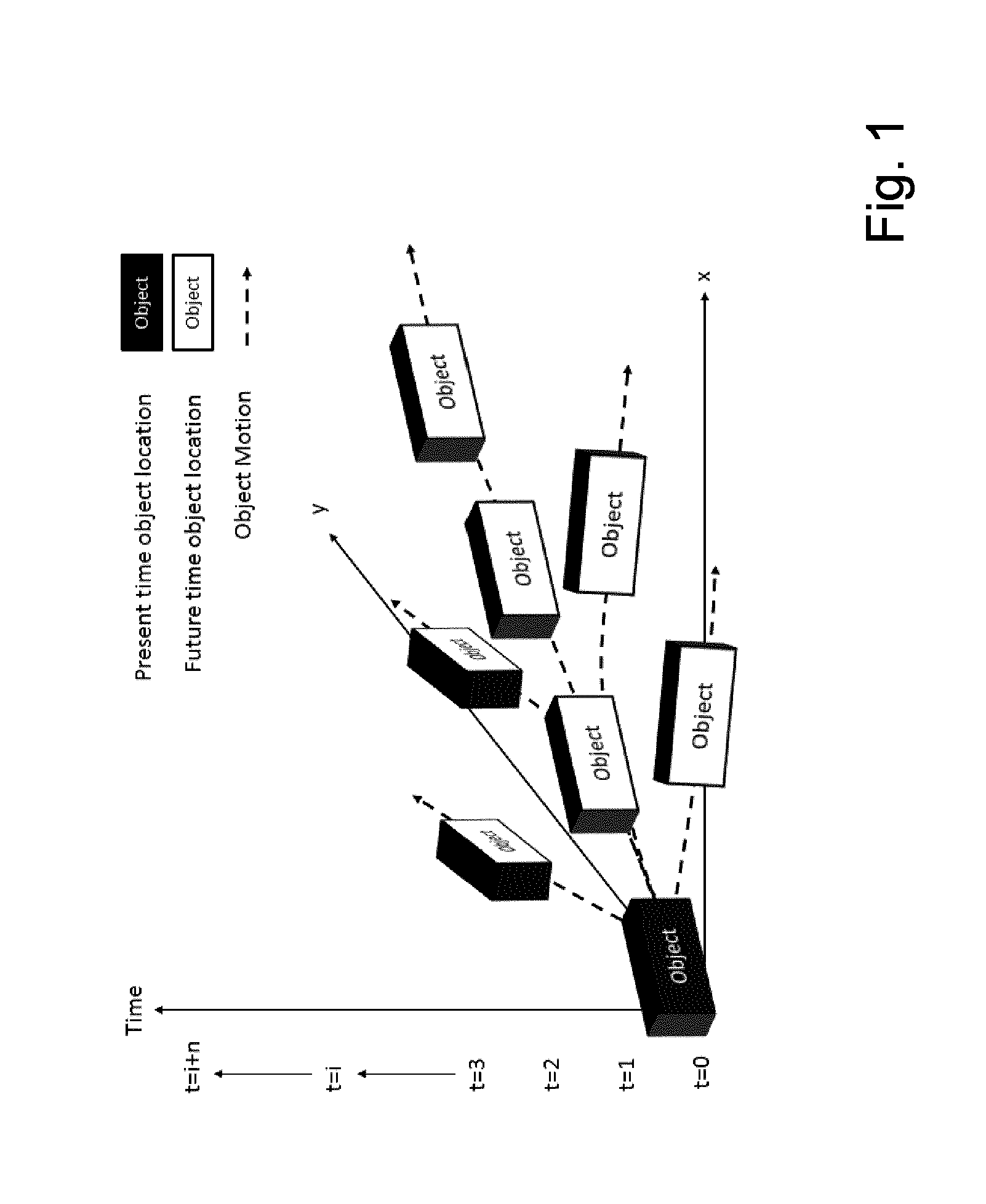

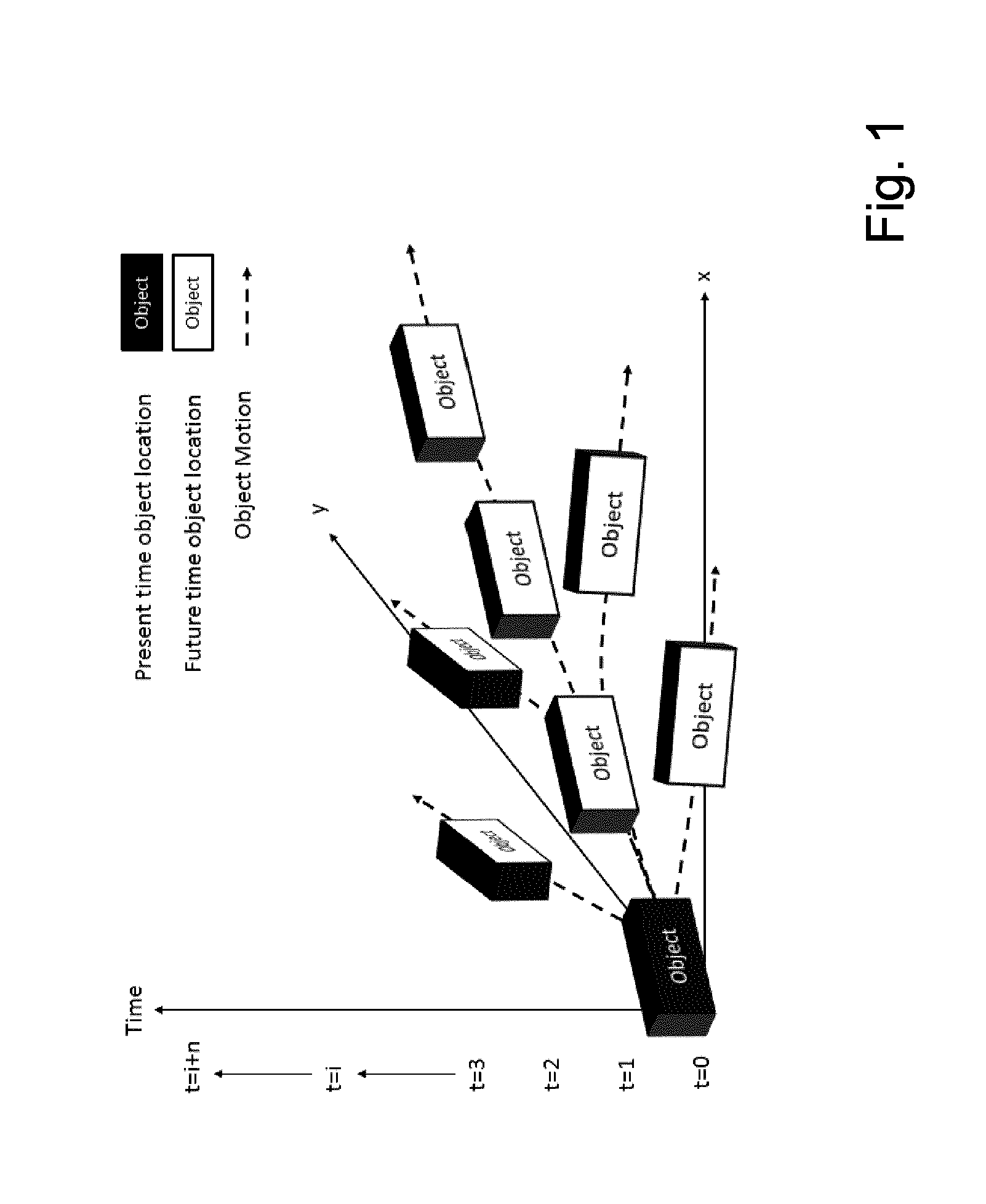

[0008] FIG. 1 shows a time variant object motion;

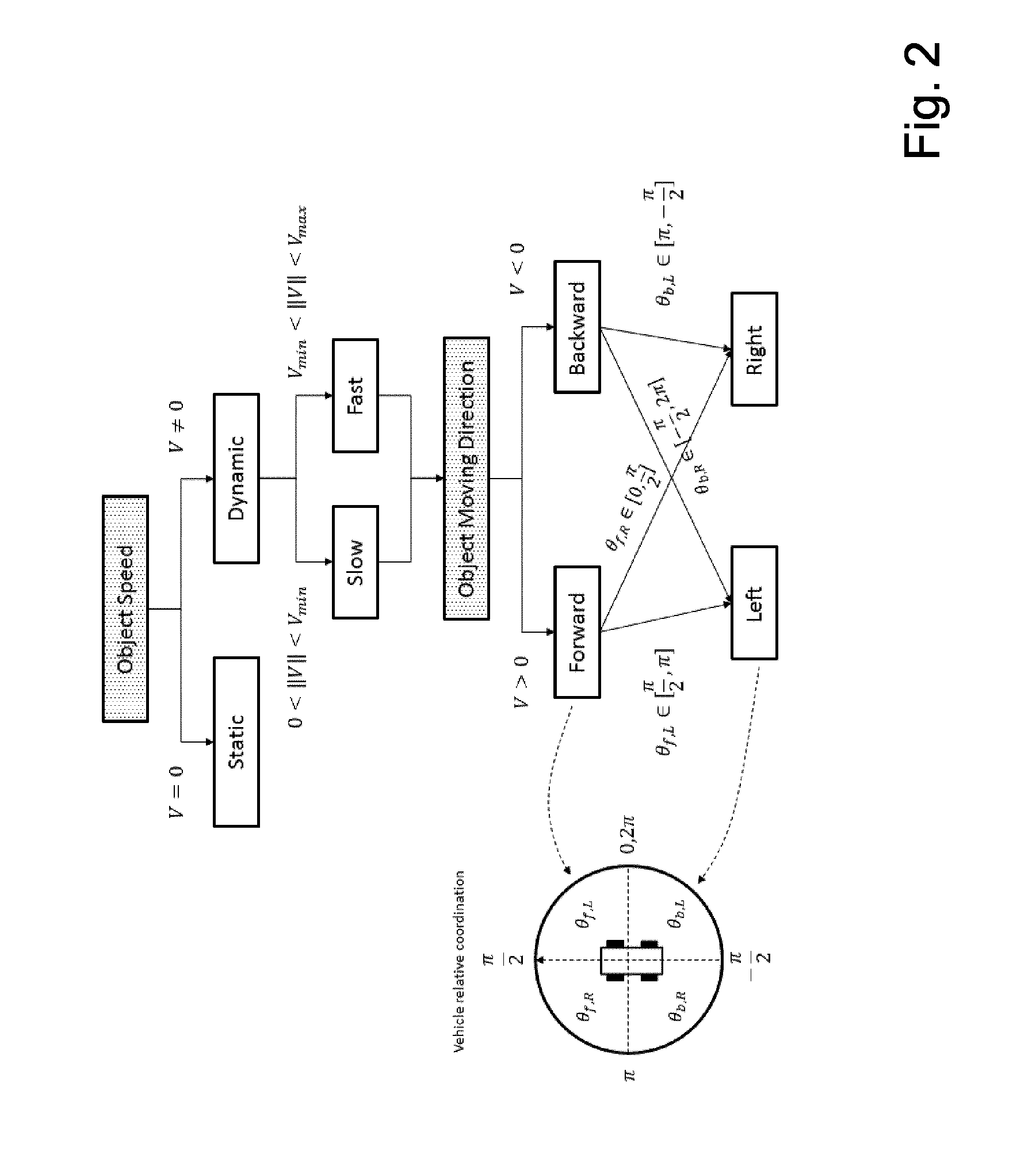

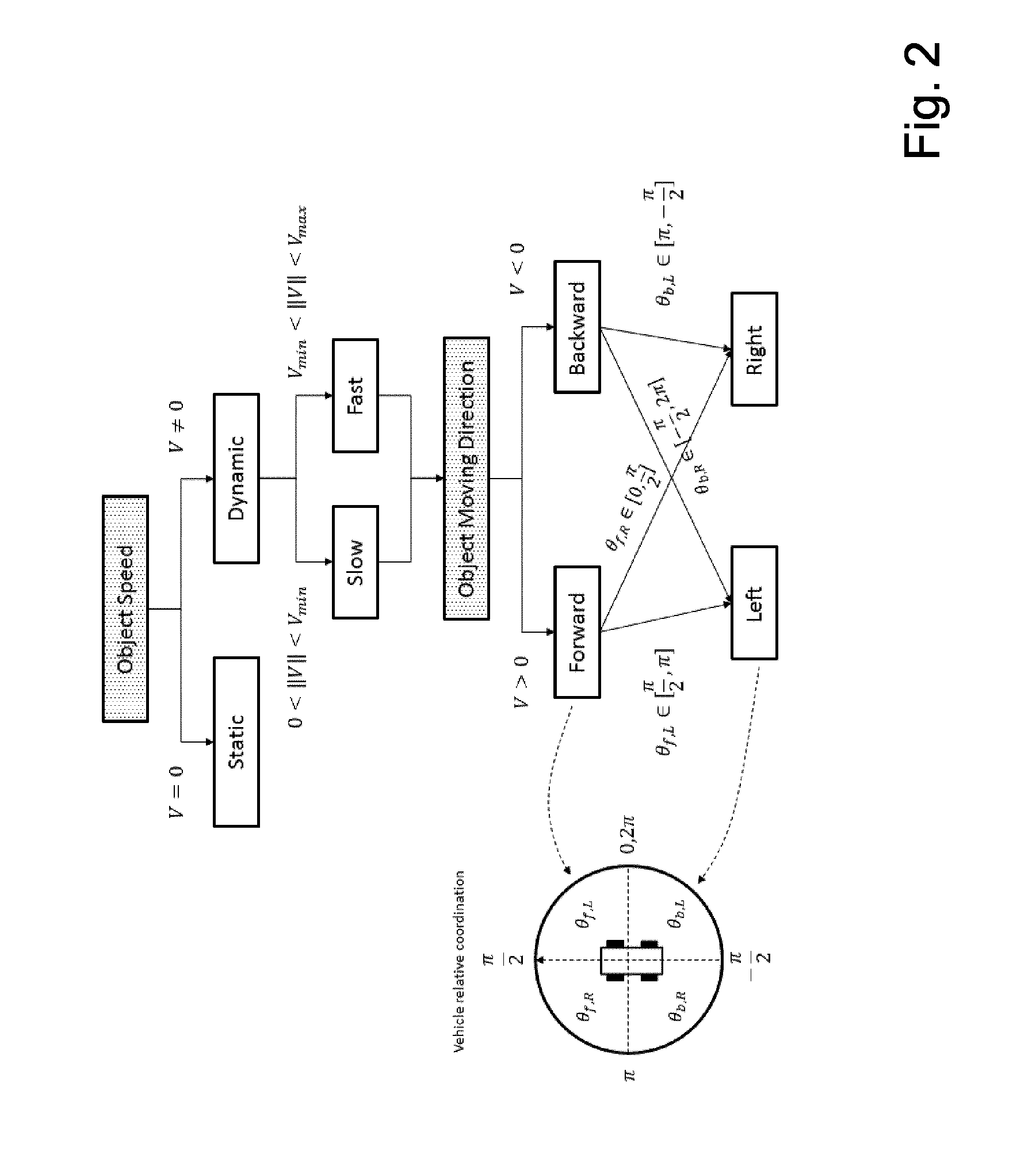

[0009] FIG. 2 shows a method for determining object motion and steering;

[0010] FIG. 3 shows a system according to an embodiment of the present invention;

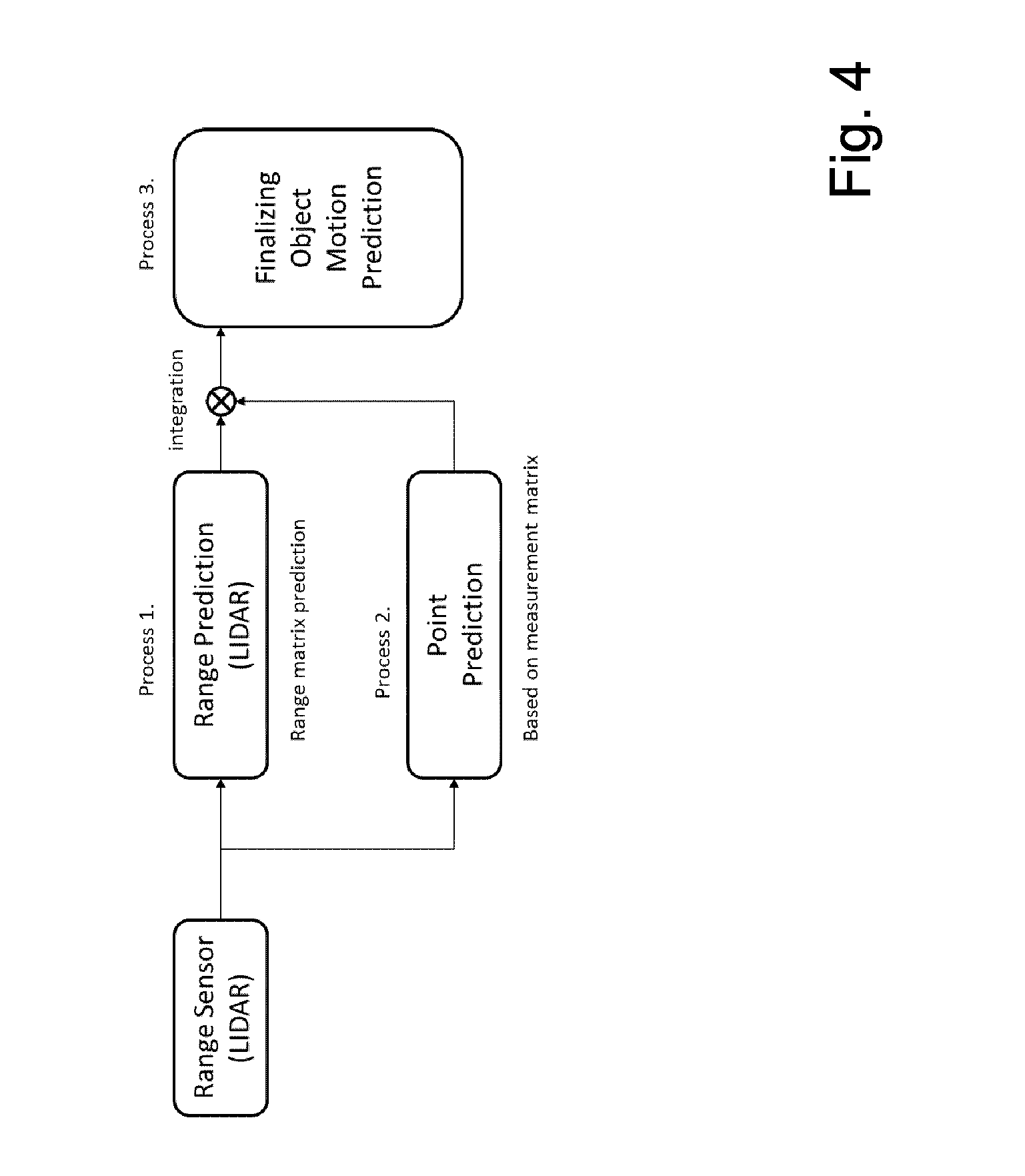

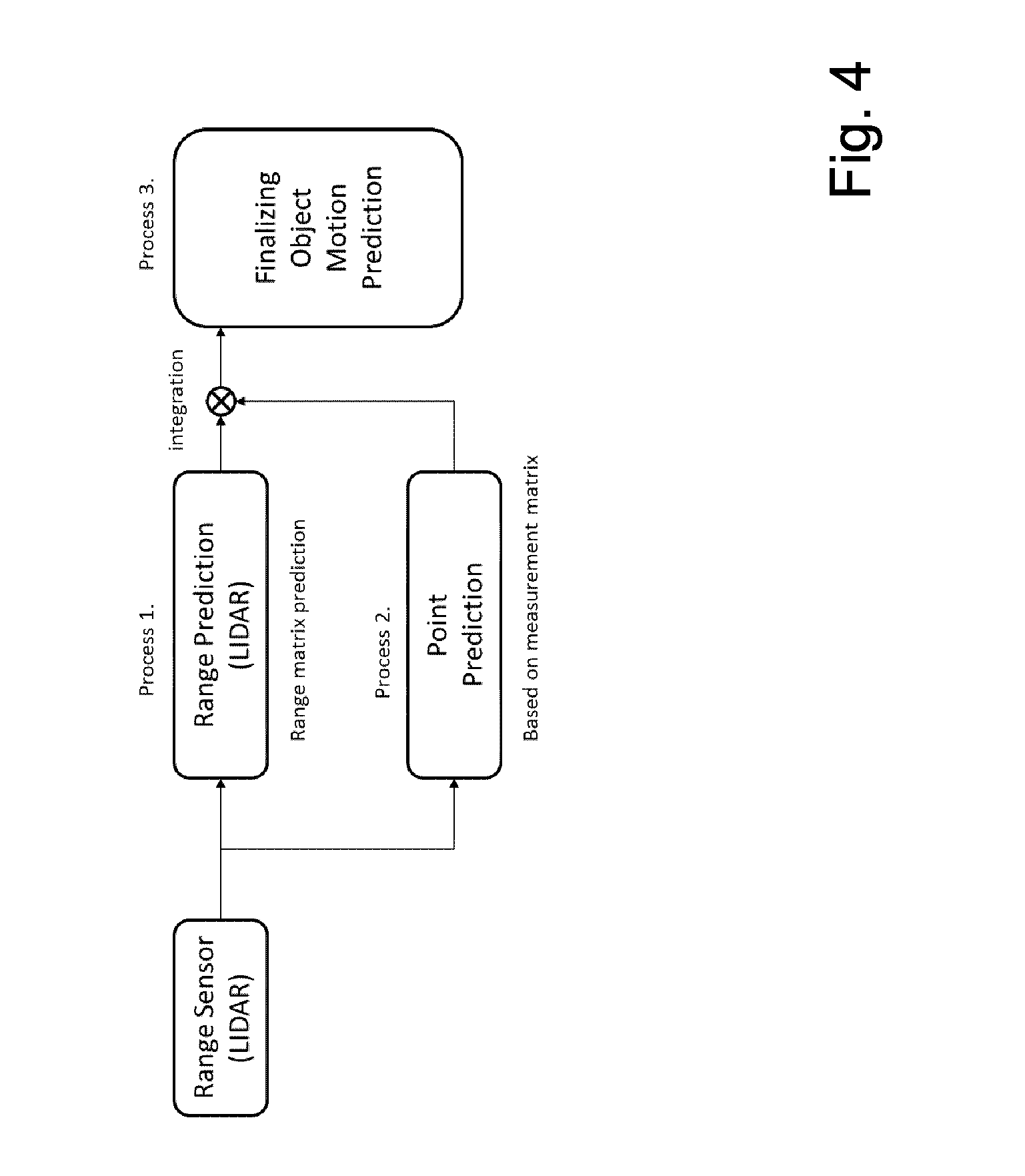

[0011] FIG. 4 shows part of the system according to a further embodiment of the present invention;

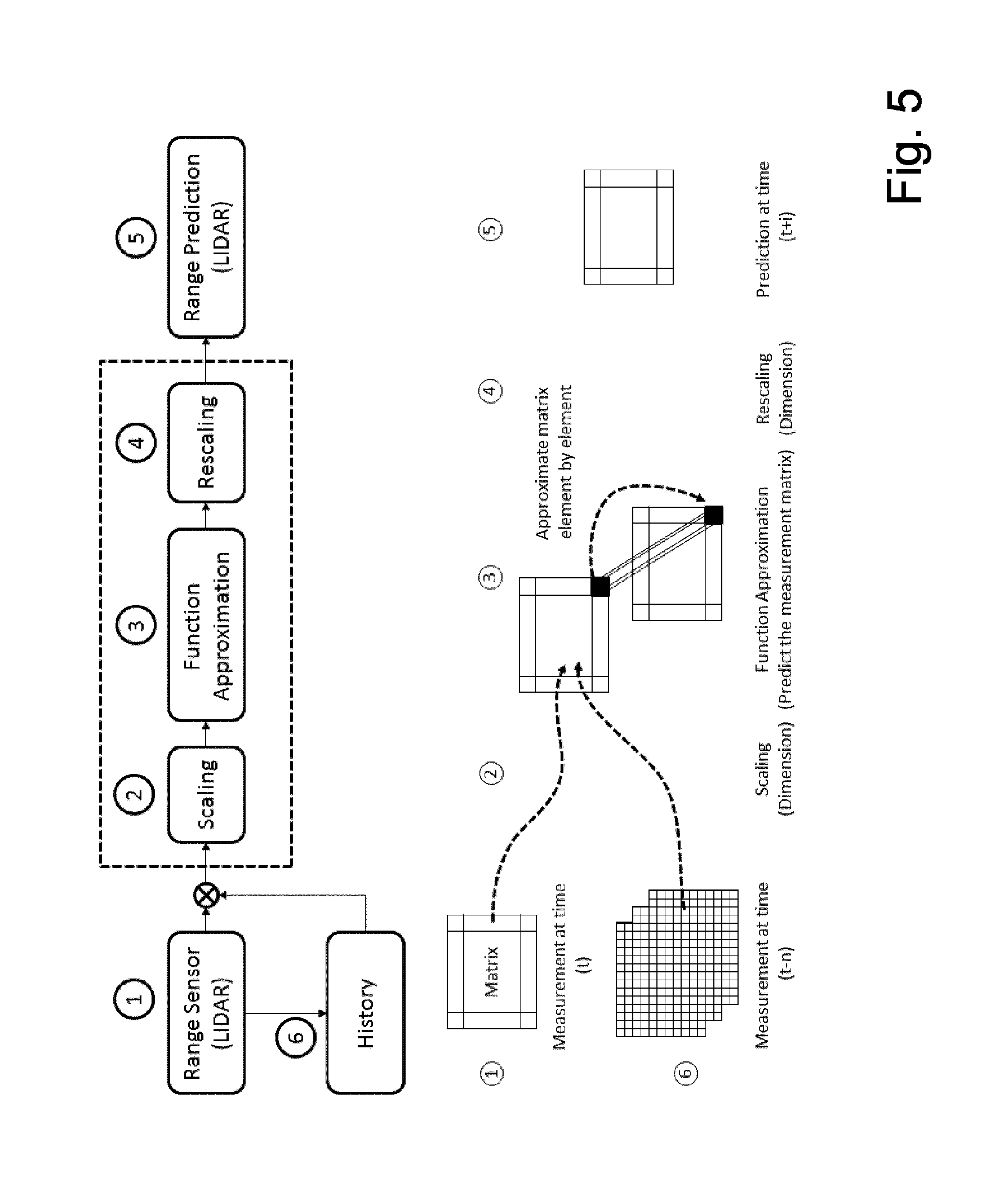

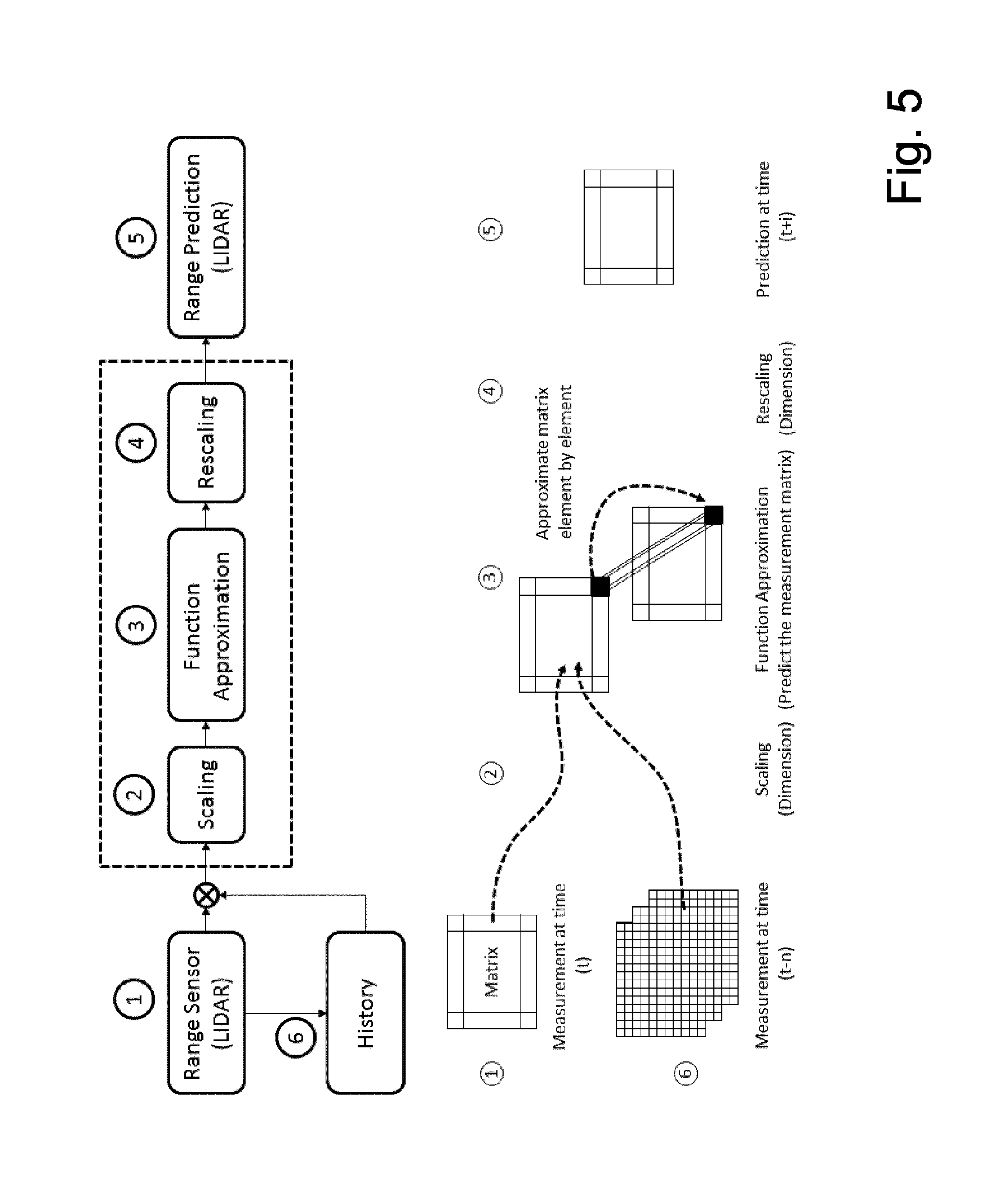

[0012] FIG. 5 shows part of a method according to an embodiment of the present invention;

[0013] FIG. 6 shows part of a method according to a further embodiment of the present invention;

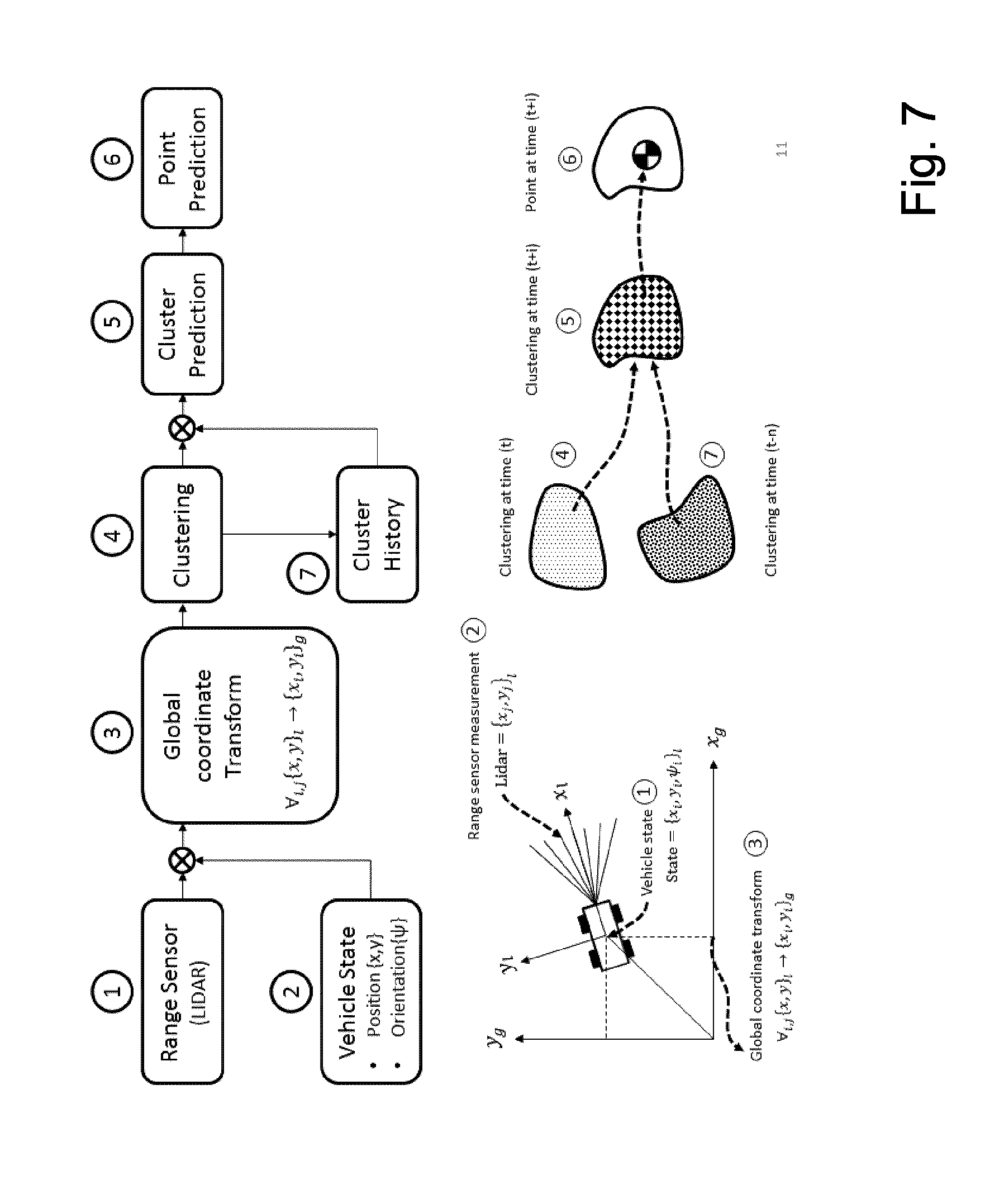

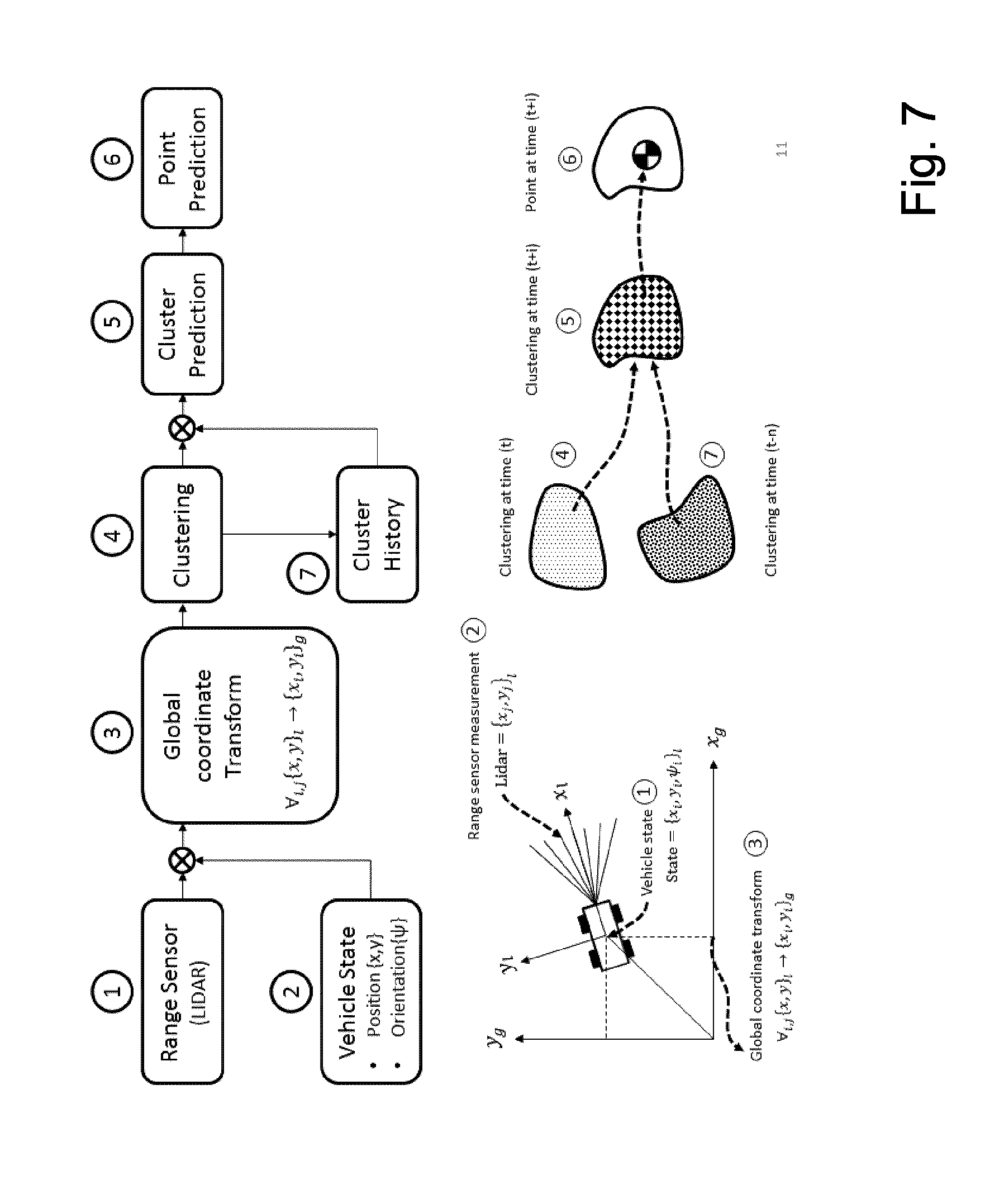

[0014] FIG. 7 shows part of a method according to a further embodiment of the present invention;

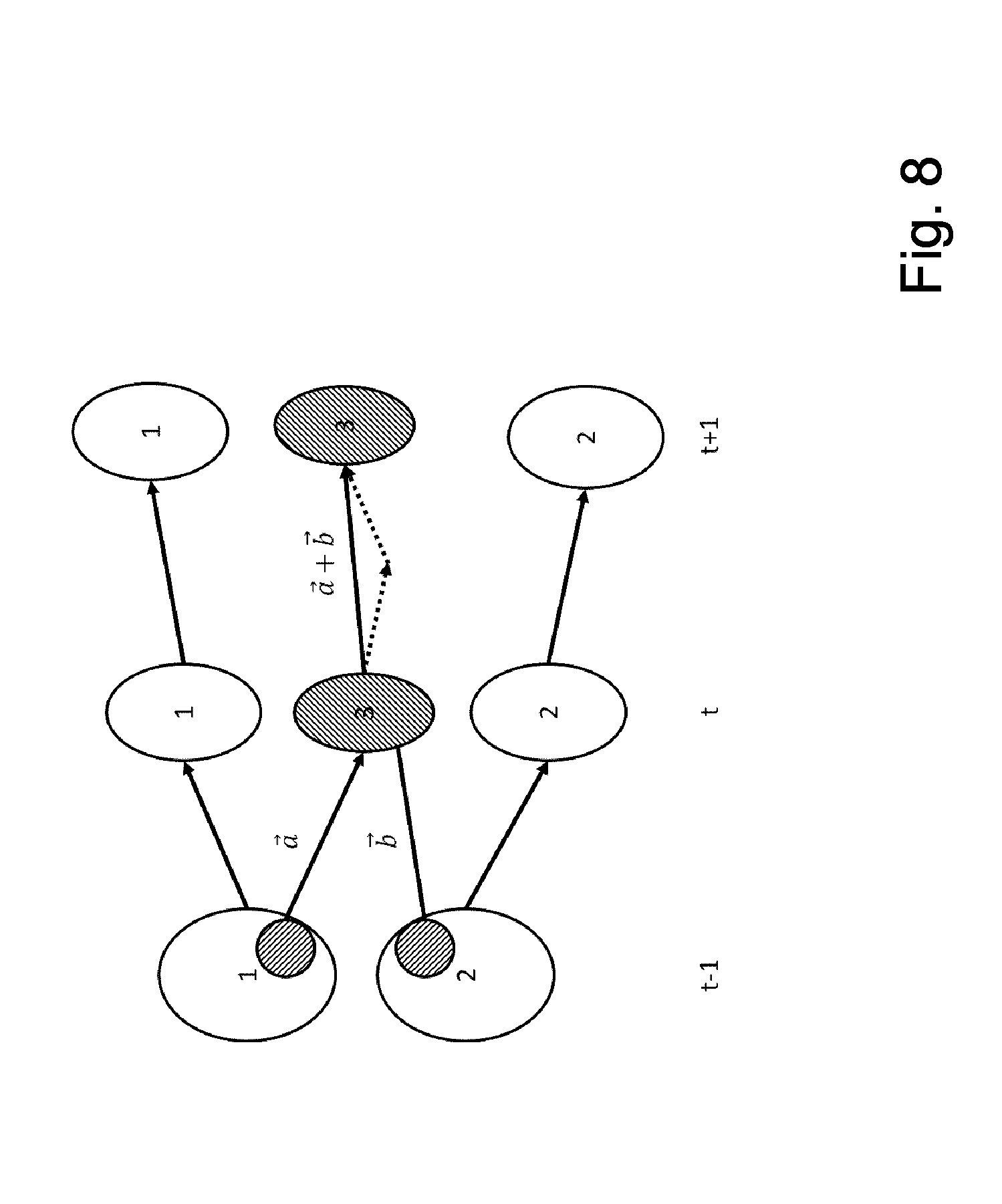

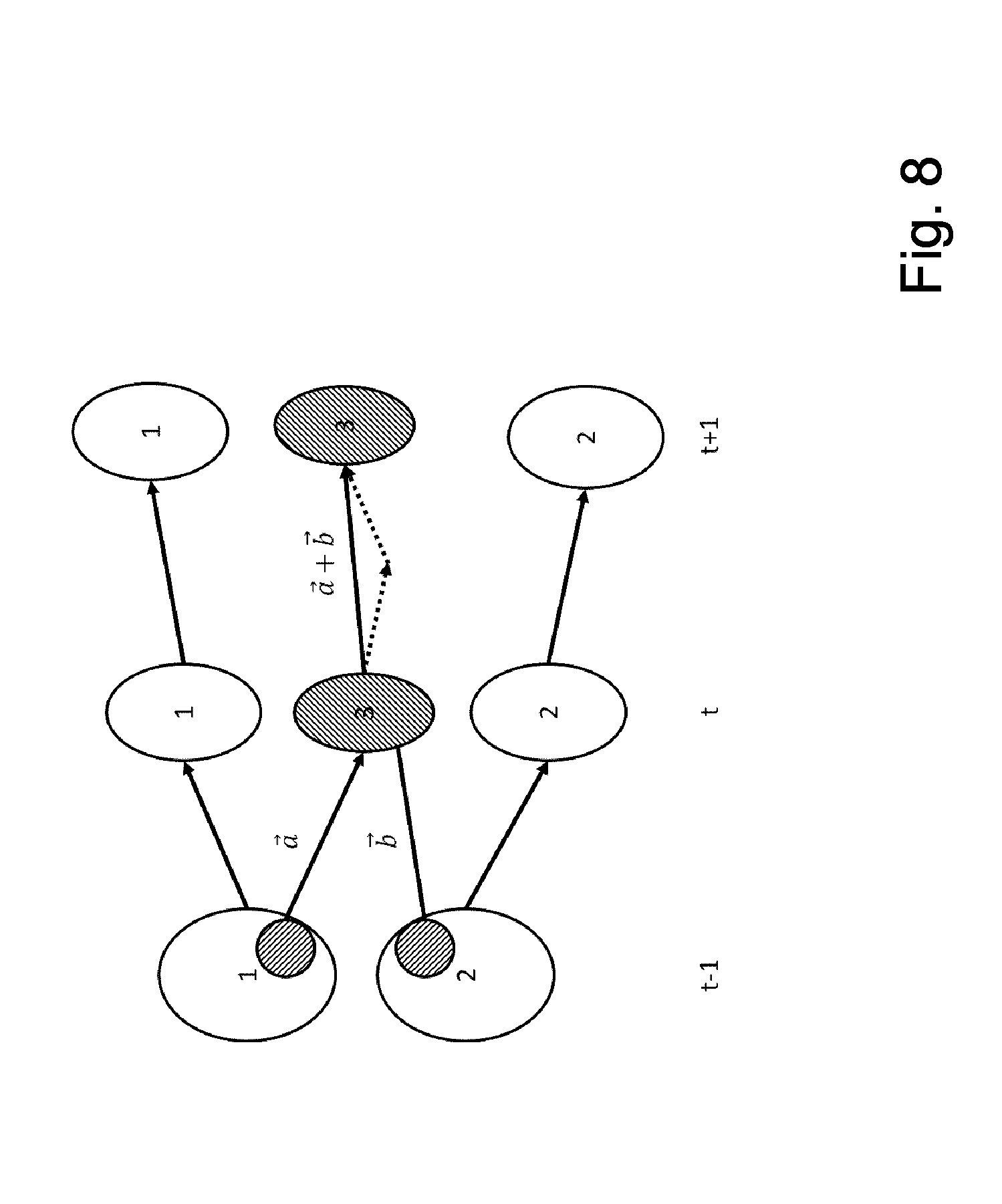

[0015] FIG. 8 shows part of a method according to a further embodiment of the present invention; and

[0016] FIG. 9 shows steps of a method according to a further embodiment of the present invention.

DETAILED DESCRIPTION

[0017] Although applicable in general to any kind of object, the present invention will be described with regard to vehicles such as autonomous robots.

[0018] Since object motion is naturally time-varying, identifying future object motion is difficult. The object motion changes over time according to either a linear or a nonlinear relationship.

[0019] FIG. 1 shows multiple variations of the object motion over time. Problems which arise are illustrated in FIG. 2. They may be categorized into two points:
[0020] 1. The speed of the object: whether the object is static or dynamic, and approximately how fast the object moves.
[0021] 2. The moving direction of the object: where the object will go.

[0022] Embodiments of the present invention enable a higher accuracy when predicting a motion of an object.

[0023] Embodiments of the present invention provide a smooth and reliable motion of an object.

[0024] In an embodiment, the present invention provides a method for predicting a motion of an object, where the motion of the object is predicted based on predicted future sensor readings, where the predicted future sensor readings are computed based on current measurement data of one or more range sensors.

[0025] In a further embodiment, the present invention provides a computing entity for predicting a motion of an object, including an input interface for receiving data of one or more range sensors, an output interface for outputting a predicted motion of the object, and computation means including a processor and a memory, adapted to predict the motion of the object based on predicted future sensor readings, where the predicted future sensor readings are computed based on current measurement data of the one or more range sensors.

[0026] In a further embodiment, the present invention provides a non-transitory computer readable medium storing a program causing a computer to execute a method for predicting a motion of an object, where the motion of the object is predicted based on predicted future sensor readings, where the predicted future sensor readings are computed based on current measurement data of one or more range sensors.

[0027] The term "object" is in particular in the claims, preferably in the specification, to be understood in its broadest sense and relates to any static or moving physical entity.

[0028] The term "motion" with regard to "object" is to be understood in its broadest sense and refers preferably in the claims, in particular in the specification, to the information related to the object associated with a state and/or movement of the object.

[0029] The term "obstacle" is to be understood in its broadest sense and refers, preferably in the claims and in particular in the specification, to any kind of object which might be able to cross the trajectory, path, or the like of another object and is detectable by sensors.

[0030] The terms "computing device" or "computing entity", etc. each refer, in particular in the claims and preferably in the specification, to a device adapted to perform computing, such as a personal computer, a tablet, a mobile phone, a server, a router, a switch, or the like. Such a device includes one or more processors having one or more cores and may be connectable to a memory for storing an application which is adapted to perform corresponding steps of one or more of the embodiments of the present invention. Any application may be software based and/or hardware based and installed in the memory on which the processor(s) can work. The computing devices or computing entities may be adapted in such a way that the corresponding steps to be computed are performed in an optimized way. For instance, different steps may be performed in parallel by a single processor on different cores. Further, the computing devices or computing entities may be identical, forming a single computing device.

[0031] The term "computer readable medium" may refer to any kind of medium which can be used together with a computation device or computer and on which information can be stored. The information may be any kind of data which can be read into a memory of a computer. For example, the information may include program code to be executed by the computer. Examples of a computer readable medium are tapes, CD-ROMs, DVD-ROMs, DVD-RAMs, DVD-RWs, Blu-ray discs, DAT, MiniDisk, solid state disks (SSD), floppy disks, SD cards, CF cards, memory sticks, USB sticks, EPROM, EEPROM, or the like.

[0032] Further features, advantages and further embodiments are described or may become apparent in the following:

[0033] The motion of the object may be predicted further based on historic measurement data of the one or more range sensors. This enables a more precise prediction of a motion of an object.

[0034] Point clustering may be performed on the current and historic measurement data of the one or more range sensors and on current object state data, based on the distance between two points, resulting in one or more clusters. Future clusters are then predicted, and the object is identified in a predicted cluster to predict the motion of the object. This enhances the precision of the prediction of the motion of an object.
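The distance-based clustering of [0034] can be illustrated with a minimal single-linkage sketch. Two points end up in the same cluster when they are connected by a chain of neighbouring points, where each neighbouring pair is closer than a threshold. The threshold `max_gap`, the 2-D point format and the function name are assumptions for illustration. The specification does not fix the linkage rule.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def cluster_by_distance(points: List[Point], max_gap: float) -> List[List[int]]:
    """Group point indices so that points chained by gaps < max_gap share a cluster.

    Single-linkage clustering implemented with union-find; max_gap is a
    hypothetical tuning parameter, not a value from the specification.
    """
    parent = list(range(len(points)))

    def find(i: int) -> int:
        # Find the root of i, halving the path on the way up.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(len(points)):
        for j in range(i + 1, len(points)):
            if math.dist(points[i], points[j]) < max_gap:
                parent[find(i)] = find(j)  # merge the two clusters

    clusters: Dict[int, List[int]] = {}
    for i in range(len(points)):
        clusters.setdefault(find(i), []).append(i)
    # Deterministic order: clusters sorted by their smallest point index.
    return sorted(clusters.values(), key=lambda c: c[0])


if __name__ == "__main__":
    scan = [(0.0, 0.0), (0.3, 0.1), (0.5, 0.2), (5.0, 5.0), (5.2, 5.1)]
    print(cluster_by_distance(scan, max_gap=0.5))  # [[0, 1, 2], [3, 4]]
```

Single linkage is used here only because it needs one distance parameter. k-means and DBSCAN, both named later in the description, are alternatives.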

[0035] At least two different prediction methods may be used to compute method-dependent predicted future sensor readings. This even further enhances robustness and precision of the object motion prediction since the outcome of different prediction methods can be combined to obtain a more precise and robust object motion prediction.

[0036] The method-dependent predicted future sensor readings of the at least two prediction methods may be weighted and combined to obtain the predicted sensor readings. This provides a flexible way to obtain a more precise combination of the predicted sensor readings.

[0037] The predicted sensor readings may be corrected during computing by using Gaussian Process Approximation. This enables future sensor measurements to be predicted from the corrected trend using Gaussian Process Approximation. Gaussian Process Approximation is disclosed, for example, in Snelson, E., and Ghahramani, Z., "Local and global sparse Gaussian process approximations", Gatsby Computational Neuroscience Unit, University College London, UK.
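To make the Gaussian Process step concrete, the following sketch predicts future readings of a single range beam from its recent history. It uses exact GP regression with a squared-exponential kernel, not the sparse approximations of the cited work. The kernel length scale, signal variance and noise level are hypothetical values. The returned variance is the kind of per-reading uncertainty that the description later converts into a point variance.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0, signal_var=1.0):
    """Squared-exponential covariance between two 1-D arrays of time stamps."""
    d = a[:, None] - b[None, :]
    return signal_var * np.exp(-0.5 * (d / length_scale) ** 2)


def gp_predict(t_hist, r_hist, t_new, length_scale=1.0, signal_var=1.0, noise_var=1e-2):
    """Exact GP regression of one beam's range readings over time.

    Returns the posterior mean and variance of the range at the times t_new.
    The history is centred on its mean so that a zero-mean GP prior is reasonable.
    """
    t_hist = np.asarray(t_hist, dtype=float)
    r_hist = np.asarray(r_hist, dtype=float)
    t_new = np.asarray(t_new, dtype=float)
    mu = r_hist.mean()
    K = rbf_kernel(t_hist, t_hist, length_scale, signal_var) + noise_var * np.eye(len(t_hist))
    K_s = rbf_kernel(t_hist, t_new, length_scale, signal_var)
    L = np.linalg.cholesky(K)                              # K = L @ L.T
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, r_hist - mu))
    mean = mu + K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = signal_var - np.sum(v ** 2, axis=0)              # diagonal of posterior covariance
    return mean, var
```

In use, `t_hist` would hold the time stamps of the last few scans and `r_hist` the readings of one beam. Repeating the call for every beam yields a predicted range matrix. Far from the data, the prediction falls back to the historical mean with the full prior variance, which is one reason the description corrects the trend first.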

[0038] A future cluster may be predicted using a Kalman filter for the movement of the cluster. A Kalman filter provides linear quadratic estimation from a series of measurements observed over time that contain statistical noise and other inaccuracies. Its output provides estimates of unknown variables that tend to be more precise than those based on a single measurement. Kalman filtering is disclosed, for example, in Kalman, R. E., 1960, "A new approach to linear filtering and prediction problems", Journal of Basic Engineering 82:35, doi:10.1115/1.3662552.
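A constant-velocity Kalman filter is one common way to realize the cluster-movement prediction of [0038]. The sketch below tracks the centroid of one cluster in 2-D and extrapolates it a few steps ahead. The following are all assumptions rather than values from the specification:
- the constant-velocity motion model;
- the white-noise-acceleration process noise;
- the time step `dt`;
- the noise levels `q` and `r`.

```python
import numpy as np


class CentroidKalman:
    """Constant-velocity Kalman filter for one cluster centroid; state = [x, y, vx, vy]."""

    def __init__(self, xy, dt=0.1, q=1.0, r=0.01):
        self.x = np.array([xy[0], xy[1], 0.0, 0.0], dtype=float)
        self.P = np.eye(4)
        self.F = np.array([[1, 0, dt, 0],
                           [0, 1, 0, dt],
                           [0, 0, 1, 0],
                           [0, 0, 0, 1]], dtype=float)
        self.H = np.array([[1, 0, 0, 0],
                           [0, 1, 0, 0]], dtype=float)
        # Discrete white-noise acceleration model with acceleration variance q.
        G = np.array([[0.5 * dt ** 2, 0], [0, 0.5 * dt ** 2], [dt, 0], [0, dt]])
        self.Q = q * G @ G.T
        self.R = r * np.eye(2)  # noise on the observed centroid position

    def predict(self):
        """Propagate state and covariance one time step; return predicted centroid."""
        self.x = self.F @ self.x
        self.P = self.F @ self.P @ self.F.T + self.Q
        return self.x[:2].copy()

    def update(self, z):
        """Correct the state with a measured centroid z = (x, y)."""
        innovation = np.asarray(z, dtype=float) - self.H @ self.x
        S = self.H @ self.P @ self.H.T + self.R
        K = self.P @ self.H.T @ np.linalg.inv(S)
        self.x = self.x + K @ innovation
        self.P = (np.eye(4) - K @ self.H) @ self.P

    def forecast(self, steps):
        """Extrapolate the centroid `steps` ahead without changing the filter state."""
        x = self.x.copy()
        out = []
        for _ in range(steps):
            x = self.F @ x
            out.append(x[:2].copy())
        return np.array(out)
```

With `dt = 0.1`, a forecast of 50 to 100 steps would correspond to the 5-10 s horizon mentioned for cluster prediction. The constant-velocity assumption degrades over such horizons, which is one reason the description combines this with a second prediction path.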

[0039] Historical data of one or more range sensors may be used for computing the predicted sensor readings. This further enhances the precision of the object motion prediction, since the historical data can be used to train the object motion prediction either online or offline.

[0040] Motion feedback of the object may be included when computing the predicted motion of the object. This allows the prediction of the motion of an object to be refined by taking into account the motion feedback of the object itself.

[0041] The range sensors may be provided in the form of Light Detection and Ranging (LIDAR) sensors. LIDAR sensors enable optical range and velocity measurements, for example by using laser light.

[0042] There are several ways to design and further develop the teaching of the present invention in an advantageous way. To this end, reference is made on the one hand to the claims subordinate to the independent claims and on the other hand to the following explanation of further embodiments of the invention by way of example, illustrated by the figures. In connection with the explanation of these further embodiments with the aid of the figures, further embodiments and further developments of the teaching will generally be explained.

[0043] FIG. 1 shows a time variant object motion.

[0044] In FIG. 1, a time-variant object motion is shown beginning at the object location at the present time t=0. The object then moves in the x-y plane over time t.

[0045] FIG. 2 shows a method for determining object motion and steering.

[0046] In FIG. 2, parameters describing the movement of an object such as a vehicle, e.g. a car, are shown. For example, the object speed can be either static or dynamic, i.e. the vehicle either does not move or moves. If the vehicle moves, i.e. is "dynamic", the object speed may be categorized as "slow" or "fast". "Slow" means a velocity below a certain threshold. "Fast" means a velocity above that threshold, up to the maximum possible speed of the object. In addition to the object speed, the moving direction can be "forward" or "backward". The steering of the object can be "left" or "right" in both moving directions, i.e. "forward" or "backward".
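The categories of FIG. 2 can be expressed as a small classification routine. This sketch makes the following assumptions:
- a signed longitudinal speed, negative when reversing;
- a steering angle that is positive for left;
- hypothetical thresholds `static_eps`, `slow_limit` and `steer_eps`.

The specification names the categories but not the thresholds.

```python
from dataclasses import dataclass


@dataclass
class MotionCategory:
    speed: str      # "static", "slow" or "fast"
    direction: str  # "forward", "backward" or "none" when static
    steering: str   # "left", "right" or "straight"


def categorize(speed_mps: float, steering_angle_rad: float,
               static_eps: float = 0.1, slow_limit: float = 5.0,
               steer_eps: float = 0.05) -> MotionCategory:
    """Map a signed speed (negative = reversing) and a steering angle
    (positive = left) onto FIG. 2 style categories. Thresholds are hypothetical."""
    if steering_angle_rad > steer_eps:
        steering = "left"
    elif steering_angle_rad < -steer_eps:
        steering = "right"
    else:
        steering = "straight"
    magnitude = abs(speed_mps)
    if magnitude < static_eps:
        return MotionCategory("static", "none", steering)
    speed = "slow" if magnitude < slow_limit else "fast"
    direction = "forward" if speed_mps > 0 else "backward"
    return MotionCategory(speed, direction, steering)
```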

[0047] FIG. 3 shows a system according to an embodiment of the present invention.

[0048] In FIG. 3, a system architecture for an object motion prediction application is shown. Here, the sensor measurement is predicted, i.e. what the sensor will read at the next time step, instead of conventionally predicting the outcome of the object movement. The system architecture of the object motion prediction can be illustrated with the closed-loop block diagram in FIG. 3.

[0049] It includes six main blocks:
- Block (1) includes the mission goal (reference value), i.e. where the object shall go.
- Block (2) provides map data, e.g. a digital map, from a database in the form of road geometry and landmark environment.
- Block (3) receives the input from a feedback measurement Block (6), predicts and corrects the future object motion, and outputs it to the motion planning in Block (4).
- Block (5) provides trajectory control for the object: it receives the time-dependent trajectory from Block (4) and generates the actual vehicle control parameters, steering angle and speed.
- Block (7) provides the updated future trajectory of the vehicle, based on the planned trajectory and the current vehicle state, to the object motion Block (3).
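The closed loop of FIG. 3 can be outlined as one control cycle that wires the blocks together. All names, signatures and stand-in behaviours below are hypothetical placeholders for the blocks. Only the data flow between Blocks (1) to (7) follows the description. In particular, Block (7) is simplified so that the planned trajectory is passed back unchanged.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

XY = Tuple[float, float]


@dataclass
class LoopBlocks:
    """Hypothetical callables standing in for the blocks of FIG. 3."""
    measure: Callable[[], List[XY]]                            # Block (6): feedback measurement
    predict_objects: Callable[[List[XY], List[XY]], List[XY]]  # Block (3): object motion
    plan: Callable[[XY, dict, List[XY]], List[XY]]             # Block (4): motion planning
    control: Callable[[List[XY]], Tuple[float, float]]         # Block (5): (steering angle, speed)


def control_cycle(blocks: LoopBlocks, goal: XY, map_data: dict,
                  future_trajectory: List[XY]):
    """One pass around the loop; returns the control command and the new trajectory."""
    scan = blocks.measure()
    # Block (3) uses sensor feedback plus the vehicle's updated future trajectory (Block (7)).
    predicted = blocks.predict_objects(scan, future_trajectory)
    # Block (4) plans toward the mission goal (Block (1)) using map data (Block (2)).
    trajectory = blocks.plan(goal, map_data, predicted)
    steering_angle, speed = blocks.control(trajectory)
    # Simplified Block (7): the planned trajectory is fed back in the next cycle.
    return (steering_angle, speed), trajectory
```

A caller would invoke `control_cycle` once per sensor frame and pass the returned trajectory back in as `future_trajectory`.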

[0050] FIG. 4 shows part of the system according to a further embodiment of the present invention.

[0051] In FIG. 4, the object motion is shown in the form of a block diagram. Block (3) includes at least three prediction processes:
[0052] 1. Process 1: Range matrix prediction. This is the sensor measurement prediction process. It takes input data from the range sensor (e.g. a LIDAR sensor) at the current measurement time (t) and predicts the future sensor reading. The object movement is then computed from the predicted sensor readings.
[0053] 2. Process 2: Point prediction. This is the object location prediction process, which uses a clustering algorithm. It takes input data from a range sensor (LIDAR) at the current measurement time (t). Points are clustered into clouds to find the point representing the object, and the new cluster at the future time is predicted based on current and historical clusters. The object point of this new cluster is then found.
[0054] 3. Process 3: Finalizing object motion prediction. This process merges the two point predictions of processes 1 and 2: it integrates their predictions and assigns a new position to each point together with an associated standard deviation derived from processes 1 and 2. This block 3 also receives the current and future position of the vehicle; this information is used to correct, e.g., obstacle positions.

[0055] FIG. 5 shows part of a method according to an embodiment of the present invention.

[0056] FIG. 5 describes Process 1, i.e. the range matrix prediction, in more detail.

[0057] Range prediction is performed by predicting the future range sensor measurement, e.g. from a LIDAR sensor. The prediction includes the following steps:
[0058] Training the prediction system with the historical measurement data, depicted with reference sign 6. The historic and current sensor measurements are accumulated and transmitted to a training system, which computes a new configuration of a predicting entity from this information. The configuration can be updated periodically, continuously, or under specific conditions, for example when computational resources are available.
[0059] Using the historical data to predict the trend of the current sensor reading.
[0060] Determining the prediction error by comparing the current sensor measurement with the predicted value.
[0061] Correcting the predicted trend. Each reading has a variance that is converted into a point variance.
[0062] Predicting the future sensor measurement based on the corrected trend with Gaussian Process Approximation, depicted with reference sign 3.
[0063] Transforming the predicted range measurement into an actual point location.
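Steps [0059] to [0061] and [0063] can be illustrated for a single scan line. The sketch makes three simplifications:
- The trend is a per-beam linear fit of the historical ranges. The specification uses Gaussian Process Approximation for the final prediction, so this linear trend is a simplified stand-in.
- The correction shifts the extrapolated trend by the current prediction error.
- The beam angles are assumed known.

The function names are hypothetical.

```python
import numpy as np


def predict_next_scan(history, current):
    """history: (T, B) past ranges, oldest first; current: (B,) ranges at time t.

    Returns the corrected one-step-ahead range prediction and the prediction error.
    """
    history = np.asarray(history, dtype=float)
    current = np.asarray(current, dtype=float)
    steps = np.arange(history.shape[0], dtype=float)
    # Step [0059]: per-beam linear trend; np.polyfit fits every column at once.
    slope, intercept = np.polyfit(steps, history, deg=1)
    trend_now = intercept + slope * history.shape[0]          # trend value at time t
    error = current - trend_now                               # step [0060]
    trend_next = intercept + slope * (history.shape[0] + 1)
    corrected_next = trend_next + error                       # step [0061]: shift by error
    return corrected_next, error


def ranges_to_points(ranges, angles):
    """Step [0063]: polar range readings to Cartesian points in the sensor frame."""
    ranges = np.asarray(ranges, dtype=float)
    angles = np.asarray(angles, dtype=float)
    return np.column_stack((ranges * np.cos(angles), ranges * np.sin(angles)))
```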

[0064] Actual measured data from the sensor (reference sign 1) and historical data are combined to predict the trend of the current sensor reading. Prediction errors are determined and the predicted trend is corrected. After the current and historic measurement data have been scaled (reference sign 6), the corrected trend is used with Gaussian Process Approximation (reference sign 3) to predict the future sensor measurement. After rescaling (reference sign 4), this yields the range prediction (reference sign 5).

[0065] In FIG. 5, the information for the future instant is mapped based on Gaussian Process Approximation. To improve performance, a parallel stage that uses a spatial ARIMA predictor may be added, and the two predictions based on different methods are then integrated. Other methods that can additionally or alternatively be used as a prediction component are, e.g., random forests or bootstrapped neural networks. The final prediction is computed, e.g., as a linear combination of the methods, where each weight may be, e.g., inversely proportional to that method's prediction error. Some of the prediction methods can be trained offline to reduce the computational requirement of combining multiple predictions. Such an ensemble prediction based on a plurality of prediction methods is shown schematically in FIG. 6.
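A linear combination with weights inversely proportional to the prediction error can be written in a few lines. The sketch assumes that each predictor (for example a Gaussian Process, spatial ARIMA or random forest model) has already produced a range prediction. It also assumes that a recent error measure is available for each predictor, here a hypothetical mean absolute error. The small `eps` guards against division by zero.

```python
import numpy as np


def combine_predictions(predictions, errors, eps=1e-9):
    """Weighted ensemble of per-method range predictions.

    predictions: (M, B) array, one row per prediction method.
    errors: (M,) recent prediction error of each method (e.g. mean absolute error).
    Weights are proportional to 1 / error and normalised to sum to 1.
    """
    predictions = np.asarray(predictions, dtype=float)
    inv = 1.0 / (np.asarray(errors, dtype=float) + eps)
    weights = inv / inv.sum()
    return weights @ predictions, weights
```

For two methods with errors 1 and 3, the weights become 0.75 and 0.25, so the more accurate method dominates without the other being discarded.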

[0066] FIG. 7 shows part of a method according to a further embodiment of the present invention.

[0067] In FIG. 7, Process 2 of FIG. 4, i.e. the point prediction based on clustering, is described.

[0068] Because the measurements are raw, the data from the sensors, from trajectory tracking and from the object motion detection comprise a plurality of data points. The process predicts the future object motion by using a data point clustering method and finding the point representing the object in the future cluster.

[0069] Predicting the future object motion is performed by the following steps:
[0070] Transforming (reference sign 3) all measurement data given in vehicle coordinates, i.e. range data from range sensor measurements (reference sign 2) and vehicle state data (reference sign 1), into global coordinates. In other words, the sensor measurement at the current time is expressed relative to the vehicle coordinate frame. To use this sensor information, all sensor measurement data therefore has to be transformed into global coordinates to obtain a common reference. This may be done by a general conventional technique.
[0071] Clustering (reference sign 4) the points of the current measurement, taking into consideration the distance between the points. In other words, after the sensor measurements have been expressed in global coordinates, the resulting point cloud is interpreted as objects by clustering the points into groups. For this, a conventional clustering technique, e.g. k-means or DBSCAN (density based), may be used. Alternatively, the method according to an embodiment of the present invention may be used, which is based on a statistical model (density, mean and variance) and shown in FIG. 8.
[0072] Matching clusters at successive times.
[0073] Predicting the future cluster (reference sign 5) based on the current cluster and historical clusters (reference sign 7). In other words, a cluster movement is predicted in advance, e.g. 5-10 s ahead of the current cluster, using the historical cluster data from previous times.
[0074] Processing the cluster movement, e.g. using a Kalman filter for object tracking, optical flow, or, according to an embodiment of the present invention, machine learning such as an evolutionary algorithm.
[0075] Predicting the future representing points of the objects (reference sign 6). The point movement (represented by the shift vector) is derived from the motion of the cluster to which the point belongs. Each point may be assigned a new position and a variance. In other words, the last step is to predict a point that can represent an object, i.e. the center of gravity of the cluster is assumed to be the center of gravity, `CG`, of the object. (This can be done by a conventional CG calculation method.)
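The coordinate transformation of step [0070] and the center-of-gravity computation of step [0075] are both standard operations. A minimal 2-D sketch of both follows. It assumes that the vehicle state is a pose (x, y, heading) in the global frame. It also assumes that each cluster's representative point is the unweighted mean of its points. The function names are hypothetical.

```python
import numpy as np


def vehicle_to_global(points_vehicle, pose):
    """Rotate points by the vehicle heading, then translate by its position (2-D rigid transform)."""
    x, y, heading = pose
    c, s = np.cos(heading), np.sin(heading)
    rotation = np.array([[c, -s], [s, c]])
    return np.asarray(points_vehicle, dtype=float) @ rotation.T + np.array([x, y])


def cluster_centroid(points):
    """Center of gravity of a cluster, taken here as the mean of its points."""
    return np.asarray(points, dtype=float).mean(axis=0)
```

For a vehicle at (10, 5) heading along the global y axis, a point 1 m straight ahead in vehicle coordinates maps to (10, 6) in global coordinates.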

[0076] To summarize, the cluster is predicted before a point is predicted, in contrast to a conventional algorithm or method that determines a point directly without a clustering process. A point cloud is always contaminated with noise from internal or external disturbances, e.g. caused by sensor sensitivity. A point cloud is also dynamic, i.e. it can split or merge over time. This means that predicting a point directly from a point cloud may result in high fluctuations when the point cloud splits or merges. Clustering, by contrast, provides stability and less fluctuation in point prediction, and thus a more accurate prediction of future object movement.

[0077] FIG. 8 shows part of a method according to a further embodiment of the present invention.

[0078] In FIG. 8 the principle of clustering prediction is shown at different times.

[0079] Since single-point motion prediction may not be reliable, group point prediction may be used, where the groups of points are obtained by clustering. Since groups can disappear, merge or split over successive instants of time, a transition matrix is used. The entries of the matrix represent the probability of transition of each cluster between successive instants of time. The transition probabilities are used to derive the future locations of the cluster centroids, as shown in FIG. 8.
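One way to read the transition-matrix idea is as a probability-weighted assignment of current clusters to future clusters. The sketch rests on the following assumptions:
- `transition[i, j]` is the probability that current cluster `i` becomes future cluster `j`.
- The current centroids have already been propagated forward, e.g. by a tracker.
- Each future centroid is the probability-weighted mean of the centroids that feed it.
- Future clusters that receive no probability mass are treated as disappeared.

How the matrix is estimated is left open, as in the description.

```python
import numpy as np


def future_centroids(centroids, transition):
    """centroids: (N, 2) propagated centroids of the current clusters.
    transition: (N, M), transition[i, j] = P(cluster i -> future cluster j).

    Returns (M, 2) future centroids (NaN for clusters with no incoming mass)
    and a boolean mask of the future clusters that receive probability mass.
    """
    centroids = np.asarray(centroids, dtype=float)
    transition = np.asarray(transition, dtype=float)
    mass = transition.sum(axis=0)                 # total probability flowing into each j
    alive = mass > 0
    out = np.full((transition.shape[1], 2), np.nan)
    out[alive] = (transition[:, alive].T @ centroids) / mass[alive][:, None]
    return out, alive
```

A merge shows up as several rows sending mass to the same column, and a split as one row spreading its mass over several columns.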

[0080] After processes 1 and 2 have been performed, Process 3, i.e. finalizing the object motion prediction, combines the two object prediction results obtained from the current and future LIDAR measurements.

[0081] The output of a single predicting entity may be transmitted to a combination system adapted to compare the results and decide the most likely object position, with its associated error, for each time instant in the future horizon.

[0082] The future object motion is finalized as follows:
[0083] The points from the two methods are matched according to the following rules:
[0084] If a point has not been matched, its prediction variance is set to the maximum, but its future position is still considered.
[0085] If a point is reproduced, i.e. matched in the two methods, its variance is proportional to the variances of the two predictions and to the distance between them.
[0086] The object location is corrected based on the corrected trajectory according to the matched points.
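The matching rules of [0083] to [0085] can be sketched as a greedy nearest-neighbour match between the two sets of predicted points. The following choices are assumptions made for illustration:
- the gating distance `gate`;
- the variance cap `max_var`;
- using the midpoint of the two positions for matched points;
- interpreting "proportional to the variance of the two predictions and the distance" as the mean of the two variances plus the squared distance.

```python
import numpy as np


def merge_predictions(pts_a, var_a, pts_b, var_b, gate=1.0, max_var=100.0):
    """Greedy nearest-neighbour matching of points predicted by two methods.

    Matched pairs (distance < gate) are fused; unmatched points keep their
    position but receive the maximum variance.
    Returns a list of (position, variance) tuples.
    """
    pts_a = np.asarray(pts_a, dtype=float)
    pts_b = np.asarray(pts_b, dtype=float)
    used_b = set()
    merged = []
    for i, p in enumerate(pts_a):
        best, best_d = None, gate
        for j, q in enumerate(pts_b):
            d = float(np.linalg.norm(p - q))
            if j not in used_b and d < best_d:
                best, best_d = j, d
        if best is None:
            merged.append((p, max_var))                  # not reproduced by method B
        else:
            used_b.add(best)
            fused_var = 0.5 * (var_a[i] + var_b[best]) + best_d ** 2
            merged.append(((p + pts_b[best]) / 2.0, min(fused_var, max_var)))
    for j, q in enumerate(pts_b):
        if j not in used_b:
            merged.append((q, max_var))                  # not reproduced by method A
    return merged
```

Greedy matching is the simplest option. An optimal assignment (e.g. the Hungarian algorithm) would avoid order-dependent matches when points are crowded.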

[0087] FIG. 9 shows steps of a method according to a further embodiment of the present invention.

[0088] FIG. 9 shows a method for computing the position of the object at a future instant of time, including the steps of:
[0089] 1) Predicting the sensor measurement using an approximation function;
[0090] 2) Predicting the object motion using a clustering-based method;
[0091] 3) Combining the two prediction results to obtain the future object motion. The updated trajectory from a driving module providing trajectory tracking is used to improve the accuracy of the prediction, and the output of the motion planning may be changed for autonomous and automated driving. The planned trajectory may be considered in the individual prediction methods, i.e. the method for sensor measurement prediction and the method for object motion prediction, and in a combination system, to compensate for the future motion of the object. The motion plan generated by a motion planner may be used by a vehicle maneuvering and trajectory control system to steer, accelerate and decelerate a vehicle.

[0092] In summary, the present invention provides or enables:
[0093] 1) An object motion prediction that uses range measurement prediction:
[0094] a. Training a function with historical LIDAR data for sensor measurement prediction.
[0095] b. Online prediction of the future LIDAR measurement based on function approximation.
[0096] c. Gaussian Process function approximation.
[0097] d. Combination (ensemble) of multiple predictors (prediction methods: spatial ARIMA, random forest, bootstrapped neural network).
[0098] 2) Combination of the results of prediction methods, including range and physical predictors, for higher accuracy and robust object motion prediction.

[0099] In summary, the present invention enables motion planning, in particular of autonomous robots, based on more precisely known future circumstances. Further, the present invention provides a continuous trajectory and a stable object motion prediction system in which steering, heading and speed can be changed smoothly, i.e. a robot will not hit an expected future object. Even further, the present invention provides increased robustness of prediction, reducing error and variance by combining range and point prediction.

[0100] Many modifications and other embodiments of the invention set forth herein will come to mind to one skilled in the art to which the invention pertains having the benefit of the teachings presented in the foregoing description and the associated drawings. Therefore, it is to be understood that the invention is not to be limited to the specific embodiments disclosed and that modifications and other embodiments are intended to be included within the scope of the appended claims. Although specific terms are employed herein, they are used in a generic and descriptive sense only and not for purposes of limitation.

[0101] While the invention has been illustrated and described in detail in the drawings and foregoing description, such illustration and description are to be considered illustrative or exemplary and not restrictive. It will be understood that changes and modifications may be made by those of ordinary skill within the scope of the following claims. In particular, the present invention covers further embodiments with any combination of features from different embodiments described above and below. Additionally, statements made herein characterizing the invention refer to an embodiment of the invention and not necessarily all embodiments.

[0102] The terms used in the claims should be construed to have the broadest reasonable interpretation consistent with the foregoing description. For example, the use of the article "a" or "the" in introducing an element should not be interpreted as being exclusive of a plurality of elements. Likewise, the recitation of "or" should be interpreted as being inclusive, such that the recitation of "A or B" is not exclusive of "A and B," unless it is clear from the context or the foregoing description that only one of A and B is intended. Further, the recitation of "at least one of A, B and C" should be interpreted as one or more of a group of elements consisting of A, B and C, and should not be interpreted as requiring at least one of each of the listed elements A, B and C, regardless of whether A, B and C are related as categories or otherwise. Moreover, the recitation of "A, B and/or C" or "at least one of A, B or C" should be interpreted as including any singular entity from the listed elements, e.g., A, any subset from the listed elements, e.g., A and B, or the entire list of elements A, B and C.

