Detection and prevention of inmate to inmate message relay

U.S. Patent No. 10,572,961 (Application No. 15/071,082) was granted by the patent office on February 25, 2020, for detection and prevention of inmate to inmate message relay. The patent is currently assigned to Global Tel*Link Corporation; the grantee listed for the patent is GLOBAL TEL*LINK CORP. The invention is credited to Stephen L. Hodge.

| United States Patent | 10,572,961 |

| Hodge | February 25, 2020 |

Abstract

A secure system and method for detecting and preventing inmate to inmate message relays. The system monitors inmate communications for similar phrases that occur in two or more separate inmate messages. These similar phrases may overlap in real time, as in a conference call, or may occur at separate times in separate messages. Communications that appear similar are assigned a score, and the score is compared to a threshold. If the score is above the threshold, the communication is flagged and remedial actions are taken. If the flagged communication contains illegal matter, the communication can be disconnected or restricted in the future.
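The score-and-threshold step described in the abstract can be illustrated with a minimal sketch. The abstract does not say how the similarity score is computed, so the Jaccard overlap of word trigrams used here is an assumption chosen for illustration, not the patented method. The function names (`shingles`, `similarity`, `flag_relays`) and the default threshold of 0.5 are likewise hypothetical.

```python
from itertools import combinations


def shingles(text, n=3):
    """Return the set of lowercase word n-grams found in text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def similarity(a, b, n=3):
    """Jaccard similarity of the word n-gram sets of two messages.

    This scoring rule is an illustrative assumption; the abstract only
    says that similar communications are assigned a score.
    """
    sa, sb = shingles(a, n), shingles(b, n)
    if not sa or not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)


def flag_relays(messages, threshold=0.5):
    """Return (id_a, id_b, score) for every message pair above threshold.

    messages maps a message id to its text; threshold is a hypothetical
    tuning value, not one taken from the patent.
    """
    flagged = []
    for (id_a, text_a), (id_b, text_b) in combinations(messages.items(), 2):
        score = similarity(text_a, text_b)
        if score > threshold:
            flagged.append((id_a, id_b, score))
    return flagged


if __name__ == "__main__":
    sample = {
        "m1": "tell mike the package arrives friday at noon",
        "m2": "tell mike the package arrives friday at noon ok",
        "m3": "happy birthday to grandma love you all",
    }
    for id_a, id_b, score in flag_relays(sample):
        print(f"{id_a} and {id_b} flagged with score {score:.2f}")
```

In this sketch a flagged pair would then be handed to whatever review or remedial step the facility uses. A real deployment would also need transcription of voice calls and handling of paraphrased content, which exact n-gram matching does not capture.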

| Inventors: | Hodge; Stephen L. (Aubry, TX) |
|---|---|
| Applicant: | |
| Assignee: | Global Tel*Link Corporation (Reston, VA) |
| Family ID: | 58410484 |
| Appl. No.: | 15/071,082 |
| Filed: | March 15, 2016 |

Prior Publication Data

| Document Identifier | Publication Date |
|---|---|
| US 20170270627 A1 | Sep 21, 2017 |

| Current U.S. Class: | 1/1 |

| Current CPC Class: | G06F 16/258 (20190101); H04M 3/38 (20130101); H04M 3/42221 (20130101); G06F 40/242 (20200101); H04L 51/046 (20130101); G10L 15/26 (20130101); G06F 16/3344 (20190101); G06Q 50/26 (20130101); G10L 25/51 (20130101); H04M 3/2281 (20130101); G10L 15/02 (20130101); H04L 43/16 (20130101); G06F 16/24578 (20190101); H04M 2201/18 (20130101); G10L 15/005 (20130101); H04M 2203/2061 (20130101); H04M 2201/40 (20130101); G10L 2015/088 (20130101) |

| Current International Class: | G06Q 50/26 (20120101); G10L 15/02 (20060101); G10L 15/26 (20060101); G10L 25/51 (20130101); H04L 12/26 (20060101); H04L 12/58 (20060101); G06F 16/25 (20190101); G06F 16/33 (20190101); G06F 16/2457 (20190101) |

PCT/US99/09493 by Brown et al., filed Apr. 29, 1999. cited by applicant . Prosecution History of U.S. Appl. No. 09/072,436, filed May 4, 1998. cited by applicant . Prosecution History of U.S. Appl. No. 11/005,816, filed Dec. 7, 2004. cited by applicant . Prosecution History of U.S. Appl. No. 11/045,589, filed Jan. 28, 2005. cited by applicant . Prosecution History of U.S. Appl. No. 11/182,625, filed Jul. 15, 2005. cited by applicant . Prosecution History of U.S. Appl. No. 11/479,990, filed Jun. 30, 2006. cited by applicant . Prosecution History of U.S. Appl. No. 11/480,258, filed Jun. 30, 2006. cited by applicant . Prosecution History of U.S. Appl. No. 11/609,397, filed Dec. 12, 2006. cited by applicant . Prosecution History of U.S. Appl. No. 12/002,507, filed Dec. 17, 2007. cited by applicant . Response to Office Action, filed Jan. 6, 2009, in Prosecution History of U.S. Appl. No. 10/642,532, filed Aug. 15, 2003. cited by applicant . Reynolds, D., "Automatic Speaker Recognition Using Gaussian Mixture Speaker Models," The Lincoln Laboratory Journal, vol. 8, No. 2, 1995; pp. 173-192. cited by applicant . Rosenberg, et al., "The Use of Cohort Normalized Scores for Speaker Verification," Speech Research Department, AT&T Bell Laboratories, 2nd International Conference on Spoken Language Processing, Oct. 12-16, 1992; 4 pages. cited by applicant . Ross, et al., "Multimodal Biometrics: An Overview," Proc. of 12th European Signal Processing Conference (EUSIPCO), Sep. 2004; pp. 1221-1224. cited by applicant . Science Dynamics, BubbleLINK Software Architecture, 2003; 10 pages. cited by applicant . Science Dynamics, Commander Call Control System, Rev. 1.04, 2002; 16 pages. cited by applicant . Science Dynamics, Inmate Telephone Control Systems, http://scidyn.com/fraudprev_main.htm (archived by web.archive.org on Jan. 12, 2001). cited by applicant . Science Dynamics, SciDyn BubbleLINK, http://www.scidyn.com/products/bubble.html (archived by web.archive.org on Jun. 18, 2006). 
cited by applicant . Science Dynamics, SciDyn Call Control Solutions: Commander II, http://www.scidyn.com/products/commander2.html (archived by web.archive.org on Jun. 18, 2006). cited by applicant . Science Dynamics, SciDyn IP Gateways, http://scidyn.com/products/ipgateways.html (archived by web.archive.org on Aug. 15, 2001). cited by applicant . Science Dynamics, Science Dynamics--IP Telephony, http://www.scidyn.com/iptelephony_main.htm (archived by web.archive.org on Oct. 12, 2000). cited by applicant . Shearme, et al., "An Experiment Concerning the Recognition of Voices," Language and Speech, vol. 2, No. 3, Jul./Sep. 1959; 10 pages. cited by applicant . Silberg, L., Digital on Call, HFN The Weekly Newspaper for the Home Furnishing Network, Mar. 17, 1997; 4 pages. cited by applicant . Silberschatz, et al., Operating System Concepts, Third Edition, Addison-Wesley: Reading, MA, Sep. 1991; 700 pages. cited by applicant . Simmons, R., "Why 2007 is Not Like 1984: A Broader Perspective on Technology's Effect on Privacy and Fourth Amendment Jurisprudence," J. Crim. L. & Criminology vol. 97, No. 2, Winter 2007; 39 pages. cited by applicant . Smith, M., "Corrections Turns Over a New LEAF: Correctional Agencies Receive Assistance From the Law Enforcement Analysis Facility," Corrections Today, Oct. 1, 2001; 4 pages. cited by applicant . Specification of U.S. Appl. No. 10/720,848, "Information Management and Movement System and Method," to Viola, et al., filed Nov. 24, 2003. cited by applicant . Specification of U.S. Appl. No. 11/045,589, "Digital Telecommunications Call Management and Monitoring System," to Hodge, filed Jan. 28, 2005; 64 pages. cited by applicant . State of North Carolina Department of Correction RFP #ITS-000938A, issued May 25, 2004; 8 pages. cited by applicant . Statement for the Record of John S. 
Pistole, Assistant Director, Counterterrorism Division, Federal Bureau of Investigation, Before the Senate Judiciary Committee, Subcommittee on Terrorism, Technology, and Homeland Security, Oct. 14, 2003. cited by applicant . Sundstrom, K., "Voice over IP: An Engineering Analysis," Master's Thesis, Department of Electrical and Computer Engineering, University of Manitoba, Sep. 1999; 140 pages. cited by applicant . Supplementary European Search Report for EP Application No. EP 04 80 9530, Munich, Germany, completed on Mar. 25, 2009. cited by applicant . Tanenbaum, A., Modern Operating Systems, Third Edition, Peason Prentice Hall: London, 2009; 552 pages. cited by applicant . Tirkel, A., et al.; "Image Watermarking--A Spread Spectrum Application," Sep. 22-25, 1996; 7 pages. cited by applicant . U.S. Appl. No. 60/607,447, "IP-based telephony system and method," to Apple, et al., filed Sep. 3, 2004. cited by applicant . USPTO Class Definition, Class 379 Telephonic Communications, available at http://www.uspto.gov/web/patents/classification/uspc379/defs379.htm. cited by applicant . Viswanathan, et al., "Multimedia Document Retrieval using Speech and Speaker Recognition," International Journal on Document Analysis and Recognition, Jun. 2000, vol. 2; pp. 1-24. cited by applicant . Walden, R., "Performance Trends for Analog-to-Digital Converters," IEEE Communications Magazine, Feb. 1999. cited by applicant . Weinstein, C., MIT, The Experimental Integrated Switched Network--A System-Level Network Test Facility, IEEE 1983; 8 pages. cited by applicant . Wilkinson, Reginald A., "Visiting in Prison," Prison and Jail Administration's Practices and Theory, 1999; 7 pages. cited by applicant . Rey, R.F., ed., "Engineering and Operations in the Bell System," 2nd Edition, AT&T Bell Laboratories: Murray Hill, NJ, 1983. cited by applicant . "Criminal Calls: A Review of the Bureau of Prisons' Management of Inmate Telephone Privileges," U.S. 
Department of Justice, Office of the Inspector General, Aug. 1999. cited by applicant . Rosenberg, et al., "SIP: Session Initial Protocol," Network Working Group, Standard Track, Jun. 2002; 269 pages. cited by applicant . File History of U.S. Pat. No. 9,094,500, U.S. Appl. No. 14/322,869, filed Jul. 2, 2014. cited by applicant . Knox, "The Problem of Gangs and Security Threat Groups (STG's) in American Prisons Today: Recent Research Findings From the 2004 Prison Gang Survey," National Gang Crime Research Center, 2005; 67 pages. cited by applicant . Winterdyk et al., "Managing Prison Gangs," Journal of Criminal Justice, vol. 38, 2010; pp. 730-736. cited by applicant . Excerpts from the Prosecution History of U.S. Pat. No. 7,899,167, U.S. Appl. No. 10/642,532, filed Aug. 15, 2003. cited by applicant . File History of U.S. Pat. No. 9,143,609, U.S. Appl. No. 13/949,980, filed Jul. 24, 2013. cited by applicant . Parties' Proposed Claim Constructions in Global Tel*Link Corporation v. Securus Technologies, Inc., No. 3:14-cv-00829-K (N.D. Tex.), filed Sep. 26, 2014; 17 pages. cited by applicant . International Search Report and Written Opinion directed to International Application No. PCT/US2017/026570, dated May 8, 2017;7 pages. cited by applicant . Securus Technologies, Inc. v. Global Tel*Link Corporation, Case No. IPR2016-01333, Petition for Inter Partes Review filed Jun. 30, 2016. cited by applicant . Securus Technologies, Inc. v. Global Tel*Link Corporation, Case No. IPR2016-0096, Petition for Inter Partes Review filed May 4, 2016. cited by applicant. |

Primary Examiner: Parry; Chris

Assistant Examiner: Abderrahmen; Chouat

Attorney, Agent or Firm: Sterne, Kessler, Goldstein & Fox P.L.L.C.

Claims

What is claimed is:

1. A communication monitoring method, comprising: receiving a communication involving an inmate of a controlled-environment facility, the received communication including audio data of a recorded conversation and metadata; extracting the metadata from the received communication, the metadata describing non-content information about the received communication and/or the conversation and including at least one timestamp; comparing the extracted metadata to metadata of a plurality of previously-stored communications; identifying a previously-stored second communication, from among the plurality of previously-stored communications that has metadata that is similar to the extracted metadata to within a predetermined degree; retrieving the previously-stored second communication; correlating spoken content of the received communication with spoken content of the previously-stored second communication, the correlating including: first comparing spoken content included in the audio data of the received communication to spoken content of the previously-stored second communication; second comparing non-spoken content included in the audio data of the received communication to non-spoken content of the previously-stored second communication; identifying a plurality of shared words and/or phrases that were spoken in both the received communication and the previously-stored second communication; for each of the plurality of shared words and/or phrases, determining a relevancy score indicative of a uniqueness and/or importance of the shared word and/or phrase; and determining a frequency of each of the shared words and/or phrases that appear in both the received communication and the previously-stored second communication, each frequency indicating a number of times the corresponding shared word and/or phrase appears in both the received communication and the previously-stored second communication; assigning a score to the received communication based on the correlation, the 
frequencies, and the relevancy scores of the shared words and/or phrases; comparing the score with a predetermined threshold; and determining the received communication to be an inmate-to-inmate communication based on the score comparison.

2. The communication monitoring method of claim 1, wherein the received communication is in an audio format, the communication monitoring method further comprising: storing the received communication in the audio format; and transcribing the received communication into a text format, wherein the text format reflects contents of the received communication.

3. The communication monitoring method of claim 1, further comprising: determining that the received communication contains non-standard language; and converting non-standard language portions of the received communication into a standard language, the converting including: accessing a language dictionary to identify the non-standard language; and translating the non-standard language into the standard language using the language dictionary and a plurality of conversion protocols.

4. The communication monitoring method of claim 1, further comprising: determining that the received communication is a real-time communication; detecting keywords and/or key phrases within the received communication in real-time; and storing a recording of the received communication for performing the correlating at a later time.

5. The communication monitoring method of claim 1, further comprising: determining that the received communication is not a real-time communication; identifying a stored second communication having overlapping metadata with the communication in response to the determining; and correlating contents of the received communication with contents of the identified stored second communication.

6. The communication monitoring method of claim 4, further comprising, in response to the determining, taking a remedial action, wherein the remedial action includes alerting a supervising personnel and disconnecting the communication.

7. The communication monitoring method of claim 5, further comprising, in response to the determining, taking a remedial action, wherein the remedial action includes withholding the received communication from being forwarded to an intended recipient until after the correlating.

8. A communication monitoring method, comprising: receiving a first communication that involves an inmate of a controlled-environment facility, the first communication including audio data of a recorded conversation and metadata; extracting the metadata from the first communication, the metadata describing non-content information about the first communication and/or the recorded conversation including at least one timestamp; identifying keywords and/or keyphrases in the first communication; comparing the extracted metadata to metadata of a plurality of previously-stored communications; identifying a previously-stored second communication, from among the plurality of previously-stored communications, that has metadata that is similar to the extracted metadata to within a predetermined degree; searching the previously-stored second communication for instances of the keywords and/or keyphrases identified in the first communication to determine a plurality of shared words and/or phrases; determining, for each of the plurality of shared words and/or phrases, a relevancy score indicative of a uniqueness and/or importance of the corresponding shared word or phrase; determining a frequency of appearance of each of the plurality of shared words and/or phrases that appear in both the first communication and the previously-stored second communication, each frequency indicating a number of times the corresponding shared word or phrase appears in both the first communication and the previously-stored second communication; scoring the first communication based on the frequencies of appearance and the relevancy scores of the shared words and/or phrases; comparing the score with a predetermined threshold; and identifying the first communication as an inmate-to-inmate communication based on the score comparing.

9. The communication monitoring method of claim 8, further comprising: determining that the first communication is a real-time communication; forwarding the first communication to an intended recipient prior to completing the comparing; and storing a copy of the first communication, wherein the comparing is performed using the stored copy of the first communication.

10. The communication monitoring method of claim 9, further comprising correlating contents of the first communication with contents of the previously-stored second communication, wherein the scoring of the first communication is based on the correlation.

11. The communication monitoring method of claim 8, further comprising: determining that the first communication is not in real time; correlating contents of the first communication with contents of the previously-scored second communication in response to the determination before forwarding the first communication to an intended recipient; and scoring the first communication based on the correlation.

12. A communication monitoring system, the system comprising: a database; a transceiver, configured to receive a communication involving an inmate of a controlled-environment facility, the communication including audio data of a recorded conversation and metadata; a processing subsystem configured to extract the metadata from the communication, the metadata describing non-content information about the communication and/or the conversation and including at least one timestamp; and a detection and prevention analysis engine configured to: compare the extracted metadata to metadata of a plurality of stored communications; identify a stored communication from among the plurality of stored communications that has metadata that is similar to the extracted metadata to within a predetermined degree; correlate spoken content of the communication with spoken content of the stored communication, the correlating including: first comparing the spoken content included in the audio data of the communication to spoken content of the stored communication; second comparing non-spoken content included in the audio data of the communication to non-spoken content included in the stored communication; identifying a plurality of shared words and/or phrases that were spoken in both the communication and the stored communication; for each of the plurality of shared words and/or phrases, determine a relevancy score indicative of a uniqueness and/or importance of the shared word and/or phrase; and determining a frequency of each of the shared words and/or phrases that appear in both the communication and the stored communication, each frequency indicating a number of times the corresponding shared word and/or phrase appears in both the communication and the second communication; assign a score to the communication based on the correlation, the frequencies, and the relevancy scores of the shared words and/or phrases; compare the score with a predetermined threshold; and determine that the 
communication is an inmate-to-inmate communication based on the score comparison.

13. The communication monitoring system of claim 12, further comprising a communication storage configured to store the communication in an appropriate format depending on a type of the communication.

14. The communication monitoring system of claim 12, further comprising a transcriber configured to transcribe the communication into a text format, wherein the text format reflects contents of the communication.

15. The communication monitoring system of claim 12, further comprising a language converter configured to: determine that the communication contains non-standard language; and convert the communication into a standard language, wherein to convert the communication, the language converter is configured to: access a language dictionary to identify the non-standard language; and translate nonstandard language portions of the communication into the standard language using the language dictionary and a plurality of conversion protocols.

16. The communication monitoring system of claim 12, wherein the detection and prevention analysis engine is further configured to: determine that the communication is in real time; and forward the communication to an intended recipient prior to performing the correlating.

17. The communication monitoring system of claim 12, wherein the detection and prevention analysis engine is further configured to: determine that the communication is not in real time; and perform the correlating prior to forwarding the communication to an intended recipient.

18. The communication monitoring system of claim 16, wherein the detection and prevention analysis engine is further configured to take remedial action in response to the determining that the communication is an inmate-to-inmate communication, wherein the remedial action includes alerting a supervising personnel and disconnecting the communication.

19. The communication monitoring system of claim 17, wherein the detection and prevention analysis engine is further configured to take remedial action in response to the determining that the communication is an inmate-to-inmate communication, wherein the remedial action includes withholding the communication and preventing future communication containing the same metadata.

Description

BACKGROUND

Field

The disclosure relates to a system and method of detecting and preventing illegal inmate-to-inmate communications in a controlled environment facility.

Related Art

In current prison facilities, inmates are generally permitted to communicate, using the prison's communication system, with a wide variety of individuals both inside and outside of the prison facility. As such, inmates of a particular facility may be capable of communicating with other inmates, either in their own facility, or in other facilities. Even if direct contact is prohibited, such communication can still occur via an intermediary.

There are monitoring systems and methods currently implemented in controlled environment facilities. However, the ability of inmates to communicate with other inmates presents a number of challenges that are unique to a controlled environment facility and that are not sufficiently addressed by current monitoring and control systems.

BRIEF DESCRIPTION OF THE DRAWINGS/FIGURES

Embodiments are described with reference to the accompanying drawings. In the drawings, like reference numbers indicate identical or functionally similar elements. Additionally, the left most digit(s) of a reference number identifies the drawing in which the reference number first appears.

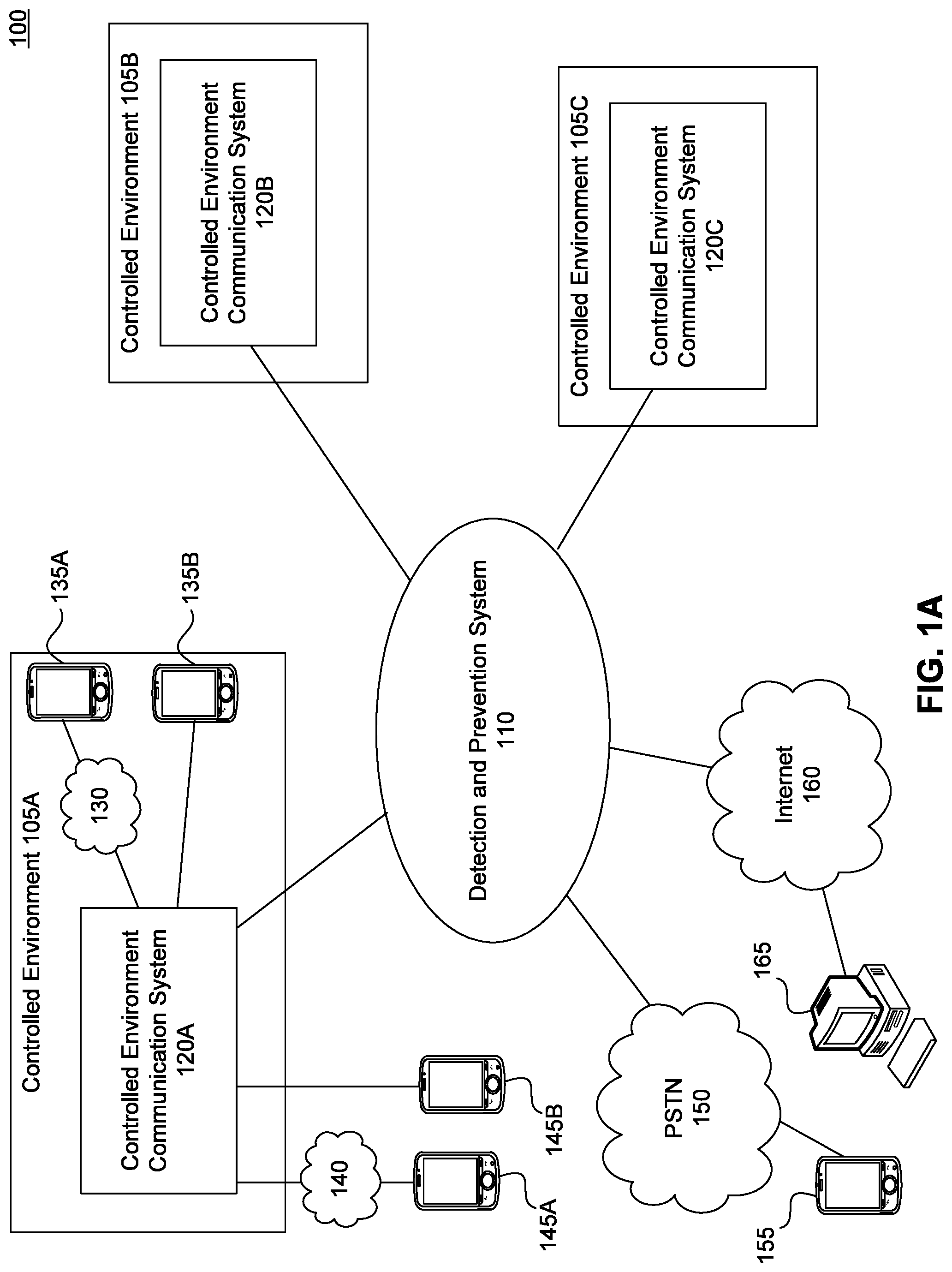

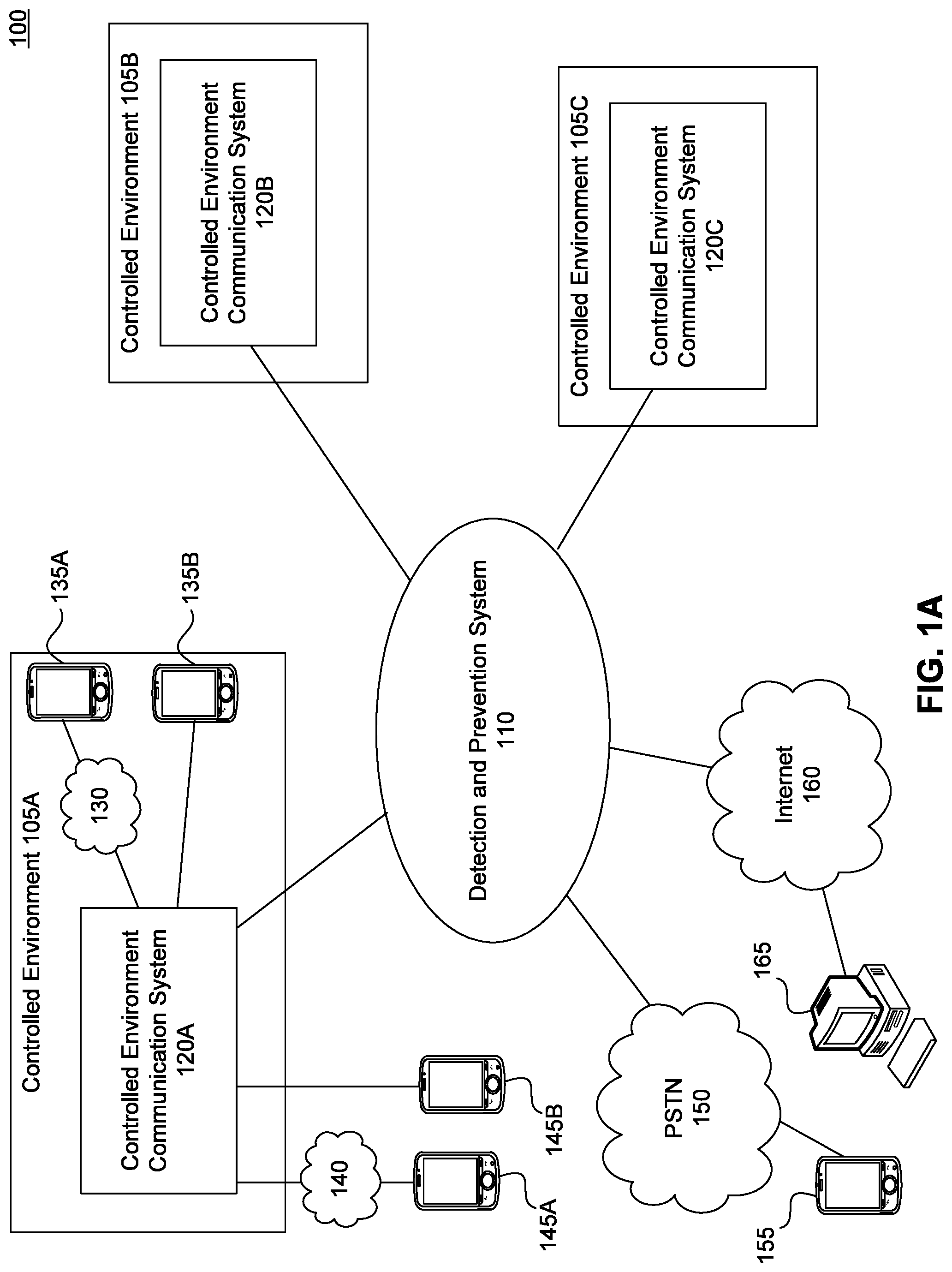

FIG. 1A illustrates a centralized detection and prevention system connected to several controlled environment facilities, according to an embodiment of the invention;

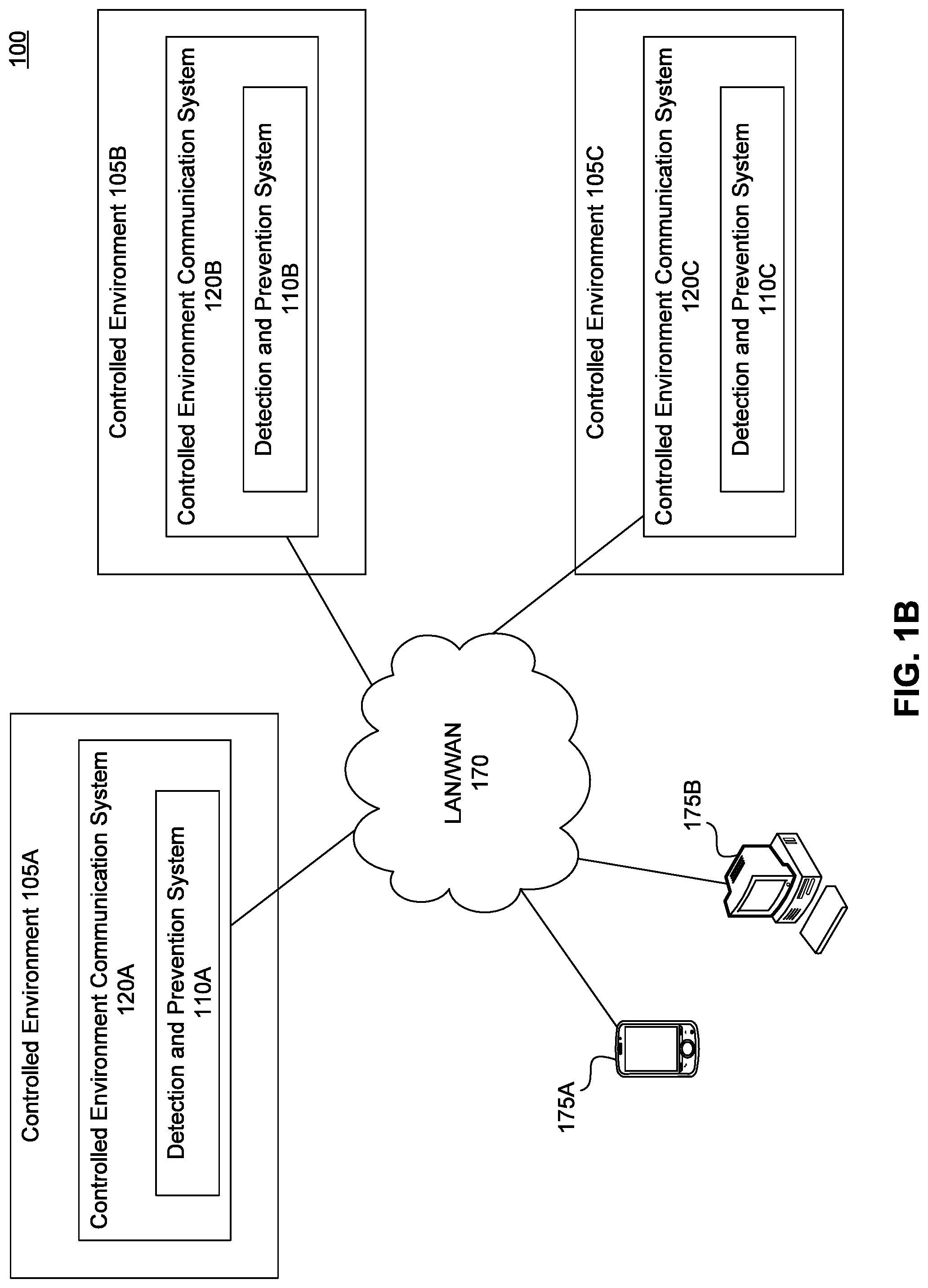

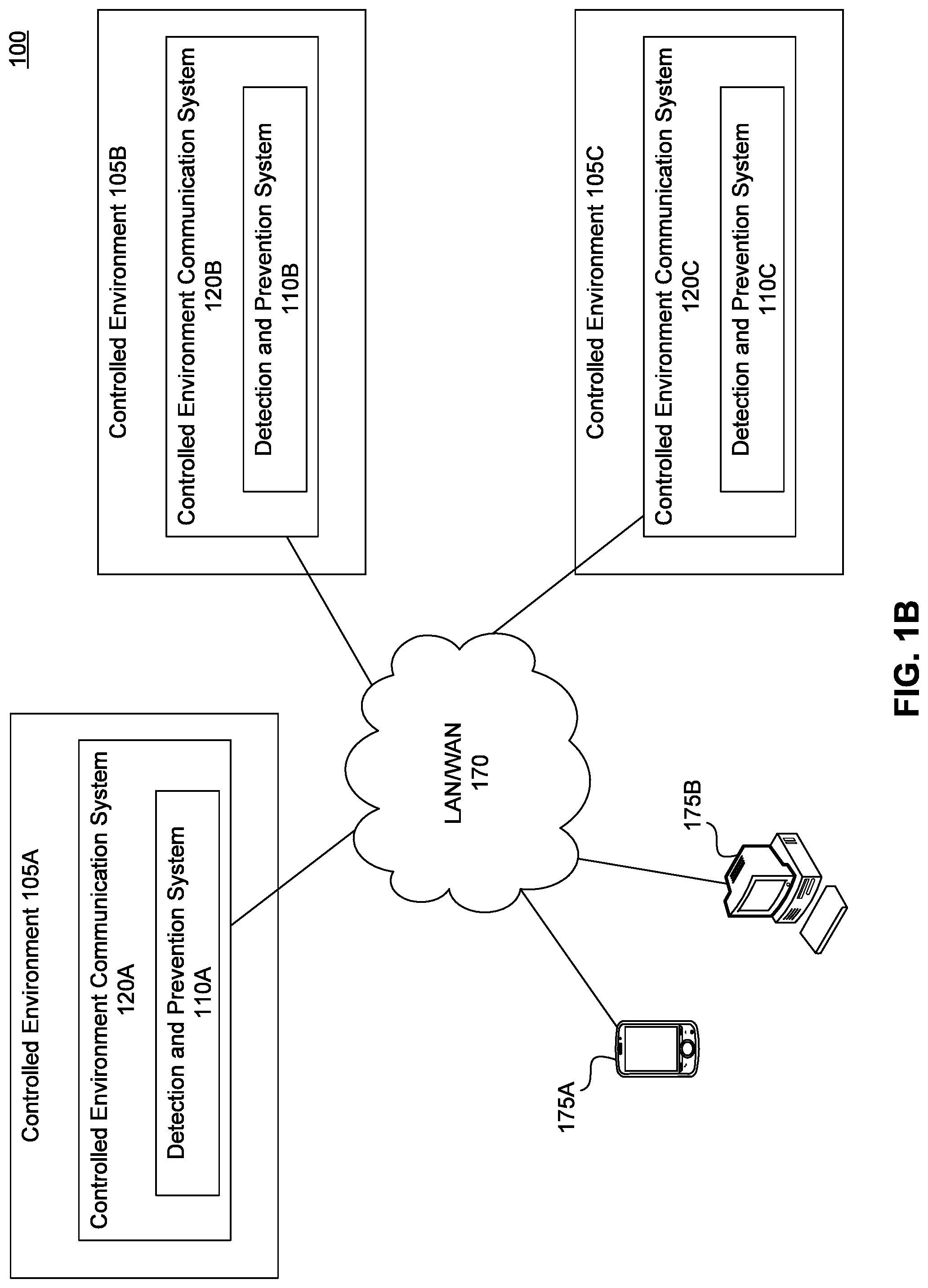

FIG. 1B illustrates localized detection and prevention systems supporting several controlled environment facilities, according to an embodiment of the invention;

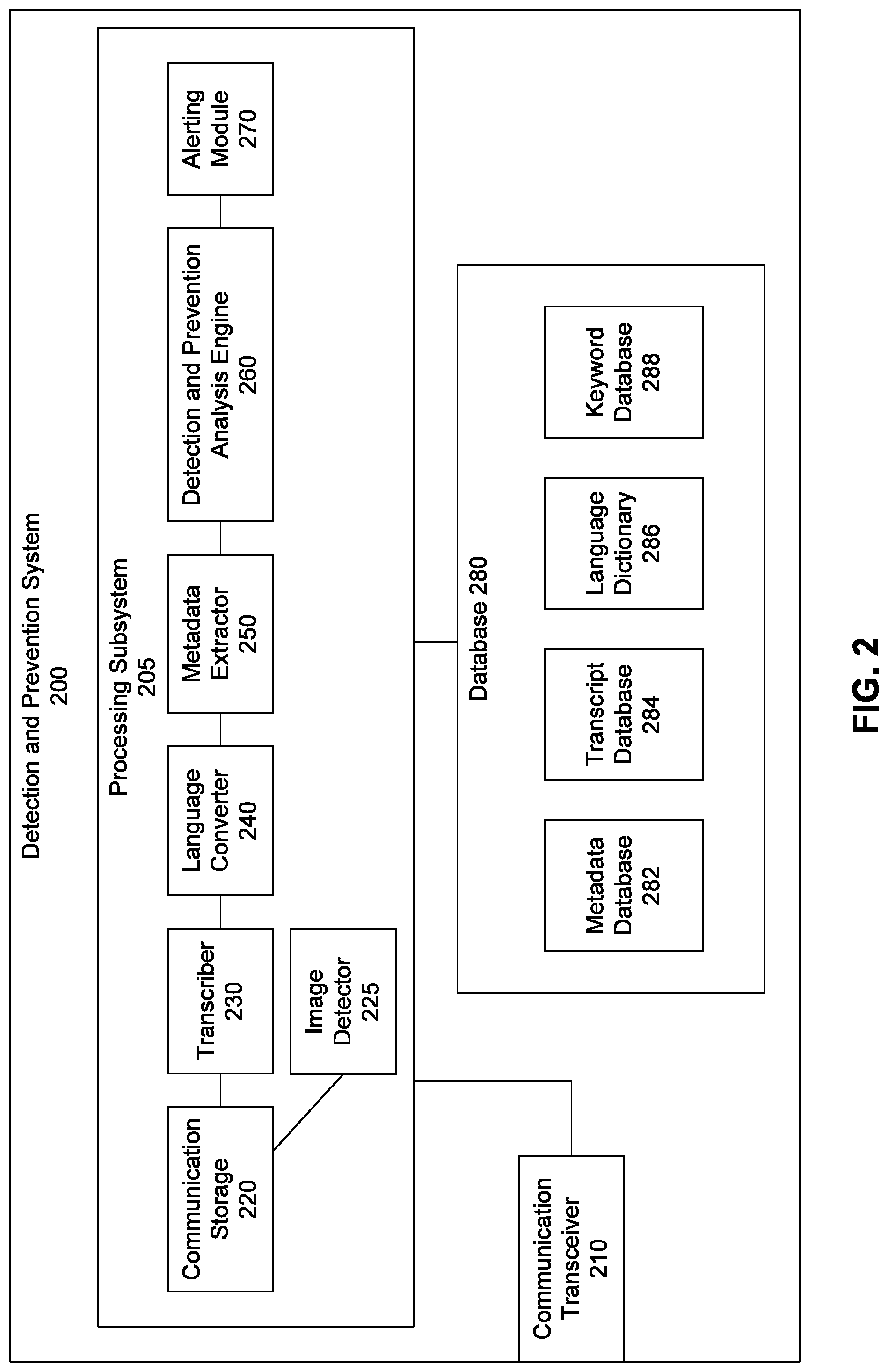

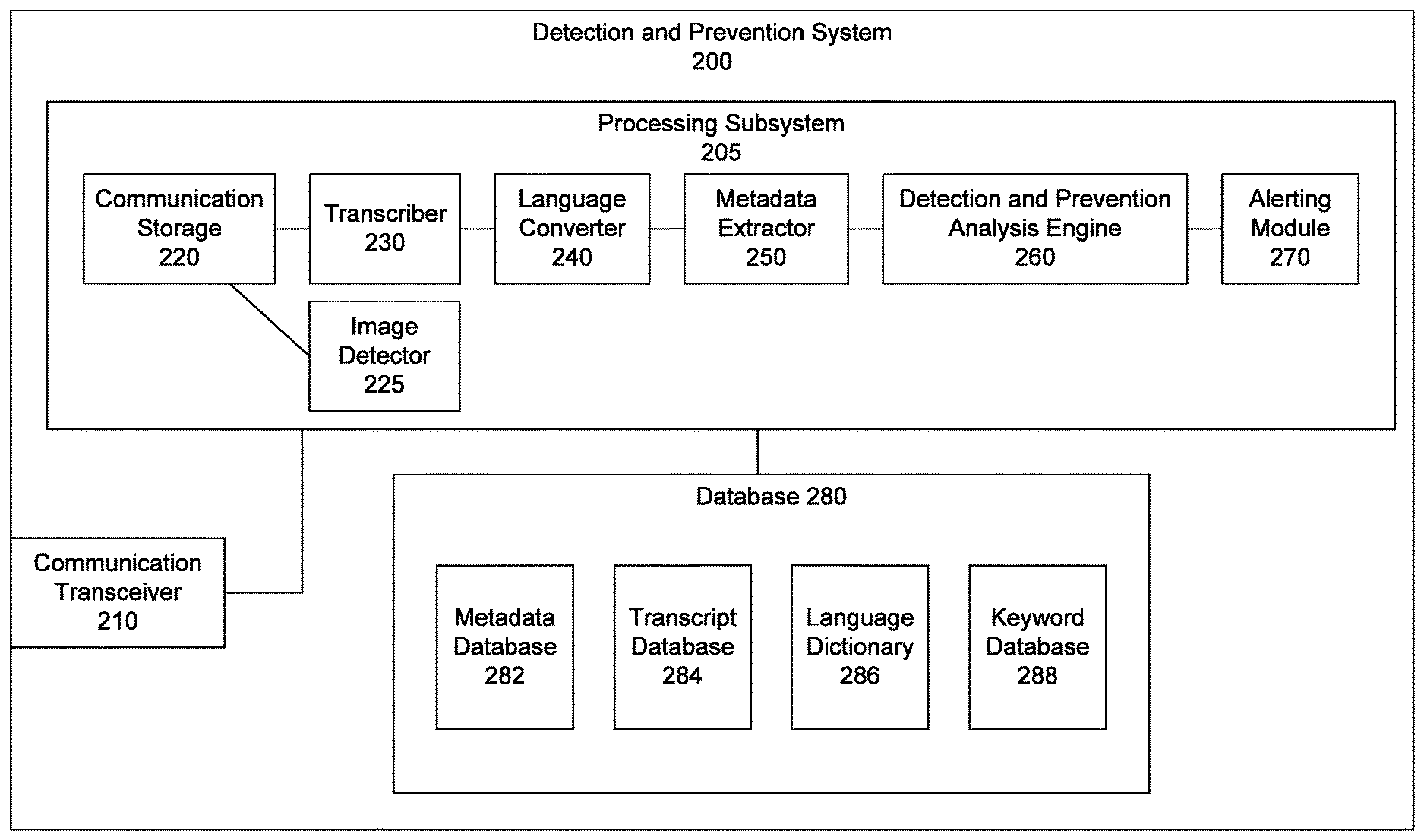

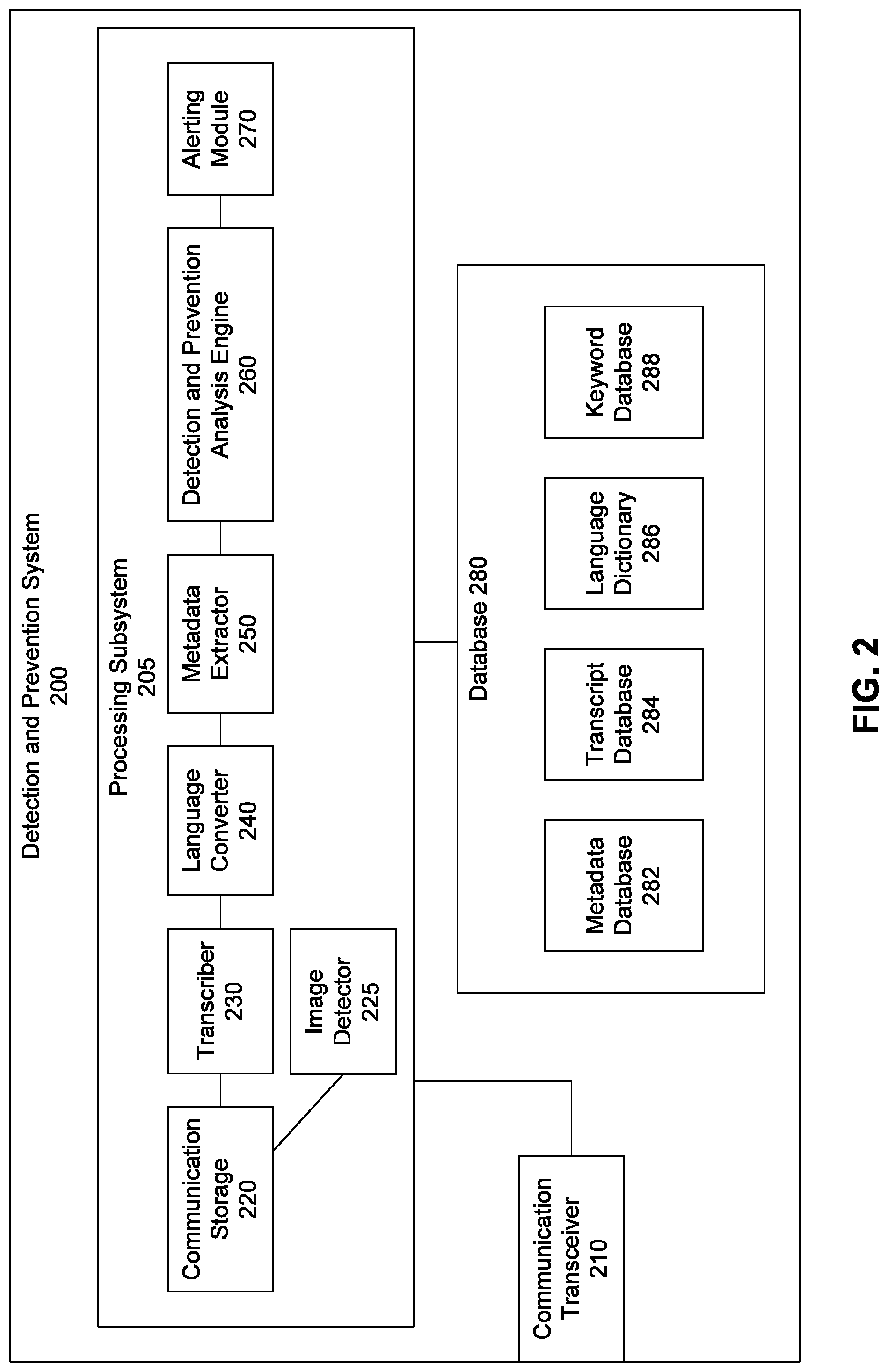

FIG. 2 illustrates a block diagram of a detection and prevention system, according to an embodiment of the invention;

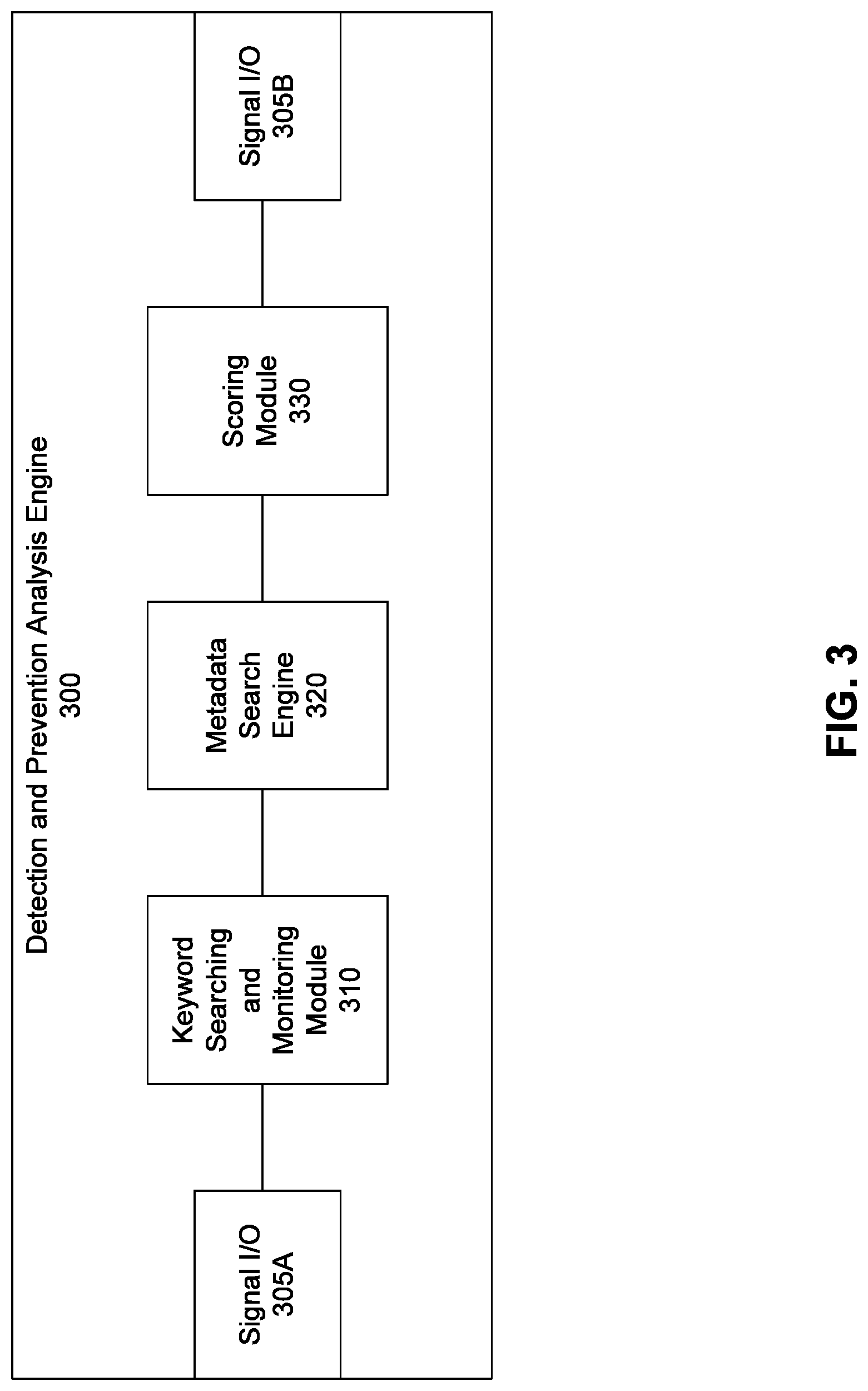

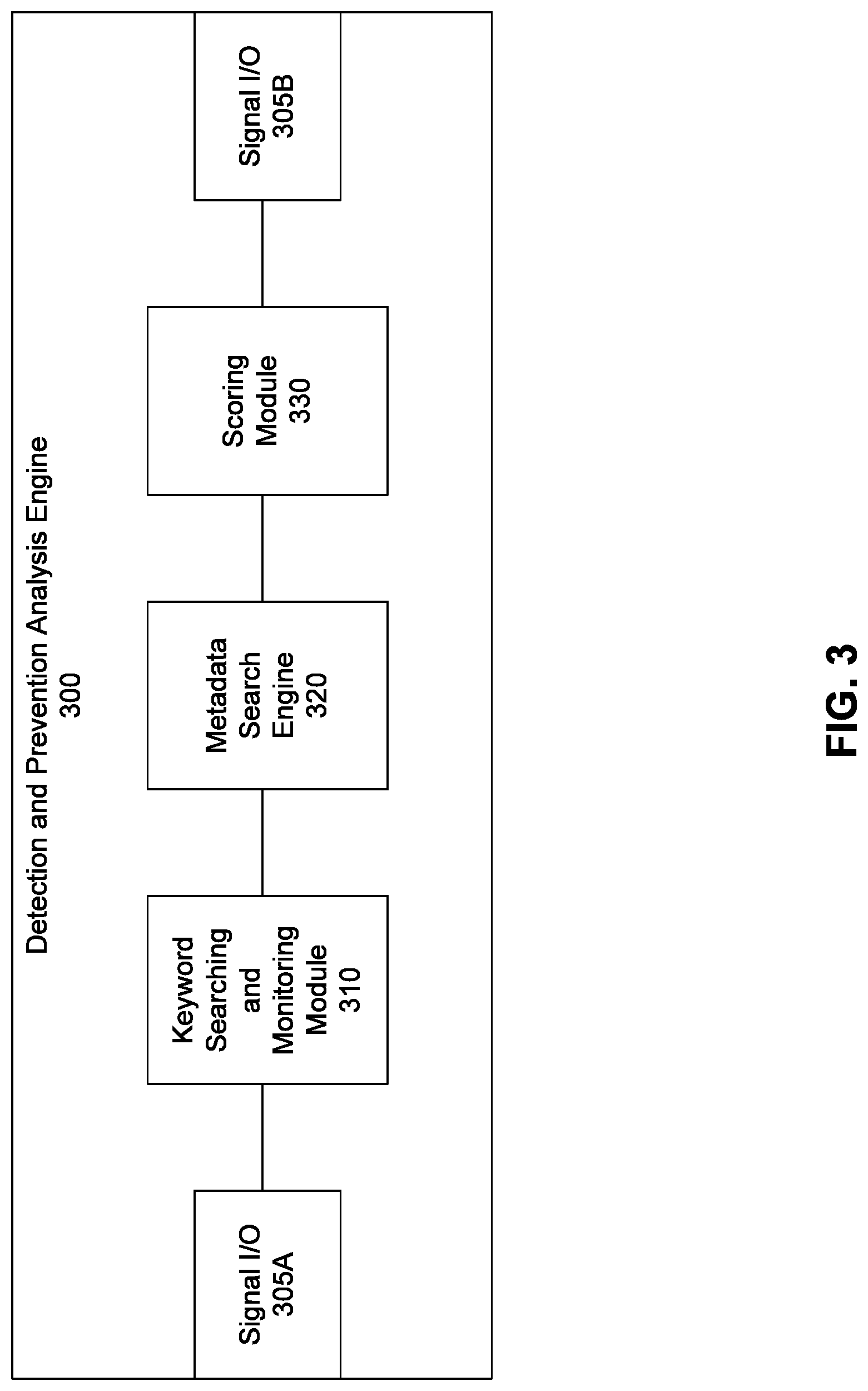

FIG. 3 illustrates a block diagram of a detection and prevention analysis engine, according to an embodiment of the invention;

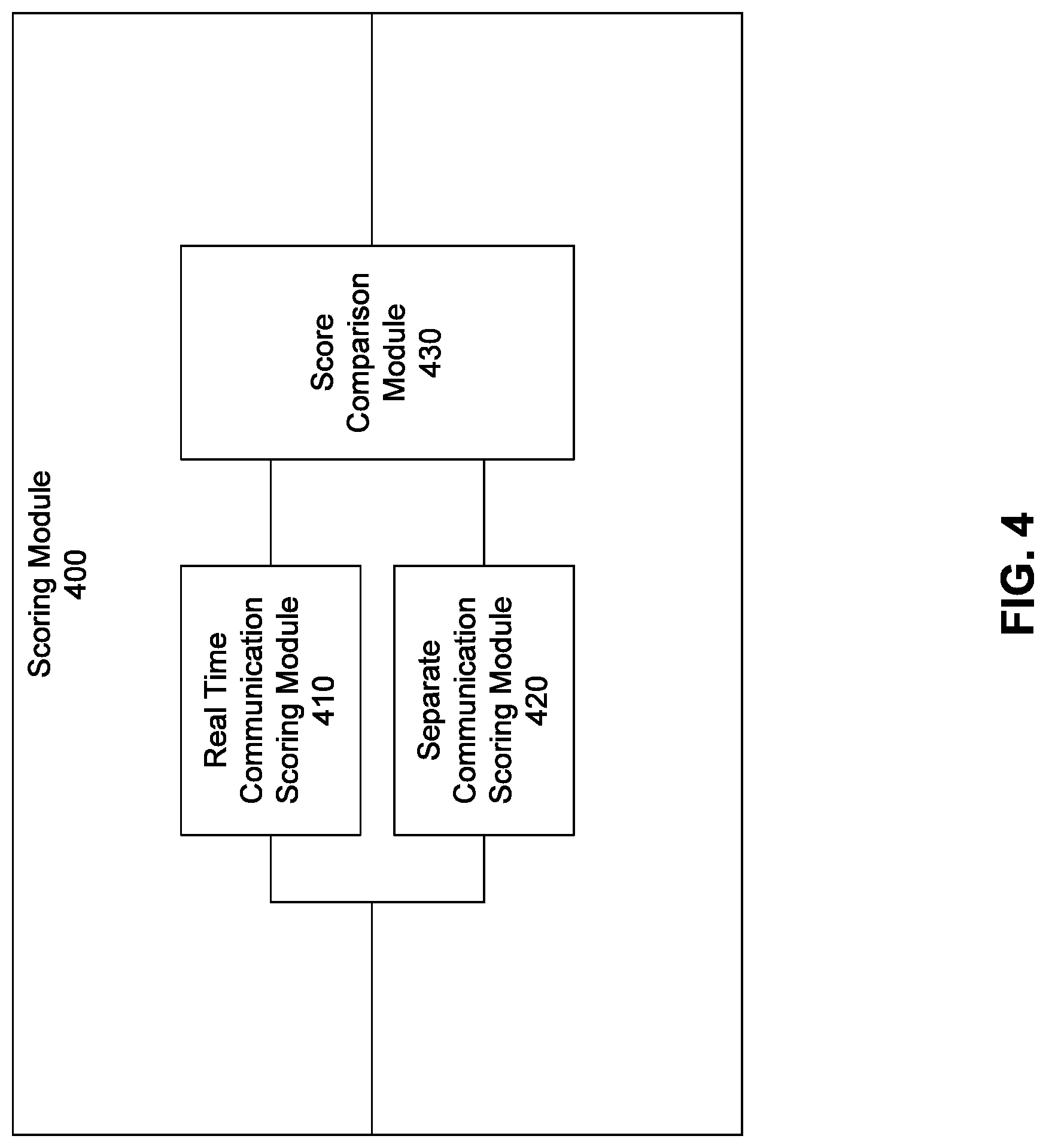

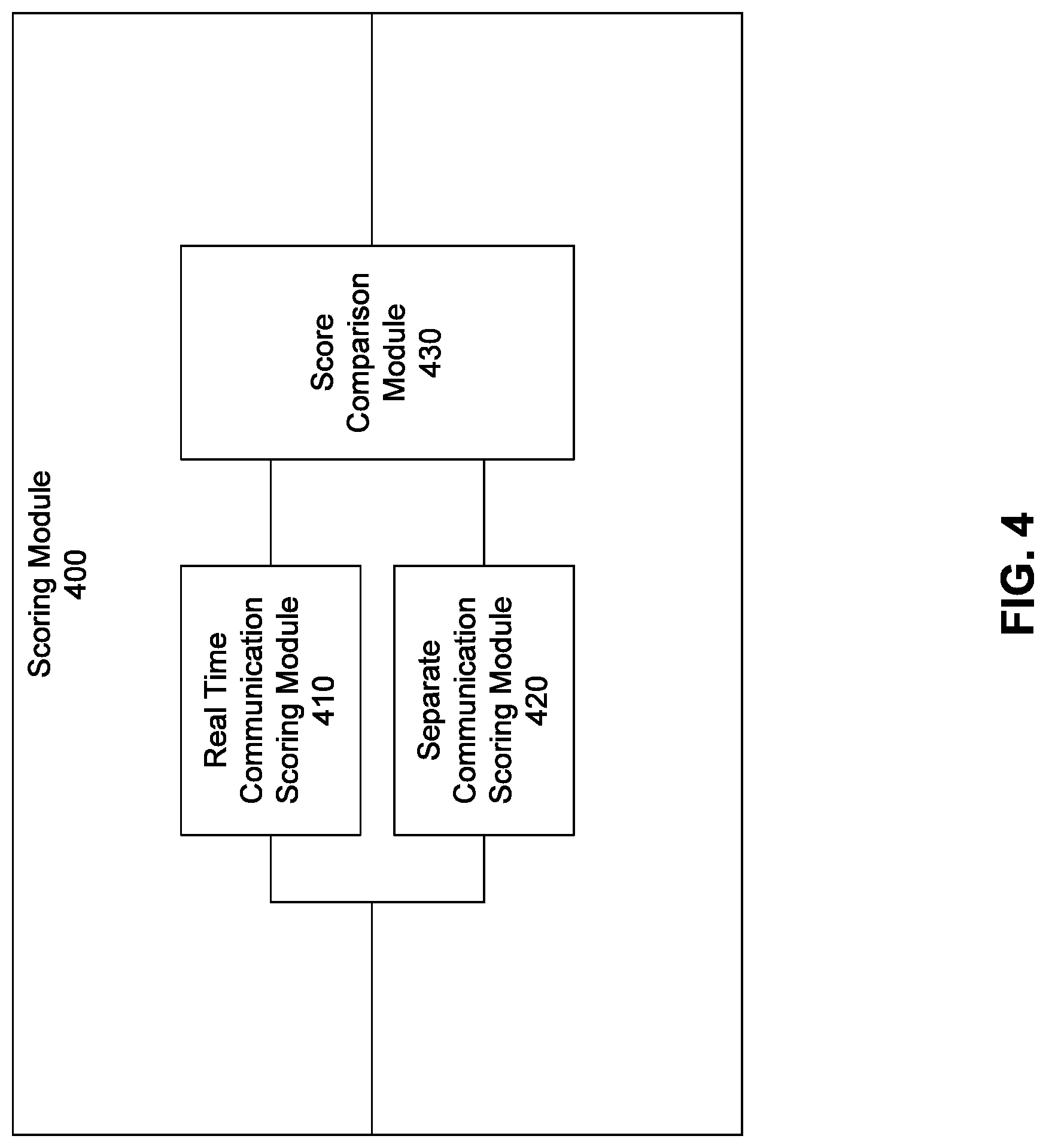

FIG. 4 illustrates a block diagram of a scoring module, according to an embodiment of the invention;

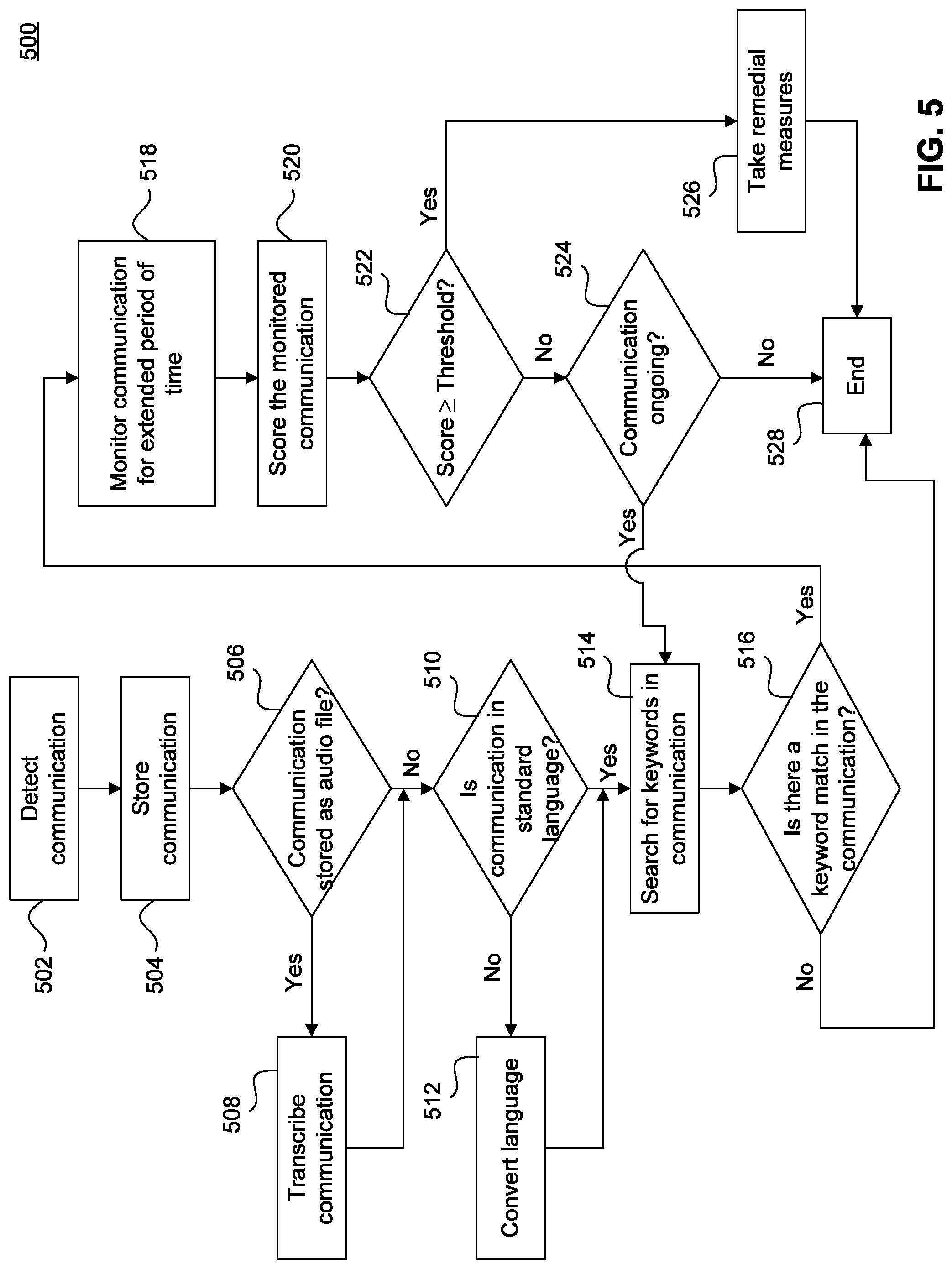

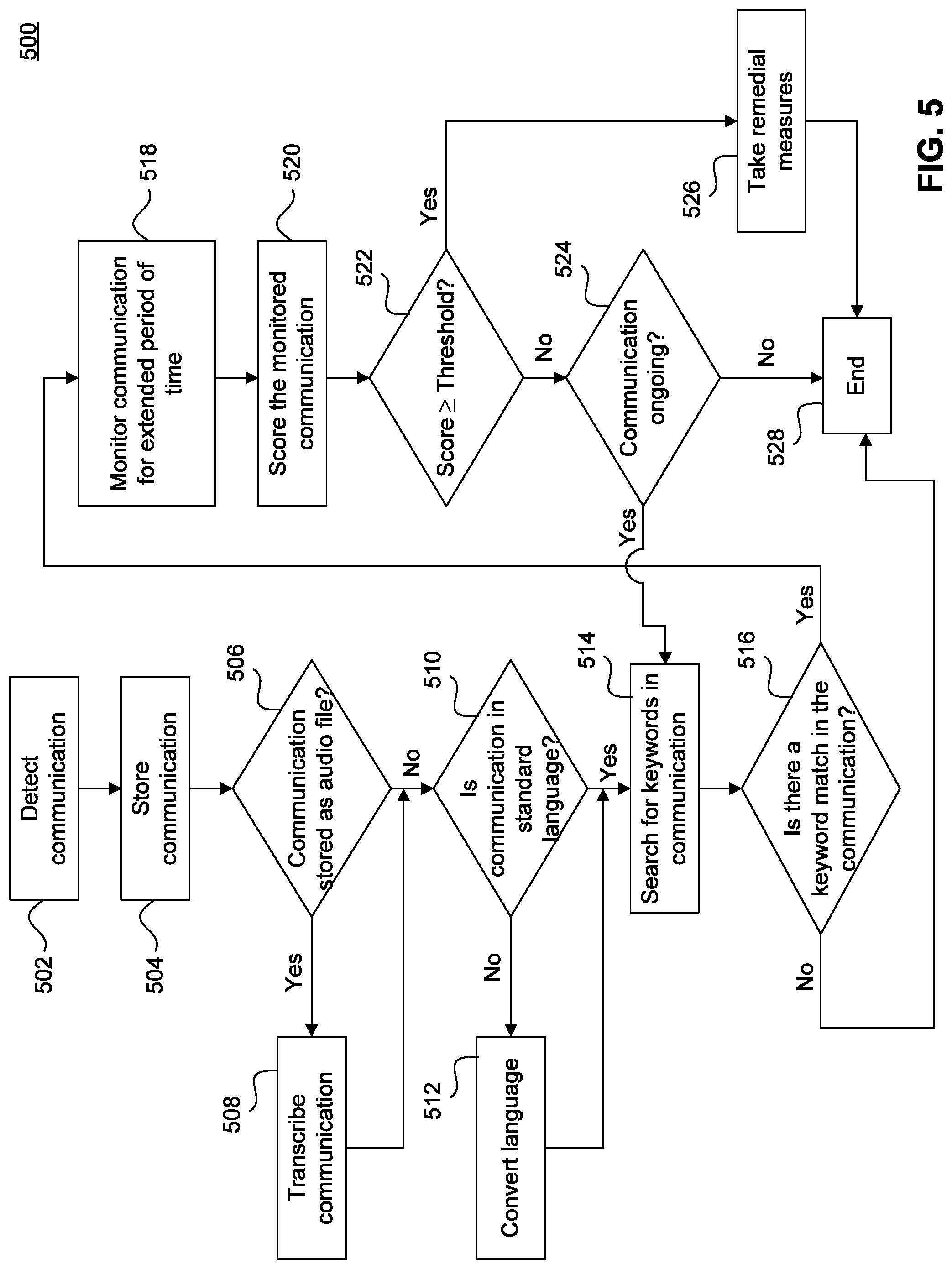

FIG. 5 illustrates a flowchart diagram of a method for detecting and preventing illegal communication, according to an embodiment of the invention;

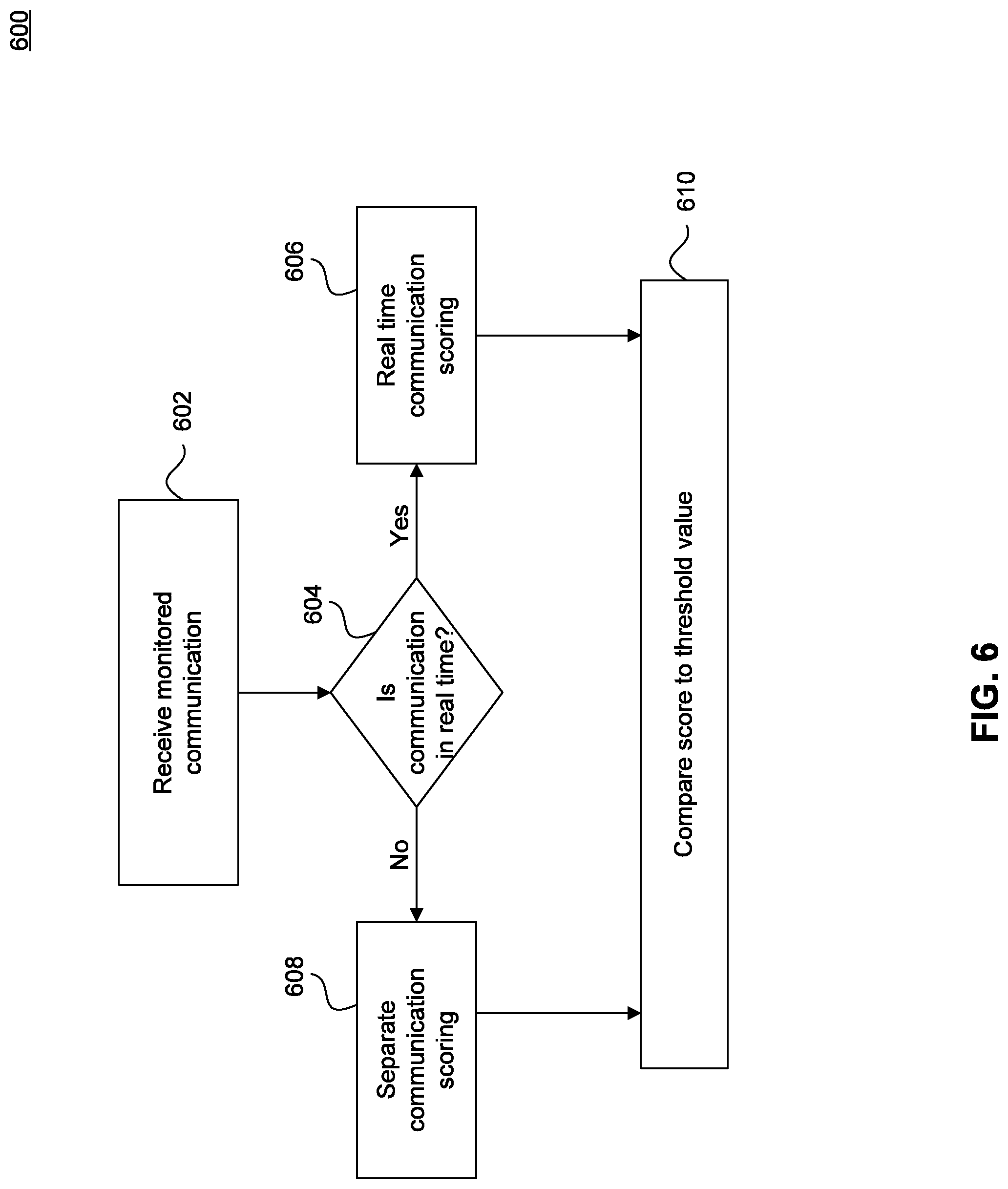

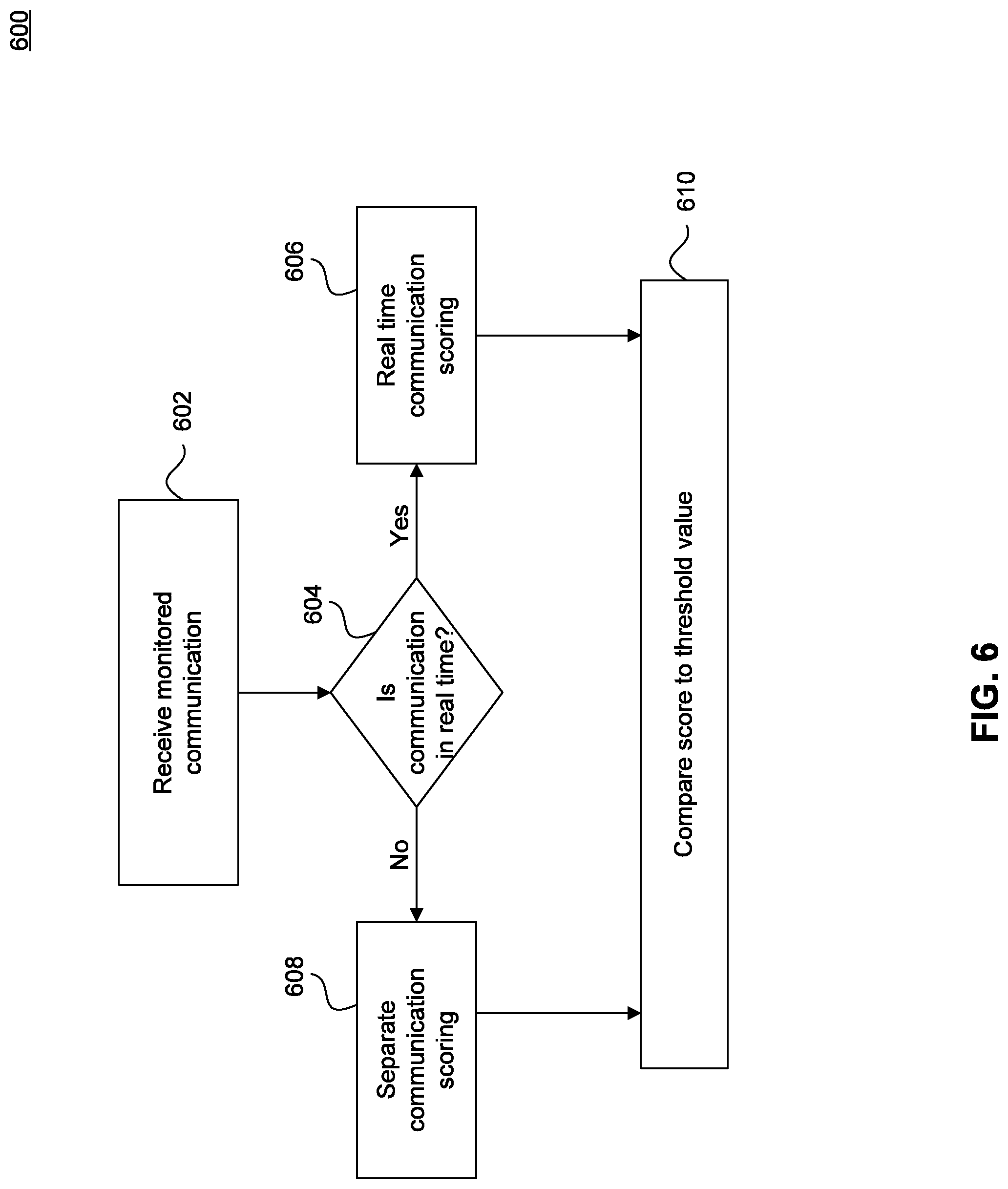

FIG. 6 illustrates a flowchart diagram of a method for scoring a monitored communication, according to an embodiment of the invention; and

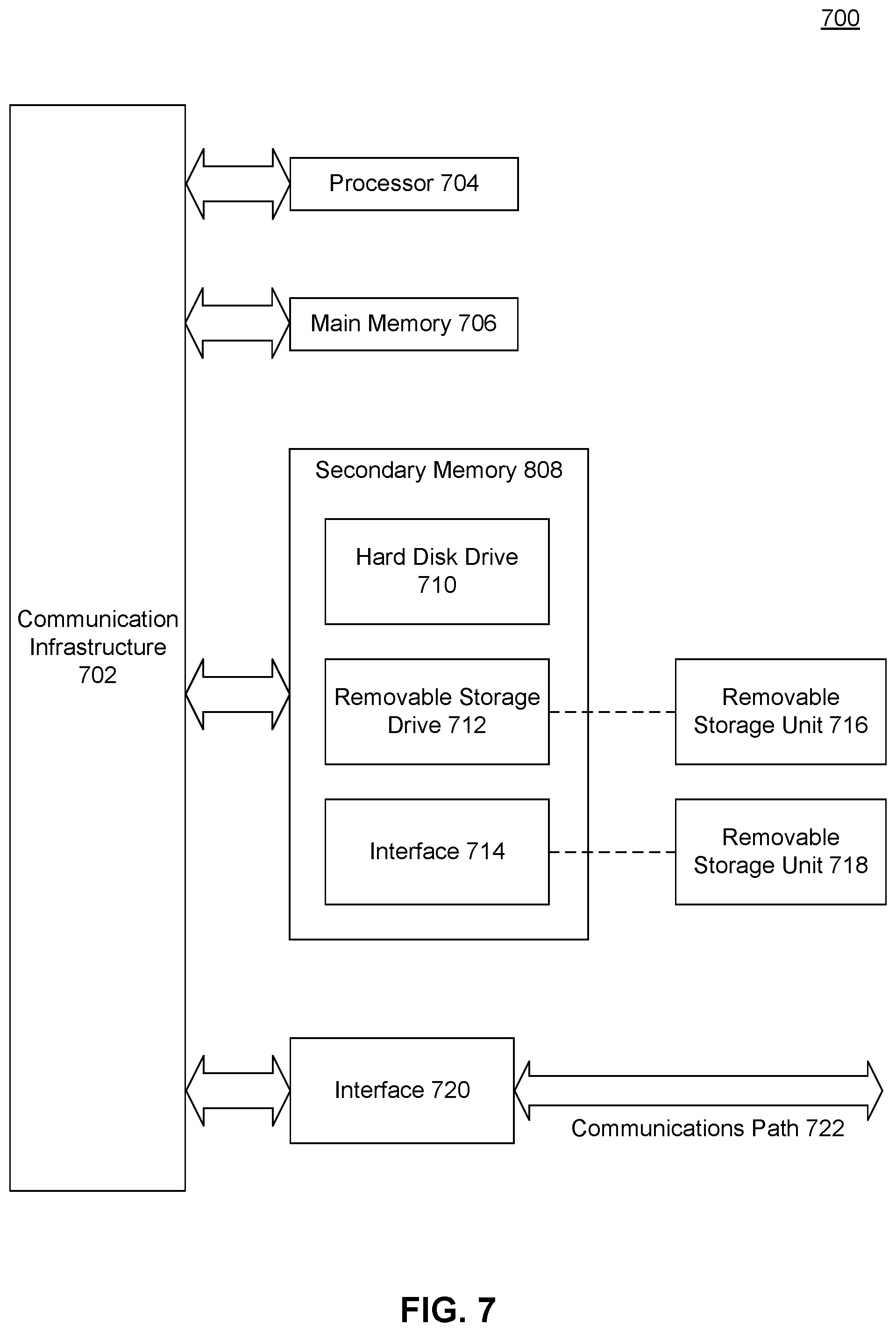

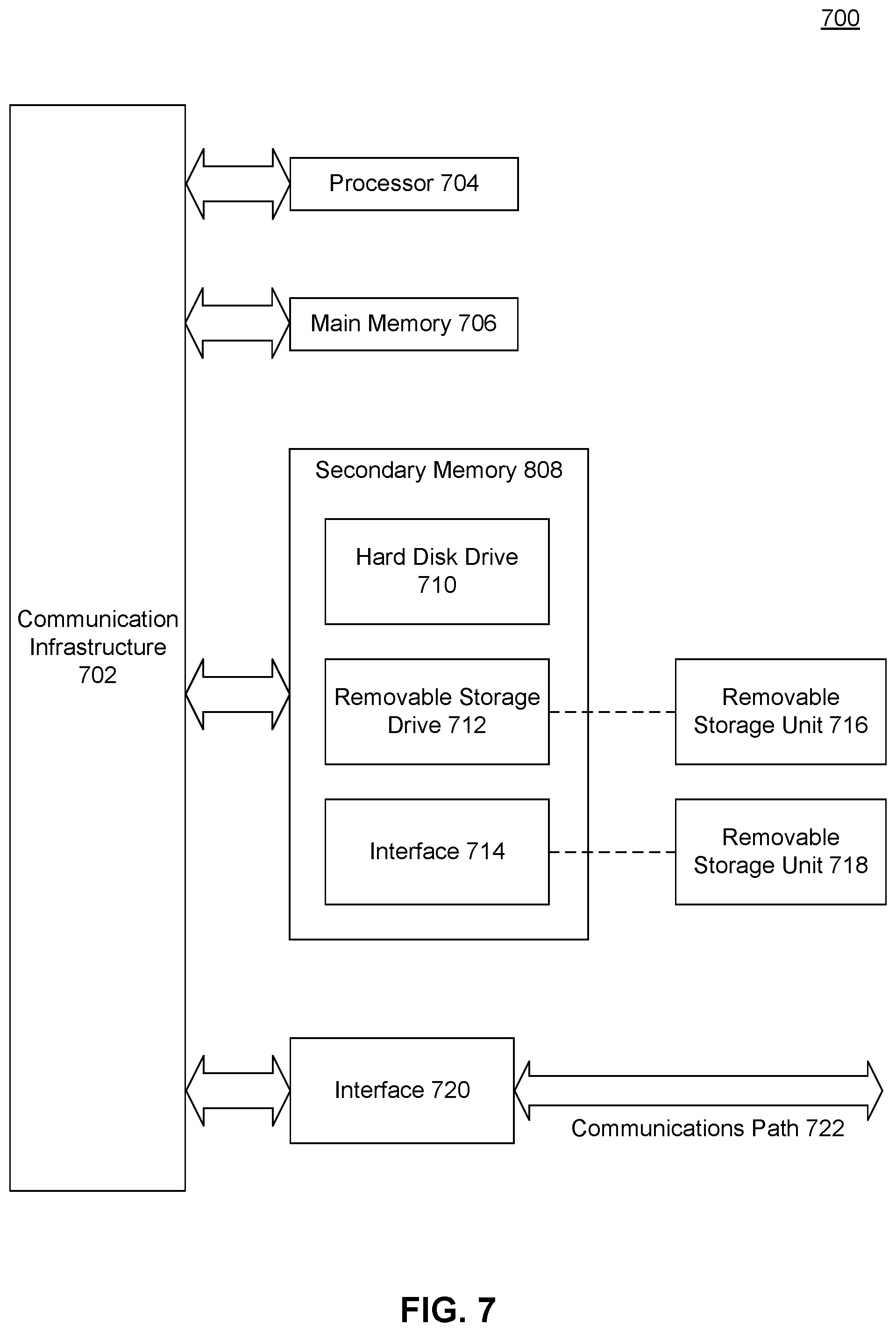

FIG. 7 illustrates a block diagram of a general purpose computer that may be used to perform various aspects of the present disclosure.

DETAILED DESCRIPTION

The following Detailed Description refers to accompanying drawings to illustrate exemplary embodiments consistent with the disclosure. References in the Detailed Description to "one exemplary embodiment," "an exemplary embodiment," "an example exemplary embodiment," etc., indicate that the exemplary embodiment described may include a particular feature, structure, or characteristic, but every exemplary embodiment may not necessarily include the particular feature, structure, or characteristic. Moreover, such phrases are not necessarily referring to the same exemplary embodiment. Further, when a particular feature, structure, or characteristic is described in connection with an exemplary embodiment, it is within the knowledge of those skilled in the relevant art(s) to effect such feature, structure, or characteristic in connection with other exemplary embodiments whether or not explicitly described.

Embodiments may be implemented in hardware (e.g., circuits), firmware, computer instructions, or any combination thereof. Embodiments may be implemented as instructions stored on a machine-readable medium, which may be read and executed by one or more processors. A machine-readable medium may include any mechanism for storing or transmitting information in a form readable by a machine (e.g., a computing device). For example, a machine-readable medium may include read only memory (ROM); random access memory (RAM); magnetic disk storage media; optical storage media; flash memory devices; or other hardware devices. Further, firmware, routines, and computer instructions may be described herein as performing certain actions. However, it should be appreciated that such descriptions are merely for convenience and that such actions in fact result from computing devices, processors, controllers, or other devices executing the firmware, routines, instructions, etc. Further, any of the implementation variations may be carried out by a general purpose computer, as described below.

For purposes of this discussion, the term "module" shall be understood to include at least one of hardware (such as one or more circuits, microchips, processors, or devices, or any combination thereof), firmware, computer instructions, and any combination thereof. In addition, it will be understood that each module may include one, or more than one, component within an actual device, and each component that forms a part of the described module may function either cooperatively or independently of any other component forming a part of the module. Conversely, multiple modules described herein may represent a single component within an actual device. Further, components within a module may be in a single device or distributed among multiple devices in a wired or wireless manner.

The following Detailed Description of the exemplary embodiments will so fully reveal the general nature of the disclosure that others can, by applying knowledge of those skilled in relevant art(s), readily modify and/or adapt for various applications such exemplary embodiments, without undue experimentation, without departing from the spirit and scope of the disclosure. Therefore, such adaptations and modifications are intended to be within the meaning and plurality of equivalents of the exemplary embodiments based upon the teaching and guidance presented herein. It is to be understood that the phraseology or terminology herein is for the purpose of description and not of limitation, such that the terminology or phraseology of the present specification is to be interpreted by those skilled in relevant art(s) in light of the teachings herein.

Those skilled in the relevant art(s) will recognize that this description may be applicable to many different communications protocols.

American correctional facilities house millions of individuals in controlled environments all over the country. An ongoing problem for such correctional facilities is the ability of inmates to communicate with other inmates in order to arrange illegal activities. Such illegal activities might include a hit (i.e., an orchestrated killing) on a person who is incarcerated or who is a member of the general public. Another example of such illegal activities might be to arrange and organize a riot. The ability of inmates to plan and coordinate these events with other inmates over the prison communication system is a serious security concern with potentially fatal consequences.

Generally, correctional facilities restrict phone access by designating the time and place of calls permitted to the inmates. Correctional facilities also restrict phone access for inmates by permitting communication with only preapproved individuals or prohibiting inmates from dialing specific numbers. In extreme situations, correctional facilities may terminate all prison communication services for a certain period of time, particularly during a riot or other emergency situation.

Most communications made by inmates are closely monitored by correctional facilities. However, regardless of the measures taken by correctional facilities, inmates repeatedly attempt to avoid detection of suspicious communication regarding illegal activities by relaying messages to other inmates or third parties outside of the correctional facilities. For example, in some instances, an inmate may be permitted to directly contact an inmate within their own facility, or within a separate facility. This provides a direct line of communication to facilitate the coordination of criminal acts. In other instances, an inmate may call an outside third party and instruct the third party to contact another inmate in order to relay certain information. In this manner, the inmate is able to skirt the restrictions in order to effectively "communicate" with the other inmate. Thus, even without the direct line of communication, coordination can still occur via the use of the intermediary.

As illustrated by these examples, there are many unique concerns associated with monitoring the communications by inmates of a controlled facility. To further complicate matters, certain facilities may be outfitted to allow inmates to carry personal inmate devices (PIDs) and use their own personal devices, in the form of tablet computers, smartphones, etc., for personal calls and digital content streaming, among other uses.

With these concerns in mind, it is preferable to implement a system and/or method of detecting and preventing inmate-to-inmate message relays. To that end, the following description is provided for a system and method which monitors separate inmate communications for similar phrases. These similar phrases may be overlapping in real time, for example as part of a conference call, or can occur at separate times in separate communications. Communications are scored based on their similarity, and the resulting score is compared to a threshold. If the score is above the threshold, any number of preventative measures are taken, such as flagging the communications and alerting authorities. Additional measures may include identifying whether the communications contain illegal matter and then disconnecting the communications, restricting future communications, and/or preparing countermeasures to thwart the illegal activity.
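The scoring and threshold comparison described above can be illustrated with a minimal sketch. It is not the disclosed implementation: it assumes the communications are already available as text, treats a "phrase" as a two-word sequence, and uses hypothetical names (`phrases`, `similarity_score`, `is_flagged`) and a hypothetical `relevancy` weighting table chosen only for illustration.

```python
import re
from collections import Counter


def phrases(text, n=2):
    """Count the lowercase word n-grams that occur in text (assumed phrase model)."""
    words = re.findall(r"[a-z']+", text.lower())
    return Counter(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))


def similarity_score(text_a, text_b, relevancy=None, n=2):
    """Score two communications by the phrases they share.

    Each shared phrase contributes the number of times it appears in both
    texts multiplied by a relevancy weight (1.0 when no weight is given).
    """
    relevancy = relevancy or {}
    counts_a, counts_b = phrases(text_a, n), phrases(text_b, n)
    score = 0.0
    for phrase in counts_a.keys() & counts_b.keys():
        times_shared = min(counts_a[phrase], counts_b[phrase])
        score += times_shared * relevancy.get(phrase, 1.0)
    return score


def is_flagged(score, threshold):
    """A communication is flagged only when its score is above the threshold."""
    return score > threshold


first = "Tell him the package arrives Friday at the north gate"
second = "He said the package arrives Friday, be at the north gate"
weights = {"north gate": 3.0}  # hypothetical relevancy weight for an unusual phrase

score = similarity_score(first, second, weights)
print(score, is_flagged(score, threshold=5.0))
```

In this sketch, weighting an uncommon phrase more heavily than everyday wording lets a single distinctive overlap move a pair of communications past the threshold, while ordinary shared words contribute little.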

Exemplary Centralized Detection and Prevention System

FIG. 1A illustrates an exemplary centralized detection and prevention system environment 100. In the environment 100, a detection and prevention system 110 is a centralized system that is connected to one or more controlled environments 105A-C through each respective controlled environment communication system 120A-C. In an embodiment, the controlled environment communication systems 120A-C control the majority of communications coming into or leaving the respective facilities, particularly those by or for inmates within the facility. The detection and prevention system 110 may be connected through wired and/or wireless connections to the controlled environment communication systems 120A-C. In an embodiment, so as to facilitate communication with outside third parties, the detection and prevention system 110 is connected to one or both of the PSTN 150 and the Internet 160. Each of the PSTN 150 and the Internet 160 may be connected to various communications devices, such as devices 155 and 165.

In an embodiment, the detection and prevention system 110 performs a variety of functions with respect to the communications, such as monitoring for key words and/or phrases indicative of a rules violation, recording conversations for future review by an investigator or other authorized person, and call control, such as disconnecting the call in response to the detection of a rules violation, alerting an official of a rules violation, etc. The function of the detection and prevention system 110 may vary depending on the time sensitivity of communications between outsider parties and inmates in the controlled environments 105A-C. For time sensitive communications, such as live telephone calls, the detection and prevention system 110 is transparent to the communication and monitors the ongoing communication until the detection and prevention system 110 detects a rules violation or until the communication ends. For non-time sensitive communications, such as emails and texts, the detection and prevention system 110 may act as a communication interceptor, withholding the communication while it is being analyzed for a rules violation before releasing the communication (e.g., forwarding it) to the intended recipient.
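The two handling modes described above, transparent monitoring for time sensitive communications and interception for non-time sensitive communications, can be sketched as follows. This is a minimal sketch under stated assumptions: the communication kinds, the `Outcome` type, and the caller-supplied `violates` predicate are hypothetical names introduced for the example, and the actual rules-violation analysis is not modeled.

```python
from dataclasses import dataclass

# Hypothetical labels for the two classes of communication.
REAL_TIME_KINDS = {"voice_call", "video_call"}
STORE_AND_FORWARD_KINDS = {"email", "text"}


@dataclass
class Outcome:
    delivered: list   # content that reached the recipient
    violation: bool   # whether a rules violation was detected


def monitor_live(chunks, violates):
    """Transparent mode: each chunk passes through as it occurs and is
    checked in parallel; monitoring stops at the first violation."""
    delivered = []
    for chunk in chunks:
        delivered.append(chunk)        # the live call is not delayed
        if violates(chunk):
            return Outcome(delivered, True)   # e.g., disconnect the call here
    return Outcome(delivered, False)


def intercept_message(message, violates):
    """Interceptor mode: the whole message is withheld until analyzed."""
    if violates(message):
        return Outcome([], True)       # never released to the recipient
    return Outcome([message], False)   # released (forwarded) after analysis


def handle(kind, payload, violates):
    """Dispatch on the time sensitivity of the communication."""
    if kind in REAL_TIME_KINDS:
        return monitor_live(payload, violates)
    if kind in STORE_AND_FORWARD_KINDS:
        return intercept_message(payload, violates)
    raise ValueError(f"unknown communication kind: {kind}")
```

The difference between the modes is visible in what reaches the recipient: a live call has already carried the violating speech by the time it is detected, whereas a withheld email is never delivered.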

In an embodiment, the controlled environment communication system 120A provides a local wireless network 130 for providing wireless communication connectivity to wireless communication devices 135A. Wireless communication devices 135A may include electronic wireless communication devices such as smart phones, tablet computers, PIDs, game consoles, and laptops, among others. In an embodiment, wired communication devices 135B are also connected to the controlled environment communication system 120A. Wired communication devices 135B may include telephones, workstations, computers, in-pod kiosks, game consoles, and fax machines, among others.

In an embodiment, communication devices outside of the controlled environment 145A-B communicate with devices within the controlled environment 105A. Wireless communication devices 145A may be connected to the controlled environment communication system 120A through a wireless network 140. In an embodiment, wired communication devices 145B are connected directly to the controlled environment communication system 120A without an intervening network.

Exemplary Localized Detection and Prevention System

FIG. 1B illustrates an exemplary localized detection and prevention system environment 100. In the environment 100, detection and prevention systems 110A-C are localized systems that are located in one or more controlled environments 105A-C. Most communications flowing in and out of controlled environments 105A-C are controlled by the controlled environment communication systems 120A-C. Most communications flowing through the controlled environment communication systems 120A-C are monitored by the localized detection and prevention systems 110A-C. The detection and prevention systems 110A-C may be connected through wired and/or wireless connections to the controlled environment communications systems 120A-C. In an embodiment, so as to facilitate communication with other controlled environments 105A-C and outsider third parties, the detection and prevention systems 110A-C are connected to one or both of the LAN and WAN 170 networks, such as the PSTN and the Internet, among others. Each of the WAN and LAN 170 networks may provide connections for various communications devices 175A-B.

Exemplary Detection and Prevention System

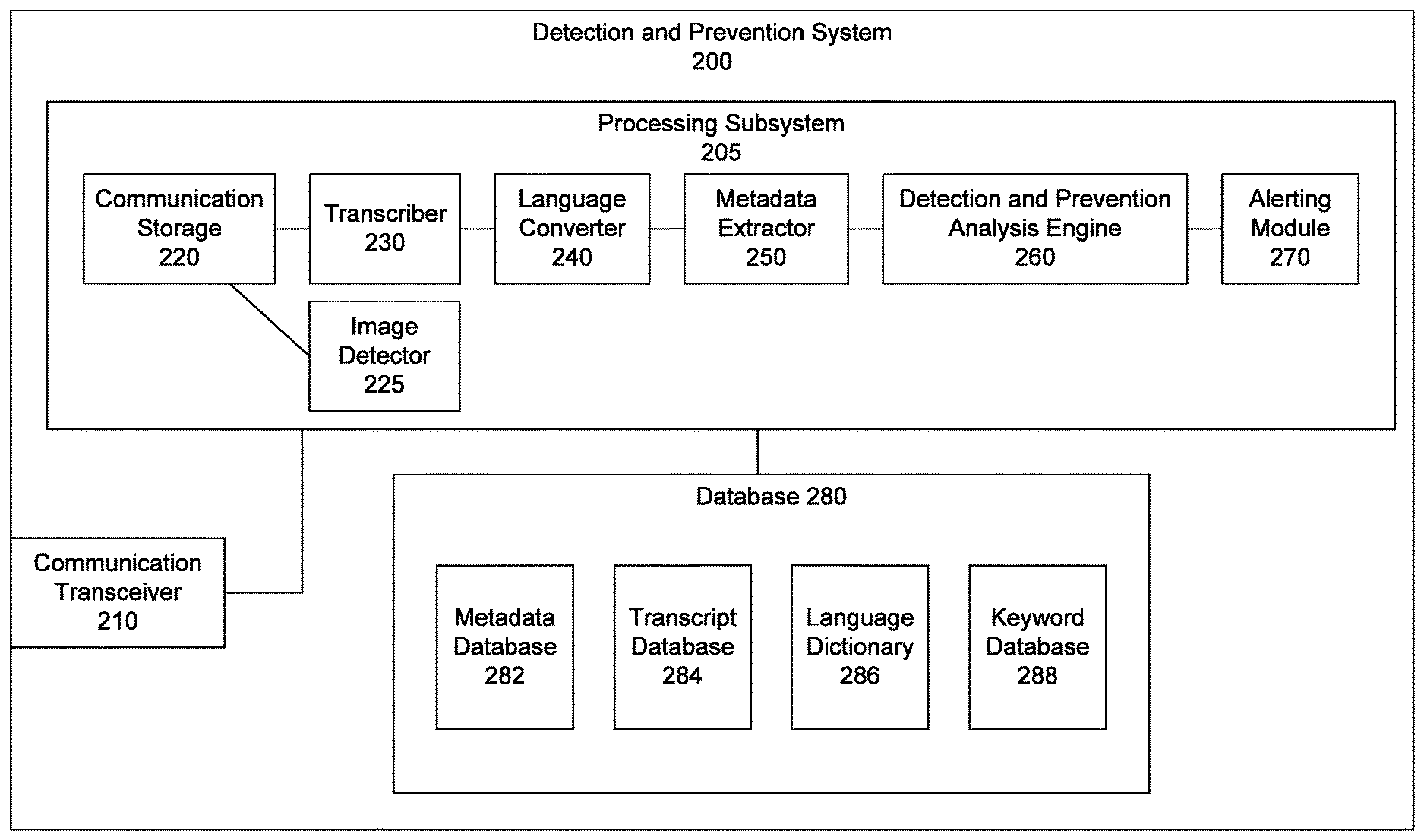

FIG. 2 illustrates a block diagram of a detection and prevention system 200, according to an embodiment of the invention. The detection and prevention system 200 includes at least a processing subsystem 205, a communication transceiver 210, and a database 280, and may represent an exemplary embodiment of any of the detection and prevention systems 110 illustrated in FIGS. 1A and 1B. The processing subsystem 205 includes a communication storage 220, an image detector 225, a transcriber 230, a language converter 240, a metadata extractor 250, a detection and prevention analysis engine 260, and an alerting module 270. The database 280 includes a metadata database 282, a transcript database 284, language dictionary 286, and a keyword database 288.

The communication transceiver 210 detects communications sent to/from the controlled environment. The communications may be time sensitive, such as phone calls or video conferences, in which case the communication transceiver 210 detects real time communications between inmates and outsider third parties. Examples of real time communications include voice calls, video conference calls, video visitation sessions, video hand messaging sessions, and multi-player game sessions, among others. In another embodiment, the communication transceiver 210 detects non-time sensitive communications such as voice messages, email messages, and text messages, among others. The communication transceiver 210 is connected to the processing subsystem 205 so that the communication can be further analyzed.

The communication storage 220 receives and stores communications detected by the communication transceiver 210, according to an embodiment of the invention. In an embodiment, communications detected by the communication transceiver 210 may be in the form of audio, video, text, or other suitable formats depending on the type of communication received. The communication storage 220 stores each communication in the file format in which it was detected by the communication transceiver 210, according to the type of communication. Audio communications are stored in various uncompressed and compressed audio file formats, such as WAV, AIFF, FLAC, WavPack, and MP3, among others. Video communications are stored in various video file formats such as WMV, AVI, and QuickTime, among others. Text communications are stored in various text file formats such as TXT, CSV, and DOC, among others.

The image detector 225 receives and analyzes video files stored in the communication storage 220, according to an embodiment of the invention. In order to determine whether two or more inmates are present on the same video communication, the image detector 225 captures images from the video communication and utilizes well-known 2D and 3D image recognition algorithms to determine the images of the participants. The image detector 225 compares the determined images of the video communication participants with images of inmates at the controlled environment. Upon a determination by the image detector 225 that there are two or more inmates present on the same video communication, the image detector 225 transmits a notification signal regarding the violating communication to the alerting module 270. In an embodiment, the image detector 225 can determine the presence of the same inmate on two or more simultaneous video communications made to the same phone number, IP address, or MAC address. In other words, the image detector 225 is capable of detecting a violating video communication between two or more inmates connected by each inmate separately calling the same phone number, IP address, or MAC address at the same time.
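The two checks attributed to the image detector 225 can be illustrated with a short sketch. The sketch assumes that image recognition has already reduced each video communication to a list of recognized participant identifiers; the recognition step itself is not modeled, and the function names, identifiers, and data shapes below are hypothetical.

```python
from collections import defaultdict


def inmates_on_call(participants, roster):
    """Participants of one video communication who match the inmate roster."""
    return set(participants) & set(roster)


def calls_with_multiple_inmates(calls, roster):
    """IDs of communications on which two or more inmates are present.

    `calls` maps a call ID to the recognized participant identifiers.
    """
    return [
        call_id
        for call_id, participants in calls.items()
        if len(inmates_on_call(participants, roster)) >= 2
    ]


def shared_destinations(active_calls, roster):
    """Destinations being called by two or more inmates at the same time.

    `active_calls` is an iterable of (inmate_id, destination) pairs for
    calls in progress simultaneously; a destination may be a phone
    number, IP address, or MAC address.
    """
    callers_by_destination = defaultdict(set)
    for inmate_id, destination in active_calls:
        if inmate_id in roster:
            callers_by_destination[destination].add(inmate_id)
    return {
        destination: callers
        for destination, callers in callers_by_destination.items()
        if len(callers) >= 2
    }
```

Either a non-empty list from `calls_with_multiple_inmates` or a non-empty mapping from `shared_destinations` would correspond to the condition under which a notification signal is sent to the alerting module 270.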

The transcriber 230 receives and transcribes audio files stored in the communication storage 220, according to an embodiment of the invention. In order to monitor the communications for rule violations, the transcriber 230 directly transcribes the audio file into a text file. The resulting text file contains the transcript of the detected communication. In an embodiment, the transcriber 230 receives and transcribes audio associated with video files stored in the communication storage 220.

The transcriber 230 performs analysis of the audio file for the transcription and annotation of speech signals by comparing the speech signals with known sound waveforms and speech signature frequencies stored in the language dictionary 286. The language dictionary 286 contains sound waveforms and speech signature frequencies for different languages. In an embodiment, the transcriber 230 can analyze an audio file containing different languages by comparing it with the sound waveforms and speech signature frequencies for the corresponding language contained in the language dictionary 286. In another embodiment, the transcriber 230 conducts voice analysis of the audio file, recognizes phonemes and/or intonations, and identifies the speech as associated with the different speakers using voice analysis techniques. In other words, the transcriber 230 can assign speech segments to different speakers in order to generate a dialog transcript of the actual conversation. The transcriber 230 lists and names the different participants in the audio file and labels the sentences in the transcript in order to indicate which speaker is communicating in that particular portion of the transcript. The transcriber 230 transcribes events that occur during the conversation, such as long pauses, extra dialed digits, and progress tones, among others, into the transcript. The transcriber 230 can conduct phonetic transcription of the audio based on a phoneme chart stored in the language dictionary 286.
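As a non-limiting illustration of the dialog transcript described above, the following sketch renders speaker-labeled segments and inserts a long-pause event between them. Speech recognition and speaker identification are assumed to have already produced the segments; the `Segment` type, the five-second pause threshold, and the bracketed event notation are hypothetical choices for the example.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start: float    # seconds from the start of the audio
    end: float
    speaker: str    # label assigned by voice analysis (assumed done upstream)
    text: str


def render_transcript(segments, pause_threshold=5.0):
    """Return transcript lines labeled by speaker, with long pauses noted.

    A gap of at least `pause_threshold` seconds between consecutive
    segments is recorded as an event line.
    """
    lines = []
    previous_end = None
    for segment in sorted(segments, key=lambda s: s.start):
        if previous_end is not None:
            gap = segment.start - previous_end
            if gap >= pause_threshold:
                lines.append(f"[pause {gap:.1f}s]")
        lines.append(f"{segment.speaker}: {segment.text}")
        previous_end = segment.end
    return lines
```

Other events mentioned in the description, such as extra dialed digits or progress tones, could be recorded as additional bracketed lines in the same way.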

The language converter 240 receives the communications from the transcriber 230 and the communication storage 220 and converts the communications into a standard language, according to an embodiment of the invention. As mentioned above, the communications may be conducted in different languages. The conversion into a standard language facilitates a more efficient and universal method of monitoring the communications to detect rule violations. In an embodiment, the language converter 240 converts the communications into English. The language dictionary 286 contains dictionaries for many different languages. When the language converter 240 receives a communication containing languages other than English, the language converter 240 searches the language dictionary 286 for the corresponding words and phrases in English and converts the communication accordingly. Using well-known grammatical algorithms, the language converter 240 is able to perform the language conversion without significant grammatical errors in the converted text. The conversion results in a text file containing a transcript of the detected communication in a standard language. In an embodiment, the language converter 240 and the transcriber 230 work as part of a combined system to simultaneously perform language conversion and transcription.
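The dictionary search for corresponding words and phrases can be illustrated with a deliberately simplified sketch. It assumes a plain mapping from source-language phrases to English and a greedy longest-phrase-first lookup; the function name `convert_to_standard` and the sample entries are hypothetical, and the grammatical processing mentioned above is not modeled.

```python
def convert_to_standard(text, dictionary, max_phrase_len=3):
    """Replace known words and phrases with their standard-language entries.

    At each position the longest matching phrase (up to `max_phrase_len`
    words) is preferred; words with no entry are left unchanged.
    """
    words = text.lower().split()
    converted = []
    i = 0
    while i < len(words):
        longest = min(max_phrase_len, len(words) - i)
        for n in range(longest, 0, -1):
            phrase = " ".join(words[i:i + n])
            if phrase in dictionary:
                converted.append(dictionary[phrase])
                i += n
                break
        else:
            converted.append(words[i])  # no entry found: keep the word as-is
            i += 1
    return " ".join(converted)
```

For example, with the hypothetical entries `{"buenos dias": "good morning", "amigo": "friend"}`, the input "Buenos dias amigo" is converted to "good morning friend".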

The metadata extractor 250 receives the transcribed and/or converted communication from the language converter 240 and extracts metadata from the communication, according to an embodiment of the invention. The metadata summarizes basic information about the communication. For example, the basic information about the communication may include the names of the people communicating, the phone numbers or email accounts, and the date and time of the communication, among others. The metadata extractor 250 transmits and saves the metadata in the metadata database 282 and the text file containing the corresponding communication in the transcript database 284.
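The extraction and storage step can be sketched as follows. The sketch assumes that a communication arrives as a dictionary-like record and that the metadata database 282 and transcript database 284 can be modeled as in-memory mappings keyed by a communication identifier; the record field names, the `Metadata` type, and the function `extract_and_store` are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Metadata:
    communication_id: str
    participants: tuple    # names of the people communicating
    endpoints: tuple       # phone numbers or email accounts
    timestamp: datetime    # date and time of the communication


def extract_and_store(record, metadata_db, transcript_db):
    """Extract basic metadata from a record and save both parts.

    `metadata_db` and `transcript_db` are plain dicts standing in for the
    metadata database 282 and the transcript database 284.
    """
    metadata = Metadata(
        communication_id=record["id"],
        participants=tuple(record["participants"]),
        endpoints=tuple(record["endpoints"]),
        timestamp=datetime.fromisoformat(record["timestamp"]),
    )
    metadata_db[metadata.communication_id] = metadata
    transcript_db[metadata.communication_id] = record["transcript"]
    return metadata
```

Keying both stores by the same identifier allows a later analysis step to retrieve the transcript that corresponds to a given metadata entry.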

The detection and prevention analysis engine 260 receives the communication from the metadata extractor 250 and analyzes the communication for illegal or suspicious contents, according to an embodiment of the invention. The detection and prevention analysis engine 260 analyzes the communication and compares it to past communications to detect rule violations. The detection and prevention analysis engine 260 is described in detail below with reference to FIG. 3.