Playback device grouping

Lambourne, et al.

U.S. Patent No. 10,387,102 (Application No. 15/095,145) was granted by the U.S. Patent and Trademark Office on 2019-08-20 for "Playback device grouping." The patent is currently assigned to Sonos, Inc., which is also the listed grantee. The invention is credited to Robert A. Lambourne and Nicholas A. J. Millington.


| United States Patent | 10,387,102 |
|---|---|
| Lambourne, et al. | August 20, 2019 |


Abstract

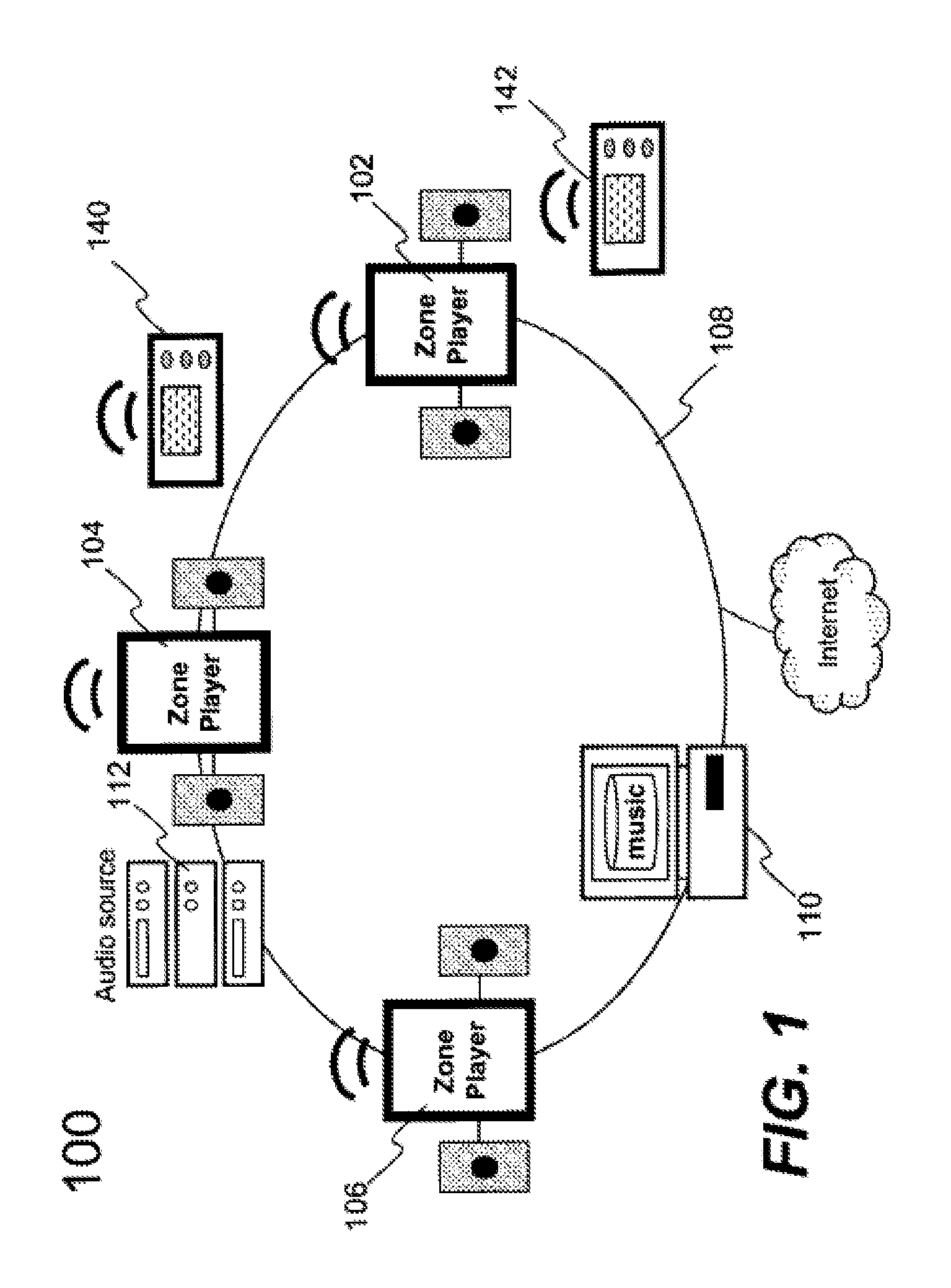

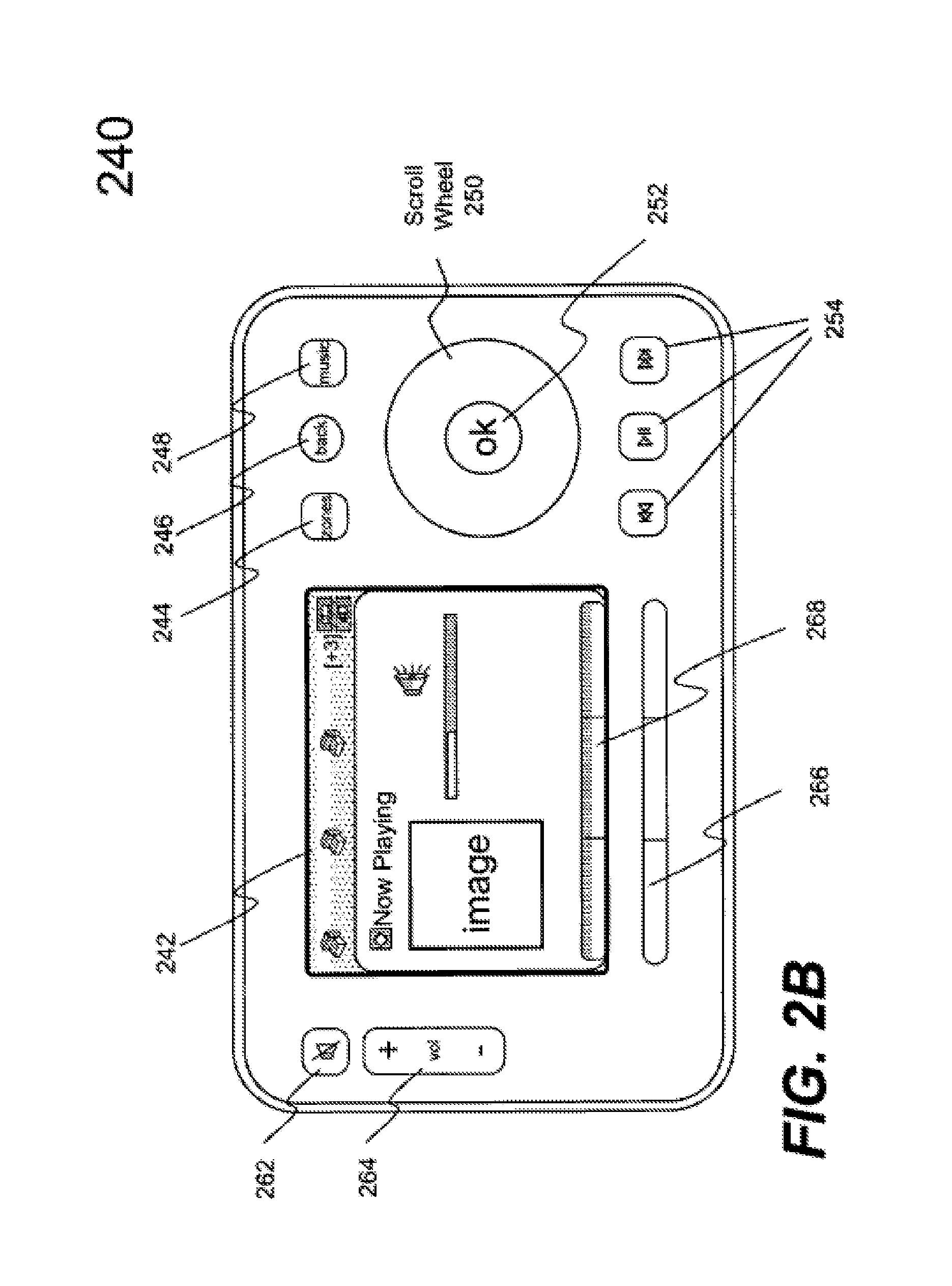

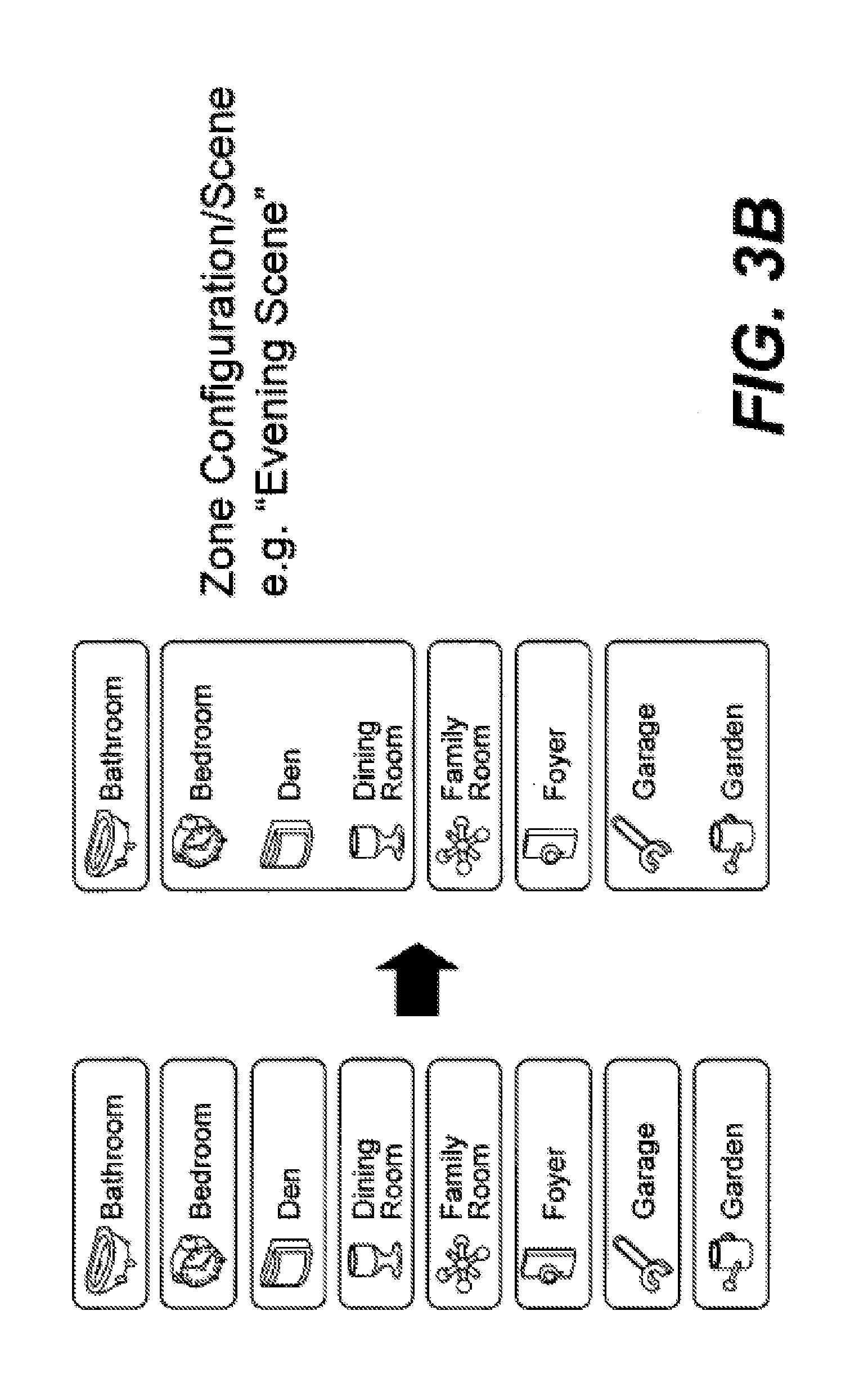

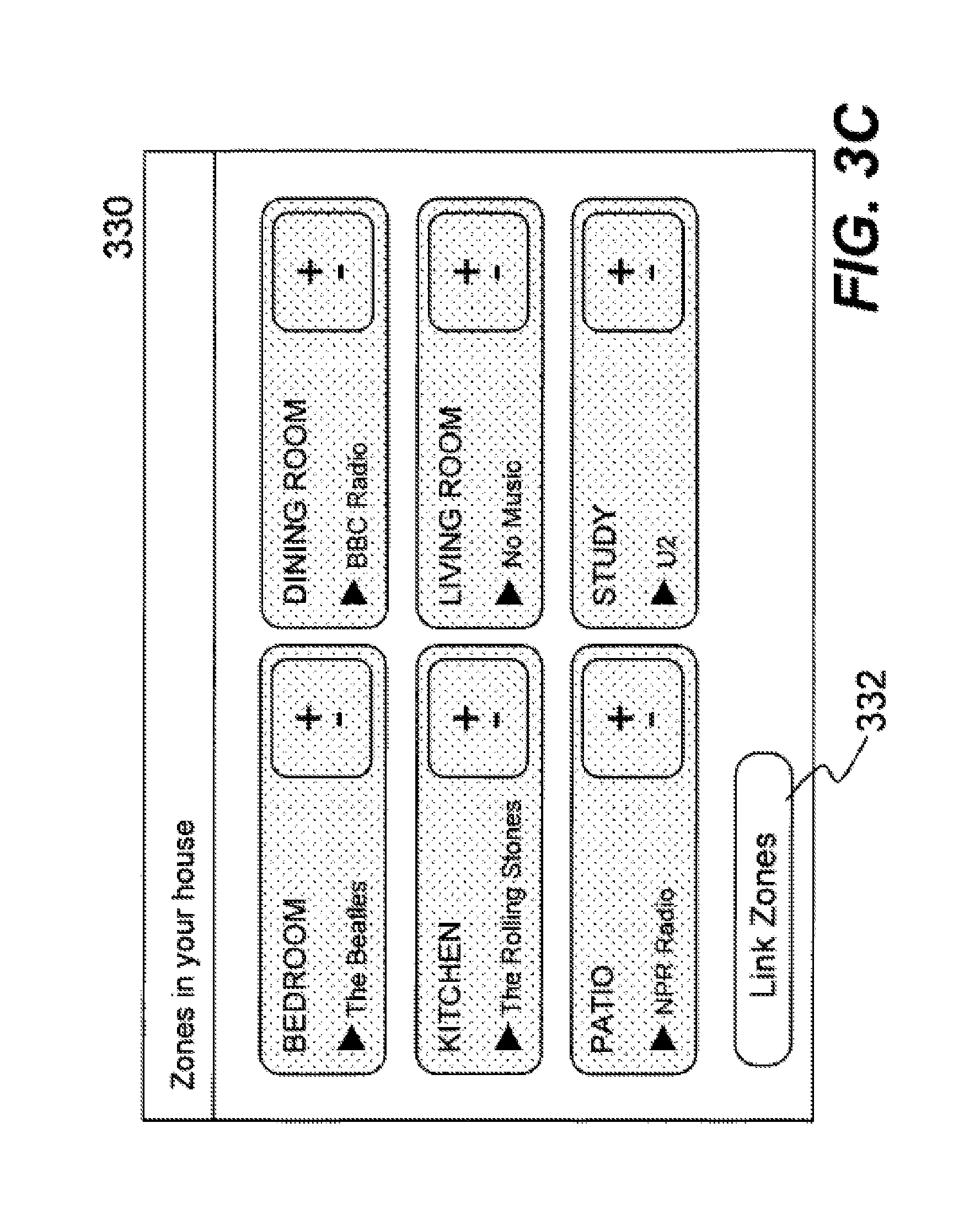

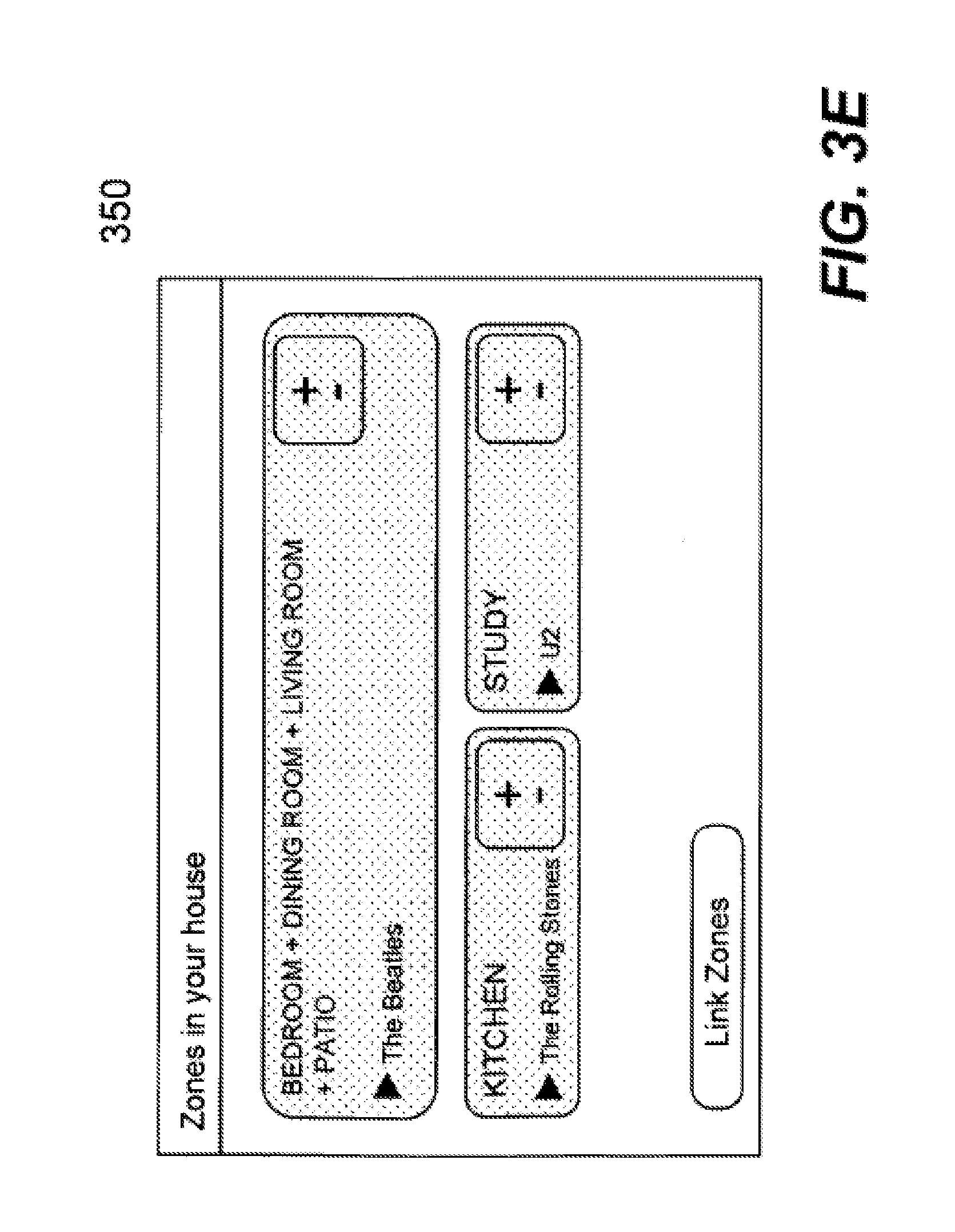

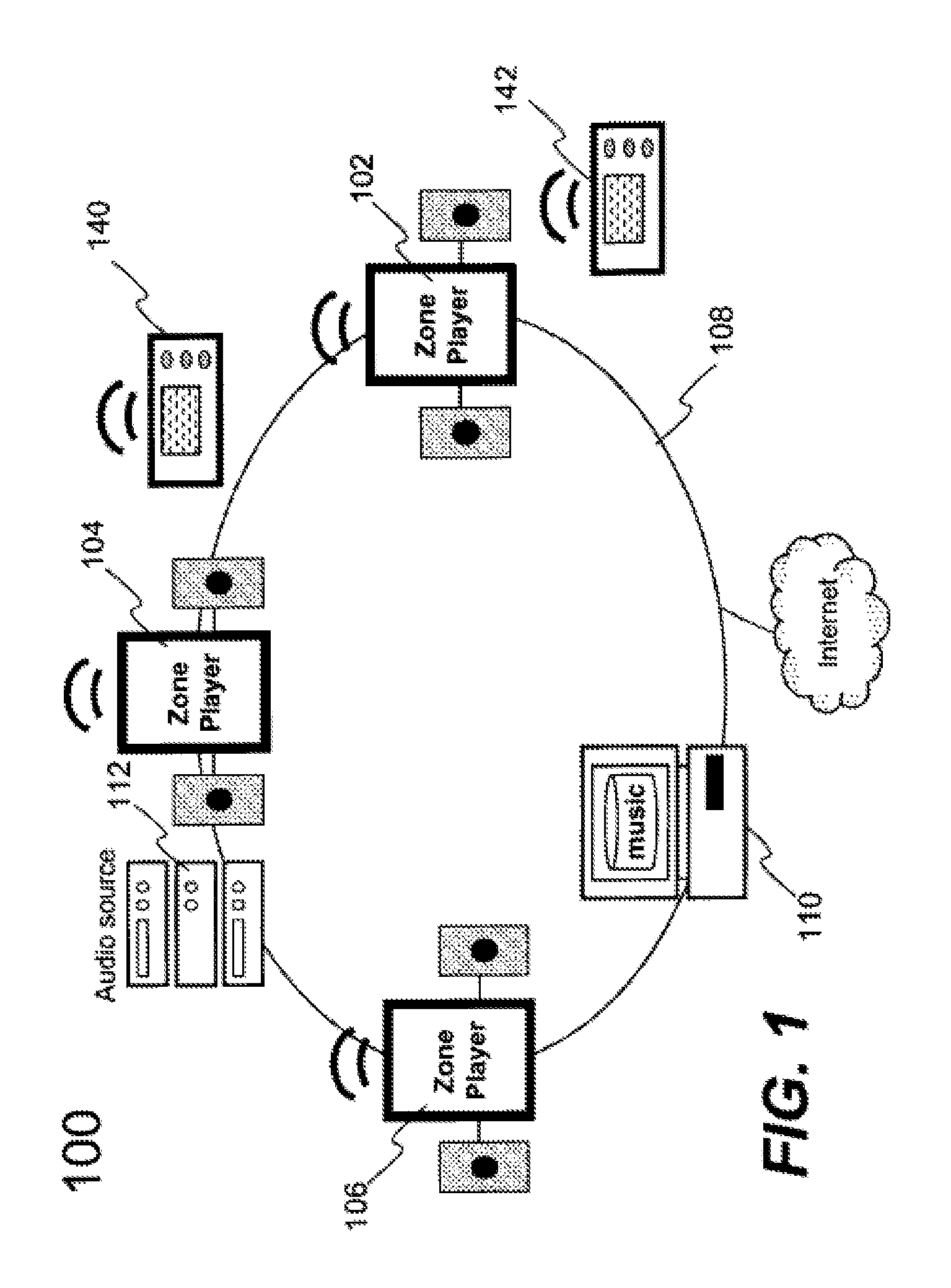

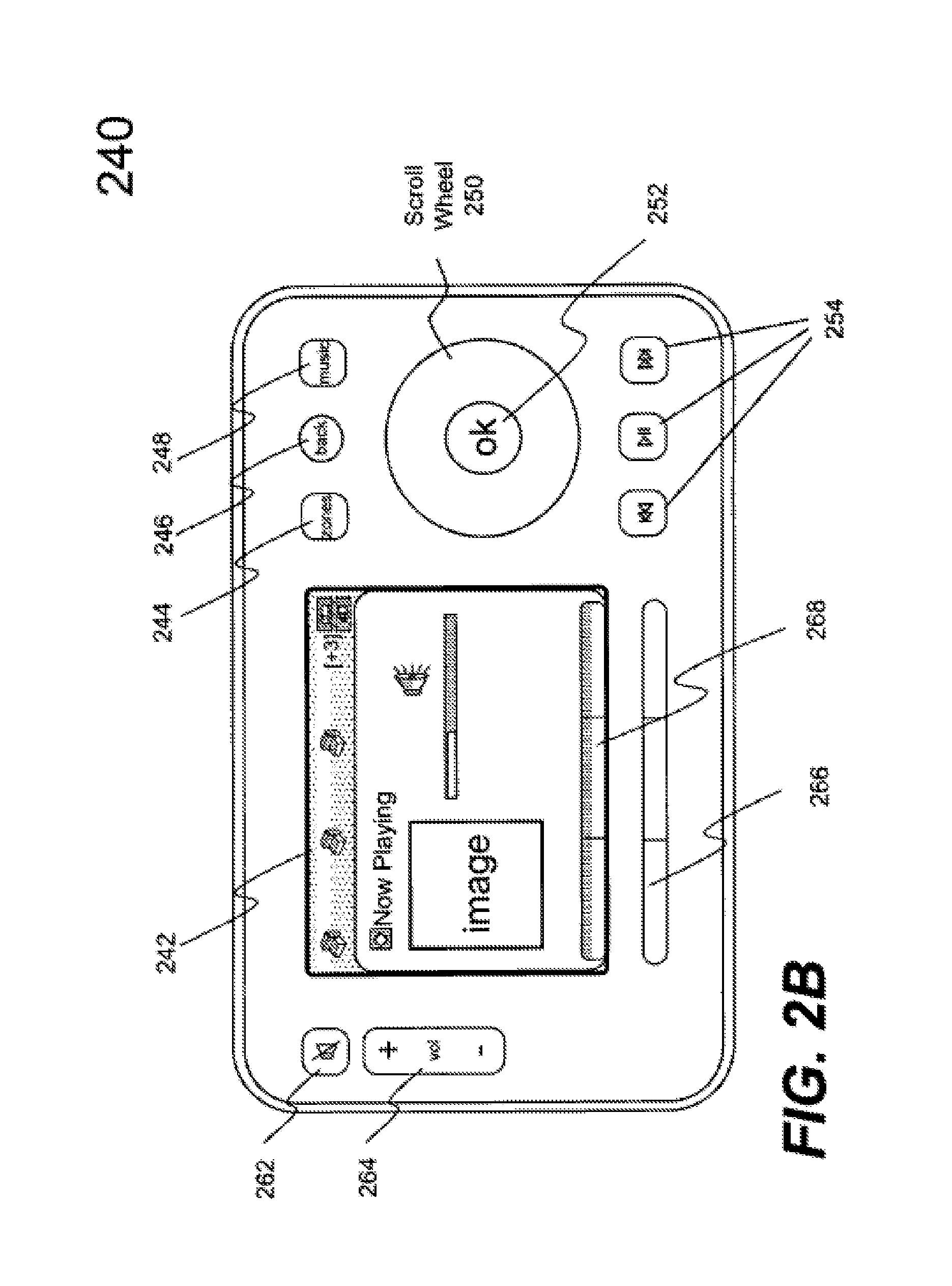

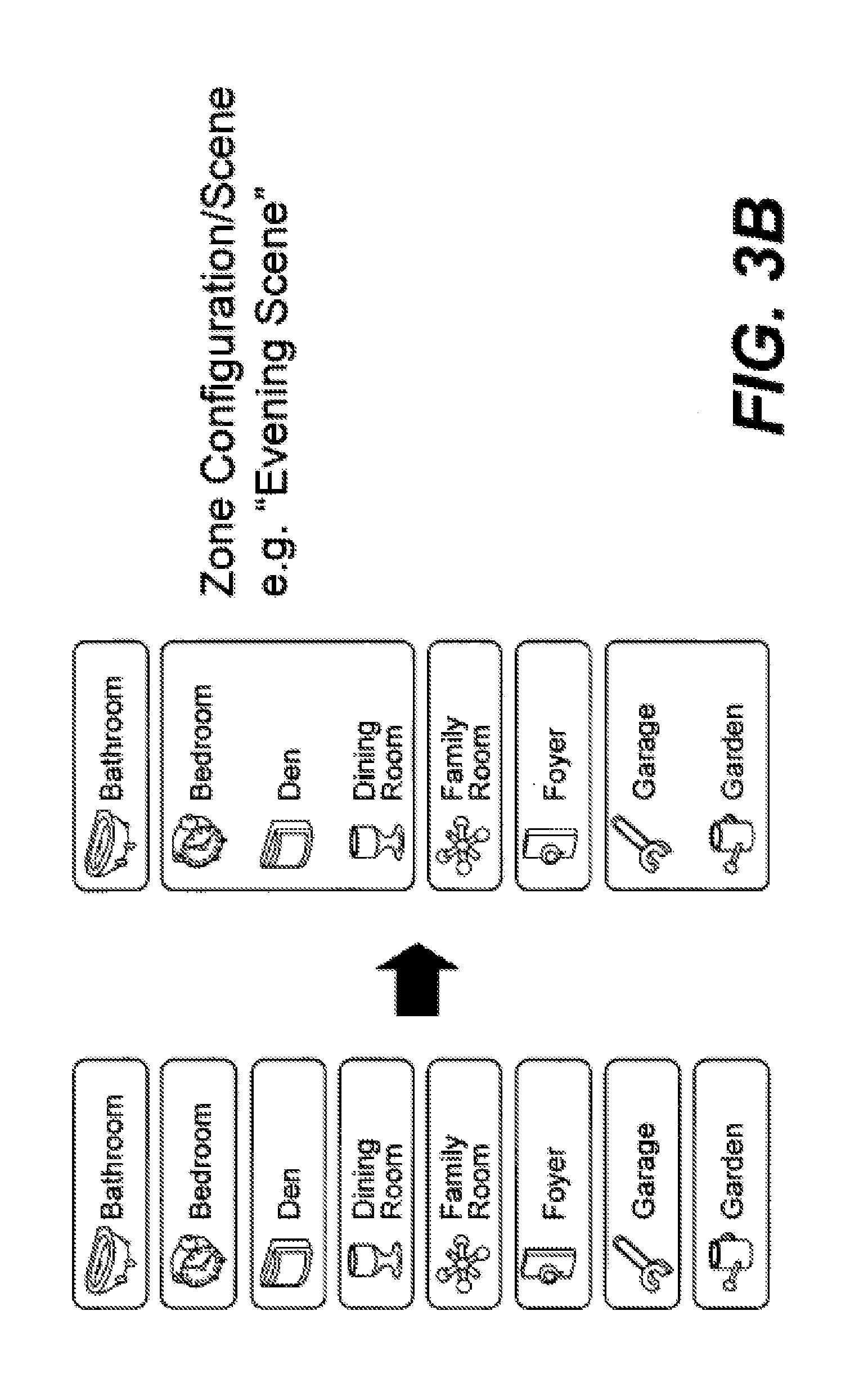

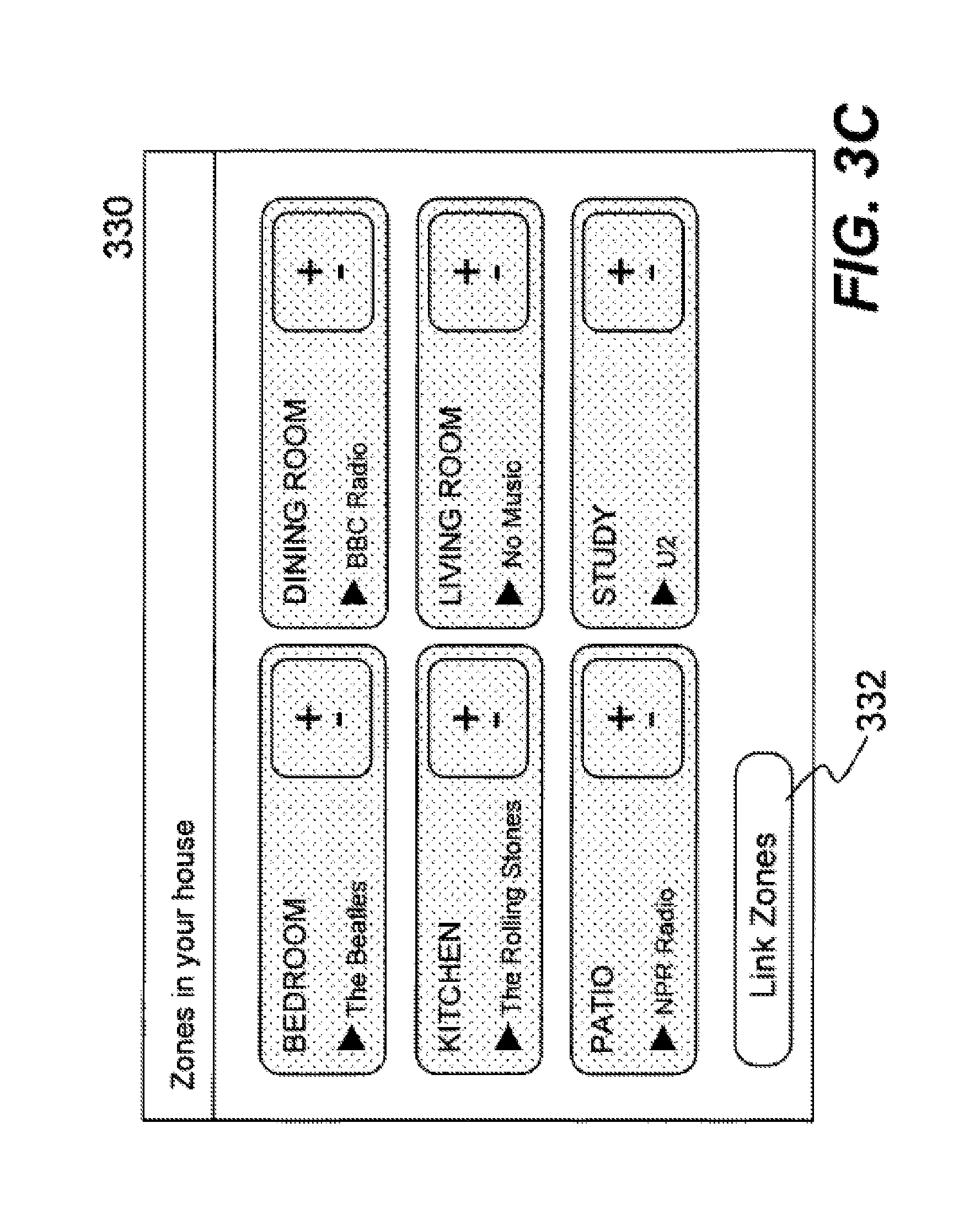

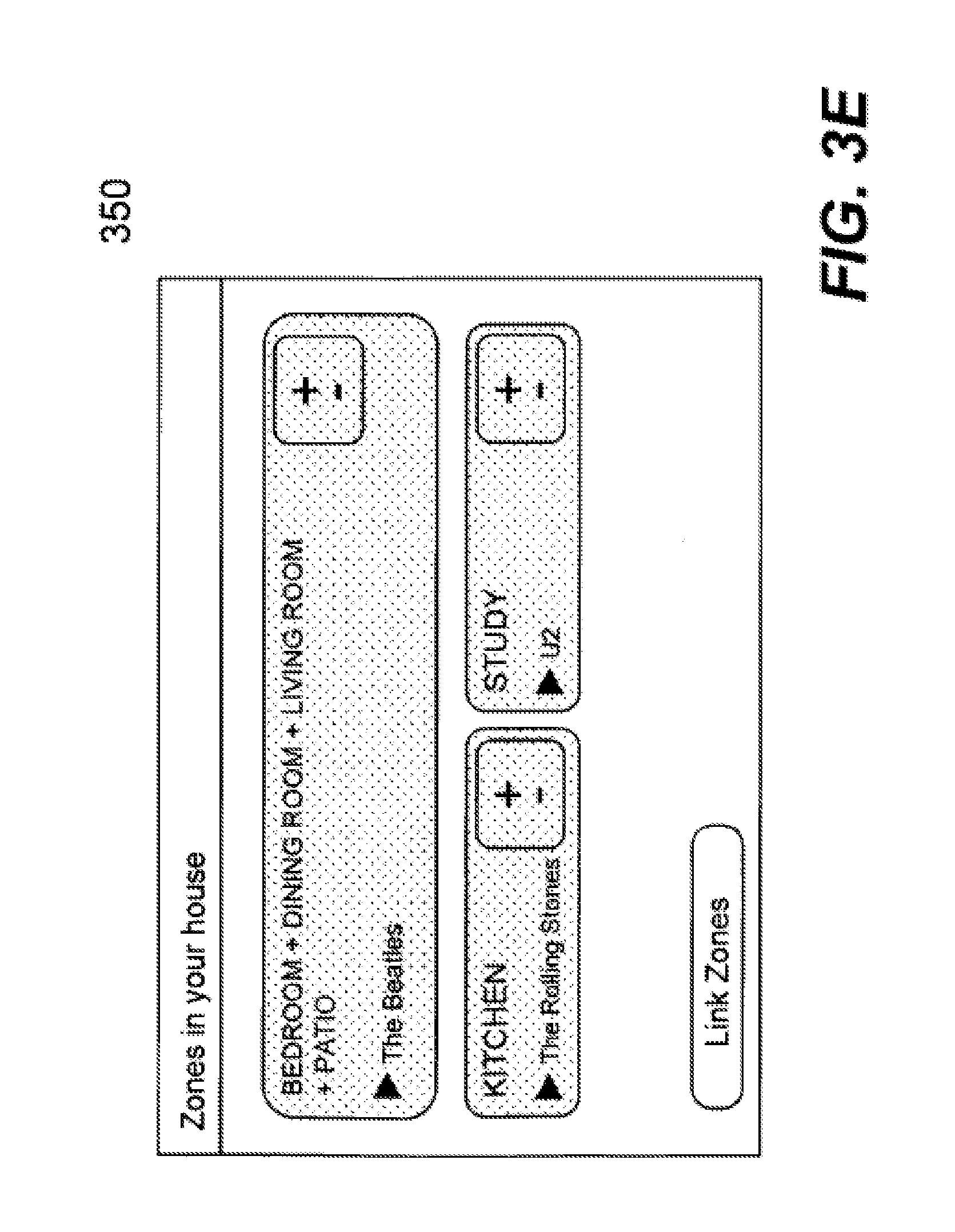

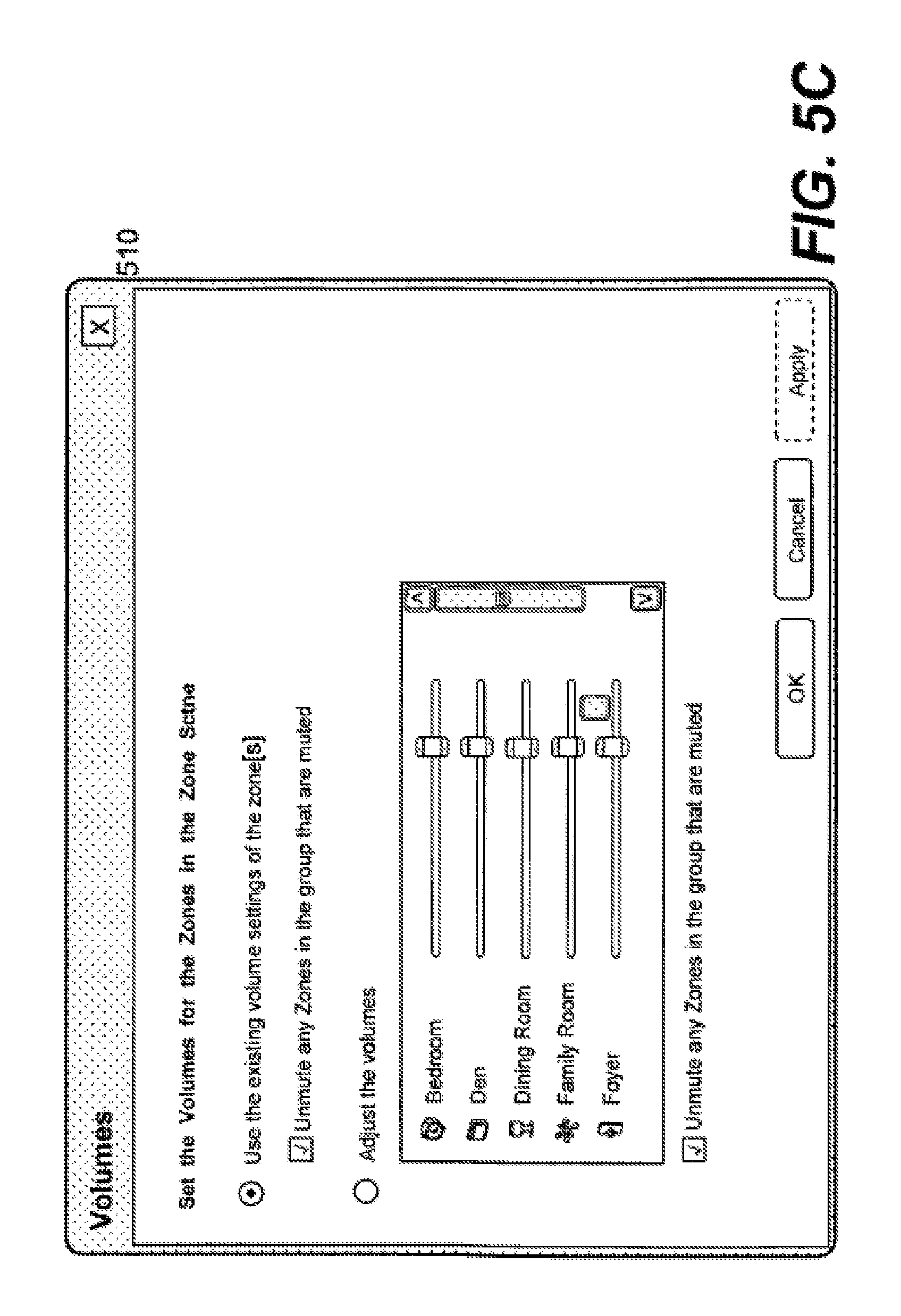

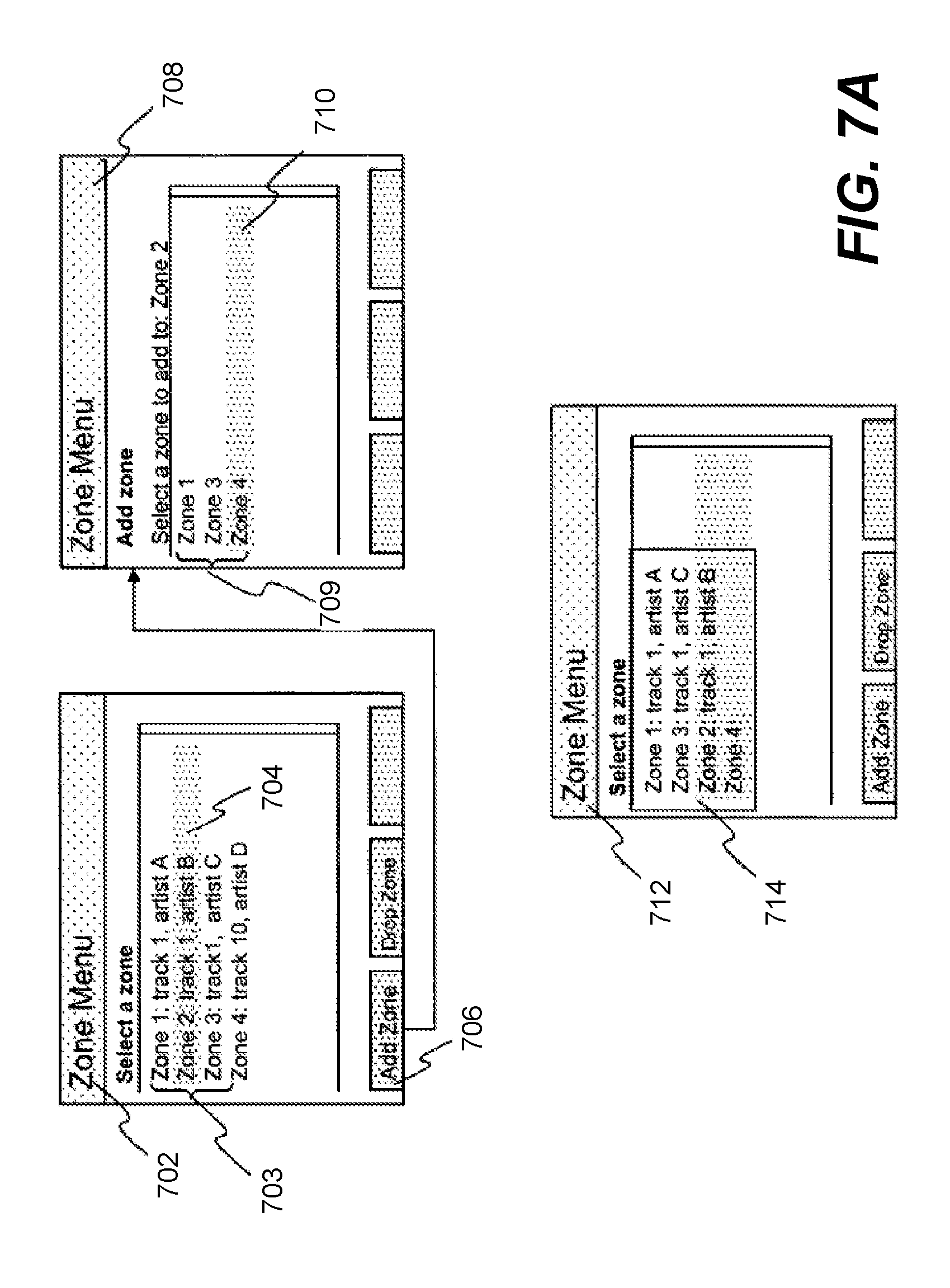

In general, user interfaces for controlling a plurality of multimedia players in groups are disclosed. According to one aspect of the present invention, a user interface allows a user to group some of the players according to a theme or scene, where each player is located in a zone. When the scene is activated, the players in the scene react in a synchronized manner. For example, the players in the scene are all caused to play a multimedia source or the music in a playlist, and the multimedia source may be located anywhere on a network. The user interface is further configured to graphically illustrate the size of a group: the larger a group appears relative to other groups, the more players it contains.
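The two mechanisms in the abstract, activating a scene so its member players act together and drawing larger groups larger, can be sketched roughly as follows. This is a hypothetical Python illustration, not the patented implementation: `Player`, `Scene`, `activate`, and `group_icon_size` are invented names, and linear scaling between 24 and 96 pixels is an assumed rule, since the abstract says only that a group with more players appears larger.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Player:
    """Hypothetical zone player; `zone` is the room or area it sits in."""
    name: str
    zone: str
    source: Optional[str] = None  # currently playing source, if any

    def play(self, source: str) -> None:
        self.source = source


@dataclass
class Scene:
    """Hypothetical theme or scene grouping several zone players."""
    name: str
    players: List[Player] = field(default_factory=list)

    def activate(self, source: str) -> None:
        # Point every member at the same source. Truly synchronized
        # playback would also need a shared timing reference, omitted here.
        for player in self.players:
            player.play(source)


def group_icon_size(group_size: int, largest_group: int,
                    min_px: int = 24, max_px: int = 96) -> int:
    """Return a display size that grows linearly with a group's player count.

    A one-player group gets `min_px`; the largest group gets `max_px`.
    (The linear rule and pixel bounds are illustrative assumptions.)
    """
    if not 1 <= group_size <= largest_group:
        raise ValueError("group_size must be between 1 and largest_group")
    if largest_group == 1:
        return min_px
    span = max_px - min_px
    return min_px + span * (group_size - 1) // (largest_group - 1)
```

For instance, if the largest group has four players, `group_icon_size(2, 4)` yields 48 pixels and `group_icon_size(4, 4)` yields 96, so relative on-screen size tracks membership count. A logarithmic or area-based rule would serve equally well; the abstract does not specify one.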

| Inventors: | Lambourne; Robert A. (Santa Barbara, CA), Millington; Nicholas A. J. (Santa Barbara, CA) |
|---|---|
| Assignee: | Sonos, Inc. (Santa Barbara, CA) |
| Family ID: | 46981809 |
| Appl. No.: | 15/095,145 |
| Filed: | April 10, 2016 |

Prior Publication Data

| Document Identifier | Publication Date |
|---|---|
| US 20160241983 A1 | Aug 18, 2016 |

Related U.S. Patent Documents

| Application Number | Filing Date | Patent Number | Issue Date |
|---|---|---|---|
| 14808875 | Jul 24, 2015 | | |
| 13907666 | | 9141645 | Sep 22, 2015 |
| 13619237 | | 8588949 | Nov 19, 2013 |
| 12035112 | | 8290603 | Oct 16, 2012 |
| 10861653 | | 7571014 | Aug 4, 2009 |
| 10816217 | | 8234395 | Jul 31, 2012 |
| 60490768 | Jul 28, 2003 | | |

| Current U.S. Class: | 1/1 |
|---|---|
| Current CPC Class: | G11B 27/00 (20130101); G06F 3/0482 (20130101); H04S 7/30 (20130101); G06F 3/162 (20130101); G06F 3/04842 (20130101); G05B 15/02 (20130101); G06F 3/04855 (20130101); G06F 16/27 (20190101); G06F 3/165 (20130101); H04R 27/00 (20130101); G06F 3/04847 (20130101); H04S 7/00 (20130101); H04R 29/008 (20130101); H04R 2227/003 (20130101); H04R 2227/005 (20130101) |
| Current International Class: | G06F 17/00 (20190101); G06F 16/27 (20190101); G06F 3/0484 (20130101); G06F 3/0482 (20130101); G06F 3/16 (20060101); G06F 3/0485 (20130101); G11B 27/00 (20060101); H04R 27/00 (20060101); H04S 7/00 (20060101); G05B 15/02 (20060101); H04R 29/00 (20060101) |
| Field of Search: | 700/94; 381/119 |

References Cited

U.S. Patent Documents

| 3956591 | May 1976 | Gates, Jr. |

| 4105974 | August 1978 | Rogers |

| D260764 | September 1981 | Castagna et al. |

| 4296278 | October 1981 | Cullison et al. |

| 4306114 | December 1981 | Callahan |

| 4509211 | April 1985 | Robbins |

| D279779 | July 1985 | Taylor |

| 4530091 | July 1985 | Crockett |

| 4696037 | September 1987 | Fierens |

| 4701629 | October 1987 | Citroen |

| 4712105 | December 1987 | Koehler |

| D293671 | January 1988 | Beaumont |

| 4731814 | March 1988 | Becker et al. |

| 4816989 | March 1989 | Finn et al. |

| 4824059 | April 1989 | Butler |

| D301037 | May 1989 | Matsuda |

| 4845751 | July 1989 | Schwab |

| D304443 | November 1989 | Grinyer et al. |

| D313023 | December 1990 | Kolenda et al. |

| D313398 | January 1991 | Gilchrist |

| D313600 | January 1991 | Weber |

| 4994908 | February 1991 | Kuban et al. |

| D320598 | October 1991 | Auerbach et al. |

| D322609 | December 1991 | Patton |

| 5086385 | February 1992 | Launey et al. |

| D326450 | May 1992 | Watanabe |

| D327060 | June 1992 | Wachob et al. |

| 5151922 | September 1992 | Weiss |

| 5153579 | October 1992 | Fisch et al. |

| D331388 | December 1992 | Dahnert et al. |

| 5182552 | January 1993 | Paynting |

| D333135 | February 1993 | Wachob et al. |

| 5185680 | February 1993 | Kakubo |

| 5198603 | March 1993 | Nishikawa et al. |

| 5237327 | August 1993 | Saitoh et al. |

| 5239458 | August 1993 | Suzuki |

| 5272757 | December 1993 | Scofield et al. |

| 5299266 | March 1994 | Lumsden |

| D350531 | September 1994 | Tsuji |

| D350962 | September 1994 | Reardon et al. |

| 5361381 | November 1994 | Short |

| 5372441 | December 1994 | Louis |

| D354059 | January 1995 | Hendricks |

| D354751 | January 1995 | Hersh et al. |

| D356093 | March 1995 | McCauley et al. |

| D356312 | March 1995 | Althans |

| D357024 | April 1995 | Tokiyama et al. |

| 5406634 | April 1995 | Anderson et al. |

| 5430485 | July 1995 | Lankford et al. |

| 5440644 | August 1995 | Farinelli et al. |

| D362446 | September 1995 | Gasiorek et al. |

| 5457448 | October 1995 | Totsuka et al. |

| D363933 | November 1995 | Starck |

| 5467342 | November 1995 | Logston et al. |

| D364877 | December 1995 | Tokiyama et al. |

| D364878 | December 1995 | Green et al. |

| D365102 | December 1995 | Gioscia |

| D366044 | January 1996 | Hara et al. |

| 5481251 | January 1996 | Buys et al. |

| 5491839 | February 1996 | Schotz |

| 5515345 | May 1996 | Barreira et al. |

| 5533021 | July 1996 | Branstad et al. |

| D372716 | August 1996 | Thorne |

| 5553147 | September 1996 | Pineau |

| 5553222 | September 1996 | Milne et al. |

| 5553314 | September 1996 | Grube et al. |

| D377651 | January 1997 | Biasotti et al. |

| 5596696 | January 1997 | Tindell et al. |

| 5602992 | February 1997 | Danneels |

| 5623483 | April 1997 | Agrawal et al. |

| 5625350 | April 1997 | Fukatsu et al. |

| 5633871 | May 1997 | Bloks |

| D379816 | June 1997 | Laituri et al. |

| 5636345 | June 1997 | Valdevit |

| 5640388 | June 1997 | Woodhead et al. |

| D380752 | July 1997 | Hanson |

| 5652749 | July 1997 | Davenport et al. |

| D382271 | August 1997 | Akwiwu |

| 5661665 | August 1997 | Glass et al. |

| 5661728 | August 1997 | Finotello et al. |

| 5668884 | September 1997 | Clair, Jr. et al. |

| 5673323 | September 1997 | Schotz et al. |

| D384940 | October 1997 | Kono et al. |

| D387352 | December 1997 | Kaneko et al. |

| 5696896 | December 1997 | Badovinatz et al. |

| D388792 | January 1998 | Nykerk |

| D389143 | January 1998 | Wicks |

| D392641 | March 1998 | Fenner |

| 5726989 | March 1998 | Dokic |

| 5732059 | March 1998 | Katsuyama et al. |

| D393628 | April 1998 | Ledbetter et al. |

| 5740235 | April 1998 | Lester et al. |

| 5742623 | April 1998 | Nuber et al. |

| D394659 | May 1998 | Biasotti et al. |

| 5751819 | May 1998 | Dorrough |

| 5761320 | June 1998 | Farinelli et al. |

| 5774016 | June 1998 | Ketterer |

| D395889 | July 1998 | Gerba et al. |

| 5787249 | July 1998 | Badovinatz et al. |

| 5790543 | August 1998 | Cloutier |

| D397996 | September 1998 | Smith |

| 5808662 | September 1998 | Kinney et al. |

| 5812201 | September 1998 | Yoo |

| 5815689 | September 1998 | Shaw et al. |

| 5818948 | October 1998 | Gulick |

| D401587 | November 1998 | Rudolph |

| 5832024 | November 1998 | Schotz et al. |

| 5838909 | November 1998 | Roy et al. |

| 5848152 | December 1998 | Slipy et al. |

| 5852722 | December 1998 | Hamilton |

| D404741 | January 1999 | Schumaker et al. |

| D405071 | February 1999 | Gambaro |

| 5867691 | February 1999 | Shiraishi |

| 5875233 | February 1999 | Cox |

| 5875354 | February 1999 | Charlton et al. |

| D406847 | March 1999 | Gerba et al. |

| D407071 | March 1999 | Keating |

| 5887143 | March 1999 | Saito et al. |

| 5905768 | May 1999 | Maturi et al. |

| D410927 | June 1999 | Yamagishi |

| 5917830 | June 1999 | Chen et al. |

| D412337 | July 1999 | Hamano |

| 5923869 | July 1999 | Kashiwagi et al. |

| 5923902 | July 1999 | Inagaki |

| 5946343 | August 1999 | Schotz et al. |

| 5956025 | September 1999 | Goulden et al. |

| 5956088 | September 1999 | Shen et al. |

| 5960006 | September 1999 | Maturi et al. |

| D415496 | October 1999 | Gerba et al. |

| D416021 | November 1999 | Godette et al. |

| 5984512 | November 1999 | Jones et al. |

| 5987525 | November 1999 | Roberts et al. |

| 5987611 | November 1999 | Freund |

| 5990884 | November 1999 | Douma et al. |

| 5991307 | November 1999 | Komuro et al. |

| 5999906 | December 1999 | Mercs et al. |

| 6009457 | December 1999 | Moller |

| 6018376 | January 2000 | Nakatani |

| D420006 | February 2000 | Tonino |

| 6026150 | February 2000 | Frank et al. |

| 6029196 | February 2000 | Lenz |

| 6031818 | February 2000 | Lo et al. |

| 6032202 | February 2000 | Lea et al. |

| 6038614 | March 2000 | Chan et al. |

| 6046550 | April 2000 | Ference et al. |

| 6061457 | May 2000 | Stockhamer |

| 6078725 | June 2000 | Tanaka |

| 6081266 | June 2000 | Sciammarella |

| 6088063 | July 2000 | Shiba |

| D429246 | August 2000 | Holma |

| D430143 | August 2000 | Renk |

| 6101195 | August 2000 | Lyons et al. |

| 6108485 | August 2000 | Kim |

| 6108686 | August 2000 | Williams, Jr. |

| 6122668 | September 2000 | Teng et al. |

| D431552 | October 2000 | Backs et al. |

| D432525 | October 2000 | Beecroft |

| 6127941 | October 2000 | Van Ryzin |

| 6128318 | October 2000 | Sato |

| 6148205 | November 2000 | Cotton |

| 6154772 | November 2000 | Dunn et al. |

| 6157957 | December 2000 | Berthaud |

| 6163647 | December 2000 | Terashima et al. |

| 6169725 | January 2001 | Gibbs et al. |

| 6175872 | January 2001 | Neumann et al. |

| 6181383 | January 2001 | Fox et al. |

| 6185737 | February 2001 | Northcutt et al. |

| 6195435 | February 2001 | Kitamura |

| 6195436 | February 2001 | Scibora et al. |

| 6199169 | March 2001 | Voth |

| 6212282 | April 2001 | Mershon |

| 6246701 | June 2001 | Slattery |

| 6253293 | June 2001 | Rao et al. |

| D444475 | July 2001 | Levey et al. |

| 6255961 | July 2001 | Van Ryzin et al. |

| 6256554 | July 2001 | Dilorenzo |

| 6269406 | July 2001 | Dutcher et al. |

| 6301012 | October 2001 | White et al. |

| 6308207 | October 2001 | Tseng et al. |

| 6310652 | October 2001 | Li et al. |

| 6313879 | November 2001 | Kubo et al. |

| 6321252 | November 2001 | Bhola et al. |

| 6324586 | November 2001 | Johnson |

| D452520 | December 2001 | Gotham et al. |

| 6332147 | December 2001 | Moran et al. |

| 6343028 | January 2002 | Kuwaoka |

| 6349285 | February 2002 | Liu et al. |

| 6349339 | February 2002 | Williams |

| 6349352 | February 2002 | Lea |

| 6351821 | February 2002 | Voth |

| 6353172 | March 2002 | Fay et al. |

| 6356871 | March 2002 | Hemkumar et al. |

| 6404811 | June 2002 | Cvetko et al. |

| 6418150 | July 2002 | Staats |

| 6430353 | August 2002 | Honda et al. |

| 6442443 | August 2002 | Fujii et al. |

| D462339 | September 2002 | Allen et al. |

| D462340 | September 2002 | Allen et al. |

| D462945 | September 2002 | Skulley |

| 6446080 | September 2002 | Van Ryzin et al. |

| 6449642 | September 2002 | Bourke-Dunphy et al. |

| 6449653 | September 2002 | Klemets et al. |

| 6456783 | September 2002 | Ando et al. |

| 6463474 | October 2002 | Fuh et al. |

| 6466832 | October 2002 | Zuqert et al. |

| 6469633 | October 2002 | Wachter |

| D466108 | November 2002 | Glodava et al. |

| 6487296 | November 2002 | Allen et al. |

| 6493832 | December 2002 | Itakura et al. |

| D468297 | January 2003 | Ikeda |

| 6522886 | February 2003 | Youngs et al. |

| 6526325 | February 2003 | Sussman et al. |

| 6526411 | February 2003 | Ward |

| 6535121 | March 2003 | Mathney et al. |

| D474763 | May 2003 | Tozaki et al. |

| D475993 | June 2003 | Meyer |

| D476643 | July 2003 | Yamagishi |

| D477310 | July 2003 | Moransais |

| 6587127 | July 2003 | Leeke et al. |

| 6598172 | July 2003 | Vandeusen et al. |

| D478051 | August 2003 | Sagawa |

| D478069 | August 2003 | Beck et al. |

| D478896 | August 2003 | Summers |

| 6611537 | August 2003 | Edens et al. |

| 6611813 | August 2003 | Bratton |

| D479520 | September 2003 | De Saulles |

| D481056 | October 2003 | Kawasaki et al. |

| 6631410 | October 2003 | Kowalski et al. |

| 6636269 | October 2003 | Baldwin |

| 6639584 | October 2003 | Li |

| 6653899 | November 2003 | Organvidez et al. |

| 6654720 | November 2003 | Graham et al. |

| 6654956 | November 2003 | Trinh et al. |

| 6658091 | December 2003 | Naidoo et al. |

| 6674803 | January 2004 | Kesselring |

| 6684060 | January 2004 | Curtin |

| D486145 | February 2004 | Kaminski et al. |

| 6687664 | February 2004 | Sussman et al. |

| 6704421 | March 2004 | Kitamura |

| 6741961 | May 2004 | Lim |

| D491925 | June 2004 | Griesau et al. |

| 6757517 | June 2004 | Chang |

| D493148 | July 2004 | Shibata et al. |

| 6763274 | July 2004 | Gilbert |

| D495333 | August 2004 | Borsboom |

| 6778073 | August 2004 | Lutter et al. |

| 6778493 | August 2004 | Ishii |

| 6778869 | August 2004 | Champion |

| D496003 | September 2004 | Spira |

| D496005 | September 2004 | Wang |

| D496335 | September 2004 | Spira |

| 6795852 | September 2004 | Kleinrock et al. |

| D497363 | October 2004 | Olson et al. |

| 6803964 | October 2004 | Post et al. |

| 6809635 | October 2004 | Kaaresoja |

| D499086 | November 2004 | Polito |

| 6816104 | November 2004 | Lin |

| 6816510 | November 2004 | Banerjee |

| 6816818 | November 2004 | Wolf et al. |

| 6823225 | November 2004 | Sass |

| 6826283 | November 2004 | Wheeler et al. |

| D499395 | December 2004 | Hsu |

| D499718 | December 2004 | Chen |

| D500015 | December 2004 | Gubbe |

| 6836788 | December 2004 | Kim et al. |

| 6839752 | January 2005 | Miller et al. |

| D501477 | February 2005 | Hall |

| 6859460 | February 2005 | Chen |

| 6859538 | February 2005 | Voltz |

| 6873862 | March 2005 | Reshefsky |

| 6882335 | April 2005 | Saarinen |

| D504872 | May 2005 | Uehara et al. |

| D504885 | May 2005 | Zhang et al. |

| 6898642 | May 2005 | Chafle et al. |

| 6901439 | May 2005 | Bonasia et al. |

| D506463 | June 2005 | Daniels |

| 6907458 | June 2005 | Tomassetti et al. |

| 6910078 | June 2005 | Raman et al. |

| 6912610 | June 2005 | Spencer |

| 6915347 | July 2005 | Hanko et al. |

| 6917592 | July 2005 | Ramankutty et al. |

| 6919771 | July 2005 | Nakajima |

| 6920373 | July 2005 | Xi et al. |

| 6931557 | August 2005 | Togawa |

| 6934766 | August 2005 | Russell |

| 6937988 | August 2005 | Hemkumar et al. |

| 6970482 | November 2005 | Kim |

| 6985694 | January 2006 | De Bonet et al. |

| 6987767 | January 2006 | Saito |

| D515072 | February 2006 | Lee |

| D515557 | February 2006 | Okuley |

| 7006758 | February 2006 | Yamamoto et al. |

| 7007106 | February 2006 | Flood et al. |

| 7020791 | March 2006 | Aweya et al. |

| D518475 | April 2006 | Yang et al. |

| 7043477 | May 2006 | Mercer et al. |

| 7043651 | May 2006 | Aweya et al. |

| 7046677 | May 2006 | Monta et al. |

| 7047308 | May 2006 | Deshpande |

| 7054888 | May 2006 | Lachapelle et al. |

| 7058889 | June 2006 | Trovato et al. |

| 7068596 | June 2006 | Mou |

| D524296 | July 2006 | Kita |

| D527375 | August 2006 | Flora et al. |

| 7092528 | August 2006 | Patrick et al. |

| 7092694 | August 2006 | Griep et al. |

| 7096169 | August 2006 | Crutchfield et al. |

| 7102513 | September 2006 | Taskin et al. |

| 7106224 | September 2006 | Knapp et al. |

| 7113999 | September 2006 | Pestoni et al. |

| 7115017 | October 2006 | Laursen et al. |

| 7120168 | October 2006 | Zimmermann |

| 7130316 | October 2006 | Kovacevic |

| 7130368 | October 2006 | Aweya et al. |

| 7130608 | October 2006 | Hollstrom et al. |

| 7130616 | October 2006 | Janik |

| 7136934 | November 2006 | Carter et al. |

| 7139981 | November 2006 | Mayer et al. |

| 7143141 | November 2006 | Morgan et al. |

| 7143939 | December 2006 | Henzerling |

| 7146260 | December 2006 | Preston et al. |

| 7158488 | January 2007 | Fujimori |

| 7161939 | January 2007 | Israel et al. |

| 7162315 | January 2007 | Gilbert |

| 7164694 | January 2007 | Nodoushani et al. |

| 7167765 | January 2007 | Janik |

| 7185090 | February 2007 | Kowalski et al. |

| 7187947 | March 2007 | White et al. |

| 7188353 | March 2007 | Crinon |

| 7197148 | March 2007 | Nourse et al. |

| 7206367 | April 2007 | Moore |

| 7206618 | April 2007 | Latto et al. |

| 7206967 | April 2007 | Marti et al. |

| 7209795 | April 2007 | Sullivan et al. |

| 7218708 | May 2007 | Berezowski et al. |

| 7218930 | May 2007 | Ko et al. |

| 7236739 | June 2007 | Chang |

| 7236773 | June 2007 | Thomas |

| 7251533 | July 2007 | Yoon et al. |

| 7257398 | August 2007 | Ukita et al. |

| 7260616 | August 2007 | Cook |

| 7263070 | August 2007 | Delker et al. |

| 7263110 | August 2007 | Fujishiro |

| 7277547 | October 2007 | Delker et al. |

| 7286652 | October 2007 | Azriel et al. |

| 7289631 | October 2007 | Ishidoshiro |

| 7293060 | November 2007 | Komsi |

| 7295548 | November 2007 | Blank et al. |

| 7305694 | December 2007 | Commons et al. |

| 7308188 | December 2007 | Namatame |

| 7310334 | December 2007 | Fitzgerald et al. |

| 7312785 | December 2007 | Tsuk et al. |

| 7313593 | December 2007 | Pulito et al. |

| 7319764 | January 2008 | Reid et al. |

| 7324857 | January 2008 | Goddard |

| 7330875 | February 2008 | Parasnis et al. |

| 7333519 | February 2008 | Sullivan et al. |

| 7356011 | April 2008 | Waters et al. |

| 7359006 | April 2008 | Xiang et al. |

| 7366206 | April 2008 | Lockridge et al. |

| 7372846 | May 2008 | Zwack |

| 7383036 | June 2008 | Kang et al. |

| 7391791 | June 2008 | Balassanian et al. |

| 7392102 | June 2008 | Sullivan et al. |

| 7392481 | June 2008 | Gewickey et al. |

| 7394480 | July 2008 | Song |

| 7400644 | July 2008 | Sakamoto et al. |

| 7412499 | August 2008 | Chang et al. |

| 7428310 | September 2008 | Park |

| 7430181 | September 2008 | Hong |

| 7433324 | October 2008 | Switzer et al. |

| 7434166 | October 2008 | Acharya et al. |

| 7457948 | November 2008 | Bilicksa et al. |

| 7469139 | December 2008 | Van De Groenendaal |

| 7472058 | December 2008 | Tseng et al. |

| 7474677 | January 2009 | Trott |

| 7483538 | January 2009 | McCarty et al. |

| 7483540 | January 2009 | Rabinowitz et al. |

| 7483958 | January 2009 | Elabbady et al. |

| 7492912 | February 2009 | Chung et al. |

| 7505889 | March 2009 | Salmonsen et al. |

| 7509181 | March 2009 | Champion |

| 7519667 | April 2009 | Capps |

| 7548744 | June 2009 | Oesterling et al. |

| 7548851 | June 2009 | Lau et al. |

| 7558224 | July 2009 | Surazski et al. |

| 7558635 | July 2009 | Thiel et al. |

| 7571014 | August 2009 | Lambourne et al. |

| 7574274 | August 2009 | Holmes |

| 7599685 | October 2009 | Goldberg et al. |

| 7606174 | October 2009 | Ochi et al. |

| 7607091 | October 2009 | Song et al. |

| 7627825 | December 2009 | Kakuda |

| 7630501 | December 2009 | Blank et al. |

| 7631119 | December 2009 | Moore et al. |

| 7643894 | January 2010 | Braithwaite et al. |

| 7653344 | January 2010 | Feldman et al. |

| 7657224 | February 2010 | Goldberg et al. |

| 7657644 | February 2010 | Zheng |

| 7657910 | February 2010 | McAulay et al. |

| 7665115 | February 2010 | Gallo et al. |

| 7668990 | February 2010 | Krzyzanowski et al. |

| 7669113 | February 2010 | Moore et al. |

| 7669219 | February 2010 | Scott, III |

| 7672470 | March 2010 | Lee |

| 7675943 | March 2010 | Mosig et al. |

| 7676044 | March 2010 | Sasaki et al. |

| 7676142 | March 2010 | Hung |

| 7688306 | March 2010 | Wehrenberg et al. |

| 7689304 | March 2010 | Sasaki |

| 7689305 | March 2010 | Kreifeldt et al. |

| 7702279 | April 2010 | Ko et al. |

| 7702403 | April 2010 | Gladwin et al. |

| 7710941 | May 2010 | Rietschel et al. |

| 7711774 | May 2010 | Rothschild |

| 7720096 | May 2010 | Klemets |

| 7721032 | May 2010 | Bushell et al. |

| 7742740 | June 2010 | Goldberg et al. |

| 7743009 | June 2010 | Hangartner et al. |

| 7746906 | June 2010 | Jinzaki et al. |

| 7756743 | July 2010 | Lapcevic |

| 7761176 | July 2010 | Ben-Yaacov et al. |

| 7765315 | July 2010 | Batson et al. |

| RE41608 | August 2010 | Blair et al. |

| 7793206 | September 2010 | Lim et al. |

| 7827259 | November 2010 | Heller et al. |

| 7831054 | November 2010 | Ball et al. |

| 7835689 | November 2010 | Goldberg et al. |

| 7853341 | December 2010 | McCarty et al. |

| 7865137 | January 2011 | Goldberg et al. |

| 7882234 | February 2011 | Watanabe et al. |

| 7885622 | February 2011 | Krampf et al. |

| 7907819 | March 2011 | Ando et al. |

| 7916877 | March 2011 | Goldberg et al. |

| 7917082 | March 2011 | Goldberg et al. |

| 7933418 | April 2011 | Morishima |

| 7934239 | April 2011 | Dagman |

| 7945143 | May 2011 | Yahata et al. |

| 7945636 | May 2011 | Nelson et al. |

| 7945708 | May 2011 | Ohkita |

| 7958441 | June 2011 | Heller et al. |

| 7966388 | June 2011 | Pugaczewski et al. |

| 7987294 | July 2011 | Bryce et al. |

| 7995732 | August 2011 | Koch et al. |

| 7996566 | August 2011 | Sylvain et al. |

| 7996588 | August 2011 | Subbiah et al. |

| 8014423 | September 2011 | Thaler et al. |

| 8015306 | September 2011 | Bowman |

| 8020023 | September 2011 | Millington et al. |

| 8023663 | September 2011 | Goldberg |

| 8028038 | September 2011 | Weel |

| 8028323 | September 2011 | Weel |

| 8041062 | October 2011 | Cohen et al. |

| 8045721 | October 2011 | Burgan et al. |

| 8045952 | October 2011 | Qureshey et al. |

| 8050203 | November 2011 | Jacobsen et al. |

| 8050652 | November 2011 | Qureshey et al. |

| 8055364 | November 2011 | Champion |

| 8074253 | December 2011 | Nathan |

| 8086752 | December 2011 | Millington et al. |

| 8090317 | January 2012 | Burge et al. |

| 8103009 | January 2012 | McCarty et al. |

| 8111132 | February 2012 | Allen et al. |

| 8112032 | February 2012 | Ko et al. |

| 8116476 | February 2012 | Inohara |

| 8126172 | February 2012 | Horbach et al. |

| 8131389 | March 2012 | Hardwick et al. |

| 8131390 | March 2012 | Braithwaite et al. |

| 8144883 | March 2012 | Pdersen et al. |

| 8148622 | April 2012 | Rothkopf et al. |

| 8150079 | April 2012 | Maeda et al. |

| 8169938 | May 2012 | Duchscher et al. |

| 8170222 | May 2012 | Dunko |

| 8170260 | May 2012 | Reining et al. |

| 8175297 | May 2012 | Ho et al. |

| 8185674 | May 2012 | Moore et al. |

| 8194874 | June 2012 | Starobin et al. |

| 8204890 | June 2012 | Gogan |

| 8208653 | June 2012 | Eo et al. |

| 8214447 | July 2012 | Deslippe et al. |

| 8214740 | July 2012 | Johnson |

| 8214873 | July 2012 | Weel |

| 8218790 | July 2012 | Bull et al. |

| 8230099 | July 2012 | Weel |

| 8233029 | July 2012 | Yoshida et al. |

| 8233648 | July 2012 | Sorek et al. |

| 8234305 | July 2012 | Seligmann et al. |

| 8234395 | July 2012 | Millington et al. |

| 8239748 | August 2012 | Moore et al. |

| 8275910 | September 2012 | Hauck |

| 8279709 | October 2012 | Choisel et al. |

| 8281001 | October 2012 | Busam et al. |

| 8285404 | October 2012 | Kekki |

| 8290603 | October 2012 | Lambourne |

| 8300845 | October 2012 | Zurek et al. |

| 8311226 | November 2012 | Lorgeoux et al. |

| 8315555 | November 2012 | Ko et al. |

| 8316147 | November 2012 | Batson et al. |

| 8325931 | December 2012 | Howard et al. |

| 8326951 | December 2012 | Millington et al. |

| 8340330 | December 2012 | Yoon et al. |

| 8345709 | January 2013 | Nitzpon et al. |

| 8364295 | January 2013 | Beckmann et al. |

| 8370678 | February 2013 | Millington et al. |

| 8374595 | February 2013 | Chien et al. |

| 8407623 | March 2013 | Kerr et al. |

| 8411883 | April 2013 | Matsumoto |

| 8423659 | April 2013 | Millington |

| 8423893 | April 2013 | Ramsay et al. |

| 8432851 | April 2013 | Xu et al. |

| 8433076 | April 2013 | Zurek et al. |

| 8442239 | May 2013 | Bruelle-Drews et al. |

| 8457334 | June 2013 | Yoon et al. |

| 8463184 | June 2013 | Dua |

| 8463875 | June 2013 | Katz et al. |

| 8473844 | June 2013 | Kreifeldt et al. |

| 8477958 | July 2013 | Moeller et al. |

| 8483853 | July 2013 | Lambourne |

| 8509211 | August 2013 | Trotter et al. |

| 8520870 | August 2013 | Sato et al. |

| 8565455 | October 2013 | Worrell et al. |

| 8577048 | November 2013 | Chaikin et al. |

| 8588949 | November 2013 | Lambourne et al. |

| 8600084 | December 2013 | Garrett |

| 8611559 | December 2013 | Sanders |

| 8615091 | December 2013 | Terwal |

| 8639830 | January 2014 | Bowman |

| 8654995 | February 2014 | Silber et al. |

| 8672744 | March 2014 | Gronkowski et al. |

| 8683009 | March 2014 | Ng et al. |

| 8689036 | April 2014 | Millington et al. |

| 8731206 | May 2014 | Park |

| 8750282 | June 2014 | Gelter et al. |

| 8751026 | June 2014 | Sato et al. |

| 8762565 | June 2014 | Togashi et al. |

| 8775546 | July 2014 | Millington |

| 8818538 | August 2014 | Sakata |

| 8819554 | August 2014 | Basso et al. |

| 8831761 | September 2014 | Kemp et al. |

| 8843586 | September 2014 | Pantos et al. |

| 8861739 | October 2014 | Ojanpera |

| 8868698 | October 2014 | Millington et al. |

| 8885851 | November 2014 | Westenbroek |

| 8904066 | December 2014 | Moore et al. |

| 8917877 | December 2014 | Haaff et al. |

| 8930006 | January 2015 | Haatainen |

| 8934647 | January 2015 | Joyce et al. |

| 8934655 | January 2015 | Breen et al. |

| 8938637 | January 2015 | Millington et al. |

| 8942252 | January 2015 | Balassanian et al. |

| 8942395 | January 2015 | Lissaman et al. |

| 8954177 | February 2015 | Sanders |

| 8965544 | February 2015 | Ramsay |

| 8966394 | February 2015 | Gates et al. |

| 9042556 | May 2015 | Kallai et al. |

| 9130770 | September 2015 | Millington et al. |

| 9137602 | September 2015 | Mayman et al. |

| 9160965 | October 2015 | Redmann et al. |

| 9195258 | November 2015 | Millington |

| 9456243 | September 2016 | Hughes et al. |

| 9507780 | November 2016 | Rothkopf et al. |

| 2001/0001160 | May 2001 | Shoff et al. |

| 2001/0009604 | July 2001 | Ando et al. |

| 2001/0022823 | September 2001 | Renaud |

| 2001/0027498 | October 2001 | Van De Meulenhof et al. |

| 2001/0032188 | October 2001 | Miyabe et al. |

| 2001/0042107 | November 2001 | Palm |

| 2001/0043456 | November 2001 | Atkinson |

| 2001/0046235 | November 2001 | Trevitt et al. |

| 2001/0047377 | November 2001 | Sincaglia et al. |

| 2001/0050991 | December 2001 | Eves |

| 2002/0002039 | January 2002 | Qureshey et al. |

| 2002/0002562 | January 2002 | Moran et al. |

| 2002/0002565 | January 2002 | Ohyama |

| 2002/0003548 | January 2002 | Krusche et al. |

| 2002/0015003 | February 2002 | Kato et al. |

| 2002/0022453 | February 2002 | Balog et al. |

| 2002/0026442 | February 2002 | Lipscomb et al. |

| 2002/0034374 | March 2002 | Barton |

| 2002/0035621 | March 2002 | Zintel et al. |

| 2002/0042844 | April 2002 | Chiazzese |

| 2002/0049843 | April 2002 | Barone et al. |

| 2002/0062406 | May 2002 | Chang et al. |

| 2002/0065926 | May 2002 | Hackney et al. |

| 2002/0067909 | June 2002 | Iivonen |

| 2002/0072816 | June 2002 | Shdema et al. |

| 2002/0072817 | June 2002 | Champion |

| 2002/0073228 | June 2002 | Cognet et al. |

| 2002/0078293 | June 2002 | Kou et al. |

| 2002/0080783 | June 2002 | Fujimori |

| 2002/0090914 | July 2002 | Kang et al. |

| 2002/0093478 | July 2002 | Yeh |

| 2002/0095460 | July 2002 | Benson |

| 2002/0098878 | July 2002 | Mooney et al. |

| 2002/0101357 | August 2002 | Gharapetian |

| 2002/0103635 | August 2002 | Mesarovic et al. |

| 2002/0109710 | August 2002 | Holtz et al. |

| 2002/0112244 | August 2002 | Liou et al. |

| 2002/0114354 | August 2002 | Sinha et al. |

| 2002/0114359 | August 2002 | Ibaraki et al. |

| 2002/0124097 | September 2002 | Isely et al. |

| 2002/0124182 | September 2002 | Bacso et al. |

| 2002/0129156 | September 2002 | Yoshikawa |

| 2002/0131398 | September 2002 | Taylor |

| 2002/0131761 | September 2002 | Kawasaki et al. |

| 2002/0136335 | September 2002 | Liou et al. |

| 2002/0137505 | September 2002 | Eiche et al. |

| 2002/0143998 | October 2002 | Rajagopal et al. |

| 2002/0150053 | October 2002 | Gray et al. |

| 2002/0159596 | October 2002 | Durand et al. |

| 2002/0163361 | November 2002 | Parkin |

| 2002/0165721 | November 2002 | Chang et al. |

| 2002/0165921 | November 2002 | Sapieyevski |

| 2002/0168938 | November 2002 | Chang |

| 2002/0173273 | November 2002 | Spurgat et al. |

| 2002/0177411 | November 2002 | Yajima et al. |

| 2002/0181355 | December 2002 | Shikunami et al. |

| 2002/0184310 | December 2002 | Traversat et al. |

| 2002/0188762 | December 2002 | Tomassetti et al. |

| 2002/0194260 | December 2002 | Headley et al. |

| 2002/0194309 | December 2002 | Carter et al. |

| 2003/0002609 | January 2003 | Faller et al. |

| 2003/0008616 | January 2003 | Anderson |

| 2003/0014486 | January 2003 | May |

| 2003/0018797 | January 2003 | Dunning et al. |

| 2003/0020763 | January 2003 | Mayer et al. |

| 2003/0023741 | January 2003 | Tomassetti et al. |

| 2003/0035072 | February 2003 | Hagg |

| 2003/0035444 | February 2003 | Zwack |

| 2003/0041173 | February 2003 | Hoyle |

| 2003/0041174 | February 2003 | Wen et al. |

| 2003/0043856 | March 2003 | Lakaniemi et al. |

| 2003/0043924 | March 2003 | Haddad et al. |

| 2003/0050058 | March 2003 | Walsh et al. |

| 2003/0055892 | March 2003 | Huitema et al. |

| 2003/0061428 | March 2003 | Garney et al. |

| 2003/0063528 | April 2003 | Ogikubo |

| 2003/0063755 | April 2003 | Nourse et al. |

| 2003/0066094 | April 2003 | Van Der Schaar et al. |

| 2003/0067437 | April 2003 | McClintock et al. |

| 2003/0073432 | April 2003 | Meade |

| 2003/0097478 | May 2003 | King |

| 2003/0099212 | May 2003 | Anjum et al. |

| 2003/0099221 | May 2003 | Rhee |

| 2003/0101253 | May 2003 | Saito et al. |

| 2003/0103088 | June 2003 | Dresti et al. |

| 2003/0109270 | June 2003 | Shorty |

| 2003/0110329 | June 2003 | Higaki et al. |

| 2003/0118158 | June 2003 | Hattori |

| 2003/0123853 | July 2003 | Iwahara et al. |

| 2003/0126211 | July 2003 | Anttila et al. |

| 2003/0135822 | July 2003 | Evans |

| 2003/0157951 | August 2003 | Hasty |

| 2003/0167335 | September 2003 | Alexander |

| 2003/0172123 | September 2003 | Polan et al. |

| 2003/0179780 | September 2003 | Walker et al. |

| 2003/0182254 | September 2003 | Plastina et al. |

| 2003/0185400 | October 2003 | Yoshizawa et al. |

| 2003/0187657 | October 2003 | Erhart et al. |

| 2003/0195964 | October 2003 | Mane |

| 2003/0198254 | October 2003 | Sullivan et al. |

| 2003/0198255 | October 2003 | Sullivan et al. |

| 2003/0198257 | October 2003 | Sullivan et al. |

| 2003/0200001 | October 2003 | Goddard |

| 2003/0204273 | October 2003 | Dinker et al. |

| 2003/0204509 | October 2003 | Dinker et al. |

| 2003/0210347 | November 2003 | Kondo |

| 2003/0210796 | November 2003 | McCarty et al. |

| 2003/0212802 | November 2003 | Rector et al. |

| 2003/0219007 | November 2003 | Barrack et al. |

| 2003/0227478 | December 2003 | Chatfield |

| 2003/0229900 | December 2003 | Reisman |

| 2003/0231208 | December 2003 | Hanon et al. |

| 2003/0231871 | December 2003 | Ushimaru |

| 2003/0235304 | December 2003 | Evans et al. |

| 2004/0001106 | January 2004 | Deutscher et al. |

| 2004/0001484 | January 2004 | Ozguner |

| 2004/0001591 | January 2004 | Mani et al. |

| 2004/0002938 | January 2004 | Deguchi |

| 2004/0008852 | January 2004 | Also et al. |

| 2004/0010727 | January 2004 | Fujinami |

| 2004/0012620 | January 2004 | Buhler et al. |

| 2004/0014426 | January 2004 | Moore |

| 2004/0015252 | January 2004 | Aiso et al. |

| 2004/0019497 | January 2004 | Volk et al. |

| 2004/0019807 | January 2004 | Freund et al. |

| 2004/0019911 | January 2004 | Gates et al. |

| 2004/0023697 | February 2004 | Komura |

| 2004/0024478 | February 2004 | Hans et al. |

| 2004/0024925 | February 2004 | Cypher et al. |

| 2004/0027166 | February 2004 | Mangum et al. |

| 2004/0032348 | February 2004 | Lai et al. |

| 2004/0032421 | February 2004 | Williamson et al. |

| 2004/0032922 | February 2004 | Knapp et al. |

| 2004/0037433 | February 2004 | Chen |

| 2004/0041836 | March 2004 | Zaner et al. |

| 2004/0042629 | March 2004 | Mellone et al. |

| 2004/0044742 | March 2004 | Evron et al. |

| 2004/0048569 | March 2004 | Kawamura |

| 2004/0059842 | March 2004 | Hanson et al. |

| 2004/0059965 | March 2004 | Marshall et al. |

| 2004/0066736 | April 2004 | Kroeger |

| 2004/0075767 | April 2004 | Neuman et al. |

| 2004/0078383 | April 2004 | Mercer et al. |

| 2004/0080671 | April 2004 | Siemens et al. |

| 2004/0093096 | May 2004 | Huang et al. |

| 2004/0098754 | May 2004 | Vella et al. |

| 2004/0111473 | June 2004 | Lysenko et al. |

| 2004/0117462 | June 2004 | Bodin et al. |

| 2004/0117491 | June 2004 | Karaoguz et al. |

| 2004/0117840 | June 2004 | Boudreau et al. |

| 2004/0117858 | June 2004 | Boudreau et al. |

| 2004/0128701 | July 2004 | Kaneko et al. |

| 2004/0131192 | July 2004 | Metcalf |

| 2004/0133689 | July 2004 | Vasisht |

| 2004/0143368 | July 2004 | May et al. |

| 2004/0143675 | July 2004 | Aust |

| 2004/0143852 | July 2004 | Meyers |

| 2004/0148237 | July 2004 | Bittmann et al. |

| 2004/0168081 | August 2004 | Ladas et al. |

| 2004/0170383 | September 2004 | Mazur |

| 2004/0171346 | September 2004 | Lin |

| 2004/0177167 | September 2004 | Iwamura et al. |

| 2004/0179554 | September 2004 | Tsao |

| 2004/0183827 | September 2004 | Putterman et al. |

| 2004/0185773 | September 2004 | Gerber et al. |

| 2004/0189363 | September 2004 | Takano |

| 2004/0203378 | October 2004 | Powers |

| 2004/0203590 | October 2004 | Shteyn |

| 2004/0208158 | October 2004 | Fellman et al. |

| 2004/0213230 | October 2004 | Douskalis et al. |

| 2004/0223622 | November 2004 | Lindemann et al. |

| 2004/0224638 | November 2004 | Fadell et al. |

| 2004/0228367 | November 2004 | Mosig et al. |

| 2004/0248601 | December 2004 | Chang |

| 2004/0249490 | December 2004 | Sakai |

| 2004/0249965 | December 2004 | Huggins et al. |

| 2004/0249982 | December 2004 | Arnold et al. |

| 2004/0252400 | December 2004 | Blank et al. |

| 2004/0253969 | December 2004 | Nguyen et al. |

| 2005/0010691 | January 2005 | Oyadomari et al. |

| 2005/0011388 | January 2005 | Kouznetsov |

| 2005/0013394 | January 2005 | Rausch et al. |

| 2005/0015551 | January 2005 | Eames et al. |

| 2005/0021590 | January 2005 | Debique et al. |

| 2005/0027821 | February 2005 | Alexander et al. |

| 2005/0047605 | March 2005 | Lee et al. |

| 2005/0058149 | March 2005 | Howe |

| 2005/0060435 | March 2005 | Xue et al. |

| 2005/0062637 | March 2005 | El Zabadani et al. |

| 2005/0081213 | April 2005 | Suzuoki et al. |

| 2005/0102699 | May 2005 | Kim et al. |

| 2005/0105052 | May 2005 | McCormick et al. |

| 2005/0114538 | May 2005 | Rose |

| 2005/0120128 | June 2005 | Willes et al. |

| 2005/0125222 | June 2005 | Brown et al. |

| 2005/0125357 | June 2005 | Saadat et al. |

| 2005/0131558 | June 2005 | Braithwaite et al. |

| 2005/0154766 | July 2005 | Huang et al. |

| 2005/0159833 | July 2005 | Giaimo et al. |

| 2005/0160270 | July 2005 | Goldberg et al. |

| 2005/0166135 | July 2005 | Burke et al. |

| 2005/0168630 | August 2005 | Yamada et al. |

| 2005/0170781 | August 2005 | Jacobsen et al. |

| 2005/0177643 | August 2005 | Xu |

| 2005/0181348 | August 2005 | Carey et al. |

| 2005/0195205 | September 2005 | Abrams |

| 2005/0195823 | September 2005 | Chen et al. |

| 2005/0197725 | September 2005 | Alexander et al. |

| 2005/0198574 | September 2005 | Lamkin et al. |

| 2005/0201549 | September 2005 | Dedieu et al. |

| 2005/0215265 | September 2005 | Sharma |

| 2005/0216556 | September 2005 | Manion et al. |

| 2005/0239445 | October 2005 | Karaoguz et al. |

| 2005/0246421 | November 2005 | Moore et al. |

| 2005/0262217 | November 2005 | Nonaka et al. |

| 2005/0281255 | December 2005 | Davies et al. |

| 2005/0283820 | December 2005 | Richards et al. |

| 2005/0288805 | December 2005 | Moore et al. |

| 2005/0289224 | December 2005 | Deslippe et al. |

| 2006/0041639 | February 2006 | Lamkin et al. |

| 2006/0049966 | March 2006 | Ozawa et al. |

| 2006/0072489 | April 2006 | Toyoshima |

| 2006/0095516 | May 2006 | Wijeratne |

| 2006/0098936 | May 2006 | Ikeda et al. |

| 2006/0119497 | June 2006 | Miller et al. |

| 2006/0142034 | June 2006 | Wentink et al. |

| 2006/0143236 | June 2006 | Wu |

| 2006/0155721 | July 2006 | Grunwald et al. |

| 2006/0161742 | July 2006 | Sugimoto et al. |

| 2006/0173844 | August 2006 | Zhang et al. |

| 2006/0173976 | August 2006 | Vincent et al. |

| 2006/0193454 | August 2006 | Abou-Chakra et al. |

| 2006/0222186 | October 2006 | Paige et al. |

| 2006/0227985 | October 2006 | Kawanami |

| 2006/0259649 | November 2006 | Hsieh et al. |

| 2006/0270395 | November 2006 | Dhawan et al. |

| 2007/0003067 | January 2007 | Gierl et al. |

| 2007/0022207 | January 2007 | Millington et al. |

| 2007/0038999 | February 2007 | Millington et al. |

| 2007/0043847 | February 2007 | Carter et al. |

| 2007/0047712 | March 2007 | Gross et al. |

| 2007/0048713 | March 2007 | Plastina et al. |

| 2007/0054680 | March 2007 | Mo et al. |

| 2007/0087686 | April 2007 | Holm et al. |

| 2007/0142022 | June 2007 | Madonna et al. |

| 2007/0142944 | June 2007 | Goldberg et al. |

| 2007/0143493 | June 2007 | Mullig et al. |

| 2007/0169115 | July 2007 | Ko et al. |

| 2007/0180137 | August 2007 | Rajapakse |

| 2007/0192156 | August 2007 | Gauger |

| 2007/0249295 | October 2007 | Ukita et al. |

| 2007/0265031 | November 2007 | Koizumi et al. |

| 2007/0271388 | November 2007 | Bowra et al. |

| 2007/0299778 | December 2007 | Haveson et al. |

| 2008/0002836 | January 2008 | Moeller et al. |

| 2008/0007649 | January 2008 | Bennett |

| 2008/0007650 | January 2008 | Bennett |

| 2008/0007651 | January 2008 | Bennett |

| 2008/0018785 | January 2008 | Bennett |

| 2008/0022320 | January 2008 | Ver Steeg |

| 2008/0025535 | January 2008 | Rajapakse |

| 2008/0060084 | March 2008 | Gappa et al. |

| 2008/0072816 | March 2008 | Riess et al. |

| 2008/0075295 | March 2008 | Mayman et al. |

| 2008/0077619 | March 2008 | Gilley et al. |

| 2008/0077620 | March 2008 | Gilley et al. |

| 2008/0086318 | April 2008 | Gilley et al. |

| 2008/0091771 | April 2008 | Allen et al. |

| 2008/0120429 | May 2008 | Millington et al. |

| 2008/0126943 | May 2008 | Parasnis et al. |

| 2008/0144861 | June 2008 | Melanson et al. |

| 2008/0144864 | June 2008 | Huon |

| 2008/0146289 | June 2008 | Korneluk et al. |

| 2008/0189272 | August 2008 | Powers et al. |

| 2008/0205070 | August 2008 | Osada |

| 2008/0212786 | September 2008 | Park |

| 2008/0215169 | September 2008 | Debettencourt et al. |

| 2008/0263010 | October 2008 | Roychoudhuri et al. |

| 2008/0303947 | December 2008 | Ohnishi et al. |

| 2009/0011798 | January 2009 | Yamada |

| 2009/0017868 | January 2009 | Ueda et al. |

| 2009/0031336 | January 2009 | Chavez et al. |

| 2009/0062947 | March 2009 | Lydon et al. |

| 2009/0070434 | March 2009 | Himmelstein |

| 2009/0077610 | March 2009 | White et al. |

| 2009/0089327 | April 2009 | Kalaboukis et al. |

| 2009/0100189 | April 2009 | Bahren et al. |

| 2009/0124289 | May 2009 | Nishida |

| 2009/0157905 | June 2009 | Davis |

| 2009/0164655 | June 2009 | Pettersson et al. |

| 2009/0193345 | July 2009 | Wensley et al. |

| 2009/0222115 | September 2009 | Malcolm et al. |

| 2009/0222392 | September 2009 | Martin et al. |

| 2009/0228919 | September 2009 | Zott et al. |

| 2009/0251604 | October 2009 | Iyer |

| 2010/0004983 | January 2010 | Dickerson et al. |

| 2010/0031366 | February 2010 | Knight et al. |

| 2010/0049835 | February 2010 | Ko et al. |

| 2010/0087089 | April 2010 | Struthers et al. |

| 2010/0228740 | September 2010 | Cannistraro et al. |

| 2010/0284389 | November 2010 | Ramsay et al. |

| 2010/0299639 | November 2010 | Ramsay et al. |

| 2011/0001632 | January 2011 | Hohorst |

| 2011/0002487 | January 2011 | Panther et al. |

| 2011/0066943 | March 2011 | Brillon et al. |

| 2011/0228944 | September 2011 | Croghan et al. |

| 2011/0316768 | December 2011 | McRae |

| 2012/0029671 | February 2012 | Millington et al. |

| 2012/0030366 | February 2012 | Collart et al. |

| 2012/0051567 | March 2012 | Castor-Perry |

| 2012/0060046 | March 2012 | Millington |

| 2012/0129446 | May 2012 | Ko et al. |

| 2012/0148075 | June 2012 | Goh et al. |

| 2012/0185771 | July 2012 | Rothkopf et al. |

| 2012/0192071 | July 2012 | Millington |

| 2012/0207290 | August 2012 | Moyers et al. |

| 2012/0237054 | September 2012 | Eo et al. |

| 2012/0281058 | November 2012 | Laney et al. |

| 2012/0290621 | November 2012 | Heitz, III et al. |

| 2013/0013757 | January 2013 | Millington et al. |

| 2013/0018960 | January 2013 | Knysz et al. |

| 2013/0031475 | January 2013 | Maor et al. |

| 2013/0038726 | February 2013 | Kim |

| 2013/0041954 | February 2013 | Kim et al. |

| 2013/0047084 | February 2013 | Sanders et al. |

| 2013/0052940 | February 2013 | Brillhart et al. |

| 2013/0070093 | March 2013 | Rivera et al. |

| 2013/0080599 | March 2013 | Ko et al. |

| 2013/0094670 | April 2013 | Millington |

| 2013/0124664 | May 2013 | Fonseca, Jr. et al. |

| 2013/0129122 | May 2013 | Johnson et al. |

| 2013/0132837 | May 2013 | Mead et al. |

| 2013/0159126 | June 2013 | Elkady |

| 2013/0167029 | June 2013 | Friesen et al. |

| 2013/0174100 | July 2013 | Seymour et al. |

| 2013/0174223 | July 2013 | Dykeman et al. |

| 2013/0179163 | July 2013 | Herbig et al. |

| 2013/0191454 | July 2013 | Oliver et al. |

| 2013/0197682 | August 2013 | Millington |

| 2013/0208911 | August 2013 | Millington |

| 2013/0208921 | August 2013 | Millington |

| 2013/0226323 | August 2013 | Millington |

| 2013/0230175 | September 2013 | Bech et al. |

| 2013/0232416 | September 2013 | Millington |

| 2013/0253934 | September 2013 | Parekh et al. |

| 2013/0279706 | October 2013 | Marti |

| 2013/0287186 | October 2013 | Quady |

| 2013/0290504 | October 2013 | Quady |

| 2014/0006483 | January 2014 | Garmark et al. |

| 2014/0037097 | February 2014 | Labosco |

| 2014/0064501 | March 2014 | Olsen et al. |

| 2014/0075308 | March 2014 | Sanders et al. |

| 2014/0075311 | March 2014 | Boettcher et al. |

| 2014/0079242 | March 2014 | Nguyen et al. |

| 2014/0108929 | April 2014 | Garmark et al. |

| 2014/0123005 | May 2014 | Forstall et al. |

| 2014/0140530 | May 2014 | Gomes-Casseres et al. |

| 2014/0161265 | June 2014 | Chaikin et al. |

| 2014/0181569 | June 2014 | Millington et al. |

| 2014/0233755 | August 2014 | Kim et al. |

| 2014/0242913 | August 2014 | Pang |

| 2014/0256260 | September 2014 | Ueda et al. |

| 2014/0267148 | September 2014 | Luna et al. |

| 2014/0270202 | September 2014 | Ivanov et al. |

| 2014/0273859 | September 2014 | Luna et al. |

| 2014/0279889 | September 2014 | Luna |

| 2014/0285313 | September 2014 | Luna et al. |

| 2014/0286496 | September 2014 | Luna et al. |

| 2014/0298174 | October 2014 | Ikonomov |

| 2014/0323036 | October 2014 | Daley et al. |

| 2014/0344689 | November 2014 | Scott et al. |

| 2014/0378056 | December 2014 | Liu |

| 2015/0019670 | January 2015 | Redmann |

| 2015/0026613 | January 2015 | Kwon et al. |

| 2015/0032844 | January 2015 | Tarr et al. |

| 2015/0043736 | February 2015 | Olsen et al. |

| 2015/0049248 | February 2015 | Wang et al. |

| 2015/0074527 | March 2015 | Sevigny et al. |

| 2015/0074528 | March 2015 | Sakalowsky et al. |

| 2015/0098576 | April 2015 | Sundaresan et al. |

| 2015/0139210 | May 2015 | Marin et al. |

| 2015/0256954 | September 2015 | Carlsson et al. |

| 2015/0304288 | October 2015 | Balasaygun et al. |

| 2015/0365987 | December 2015 | Weel |

Foreign Patent Documents

| 2320451 | Mar 2001 | CA |

| 1598767 | Mar 2005 | CN |

| 101292500 | Oct 2008 | CN |

| 0251584 | Jan 1988 | EP |

| 0672985 | Sep 1995 | EP |

| 0772374 | May 1997 | EP |

| 1111527 | Jun 2001 | EP |

| 1122931 | Aug 2001 | EP |

| 1312188 | May 2003 | EP |

| 1389853 | Feb 2004 | EP |

| 2713281 | Apr 2004 | EP |

| 1517464 | Mar 2005 | EP |

| 0895427 | Jan 2006 | EP |

| 1416687 | Aug 2006 | EP |

| 1410686 | Mar 2008 | EP |

| 2043381 | Apr 2009 | EP |

| 2161950 | Mar 2010 | EP |

| 0742674 | Apr 2014 | EP |

| 2591617 | Jun 2014 | EP |

| 2284327 | May 1995 | GB |

| 2338374 | Dec 1999 | GB |

| 2379533 | Mar 2003 | GB |

| 2486183 | Jun 2012 | GB |

| 63269633 | Nov 1988 | JP |

| 07-210129 | Aug 1995 | JP |

| 2000149391 | May 2000 | JP |

| 2001034951 | Feb 2001 | JP |

| 2002111817 | Apr 2002 | JP |

| 2002123267 | Apr 2002 | JP |

| 2002141915 | May 2002 | JP |

| 2002358241 | Dec 2002 | JP |

| 2003037585 | Feb 2003 | JP |

| 2003506765 | Feb 2003 | JP |

| 2003101958 | Apr 2003 | JP |

| 2003169089 | Jun 2003 | JP |

| 2005108427 | Apr 2005 | JP |

| 2005136457 | May 2005 | JP |

| 2007241652 | Sep 2007 | JP |

| 2009506603 | Feb 2009 | JP |

| 2009075540 | Apr 2009 | JP |

| 2009135750 | Jun 2009 | JP |

| 2009535708 | Oct 2009 | JP |

| 2009538006 | Oct 2009 | JP |

| 2011130496 | Jun 2011 | JP |

| 439027 | Jun 2001 | TW |

| 199525313 | Sep 1995 | WO |

| 1999023560 | May 1999 | WO |

| 199961985 | Dec 1999 | WO |

| 0019693 | Apr 2000 | WO |

| 0110125 | Feb 2001 | WO |

| 200153994 | Jul 2001 | WO |

| 02073851 | Sep 2002 | WO |

| 03093950 | Nov 2003 | WO |

| 2003093950 | Nov 2003 | WO |

| 2005013047 | Feb 2005 | WO |

| 2007023120 | Mar 2007 | WO |

| 2007127485 | Nov 2007 | WO |

| 2007131555 | Nov 2007 | WO |

| 2007135581 | Nov 2007 | WO |

| 2008082350 | Jul 2008 | WO |

| 2008114389 | Sep 2008 | WO |

| 2012050927 | Apr 2012 | WO |

| 2014004182 | Jan 2014 | WO |

| 2014149533 | Sep 2014 | WO |

Other References

|