Systems, methods, and devices for an enterprise internet-of-things application development platform


U.S. patent number 10,817,530 [Application Number 15/028,340] was granted by the patent office on 2020-10-27 for systems, methods, and devices for an enterprise internet-of-things application development platform. This patent grant is currently assigned to C3.ai, Inc. The grantee listed for this patent is C3.ai, Inc. Invention is credited to Edward Y. Abbo, Houman Behzadi, John Coker, Scott Kurinskas, Thomas Rothwein, Thomas M. Siebel, David Tchankotadze.


| United States Patent | 10,817,530 |

| Siebel , et al. | October 27, 2020 |

Systems, methods, and devices for an enterprise internet-of-things application development platform

Abstract

Systems, methods, and devices for a cyberphysical (IoT) software application development platform based upon a model driven architecture and derivative IoT SaaS applications are disclosed herein. The system may include concentrators to receive and forward time-series data from sensors or smart devices. The system may include message decoders to receive messages comprising the time-series data and store the messages on message queues. The system may include a persistence component to store the time-series data in a key-value store and store relational data in a relational database. The system may include a data services component to implement a type layer over data stores. The system may also include a processing component to access and process data in the data stores via the type layer, the processing component comprising a batch processing component and an iterative processing component.
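The data path summarized in the abstract (message decoders feeding message queues, and a persistence component that splits time-series data from relational data) can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration and does not come from the disclosure: the message shape with a `kind` field, the `Persistence` class, the `decode` and `drain` helpers, a Python dict standing in for the key-value store, and in-memory SQLite standing in for the relational database.

```python
import json
import queue
import sqlite3

# Stand-in for the message queues; a real deployment would use a message broker.
message_queue: "queue.Queue[dict]" = queue.Queue()


def decode(raw: bytes) -> None:
    """Message decoder: parse a raw payload and place it on the message queue."""
    message_queue.put(json.loads(raw))


class Persistence:
    """Routes time-series points to a key-value store and other records to a relational store."""

    def __init__(self) -> None:
        # Hypothetical key-value store: (sensor_id, timestamp) -> value.
        self.kv: dict = {}
        # Hypothetical relational store holding non-time-series data.
        self.db = sqlite3.connect(":memory:")
        self.db.execute("CREATE TABLE asset (sensor_id TEXT PRIMARY KEY, site TEXT)")

    def store(self, msg: dict) -> None:
        if msg["kind"] == "reading":  # time-series data
            self.kv[(msg["sensor_id"], msg["ts"])] = msg["value"]
        else:  # relational data
            self.db.execute(
                "INSERT OR REPLACE INTO asset VALUES (?, ?)",
                (msg["sensor_id"], msg["site"]),
            )


def drain(persistence: Persistence) -> int:
    """Move every queued message into the appropriate store; return the count."""
    count = 0
    while not message_queue.empty():
        persistence.store(message_queue.get())
        count += 1
    return count
```

In this sketch a caller would decode a few payloads and then call `drain(Persistence())`; the type layer and the batch and iterative processing components mentioned in the abstract are deliberately left out.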

| Inventors: | Siebel; Thomas M. (Woodside, CA), Abbo; Edward Y. (Woodside, CA), Behzadi; Houman (San Francisco, CA), Coker; John (Atherton, CA), Kurinskas; Scott (San Mateo, CA), Rothwein; Thomas (San Jose, CA), Tchankotadze; David (Sunnyvale, CA) |
|---|---|
| Applicant: | |
| Assignee: | C3.ai, Inc. (Redwood City, CA) |
| Family ID: | 1000005143026 |
| Appl. No.: | 15/028,340 |
| Filed: | March 23, 2016 |
| PCT Filed: | March 23, 2016 |
| PCT No.: | PCT/US2016/023850 |
| 371(c)(1),(2),(4) Date: | April 08, 2016 |
| PCT Pub. No.: | WO2016/118979 |
| PCT Pub. Date: | July 28, 2016 |

Prior Publication Data

| Document Identifier | Publication Date |
|---|---|
| US 20170006135 A1 | Jan 5, 2017 |

Related U.S. Patent Documents

| Application Number | Filing Date | Patent Number | Issue Date |
|---|---|---|---|
| 62107262 | Jan 23, 2015 | | |
| 62172012 | Jun 5, 2015 | | |

| Current U.S. Class: | 1/1 |

| Current CPC Class: | G06F 16/283 (20190101); G06F 16/288 (20190101); G06F 16/254 (20190101); G06N 20/00 (20190101); G06F 8/35 (20130101); H04L 67/10 (20130101); H04L 29/08792 (20130101); H04L 29/08783 (20130101) |

| Current International Class: | G06F 16/25 (20190101); G06F 8/35 (20180101); G06N 20/00 (20190101); G06F 16/28 (20190101); H04L 29/08 (20060101) |

References Cited [Referenced By]

U.S. Patent Documents

| 5132920 | July 1992 | Bellows et al. |

| 6178362 | January 2001 | Woolard et al. |

| 7137071 | November 2006 | Fuller et al. |

| 7159208 | January 2007 | Keeley |

| 7200529 | April 2007 | Cifra et al. |

| 7590970 | September 2009 | Bromley |

| 7877421 | January 2011 | Berger et al. |

| 8166465 | April 2012 | Feblowitz et al. |

| 8180872 | May 2012 | Marinelli |

| 8442917 | May 2013 | Burke |

| 8626877 | January 2014 | Greene et al. |

| 8972484 | March 2015 | Naphade et al. |

| 9069737 | June 2015 | Kimotho et al. |

| 9148669 | September 2015 | Huang et al. |

| 9268815 | February 2016 | Chen et al. |

| 9281995 | March 2016 | Baba et al. |

| 9734221 | August 2017 | Sarferaz |

| 9799017 | October 2017 | Vermeulen |

| 9824133 | November 2017 | Kalki |

| 9886483 | February 2018 | Harrison |

| 9922101 | March 2018 | Reiner |

| 10114367 | October 2018 | Bates et al. |

| 10235780 | March 2019 | Noon et al. |

| 2003/0034998 | February 2003 | Kodosky et al. |

| 2003/0037322 | February 2003 | Kodosky et al. |

| 2003/0041163 | February 2003 | Rhoades et al. |

| 2003/0172368 | September 2003 | Alumbaugh |

| 2003/0177146 | September 2003 | Zimowski |

| 2004/0224549 | November 2004 | Yu |

| 2004/0225649 | November 2004 | Yeo et al. |

| 2005/0050054 | March 2005 | Clark |

| 2005/0177816 | August 2005 | Kudukoli et al. |

| 2005/0228808 | October 2005 | Mamou et al. |

| 2005/0234969 | October 2005 | Mamou et al. |

| 2005/0240592 | October 2005 | Mamou et al. |

| 2006/0010148 | January 2006 | Sattler et al. |

| 2006/0259442 | November 2006 | Iqbal |

| 2007/0112714 | May 2007 | Fairweather et al. |

| 2007/0214408 | September 2007 | Straub et al. |

| 2009/0044179 | February 2009 | Luszczek et al. |

| 2009/0044196 | February 2009 | Stefansson et al. |

| 2009/0132867 | May 2009 | Stefansson et al. |

| 2009/0228726 | September 2009 | Malik et al. |

| 2010/0070217 | March 2010 | Shimada et al. |

| 2010/0094475 | April 2010 | Masters et al. |

| 2010/0223296 | September 2010 | Angus et al. |

| 2010/0262313 | October 2010 | Chambers et al. |

| 2010/0274367 | October 2010 | Kaufman et al. |

| 2010/0274602 | October 2010 | Kaufman et al. |

| 2010/0286937 | November 2010 | Hedley et al. |

| 2011/0010016 | January 2011 | Giroti |

| 2011/0252426 | October 2011 | Antani et al. |

| 2012/0002733 | January 2012 | Misra et al. |

| 2012/0022910 | January 2012 | Chi et al. |

| 2012/0054147 | March 2012 | Goetz et al. |

| 2012/0266129 | October 2012 | Massoudi et al. |

| 2012/0271472 | October 2012 | Brunner et al. |

| 2012/0310559 | December 2012 | Taft |

| 2013/0124453 | May 2013 | Bhide |

| 2013/0173322 | July 2013 | Gray et al. |

| 2013/0211870 | August 2013 | Lawson et al. |

| 2013/0212420 | August 2013 | Lawson et al. |

| 2013/0238795 | September 2013 | Geffin et al. |

| 2013/0332194 | December 2013 | D'Auria |

| 2013/0346422 | December 2013 | Jordahl |

| 2014/0046956 | February 2014 | Zenger et al. |

| 2014/0047107 | February 2014 | Maturana et al. |

| 2014/0058572 | February 2014 | Stein et al. |

| 2014/0074670 | March 2014 | Garrity et al. |

| 2014/0156806 | June 2014 | Karpistsenko et al. |

| 2014/0279824 | September 2014 | Tamayo |

| 2014/0279837 | September 2014 | Guo |

| 2014/0279899 | September 2014 | Gu |

| 2014/0280142 | September 2014 | Wasson et al. |

| 2014/0337429 | November 2014 | Asenjo et al. |

| 2015/0019195 | January 2015 | Davis |

| 2015/0095303 | April 2015 | Sonmez |

| 2015/0120224 | April 2015 | Siebel et al. |

| 2015/0134733 | May 2015 | Maturana et al. |

| 2015/0233983 | August 2015 | Siebel et al. |

| 2016/0078379 | March 2016 | Branch et al. |

| 2017/0126741 | May 2017 | Lang et al. |

| 2018/0107660 | April 2018 | Wang et al. |

| 2019/0007517 | January 2019 | Jagannath et al. |

| 2019/0065545 | February 2019 | Hazel et al. |

| 2019/0265971 | August 2019 | Behzadi et al. |

| WO-2015066220 | May 2015 | WO | |||

| WO-2016118979 | Jul 2016 | WO | |||

Other References

Co-pending U.S. Appl. No. 15/890,867, filed Feb. 7, 2018. Cited by applicant.

EP14857450.2 Extended Search Report and Search Opinion dated Apr. 5, 2017. Cited by applicant.

International Search Report and Written Opinion for International Application No. PCT/US2014/062974 dated Feb. 19, 2015. Cited by applicant.

McKinsey and Company. The Internet of Things, Mapping the Value Beyond the Hype. Jun. 2015. Cited by applicant.

Office Action dated Jun. 16, 2017 for U.S. Appl. No. 14/495,827. Cited by applicant.

Office Action dated Dec. 12, 2017 for U.S. Appl. No. 14/495,827. Cited by applicant.

PCT/US2016/023850 International Search Report and Written Opinion dated Dec. 9, 2016. Cited by applicant.

Porter, et al. How Smart, Connected Products are Transforming Competition, Harvard Business Review, Nov. 2014. Cited by applicant.

UC Regents. Cyber-Physical systems. 2012. Cited by applicant.

U.S. Appl. No. 14/699,508 Office Action dated Jan. 25, 2018. Cited by applicant.

Wikipedia--Batch processing. Sep. 30, 2013; available at https://en.wikipedia.org/w/index.php?title=Batch_processing&oldid=575104736. Cited by applicant.

Wikipedia--Stream processing. Sep. 22, 2013; available at https://en.wikipedia.org/w/index.php?title=Stream_processing&oldid=574006420. Cited by applicant.

ZapThink. Understanding the Real Costs of Integration. 2002. Available at http://www.zapthink.com/2002/10/23/understanding-the-real-costs-of-integration. Accessed on Mar. 30, 2018. Cited by applicant.

Lheureux et al. Predicts 2015: Digital Business and Internet of Things Add Formidable Integration Challenges. Gartner, Nov. 11, 2014. Cited by applicant.

Middleton, et al. Forecast: Internet of Things, Endpoints and Associated Services, Worldwide, 2014. Gartner, Feb. 16, 2015. Cited by applicant.

Co-pending U.S. Appl. No. 16/044,199, filed Jul. 24, 2018. Cited by applicant.

Soley et al. Model Driven Architecture. Object Management Group (OMG), White Paper, Draft 3.2 (Nov. 27, 2000). 12 pages. Retrieved from URL: <https://www.omg.org/mda/mda_files/model_driven_architecture.htm>. Cited by applicant.

EP16740923.4 Extended European Search Report dated Jan. 7, 2019. Cited by applicant.

U.S. Appl. No. 14/699,508 Office Action dated Mar. 7, 2019. Cited by applicant.

U.S. Appl. No. 14/699,508 Office Action dated Sep. 7, 2018. Cited by applicant.

U.S. Appl. No. 15/890,867 Office Action dated Nov. 13, 2018. Cited by applicant.

U.S. Appl. No. 15/890,867 Office Action dated Apr. 1, 2019. Cited by applicant.

U.S. Appl. No. 15/890,867 Office Action dated Sep. 25, 2019. Cited by applicant.

U.S. Appl. No. 16/360,815 Office Action dated May 30, 2019. Cited by applicant.

Polamuri. Supervised and Unsupervised Learning (Sep. 19, 2014). Retrieved Oct. 25, 2019 from URL: <https://dataaspirant.com/2014/09/19/supervised-and-unsupervised-learning/>. 9 pages. Cited by applicant.

U.S. Appl. No. 14/699,508 Office Action dated Nov. 18, 2019. Cited by applicant.

U.S. Appl. No. 14/699,508 Office Action dated Apr. 2, 2020. Cited by applicant.

U.S. Appl. No. 15/890,867 Office Action dated Apr. 9, 2020. Cited by applicant.

U.S. Appl. No. 15/890,867 Office Action dated Mar. 27, 2020. Cited by applicant.

U.S. Appl. No. 16/044,199 Office Action dated Jun. 26, 2020. Cited by applicant.

U.S. Appl. No. 16/360,815 Office Action dated Apr. 16, 2020. Cited by applicant.

U.S. Appl. No. 16/360,815 Office Action dated Oct. 30, 2019. Cited by applicant.

EP14857450.2 Summons to Attend Oral Proceedings dated Jul. 16, 2020. Cited by applicant.

U.S. Appl. No. 15/890,867 Notice of Allowance dated Aug. 27, 2020. Cited by applicant.

Primary Examiner: Zhang; Shirley X

Attorney, Agent or Firm: Wilson Sonsini Goodrich & Rosati

Parent Case Text

RELATED APPLICATION

This application is a national stage application of PCT/US2016/023850, filed on Mar. 23, 2016, which claims the benefit of U.S. Provisional Application No. 62/107,262, filed Jan. 23, 2015, and U.S. Provisional Application No. 62/172,012, filed Jun. 5, 2015, each of which is hereby incorporated by reference herein in its entirety.

Claims

The invention claimed is:

1. An application development platform implemented on one or more processors and one or more storage devices, said application development platform comprising: a persistence component to store time-series data in a key-value store and non-time-series data in a relational database; a data services component to implement abstraction over a plurality of data stores comprising the key-value store and the relational database, wherein the abstraction comprises a metadata-based mapping framework over a plurality of data formats associated with the plurality of data stores; a plurality of configurable transformers, wherein each configurable transformer is associated with exactly one of said plurality of data formats, wherein a configurable transformer associated with a selected data format comprises at least a transformation rule for transforming between the selected data format and a standardized data format that conforms to a canonical data model comprising standardized data definitions, without requiring direct conversion between the plurality of data formats; a user interface to receive a request for data having said selected data format on said application development platform, wherein said configurable transformer is configured to transform said requested data to said standardized data format that conforms to said canonical data model; and an application component for processing said transformed data, wherein said application component comprises application logic, including functions, data processing, and machine learning, configured to access or process said transformed data, wherein said application logic comprises at least two applications that have incompatible programmatic interfaces, and wherein said standardized data format is agnostic to said at least two applications, thereby enabling said at least two applications to communicate directly with each other.

2. The platform of claim 1, wherein the canonical data model comprises conceptual domain models of various attributes and processes related to different entities or domains.

3. The platform of claim 2, wherein the various attributes and processes comprise persistence, data representations, data interrelationships, computing processes, or machine learning algorithms.

4. The platform of claim 1, wherein the standardized data format comprises an aggregation and correlation of two or more data formats associated with the plurality of data stores, while ensuring automatic and guaranteed referential integrity of the underlying two or more data formats.

5. The platform of claim 4, wherein the standardized data format is automatically exposed and accessible through RESTful interfaces.

6. A method comprising: storing time-series data in a key-value store and non-time-series data in a relational database; implementing, on an application development platform, abstraction over a plurality of data stores comprising the key-value store and the relational database, wherein the abstraction comprises a metadata-based mapping framework over a plurality of data formats associated with the plurality of data stores by: transforming the plurality of data formats to a standardized data format that conforms to a canonical data model comprising standardized data definitions, wherein the transforming is performed with a plurality of configurable transformers, wherein each configurable transformer is associated with exactly one of said plurality of storage formats, wherein a configurable transformer associated with a selected data format comprises at least a transformation rule for transforming between the selected data format and the standardized data format, without requiring direct conversion between the plurality of data formats; in response to a request for data having said selected data format on said application development platform, using said configurable transformer to transform said requested data to said standardized data format that conforms to said canonical data model; and providing application logic, including functions, data processing, and machine learning, for one or more applications that access or process said requested data that was transformed to said selected defined type, wherein said one or more applications comprise at least two applications that have incompatible programmatic interfaces, and wherein said standardized format is agnostic to said at least two applications, thereby enabling said at least two applications to communicate directly with each other.

7. The method of claim 6, wherein the canonical data model comprises conceptual domain models of various attributes and processes related to different entities or domains.

8. The method of claim 7, wherein the various attributes and processes comprise persistence, data representations, data interrelationships, computing processes, or machine learning algorithms.

9. The method of claim 6, wherein the standardized data format comprises an aggregation and correlation of two or more data formats associated with the plurality of data stores, while ensuring automatic and guaranteed referential integrity of the underlying two or more data formats.

10. The method of claim 9, wherein the standardized data format is automatically exposed and accessible through RESTful interfaces.

11. The method of claim 6, wherein said canonical data model is extensible to allow users of said application development platform to integrate new data formats.
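As an illustration only, and not as part of the claims, the configurable transformers recited in claims 1 and 6 can be pictured as a hub-and-spoke arrangement: each data format has one transformer holding its rules to and from a standardized format, so no format needs a direct converter to any other format. The sketch below is a minimal example under stated assumptions. The format names `vendor_a` and `vendor_b`, their field names, the two-field canonical record, and the helpers `convert` and `converters_needed` are all hypothetical and do not appear in the patent.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

# Assumed canonical record for illustration: {"meter_id": str, "kwh": float}.
Canonical = Dict[str, object]


@dataclass(frozen=True)
class Transformer:
    """One transformer per data format, holding its rules to and from canonical form."""

    to_canonical: Callable[[dict], Canonical]
    from_canonical: Callable[[Canonical], dict]


# Hypothetical formats: vendor_a reports watt-hours, vendor_b reports kilowatt-hours.
TRANSFORMERS: Dict[str, Transformer] = {
    "vendor_a": Transformer(
        to_canonical=lambda r: {"meter_id": r["id"], "kwh": r["wh"] / 1000},
        from_canonical=lambda c: {"id": c["meter_id"], "wh": c["kwh"] * 1000},
    ),
    "vendor_b": Transformer(
        to_canonical=lambda r: {"meter_id": r["meter"], "kwh": r["energy_kwh"]},
        from_canonical=lambda c: {"meter": c["meter_id"], "energy_kwh": c["kwh"]},
    ),
}


def convert(record: dict, source: str, target: str) -> dict:
    """Convert between two formats by passing through the canonical form."""
    canonical = TRANSFORMERS[source].to_canonical(record)
    return TRANSFORMERS[target].from_canonical(canonical)


def converters_needed(n_formats: int) -> Tuple[int, int]:
    """Return (direct one-way pairwise converters, transformers via the canonical hub)."""
    return n_formats * (n_formats - 1), n_formats
```

The count helper shows the design motivation: with five formats, direct conversion needs twenty one-way converters, while the canonical approach needs five transformers, and adding a sixth format adds one transformer instead of ten converters.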

Description

TECHNICAL FIELD

The present disclosure relates to big data analytics, data integration, processing, machine learning, and more particularly relates to an enterprise Internet-of-Things (IoT) application development platform.

BRIEF DESCRIPTION OF THE DRAWINGS

Non-limiting and non-exhaustive embodiments of the present disclosure are described with reference to the following figures, wherein like reference numerals refer to like parts throughout the various figures unless otherwise specified.

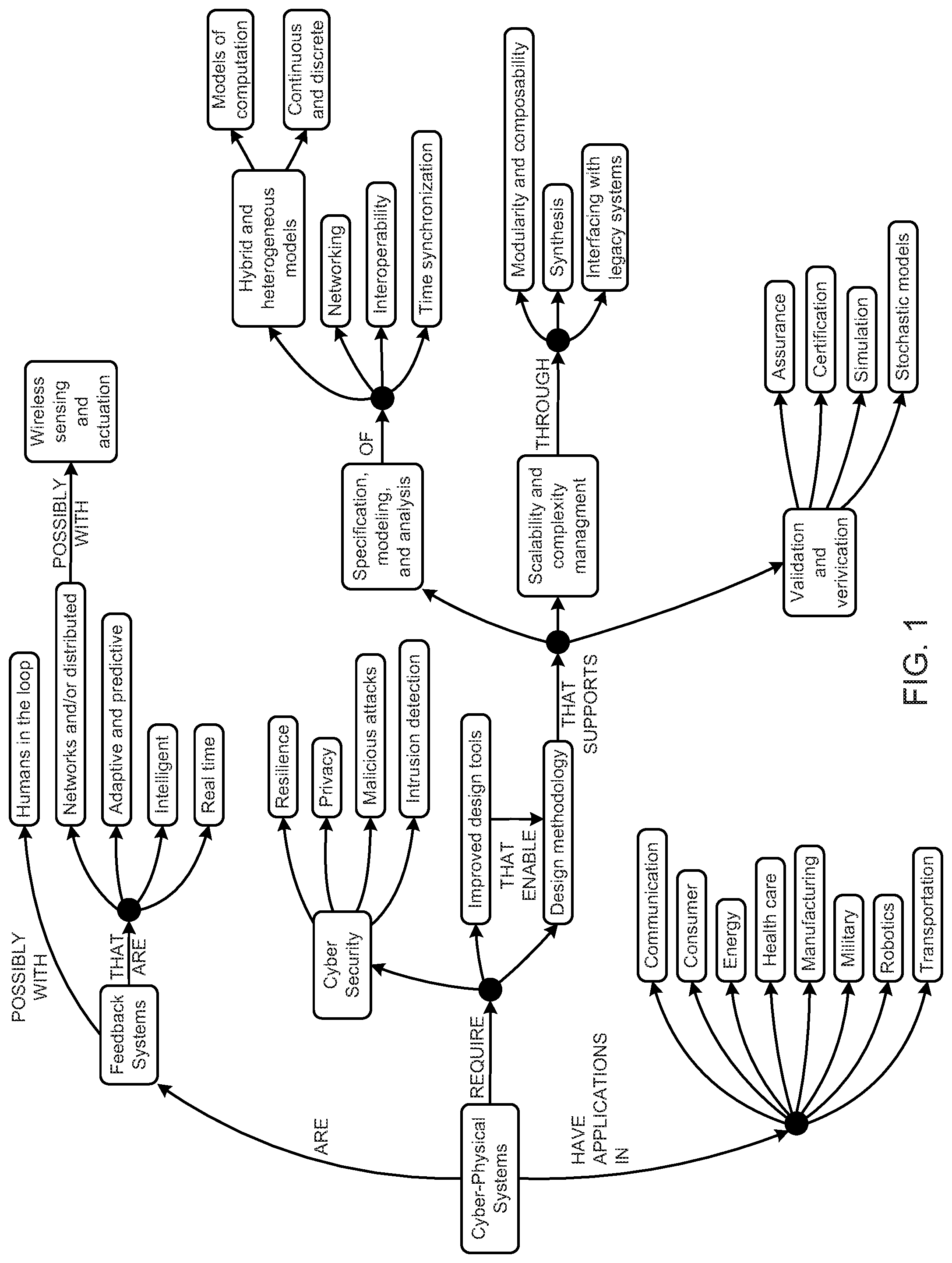

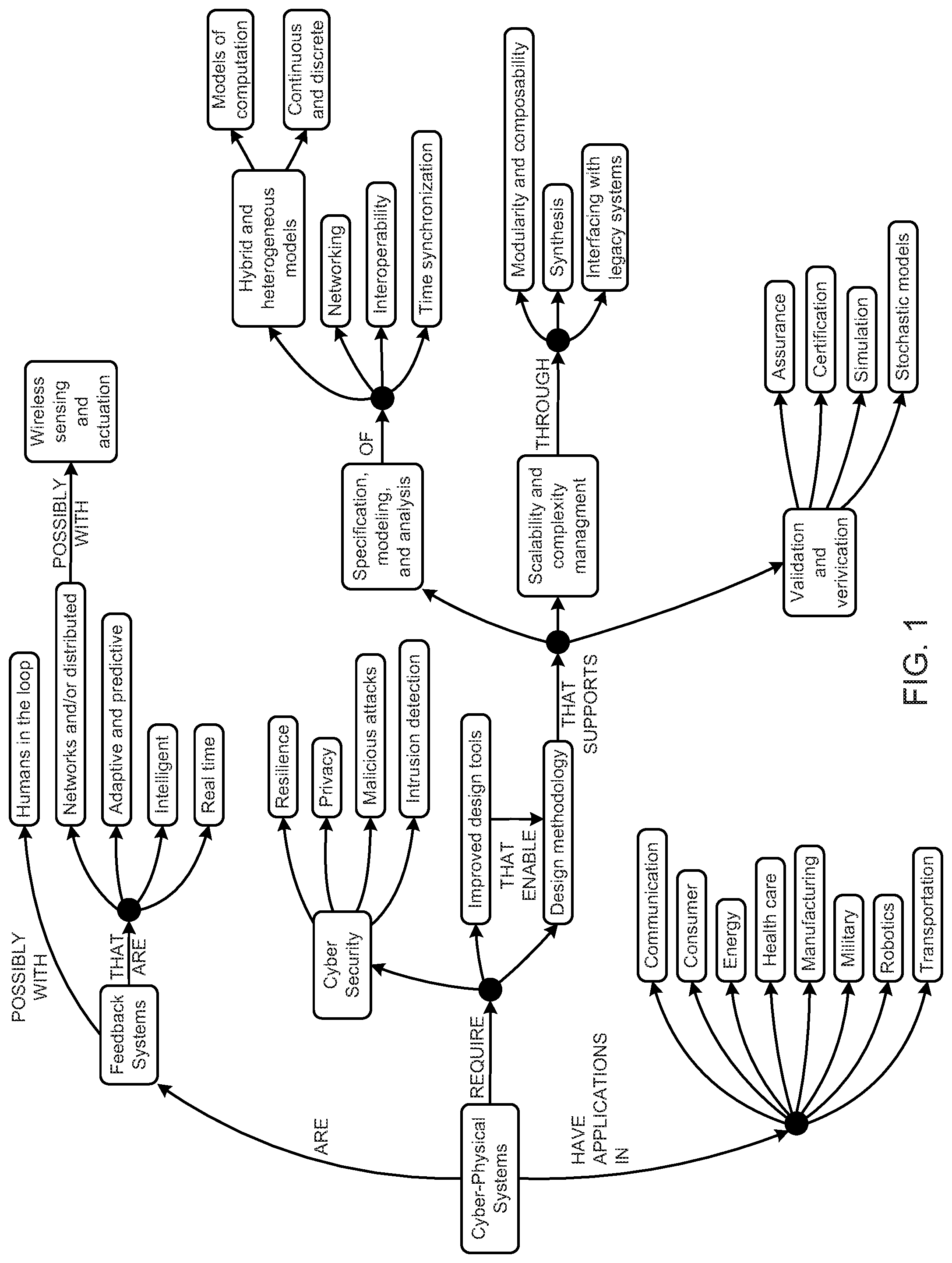

FIG. 1 is a schematic block diagram illustrating a concept map for a cyber-physical system.

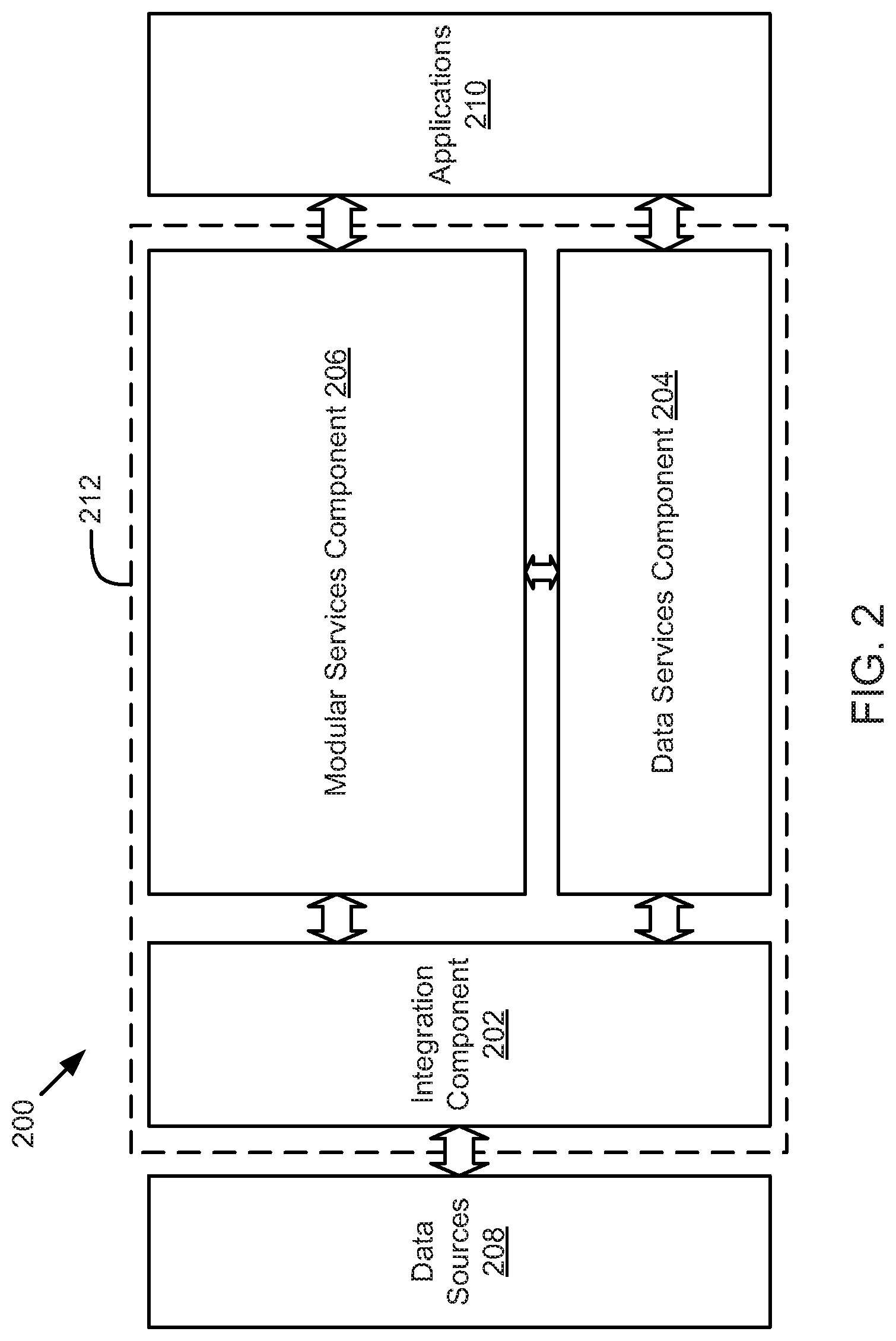

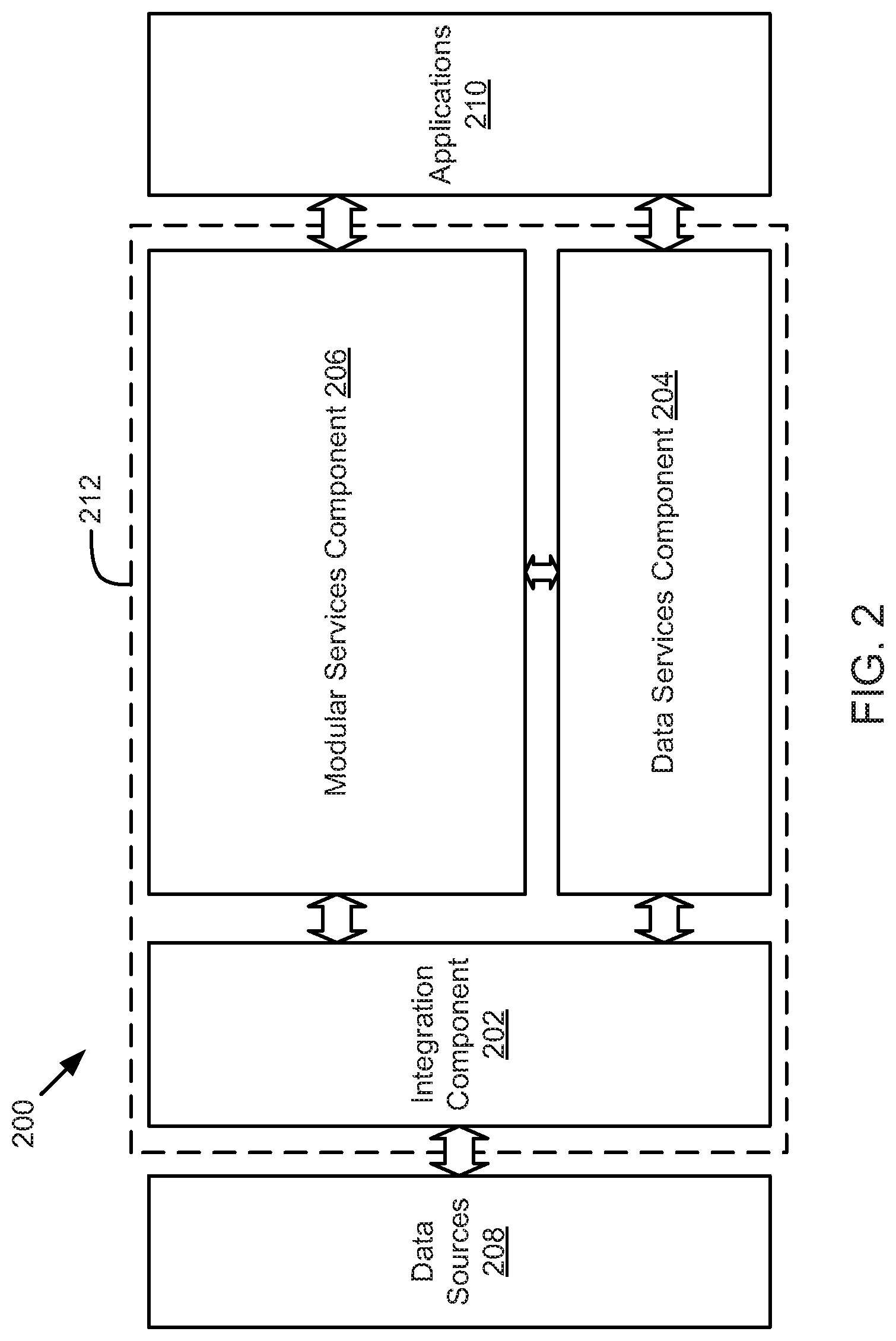

FIG. 2 is a schematic block diagram illustrating a system for integrating, processing, and abstracting data related to an enterprise Internet-of-Things application development platform, according to one embodiment.

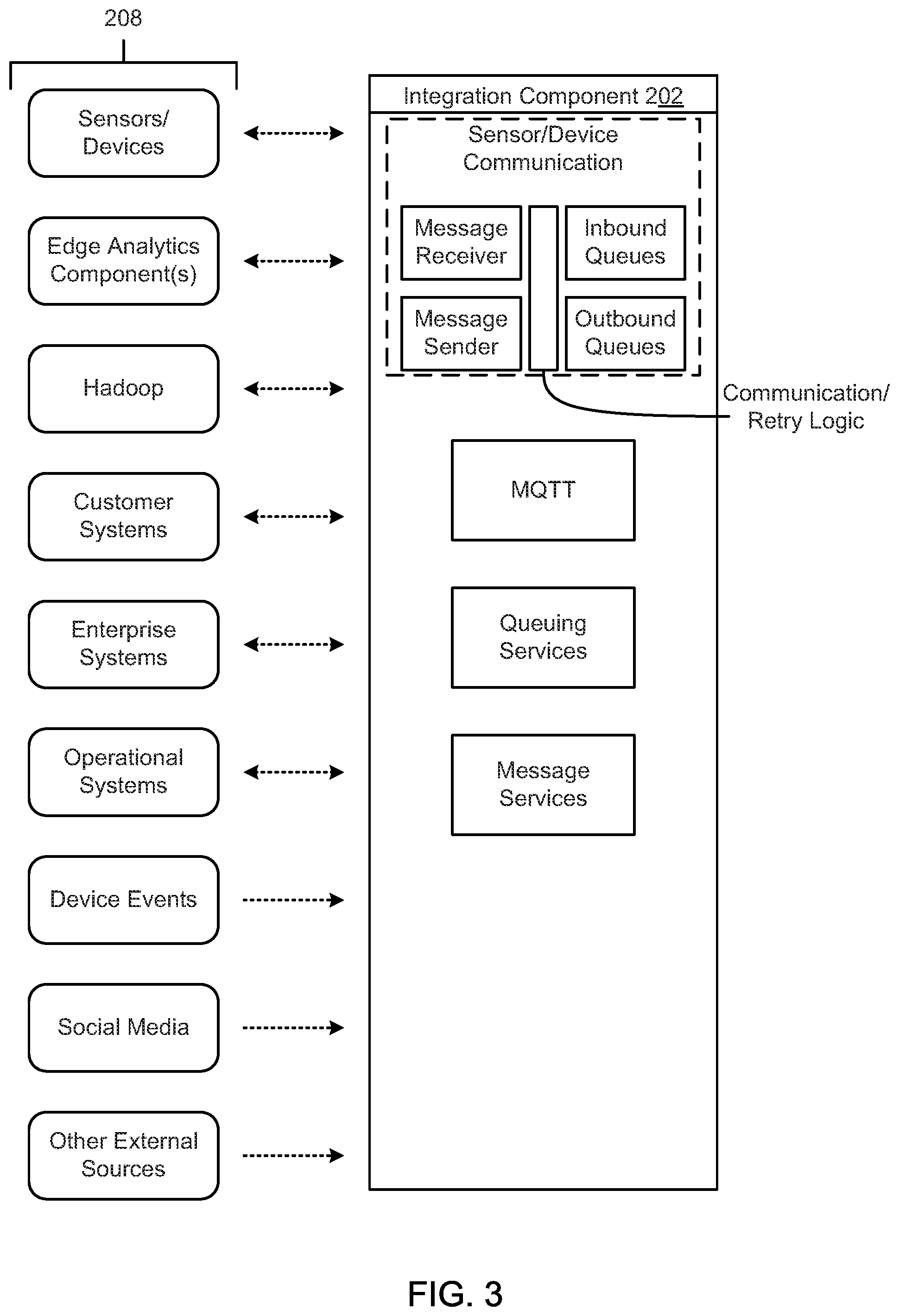

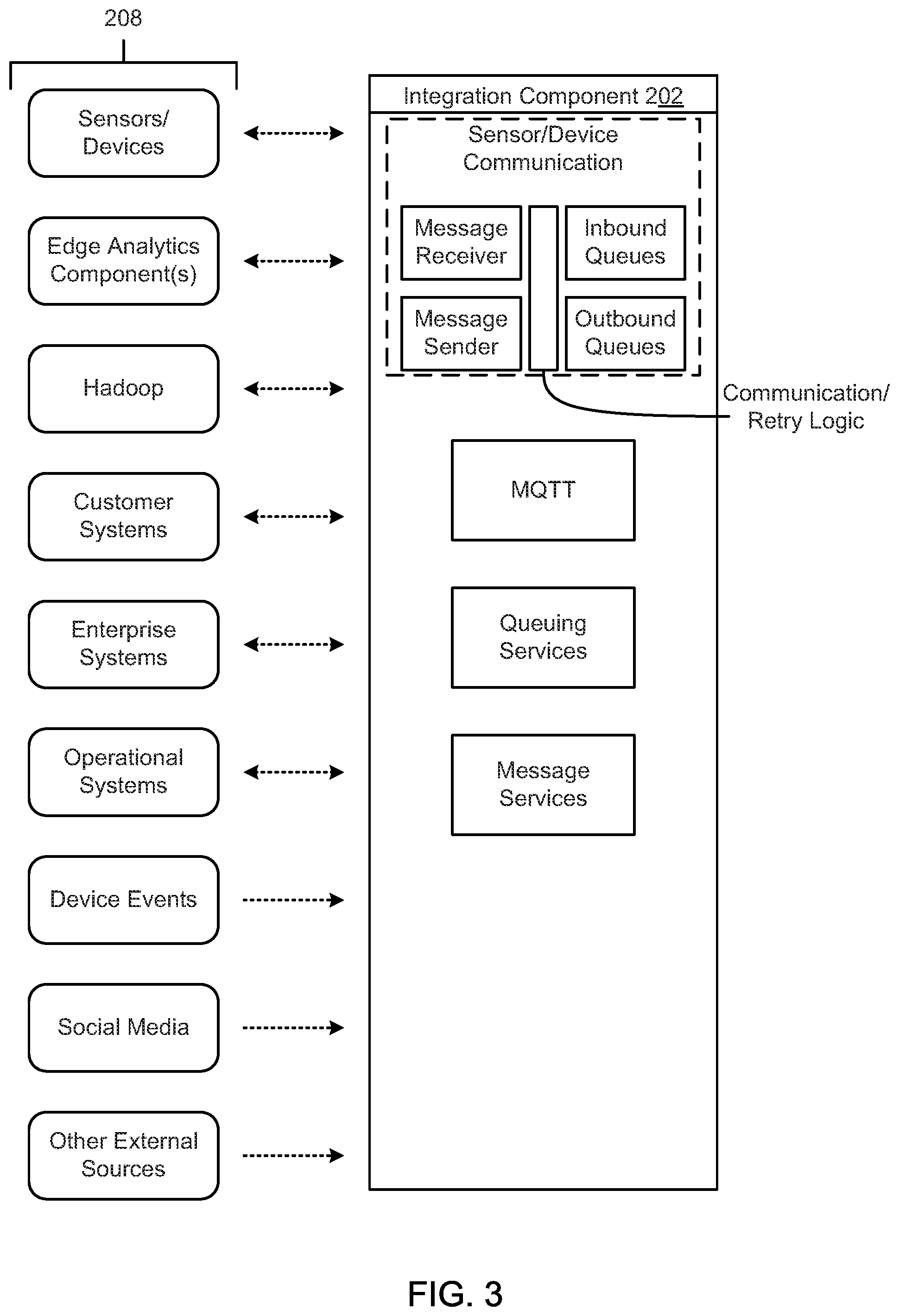

FIG. 3 is a schematic block diagram illustrating an integration component, according to one embodiment.

FIG. 4 is a schematic block diagram illustrating a data services component, according to one embodiment.

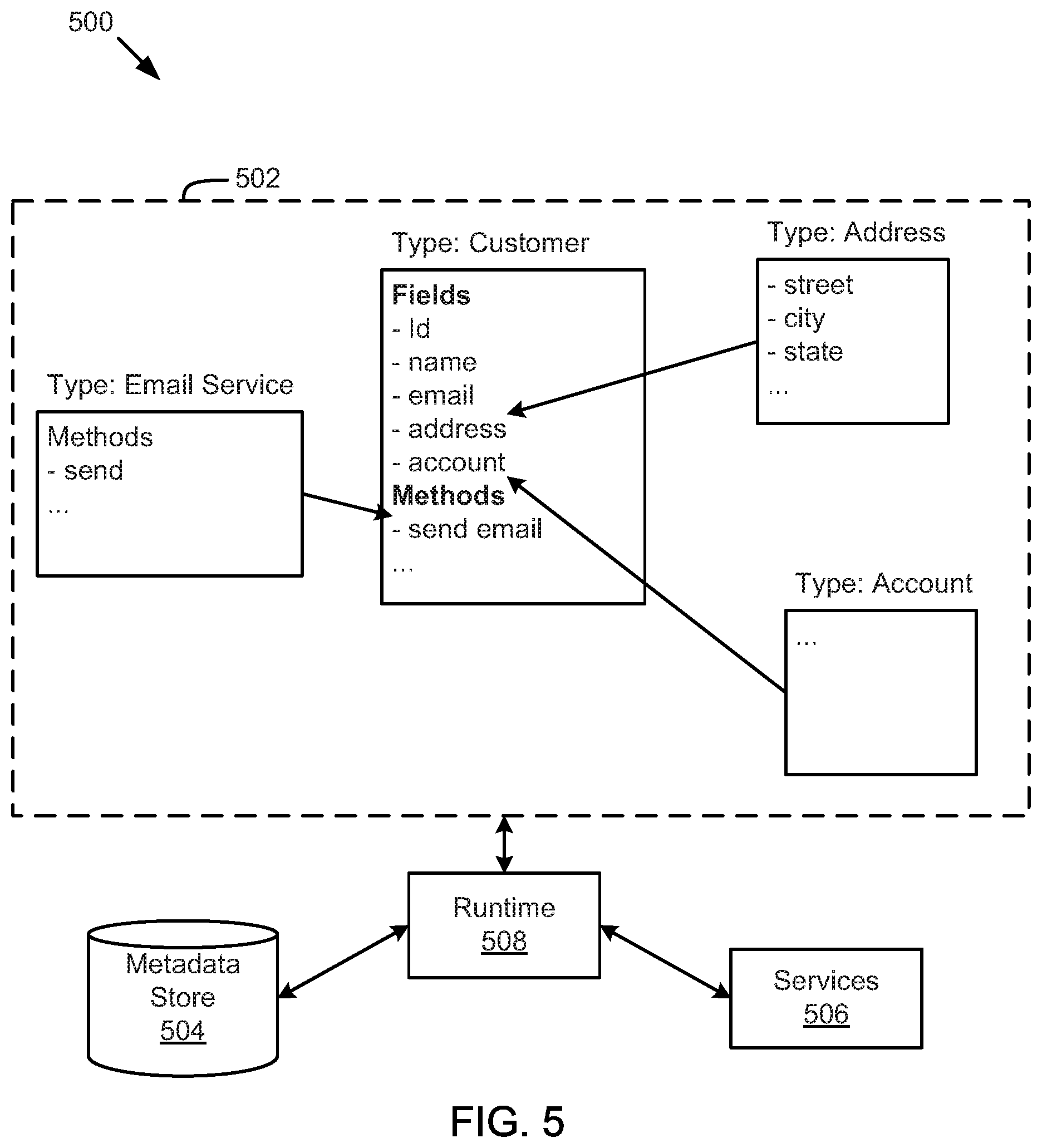

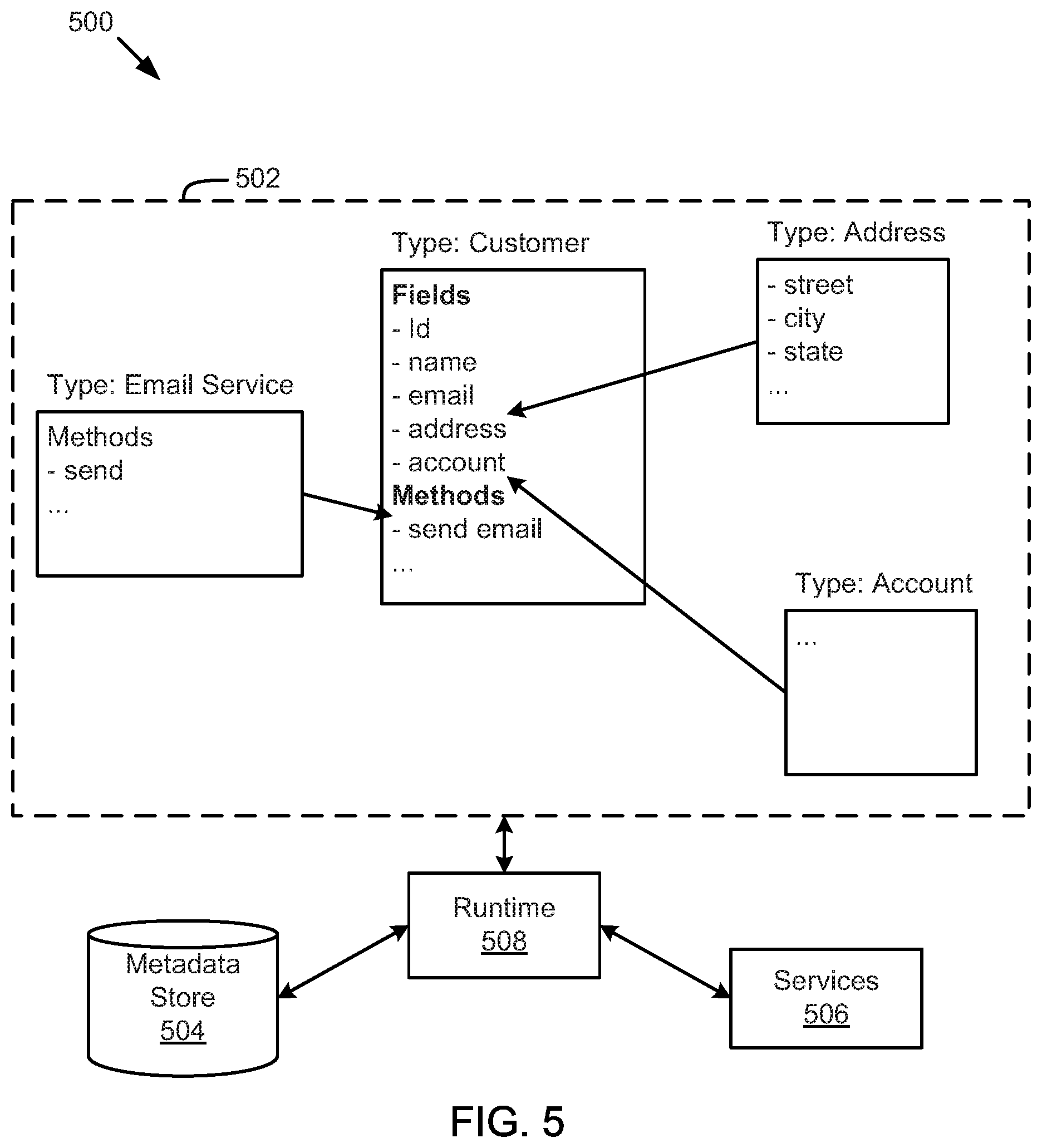

FIG. 5 is a schematic block diagram illustrating components of a type system for a distributed system, according to one embodiment.

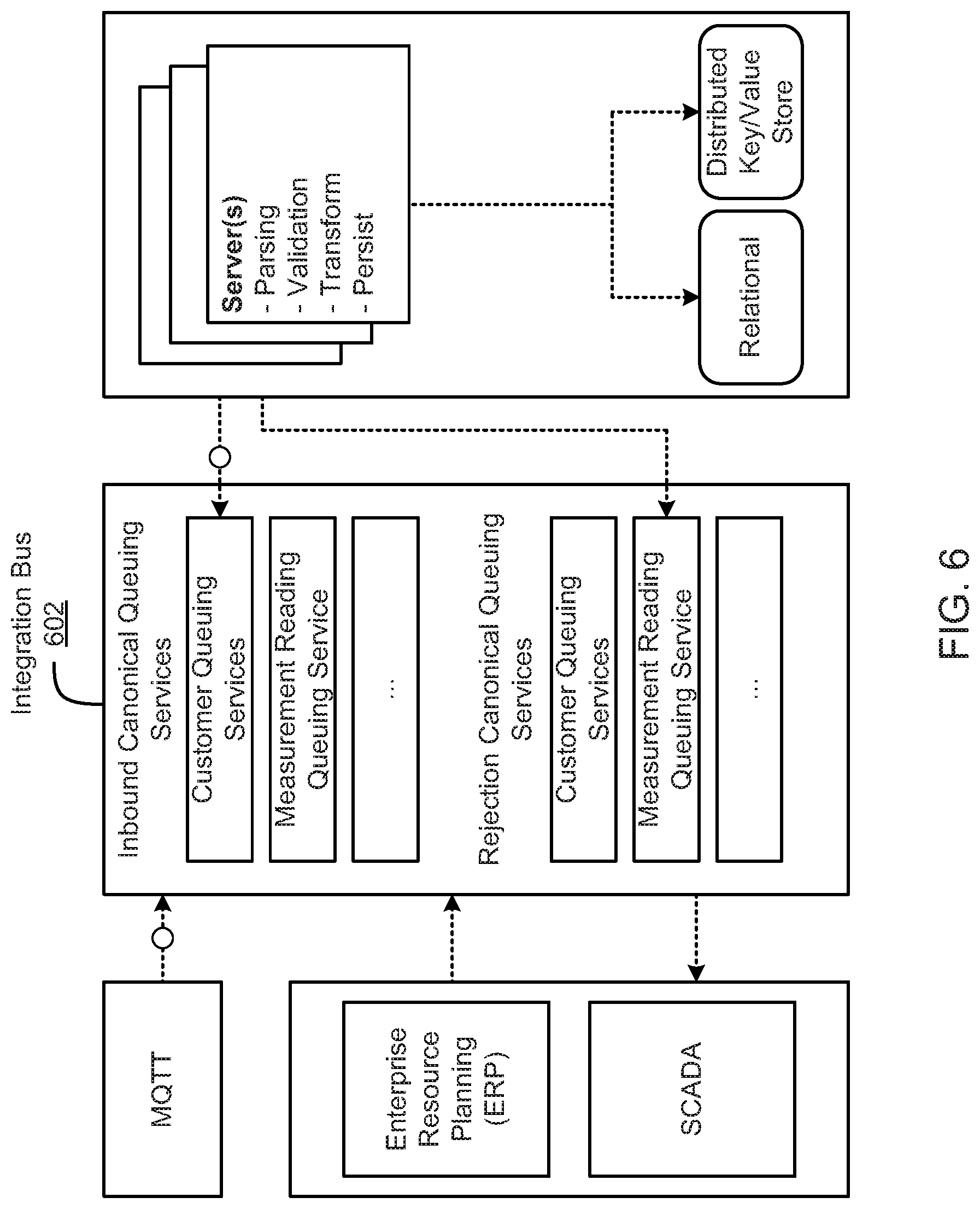

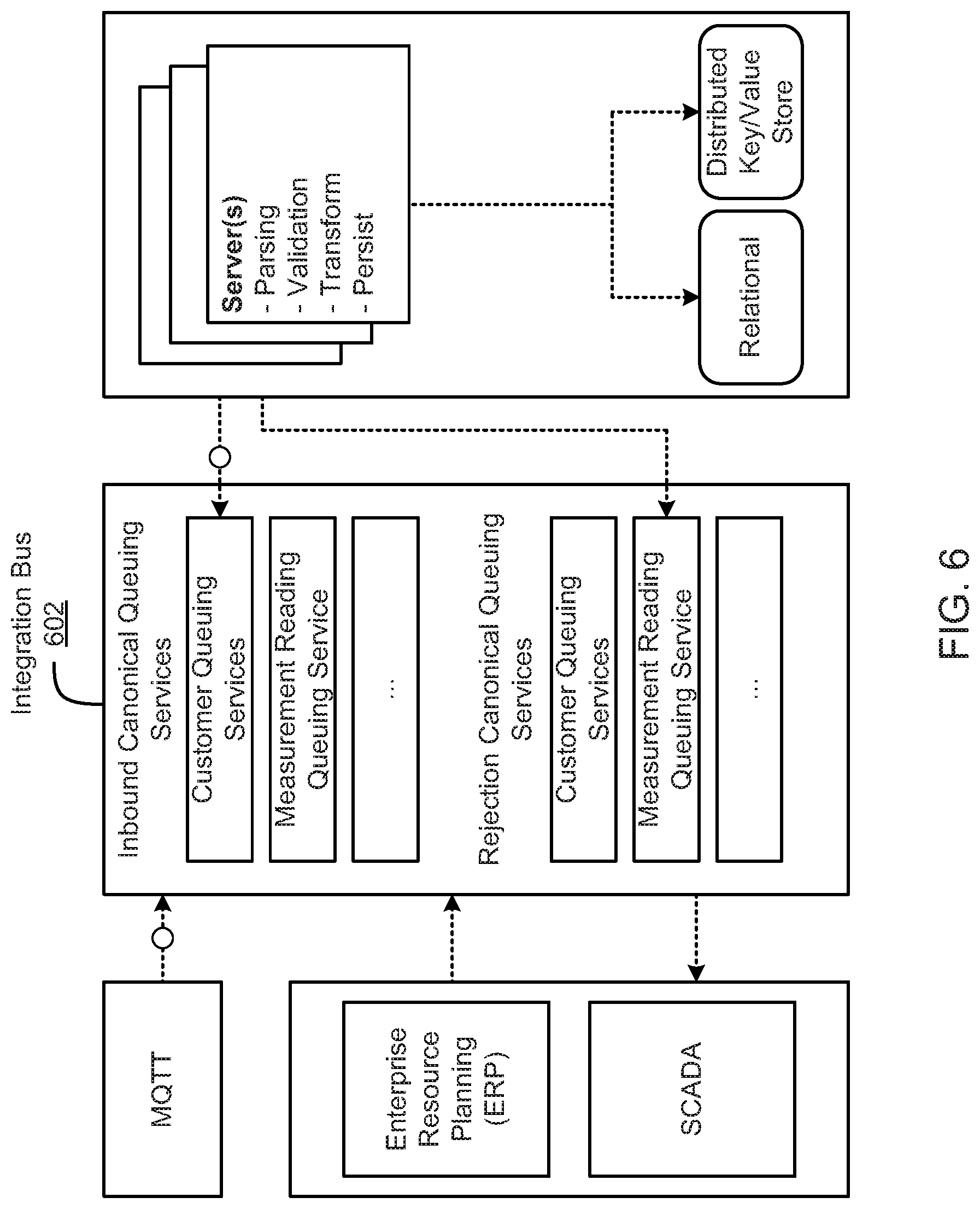

FIG. 6 is a schematic block diagram illustrating data flow on an integration bus, according to one embodiment.

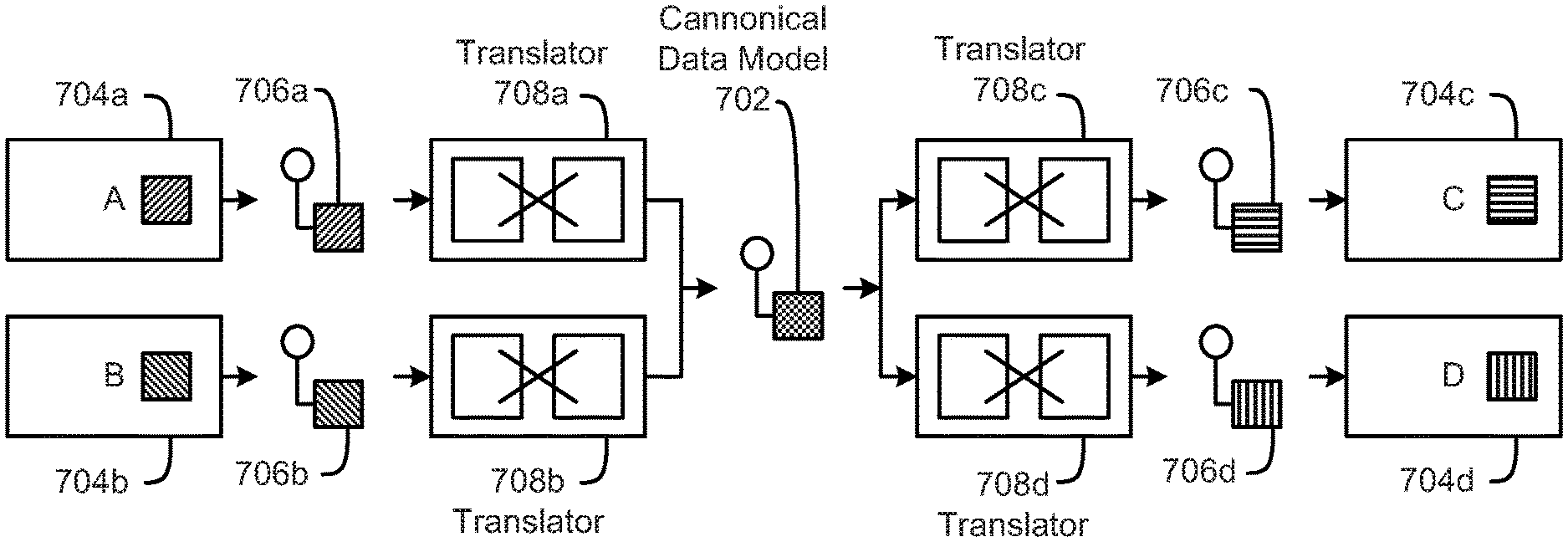

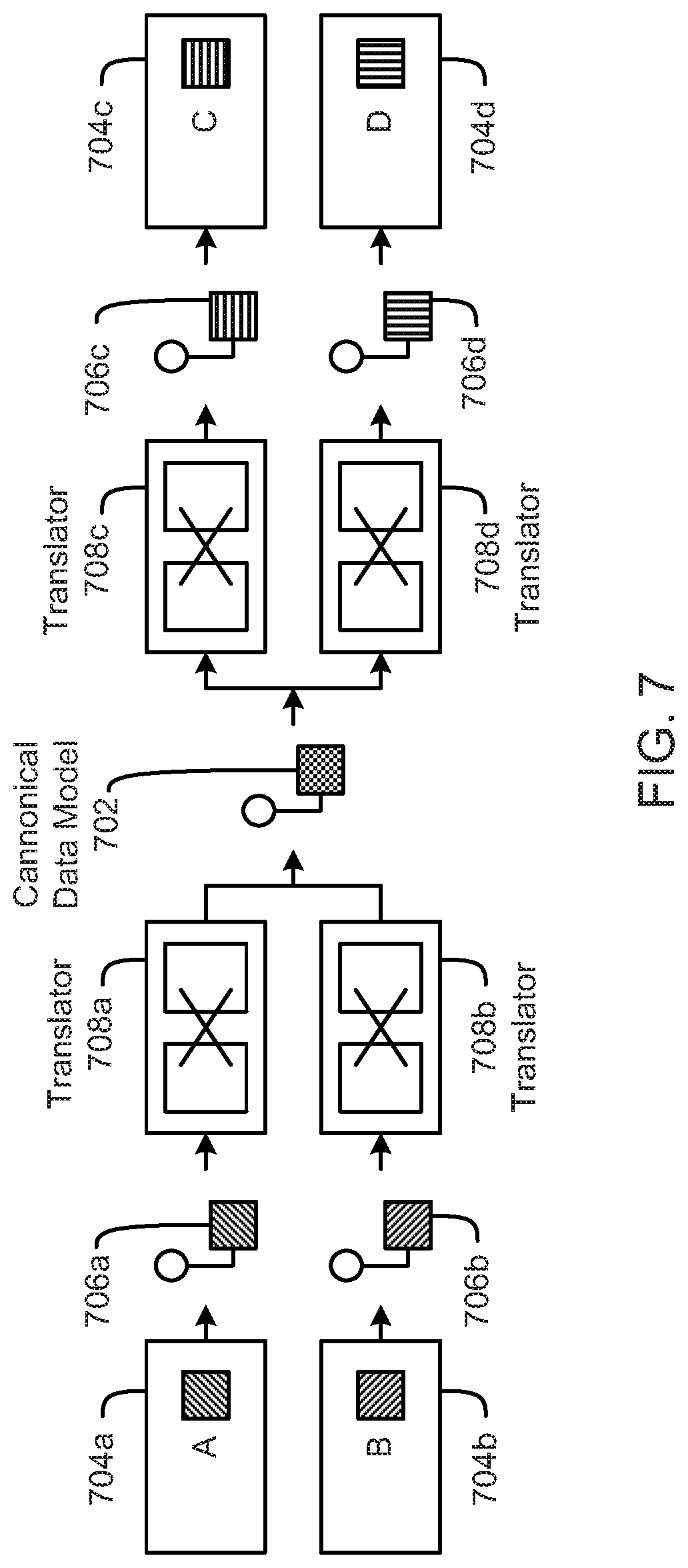

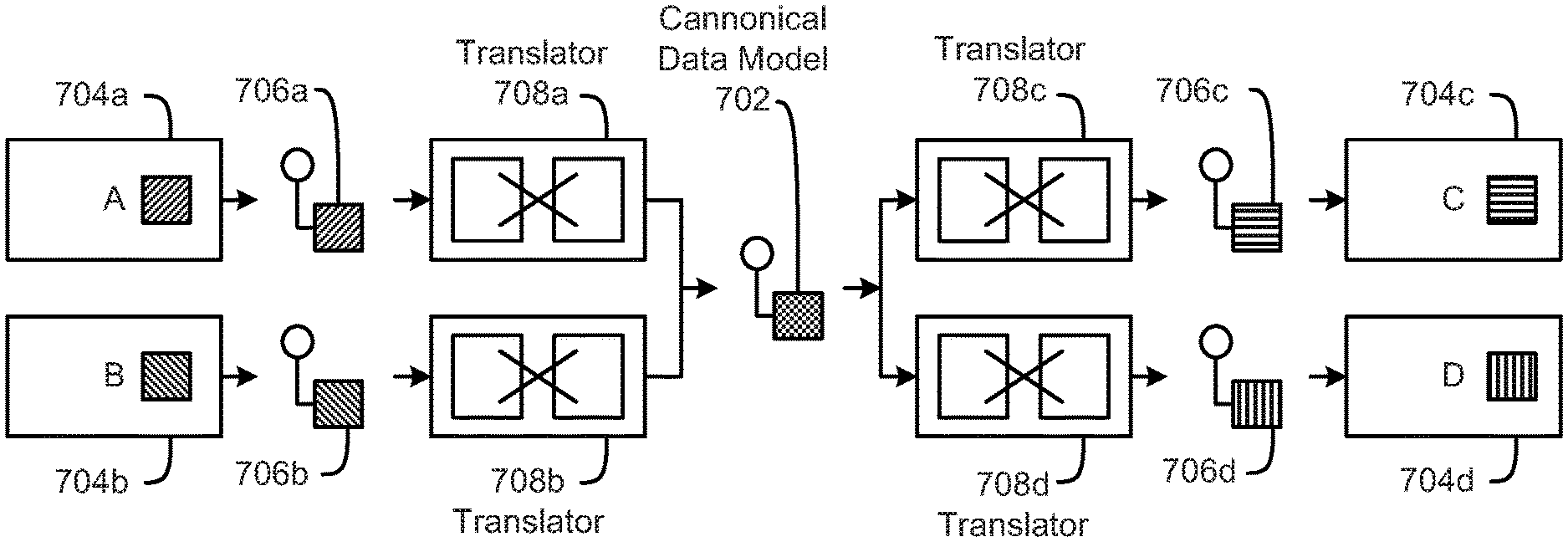

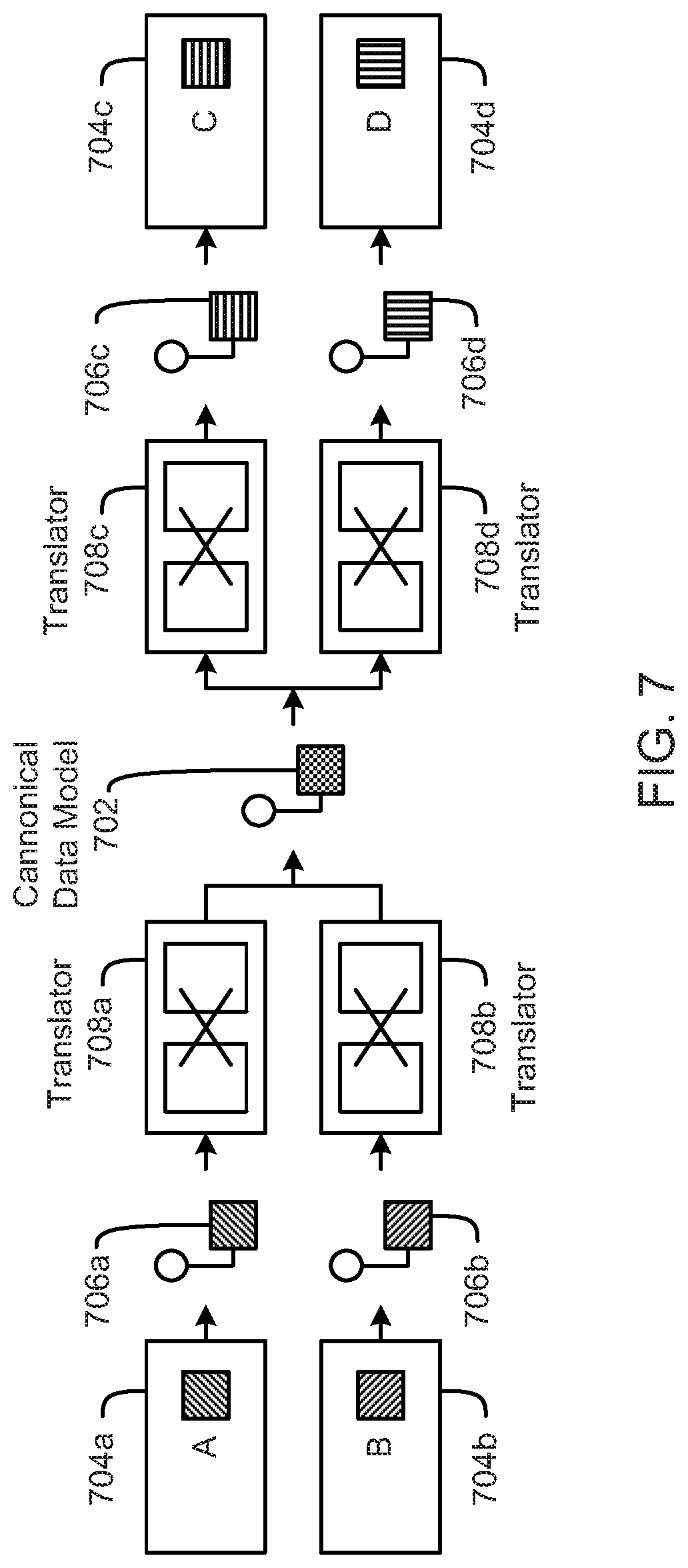

FIG. 7 is a schematic block diagram illustrating data transformations, according to one embodiment.

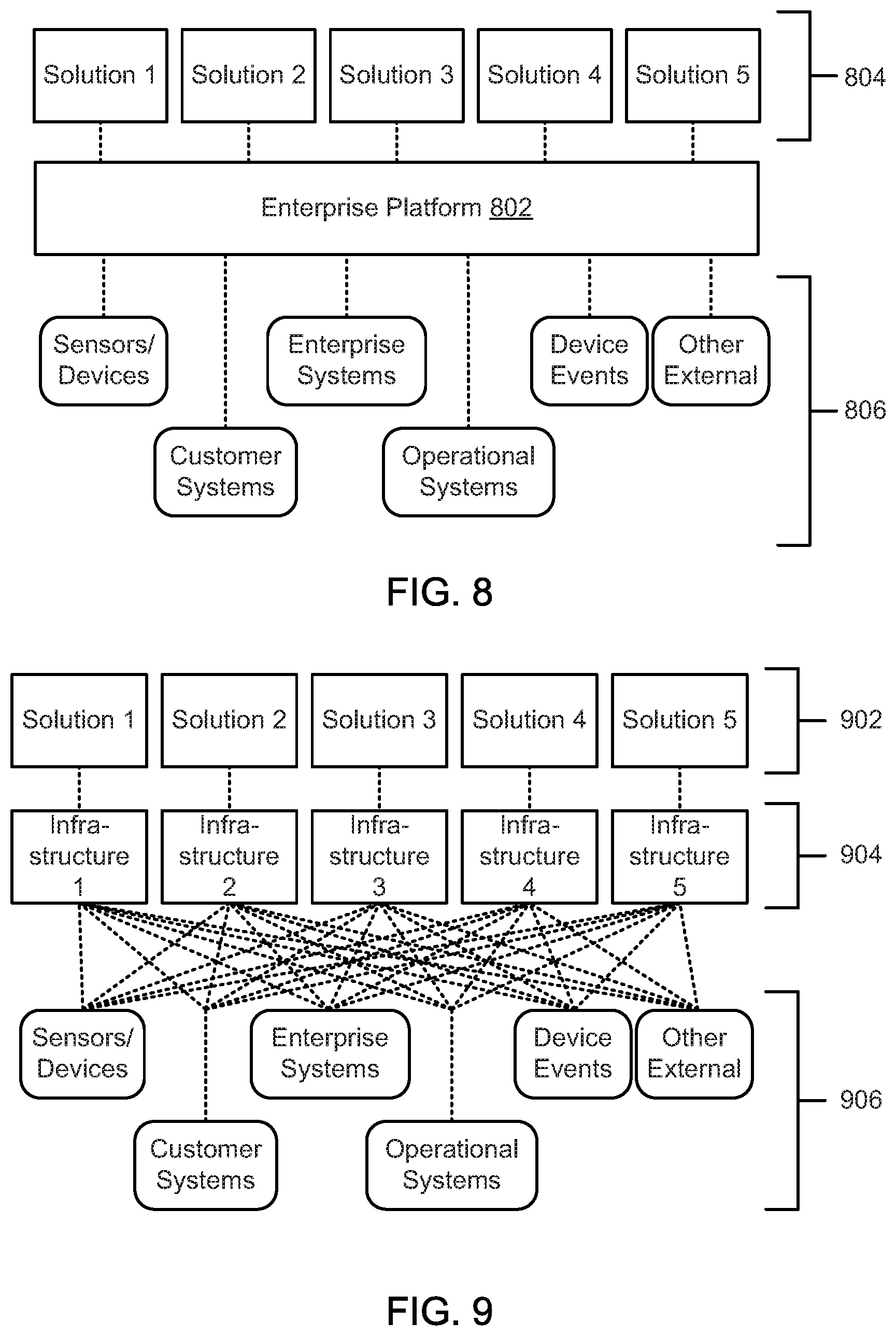

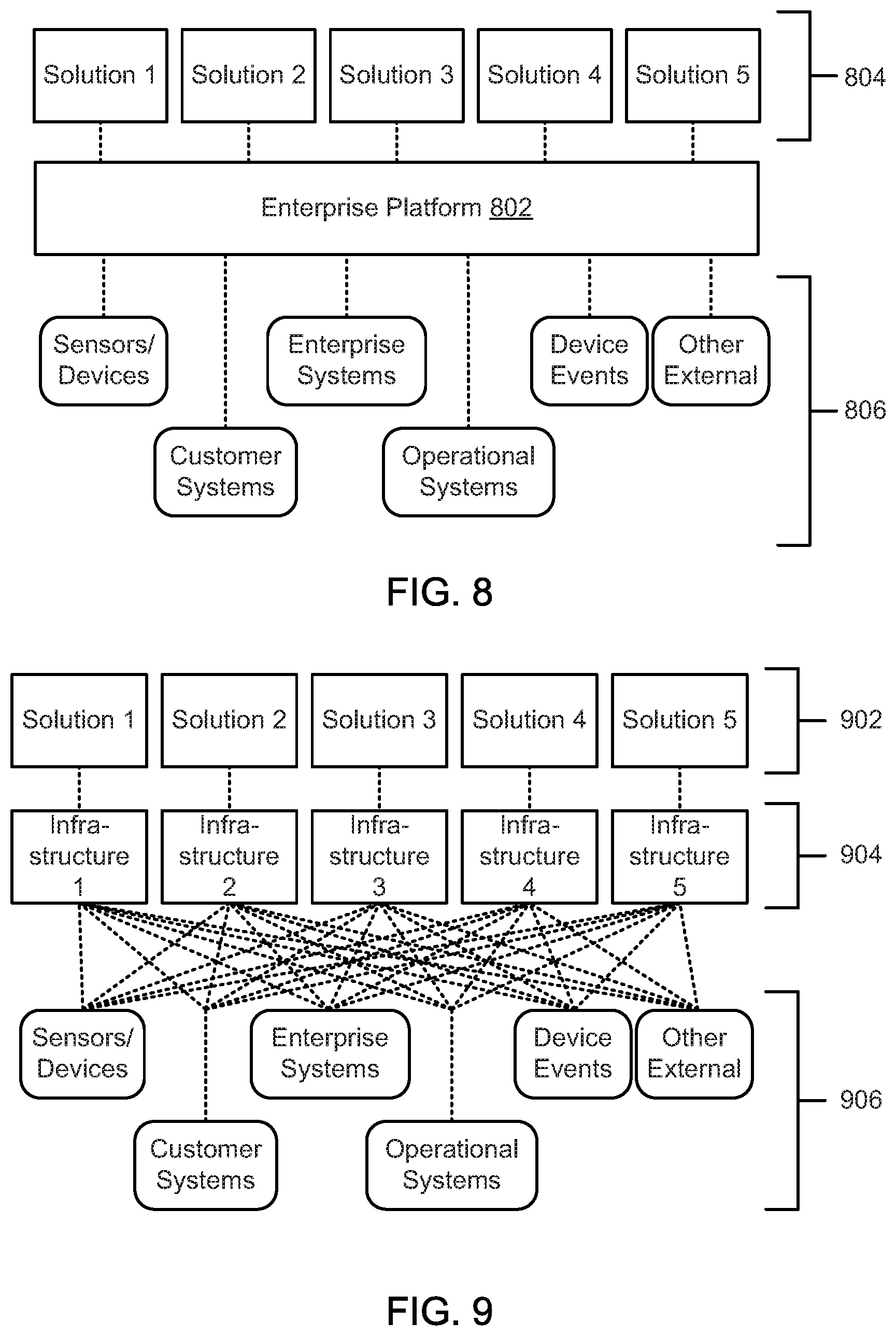

FIG. 8 is a schematic block diagram illustrating data integrations for an enterprise platform, according to one embodiment.

FIG. 9 is a schematic block diagram illustrating data integrations for individual point solutions, according to one embodiment.

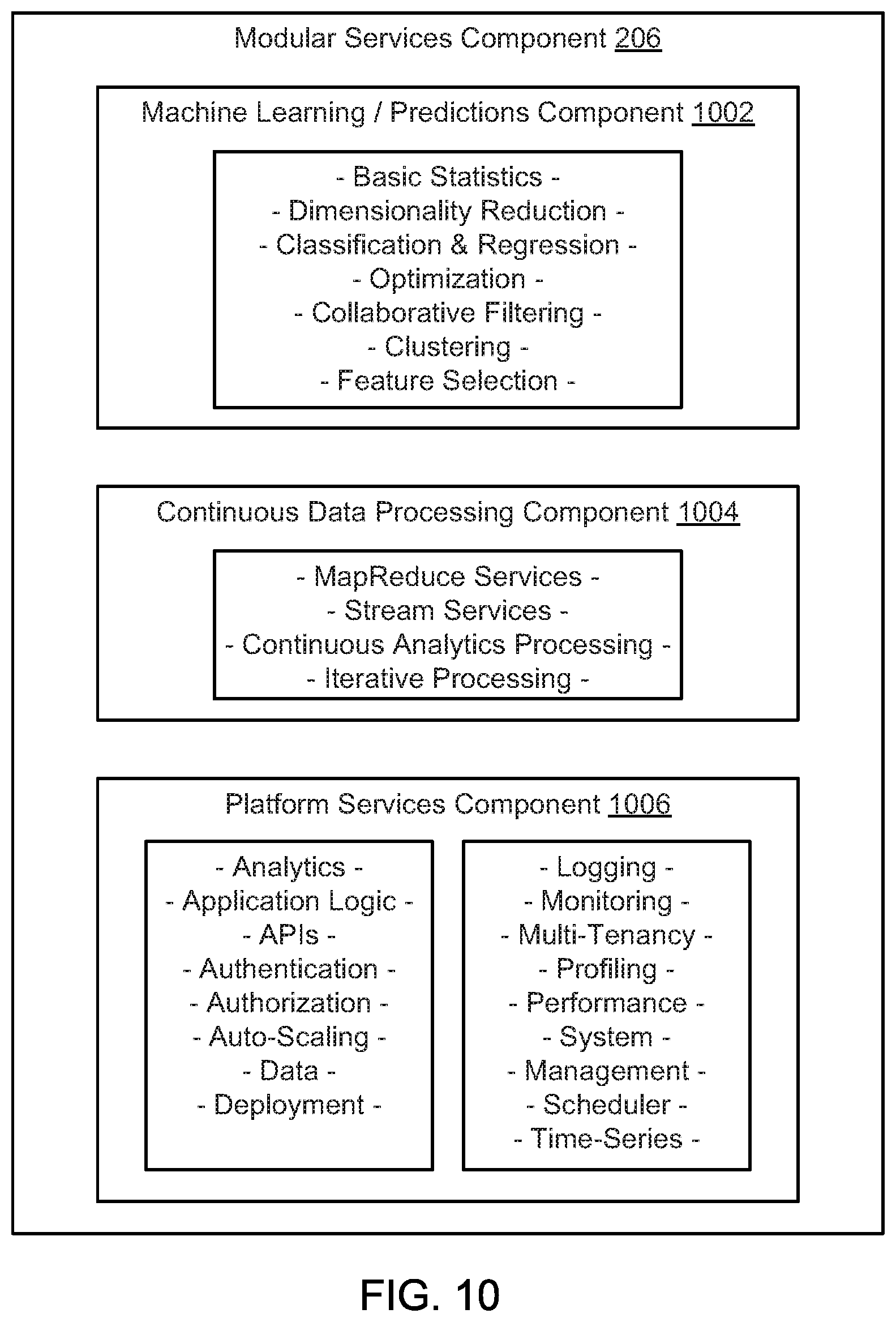

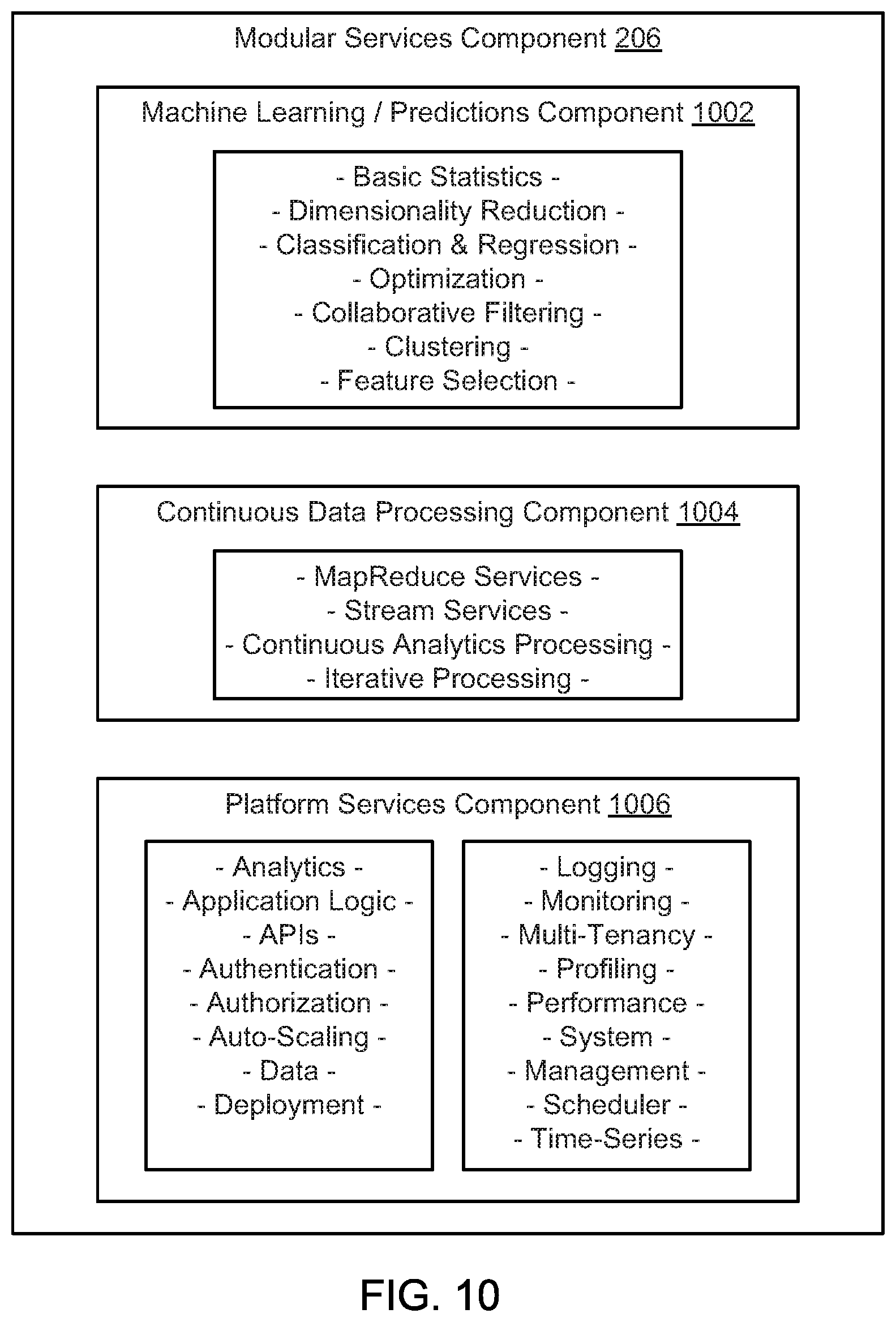

FIG. 10 is a schematic block diagram illustrating a modular services component, according to one embodiment.

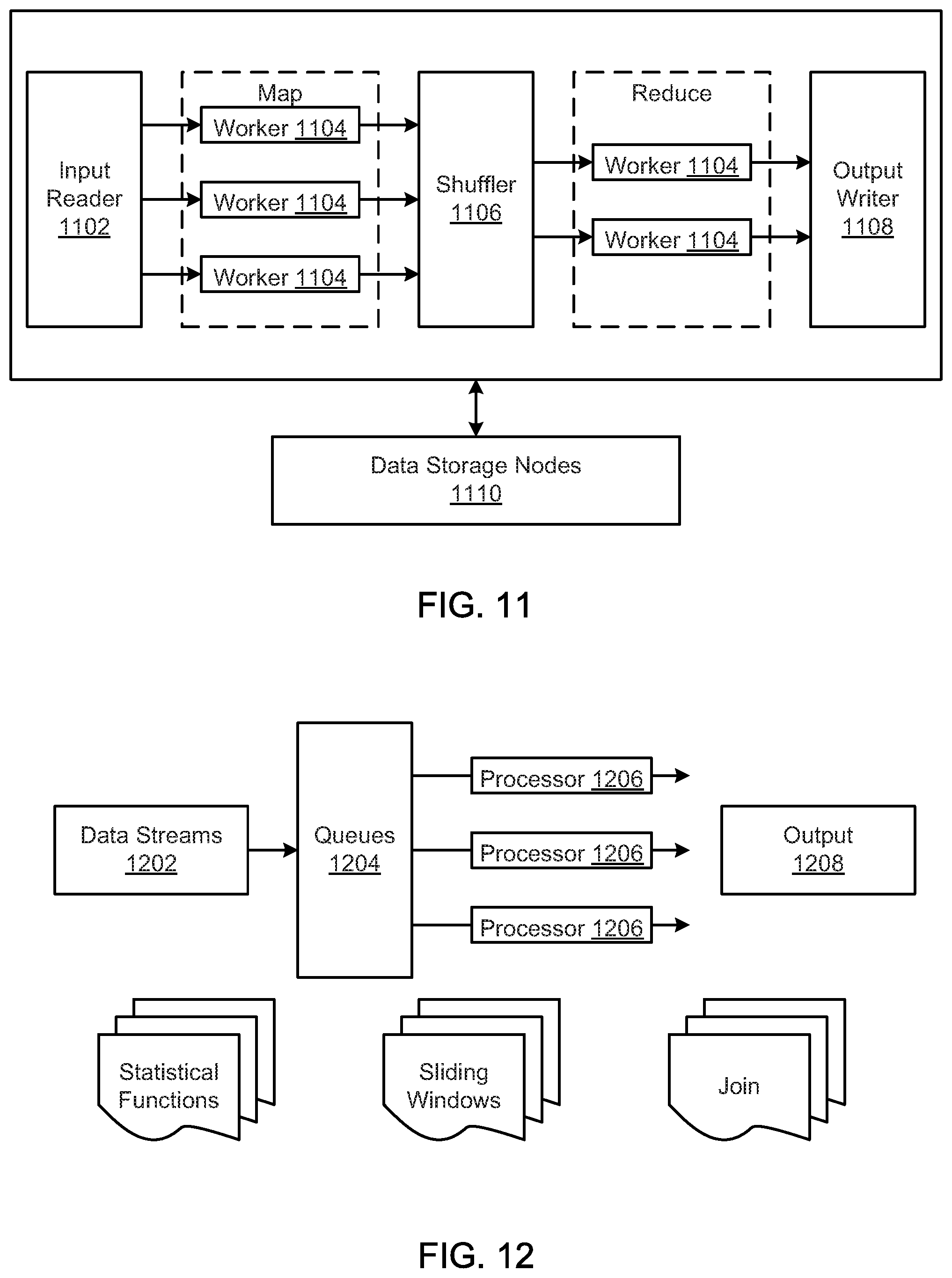

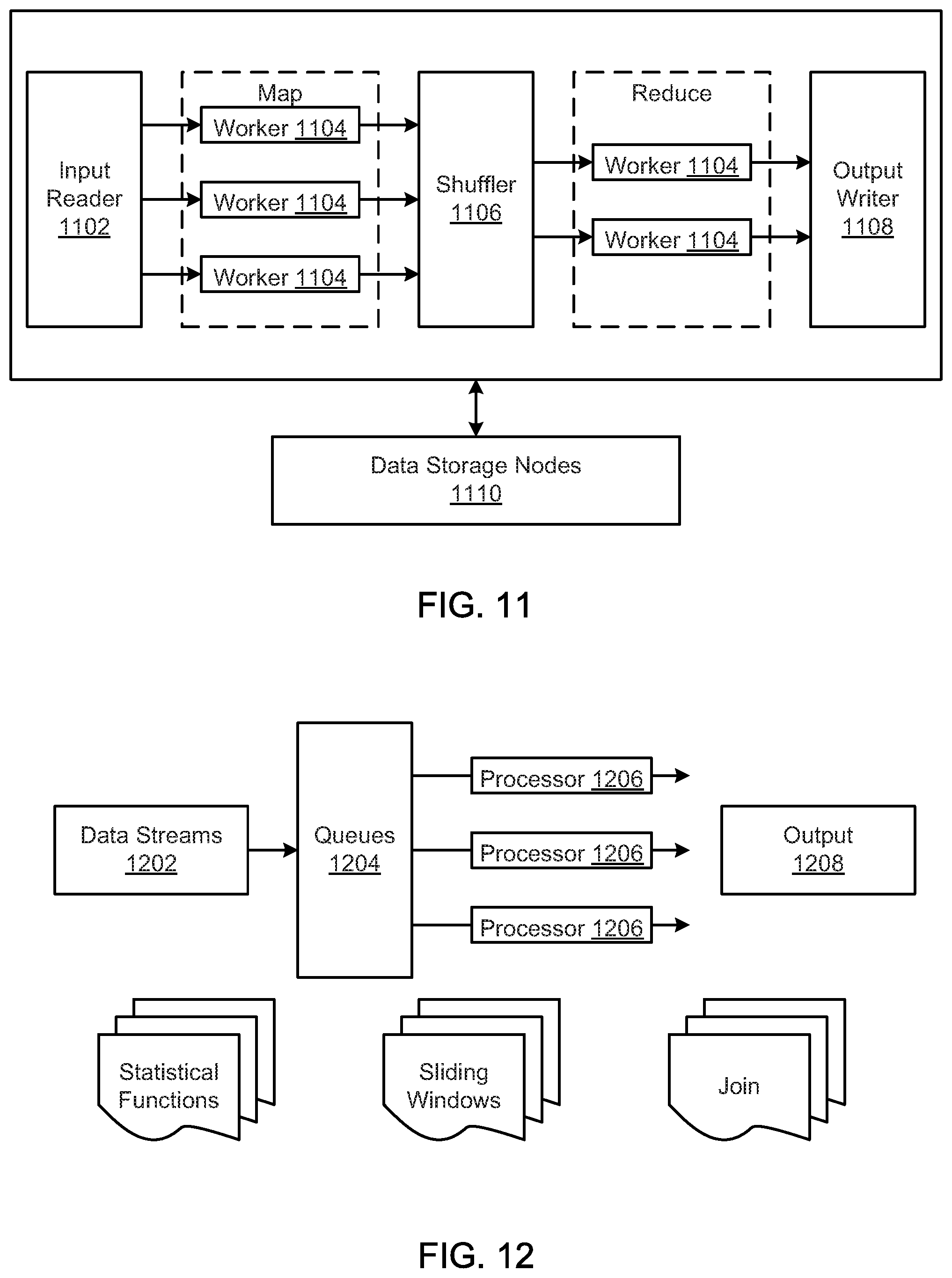

FIG. 11 is a schematic block diagram illustrating a MapReduce algorithm, according to one embodiment.

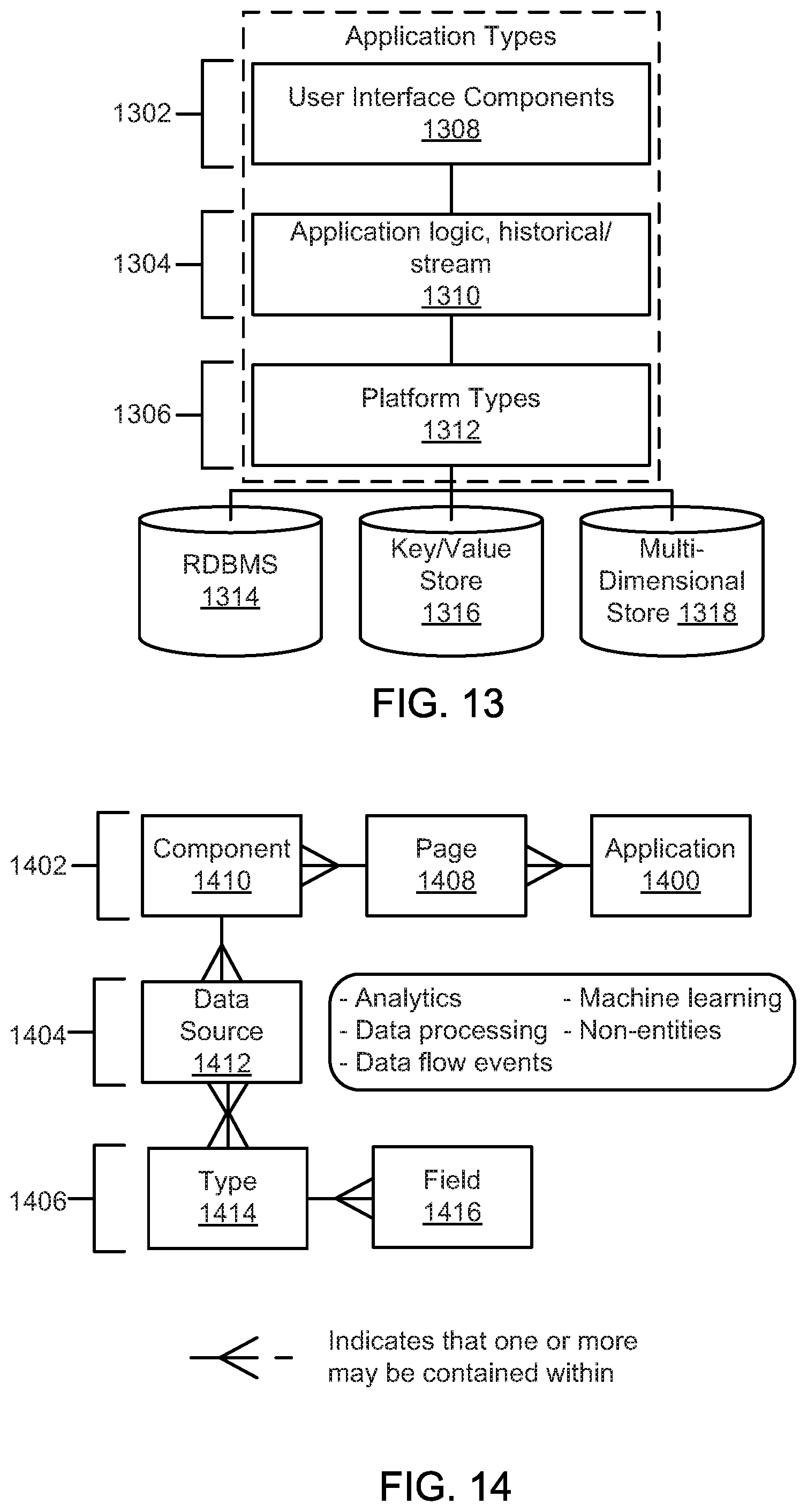

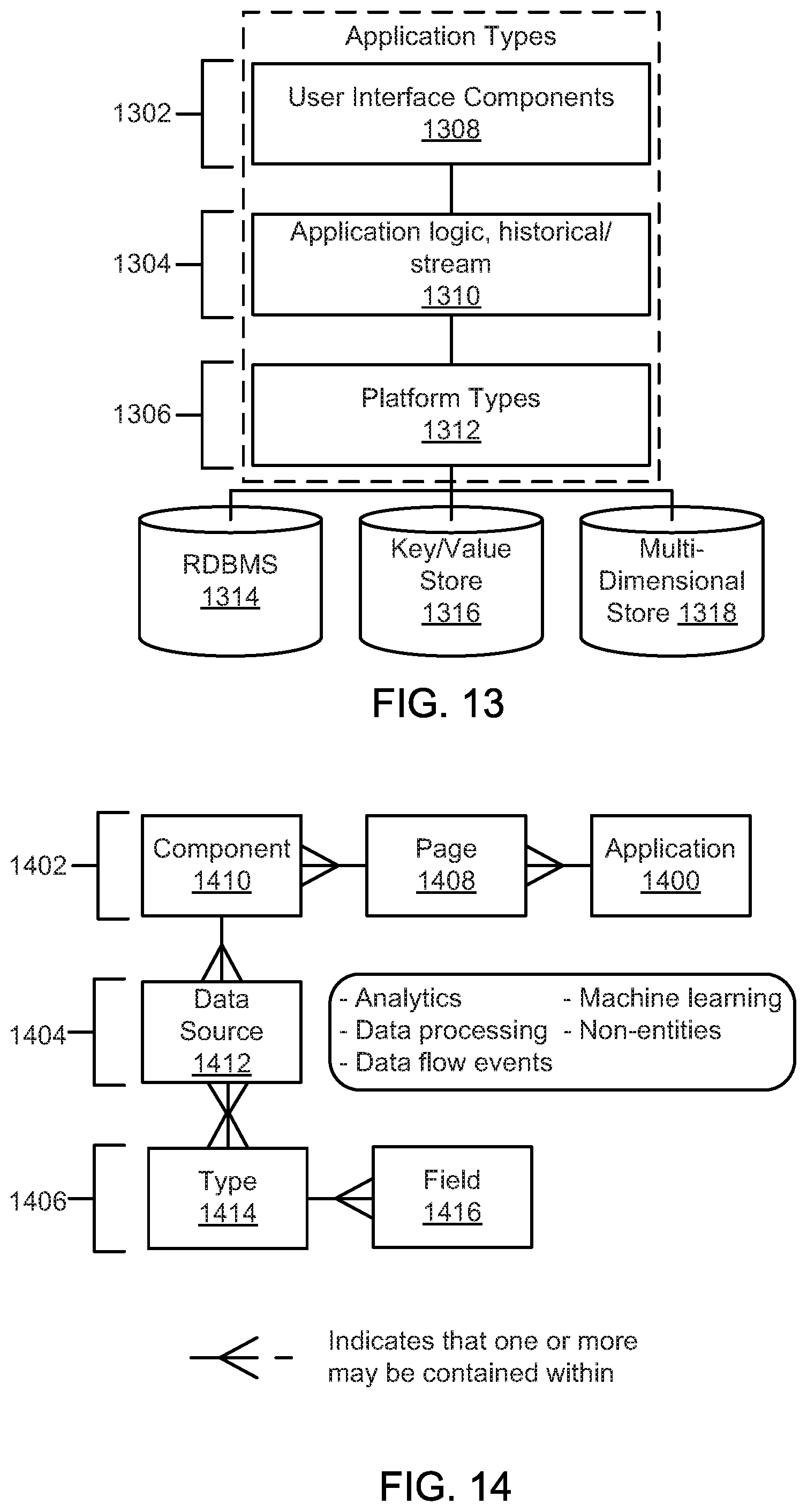

FIG. 12 is a schematic block diagram illustrating stream processing, according to one embodiment.

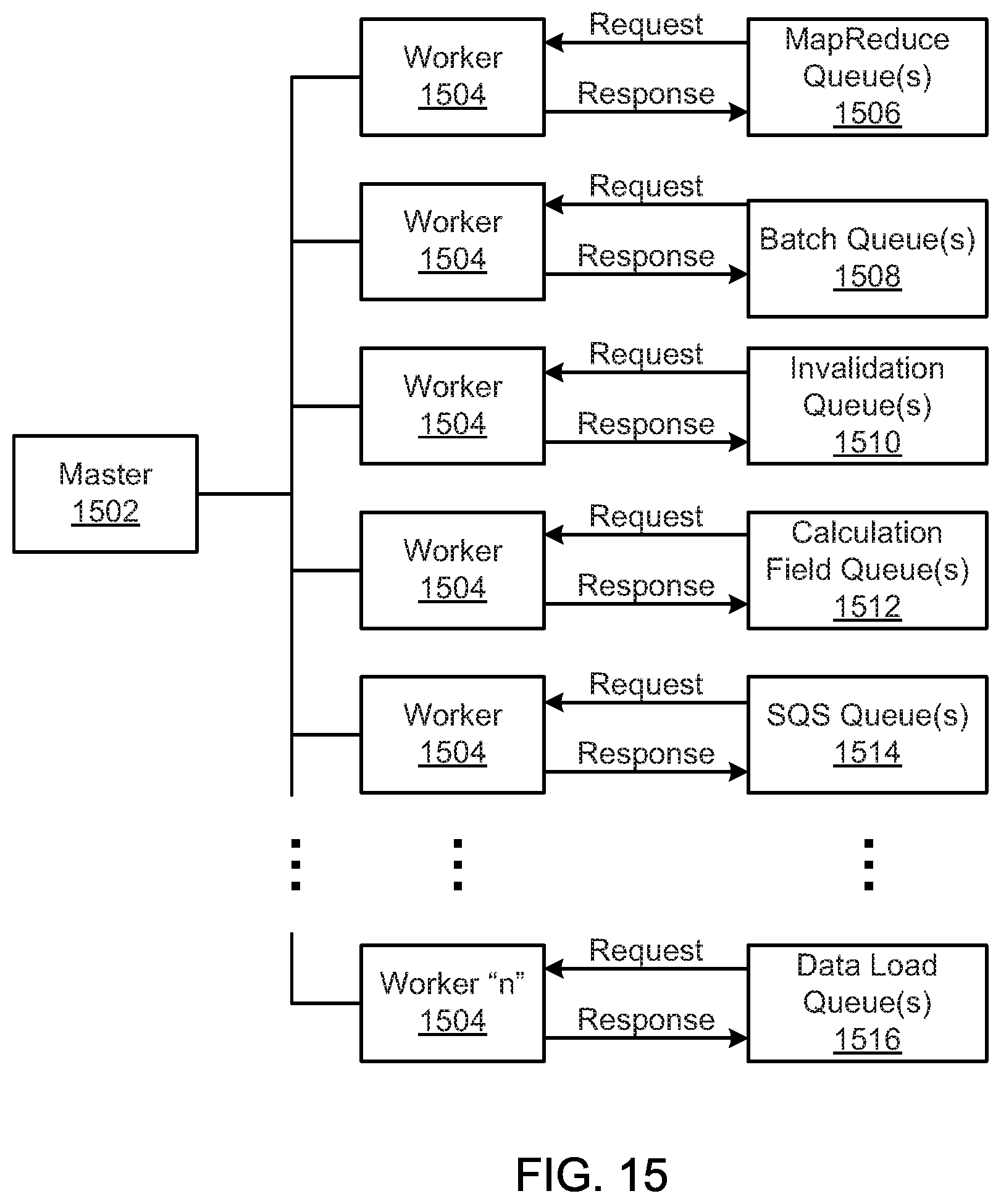

FIG. 13 is a schematic block diagram illustrating a tiered architecture of an application, according to one embodiment.

FIG. 14 is a schematic block diagram illustrating details for layers of an application, according to one embodiment.

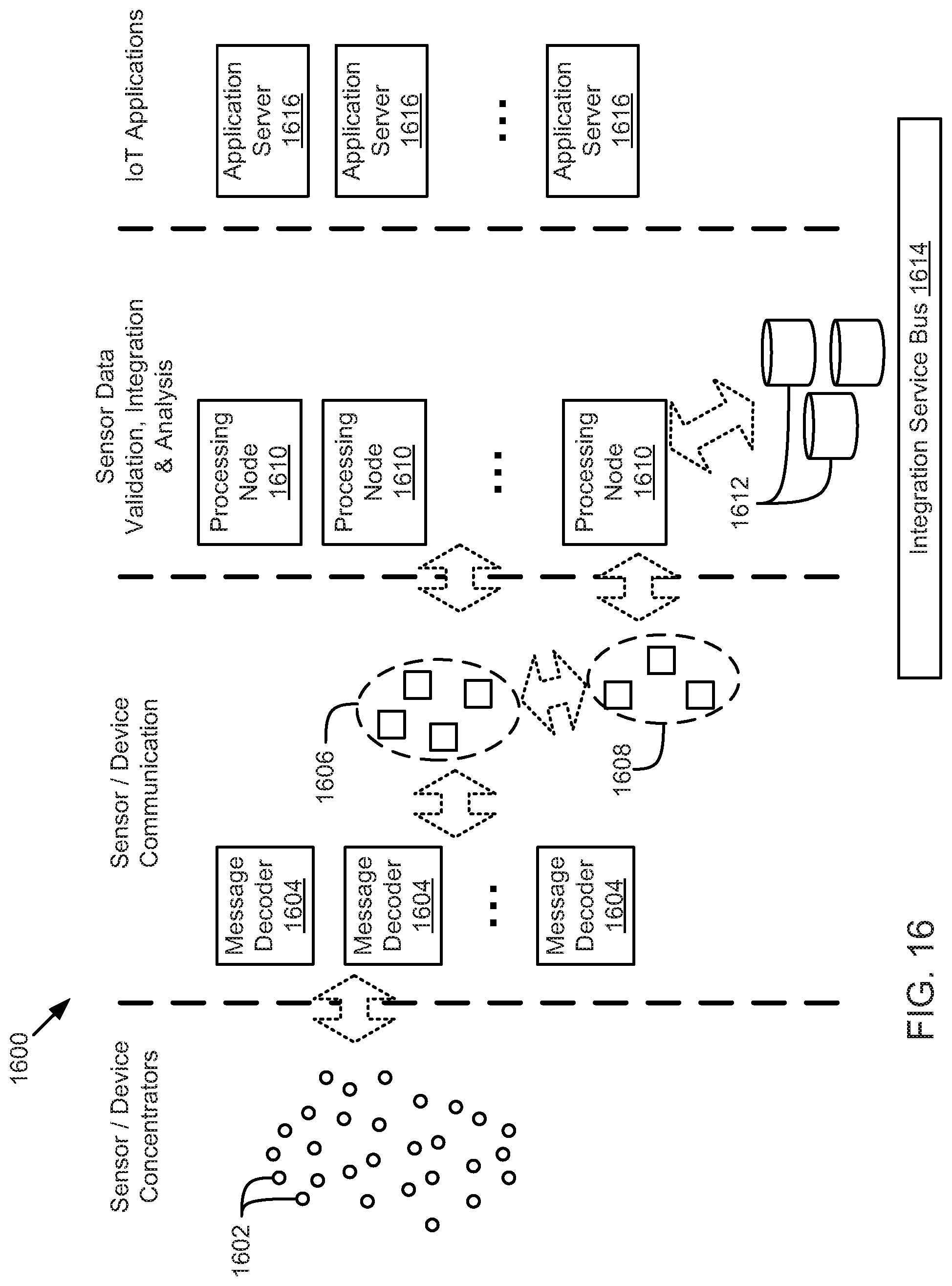

FIG. 15 is a schematic block diagram illustrating an elastic computing environment, according to one embodiment.

FIG. 16 is a schematic block diagram illustrating a sensor network for an enterprise Internet-of-Things platform system, according to one embodiment.

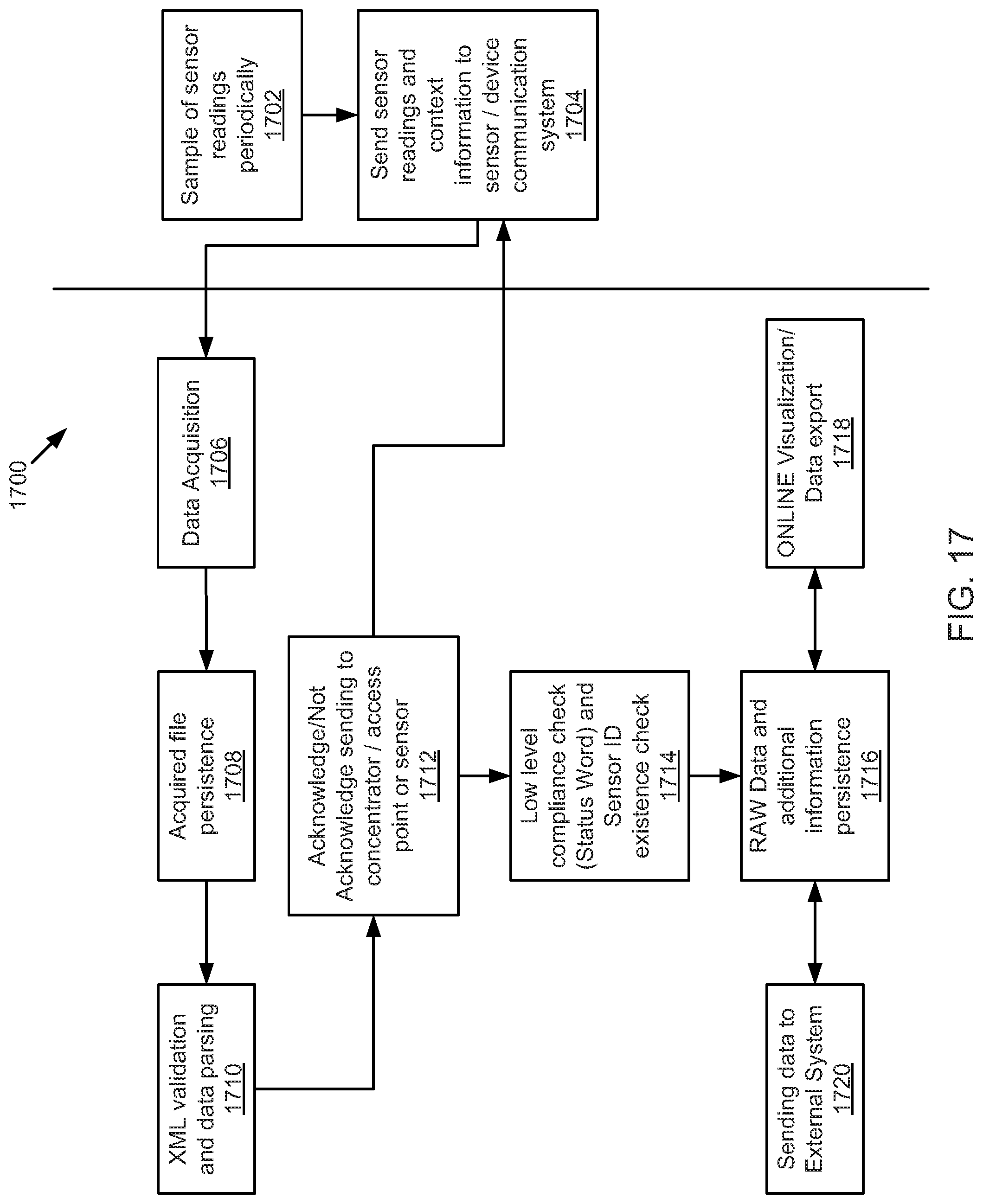

FIG. 17 is a schematic sequence diagram illustrating a usage scenario for data acquisition, according to one embodiment.

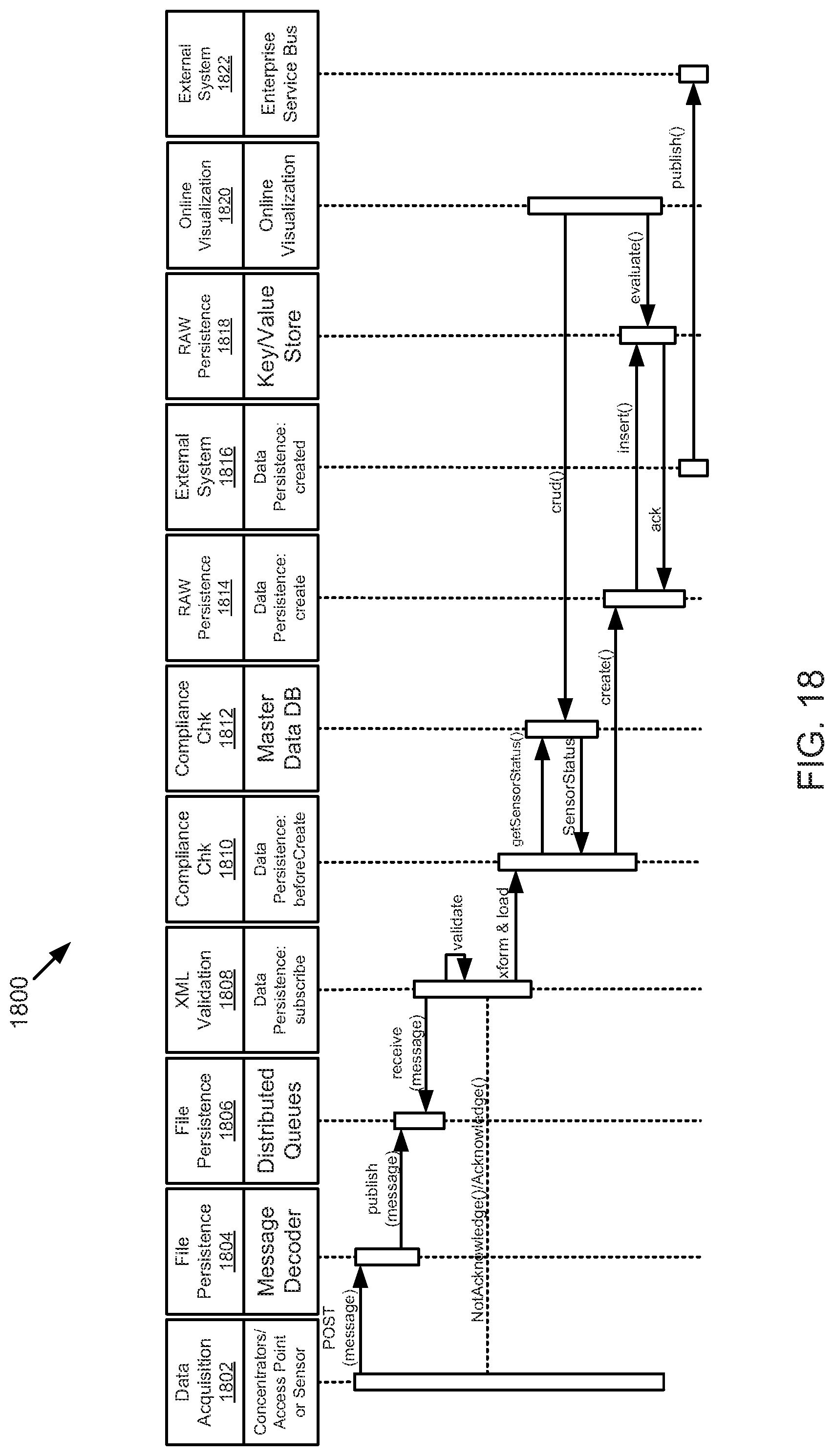

FIG. 18 is a schematic sequence diagram illustrating another usage scenario for data acquisition, according to one embodiment.

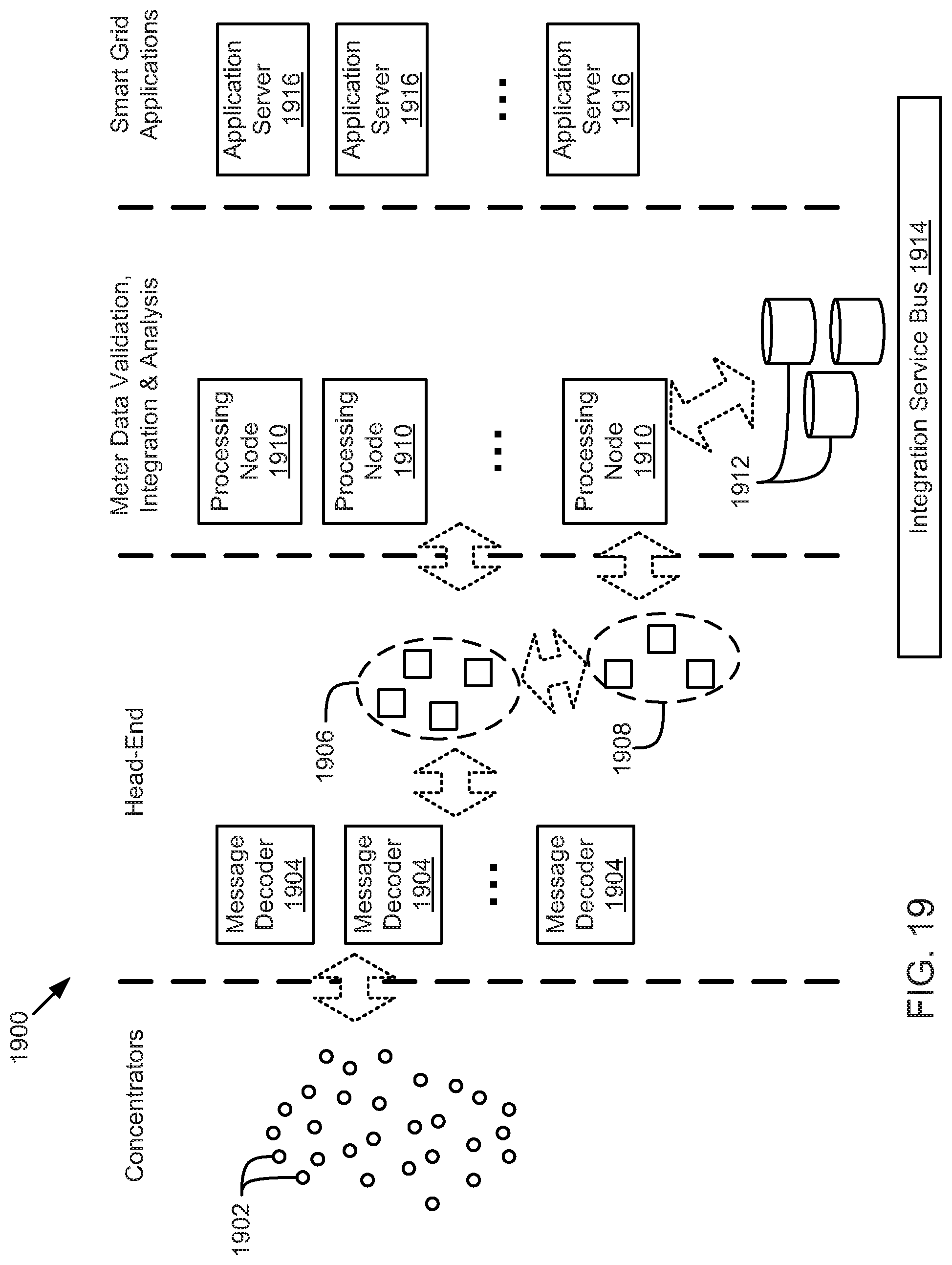

FIG. 19 is a schematic block diagram illustrating a sensor network for an enterprise Internet-of-Things platform system, according to one embodiment.

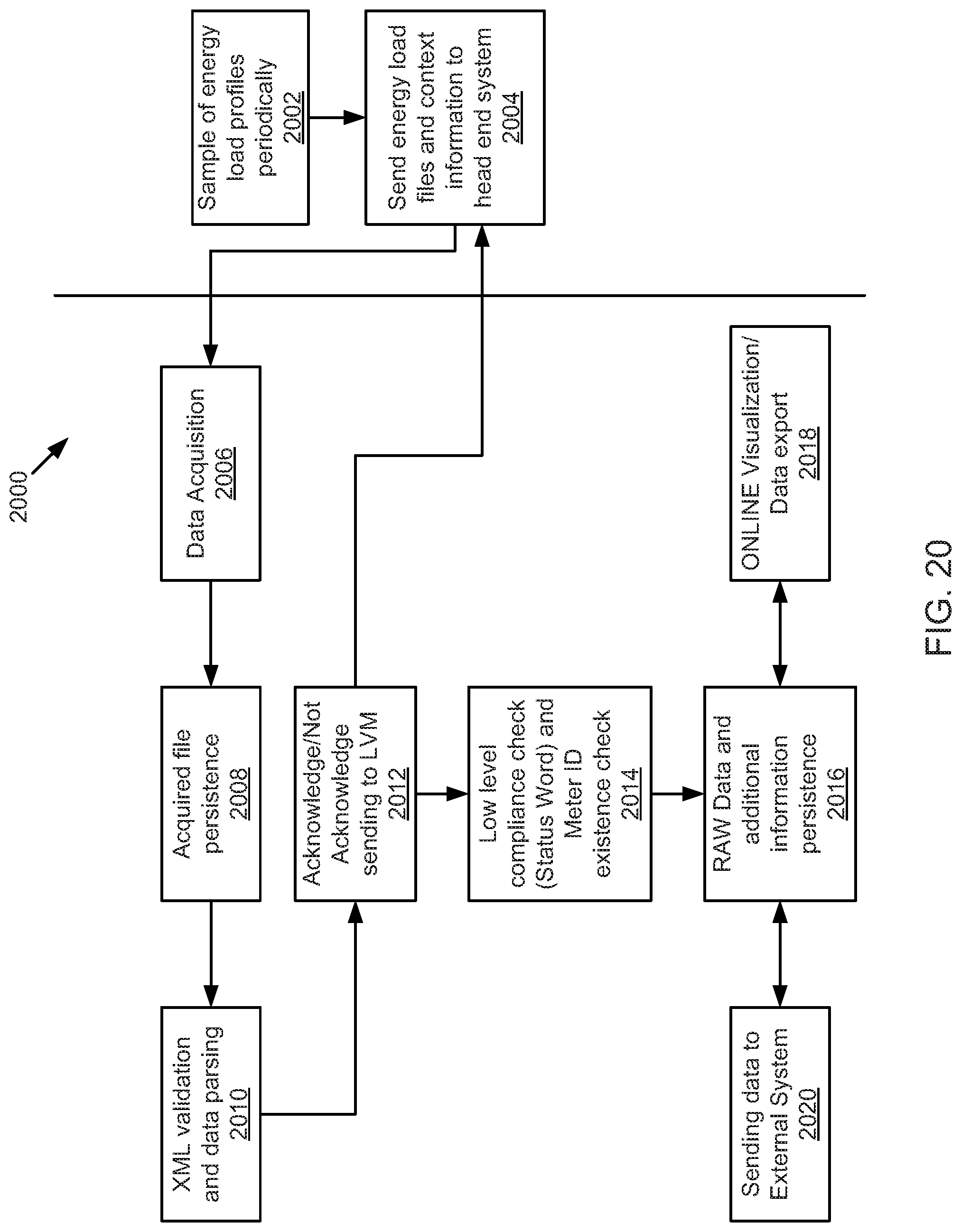

FIG. 20 is a schematic sequence diagram illustrating a usage scenario for data acquisition, according to one embodiment.

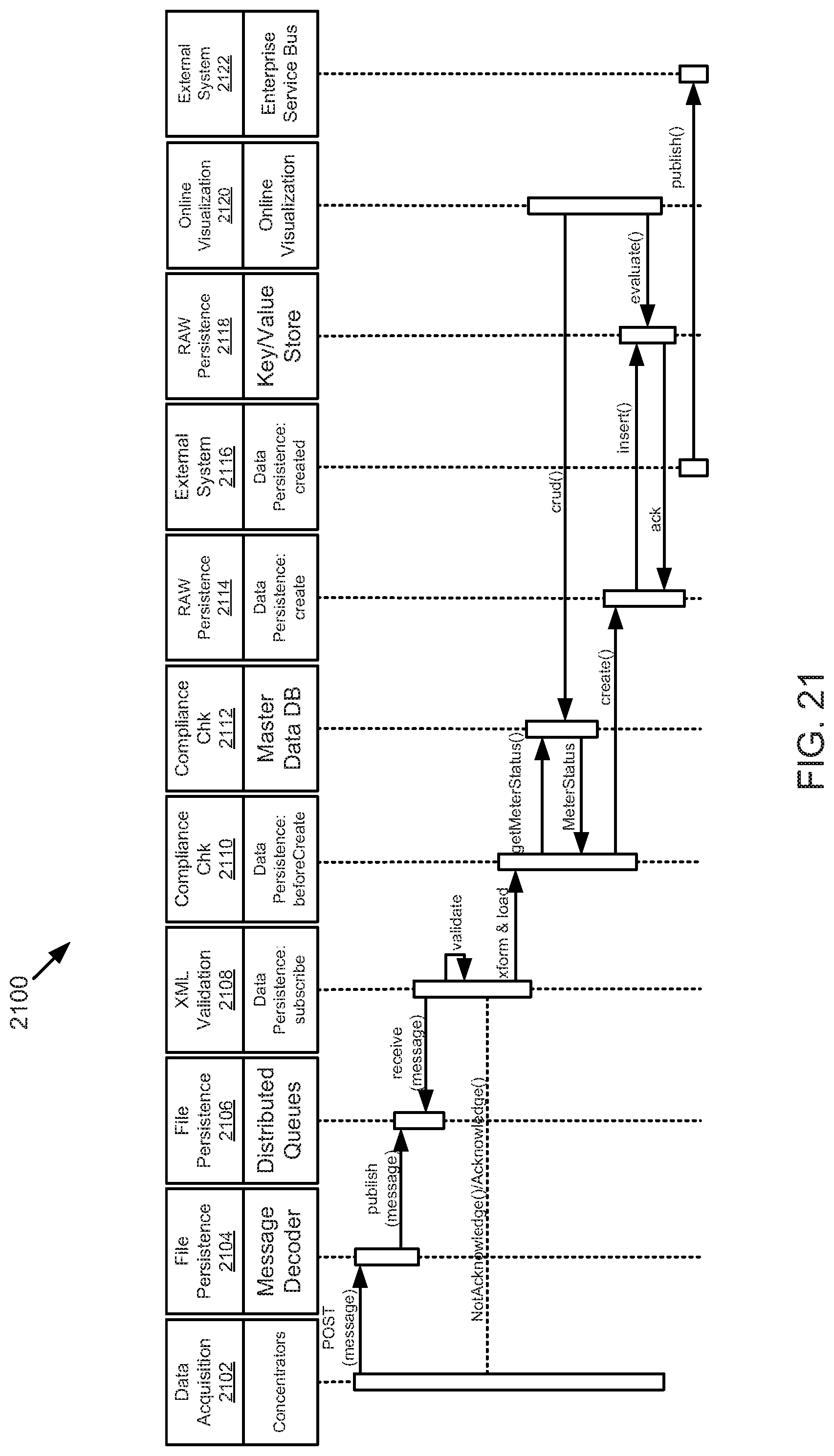

FIG. 21 is a schematic sequence diagram illustrating another usage scenario for data acquisition, according to one embodiment.

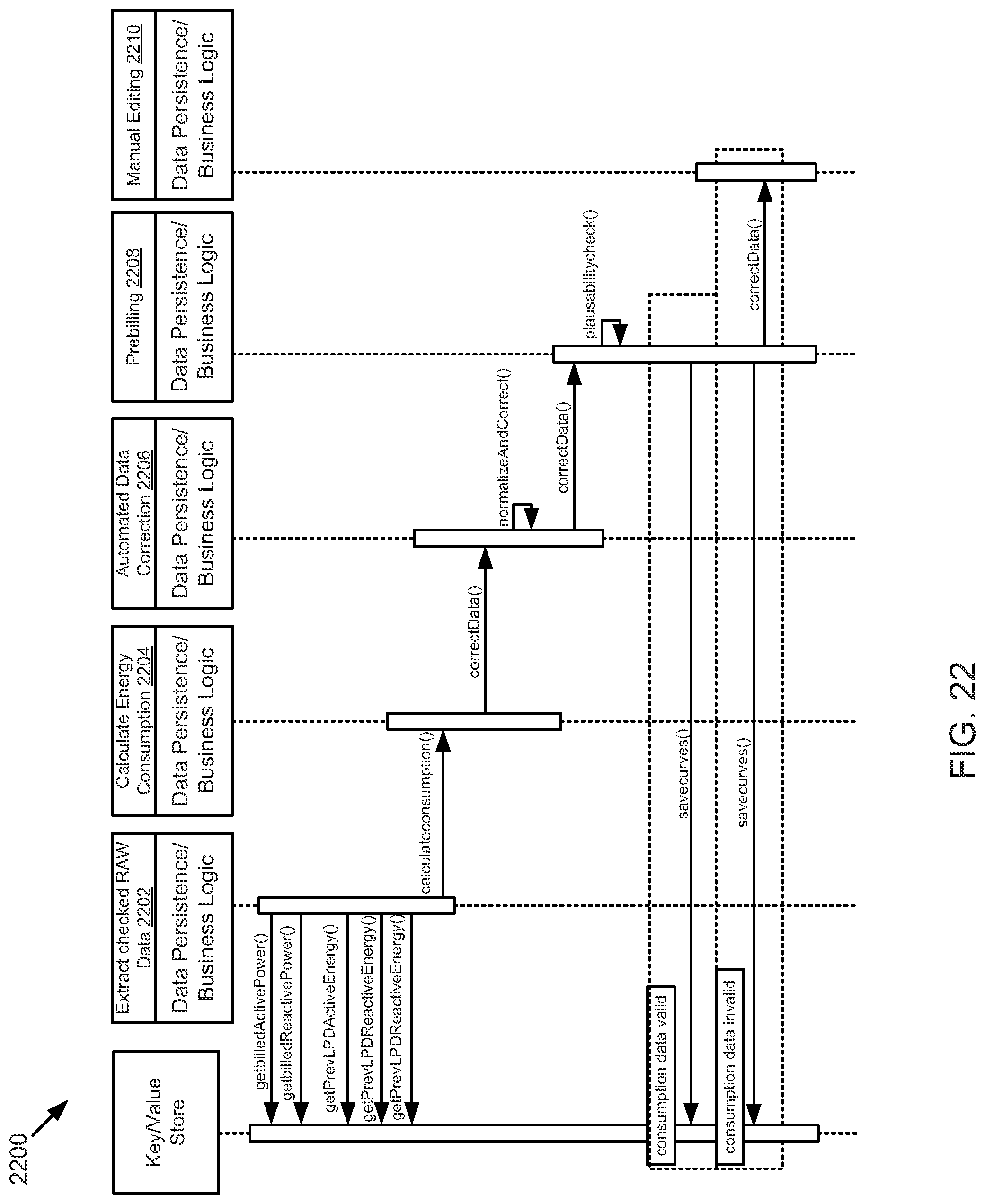

FIG. 22 is a schematic sequence diagram illustrating a usage scenario for a billing cycle, according to one embodiment.

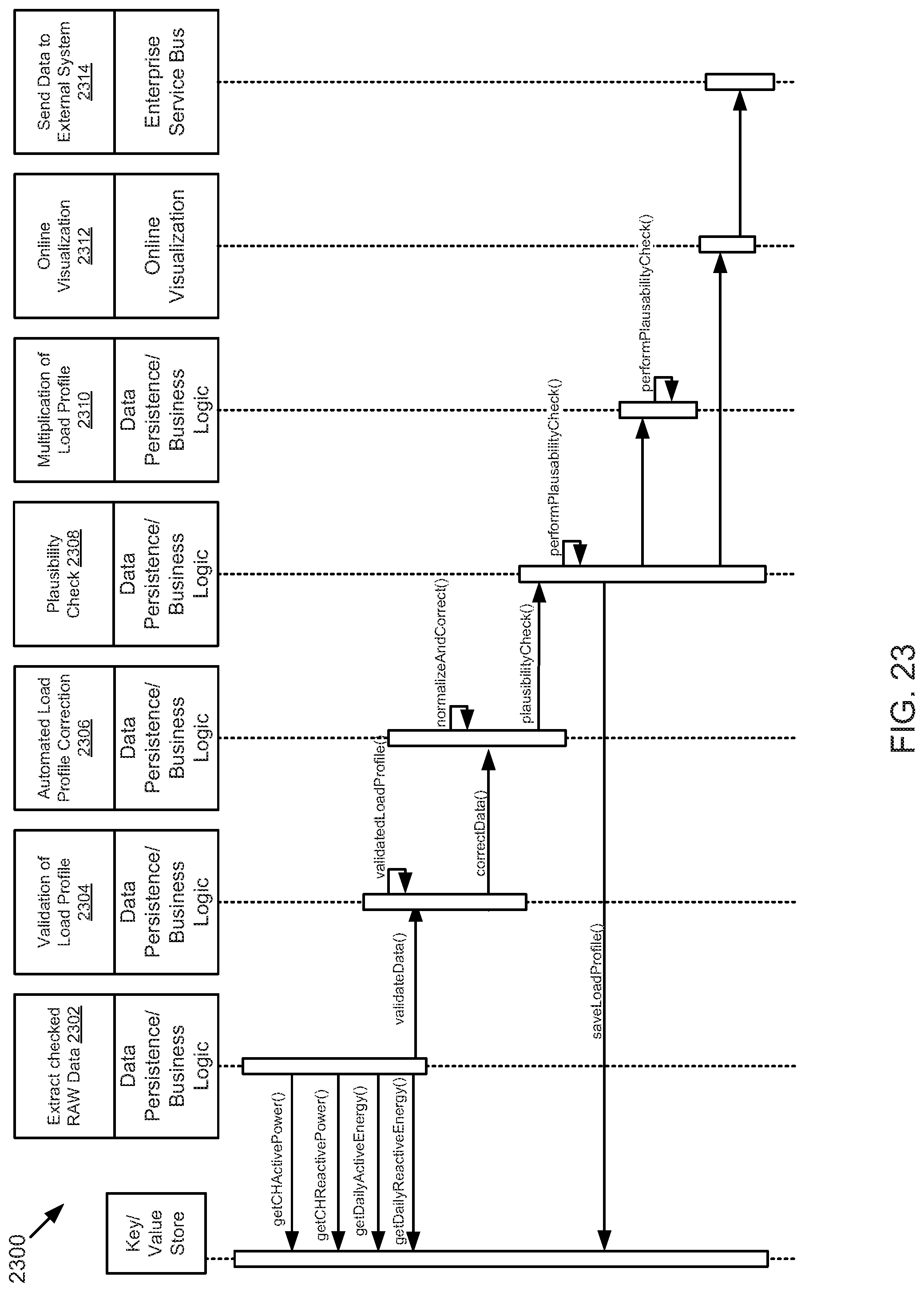

FIG. 23 is a schematic sequence diagram illustrating a usage scenario for daily data processing, according to one embodiment.

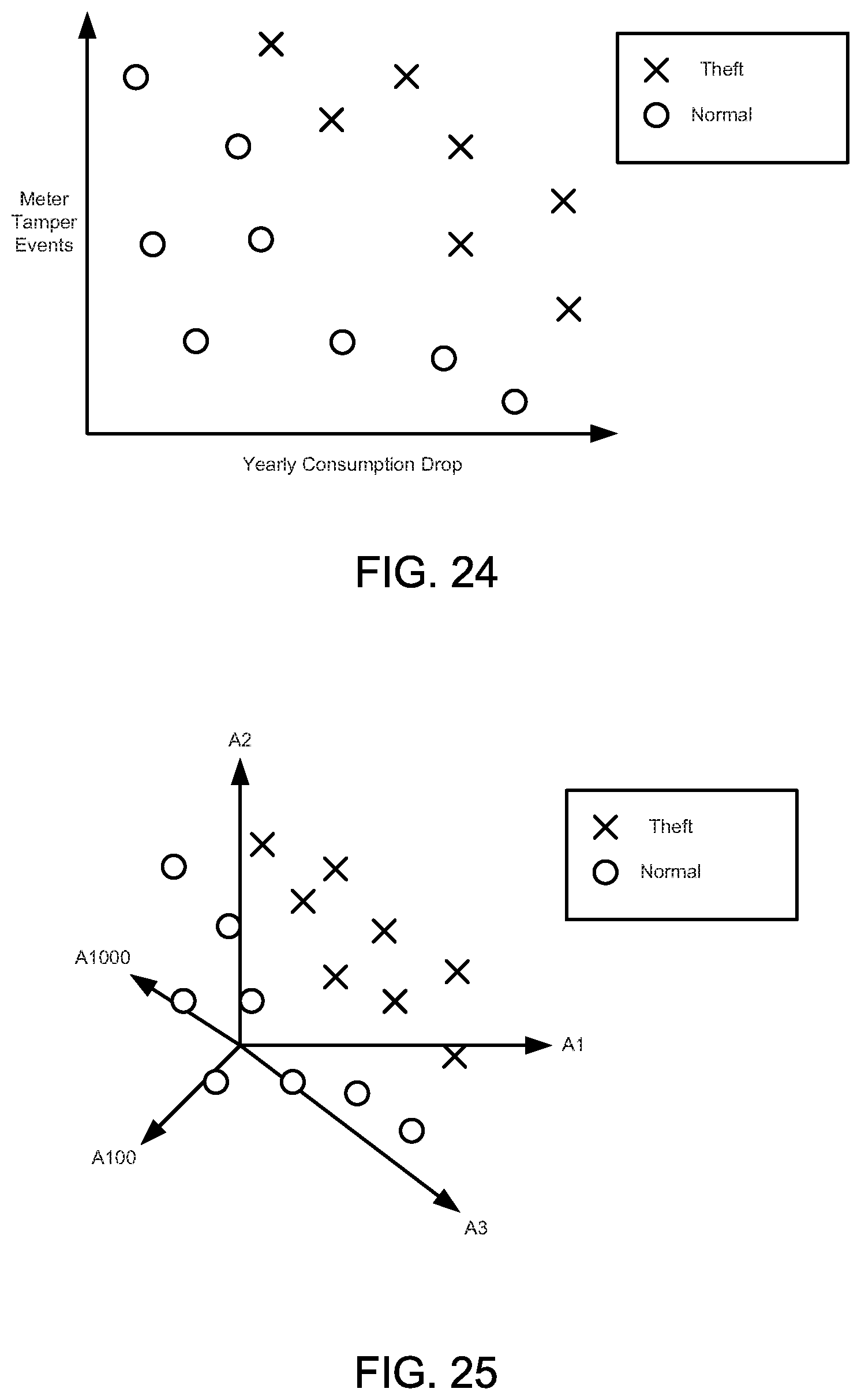

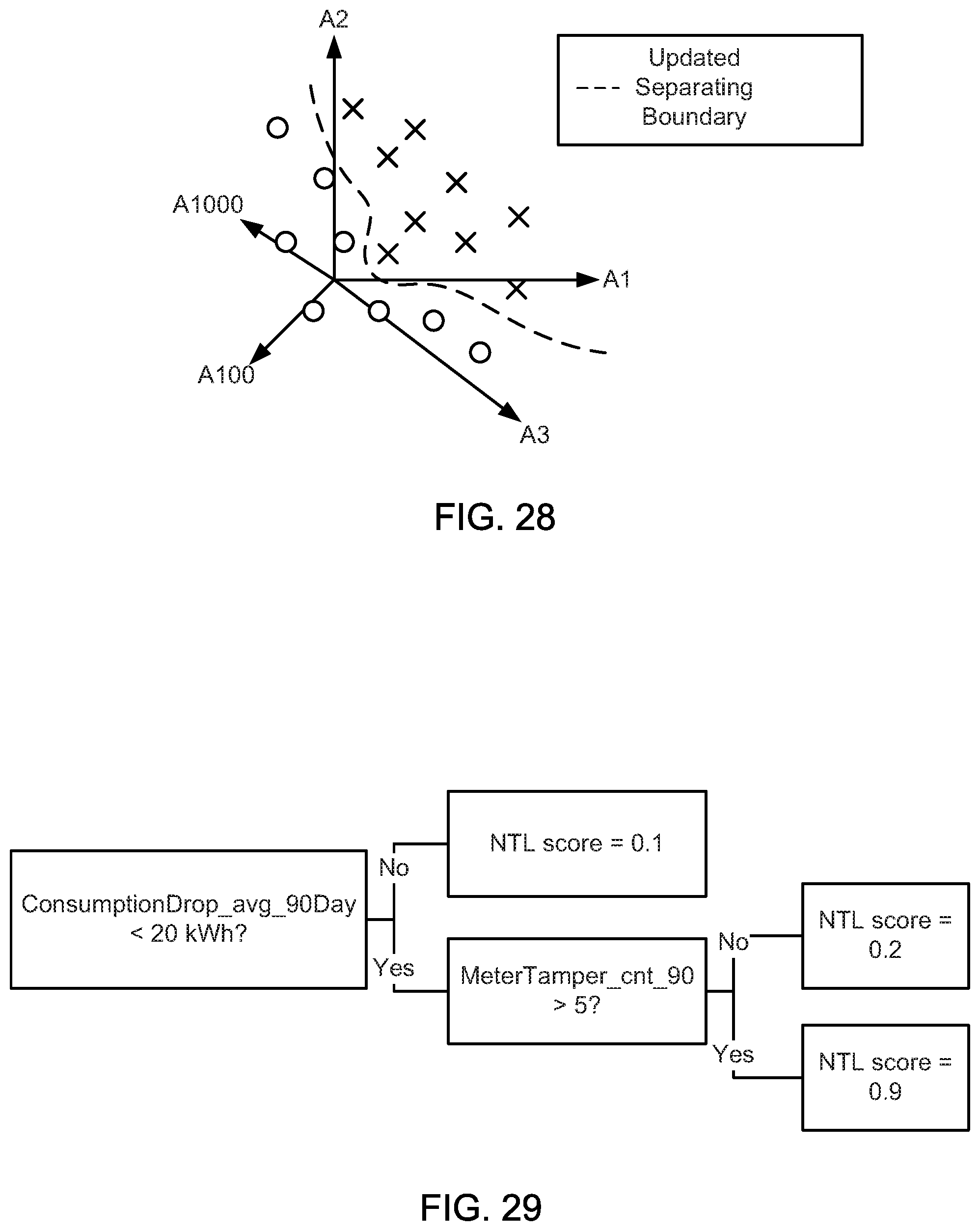

FIGS. 24-28 illustrate graphical diagrams for an illustrative machine learning example.

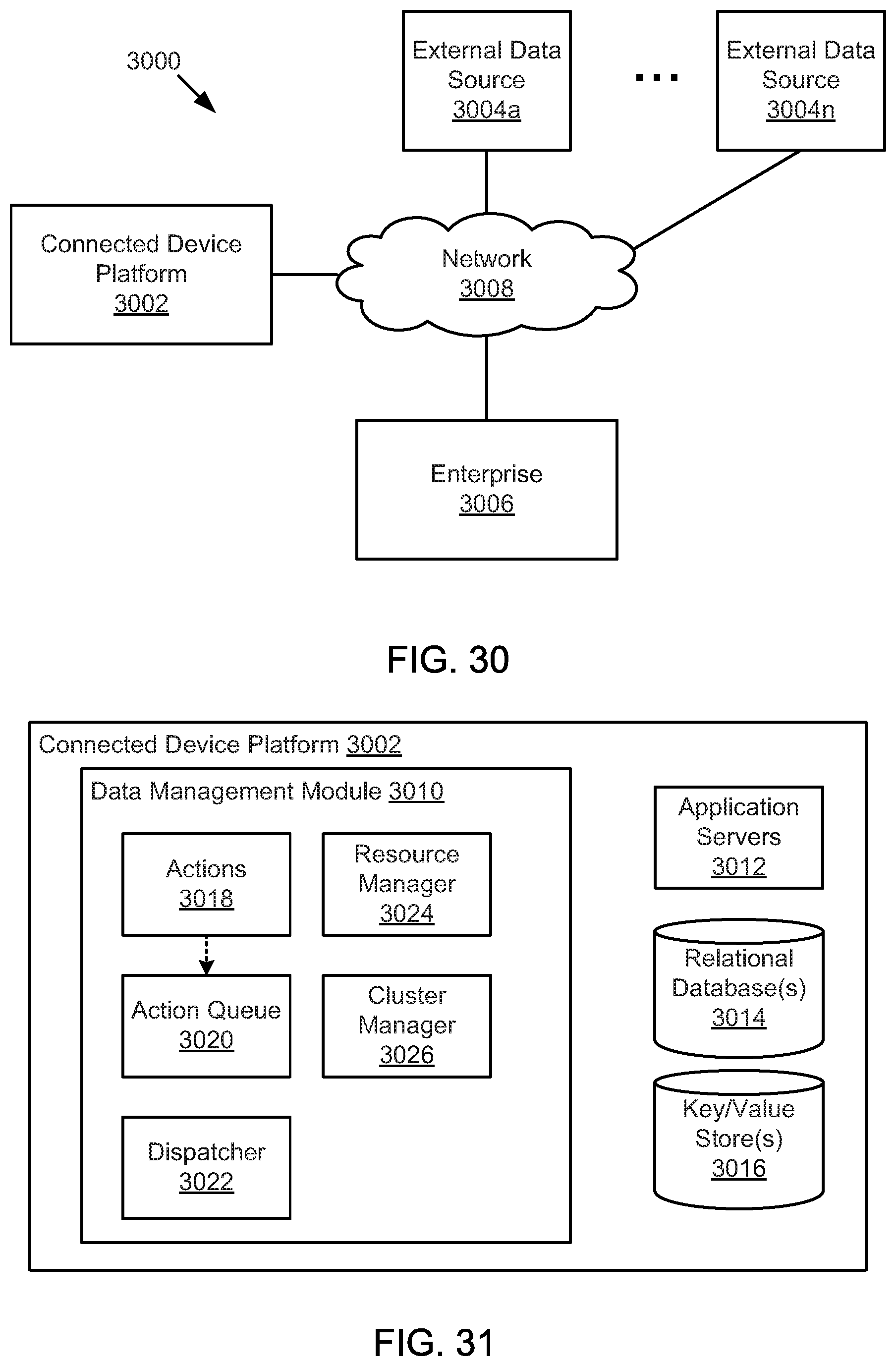

FIG. 29 is a schematic block diagram illustrating an example tree classifier, according to one embodiment.

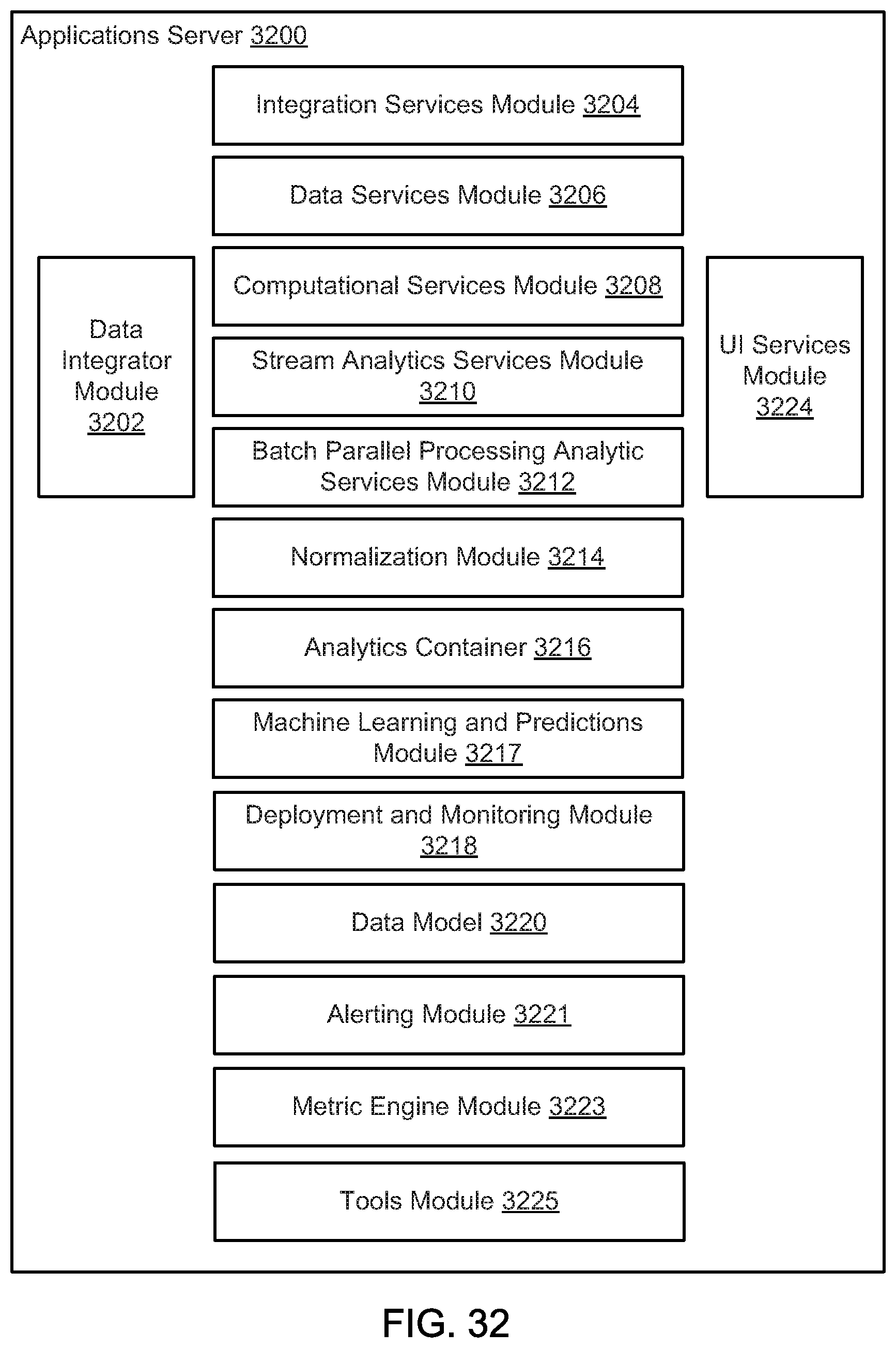

FIG. 30 illustrates an example environment of an enterprise Internet-of-Things application development platform, according to one embodiment.

FIG. 31 illustrates an example enterprise Internet-of-Things application development platform, according to one embodiment.

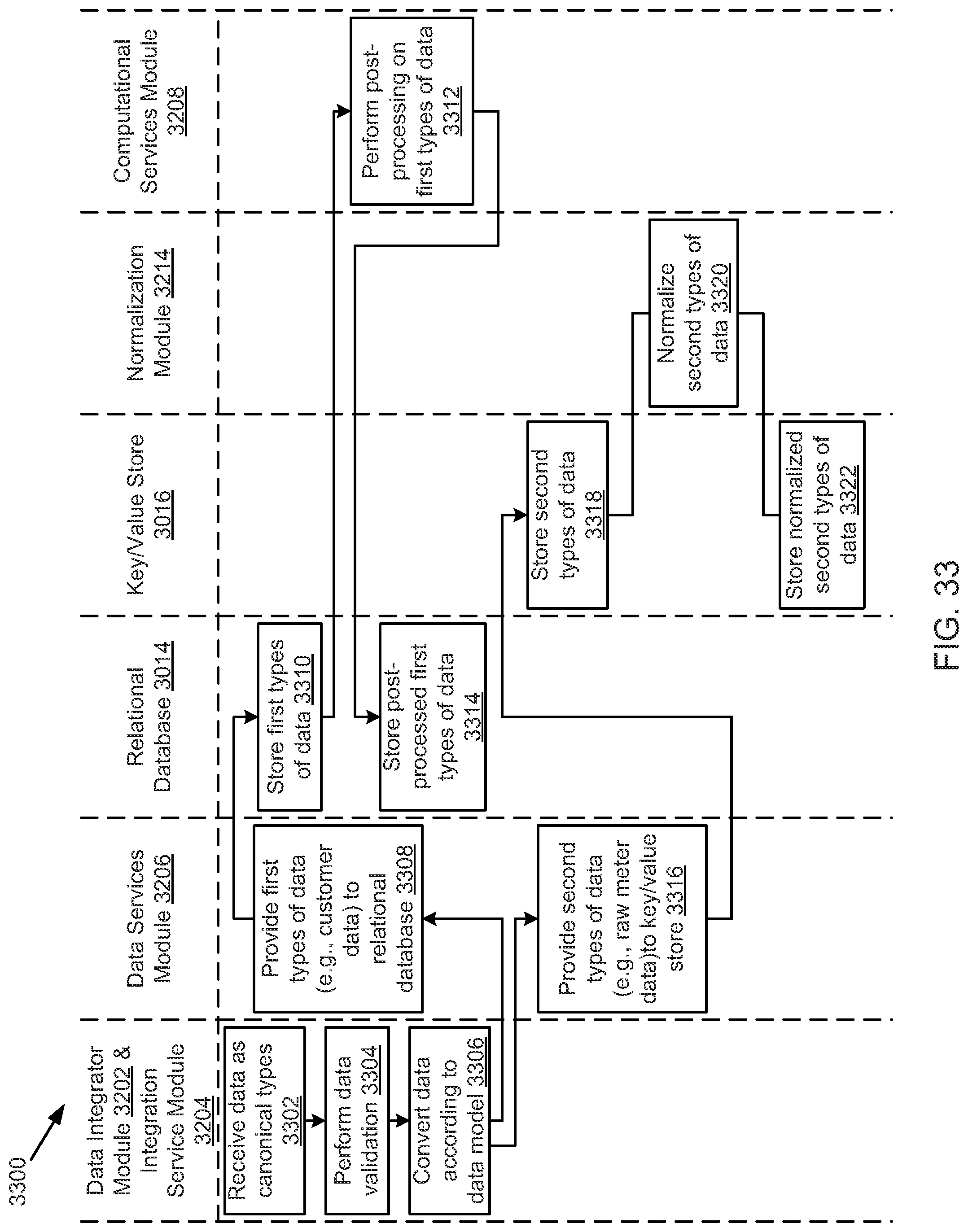

FIG. 32 illustrates an example applications server of an enterprise Internet-of-Things application development platform, according to one embodiment.

FIG. 33 illustrates an example data loading process, according to one embodiment.

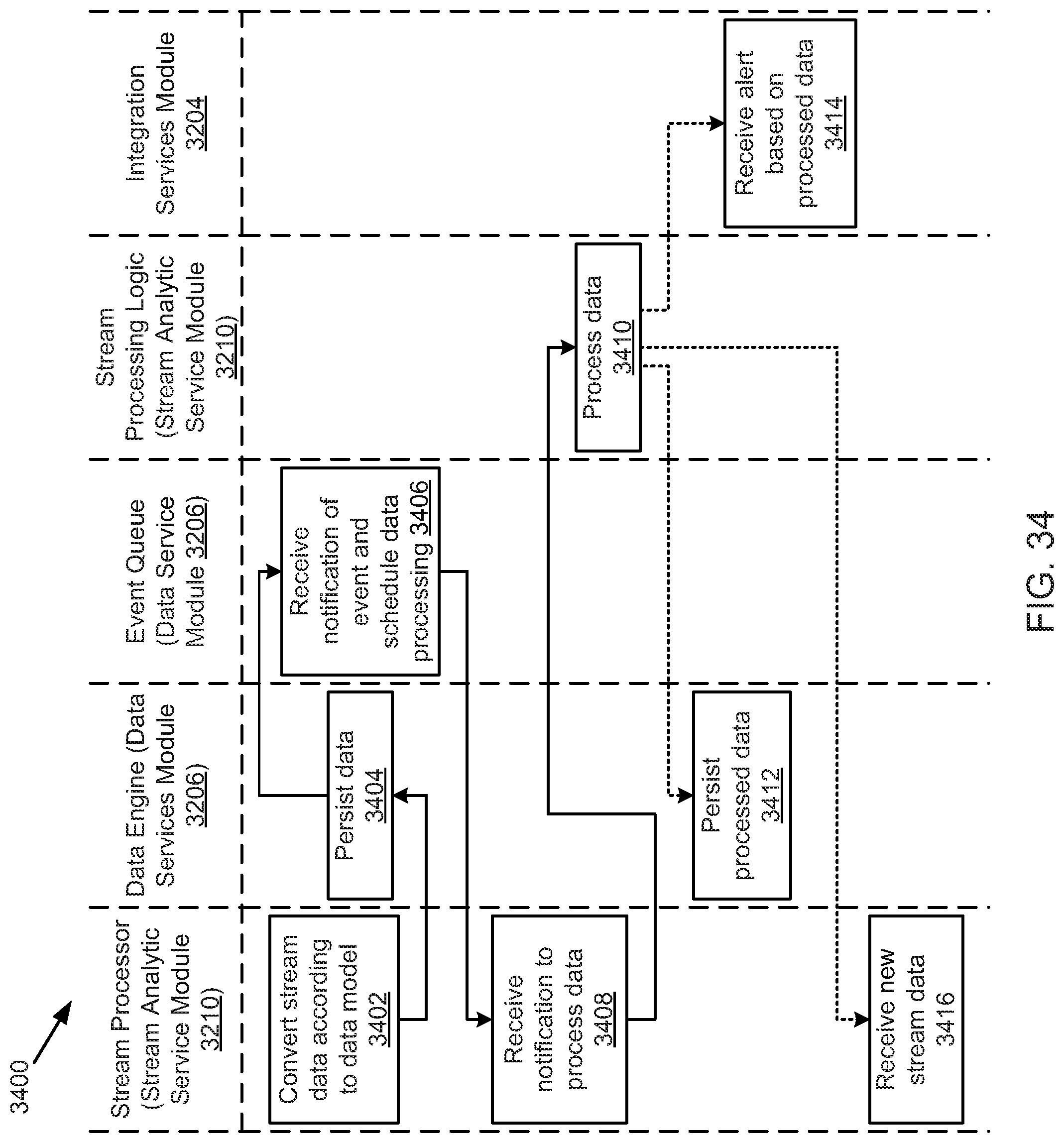

FIG. 34 illustrates an example stream process, according to one embodiment.

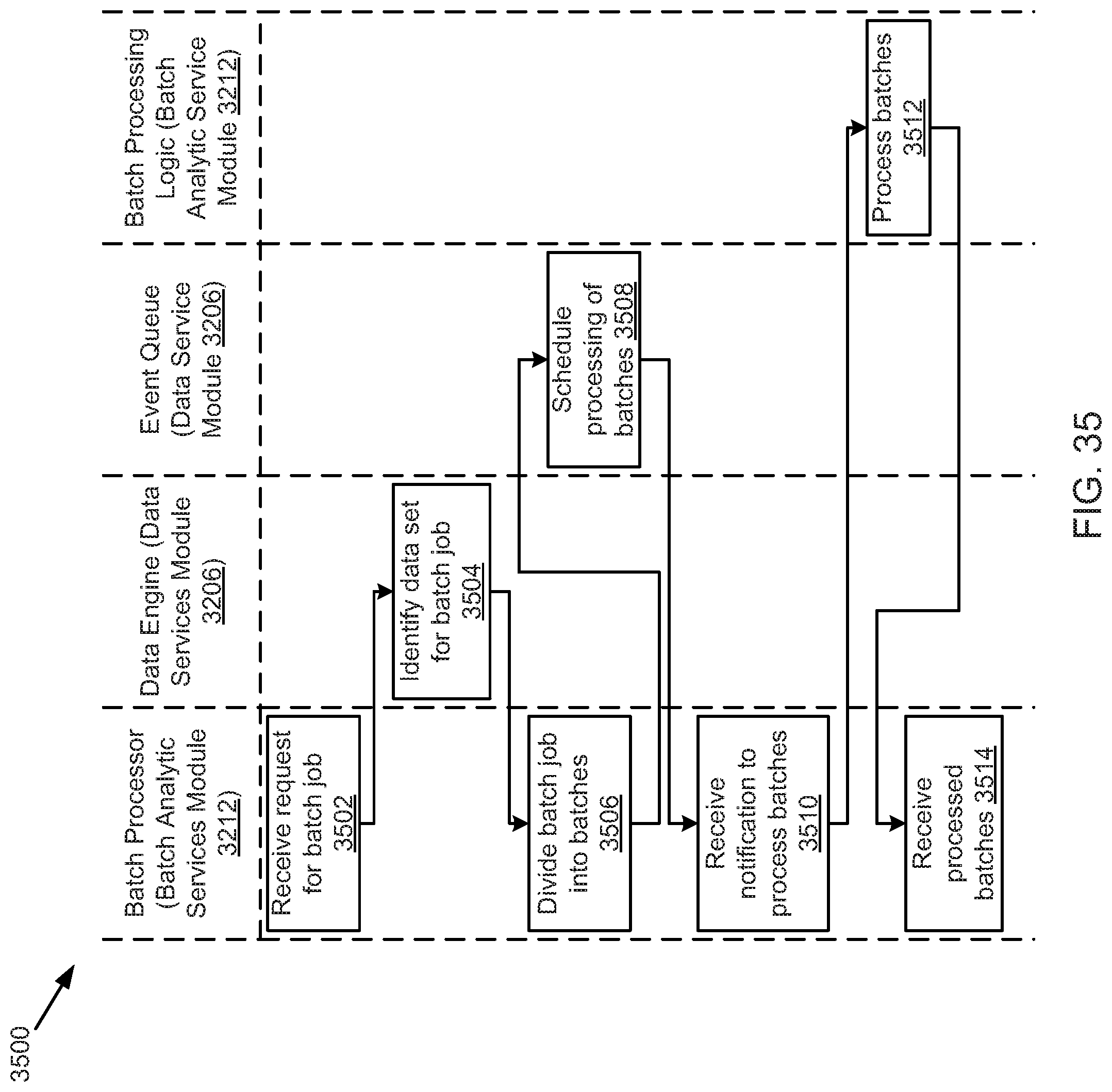

FIG. 35 illustrates an example batch parallel process, according to one embodiment.

FIG. 36 illustrates an example machine within which a set of instructions for causing the machine to perform one or more of the embodiments described herein can be executed, according to one embodiment.

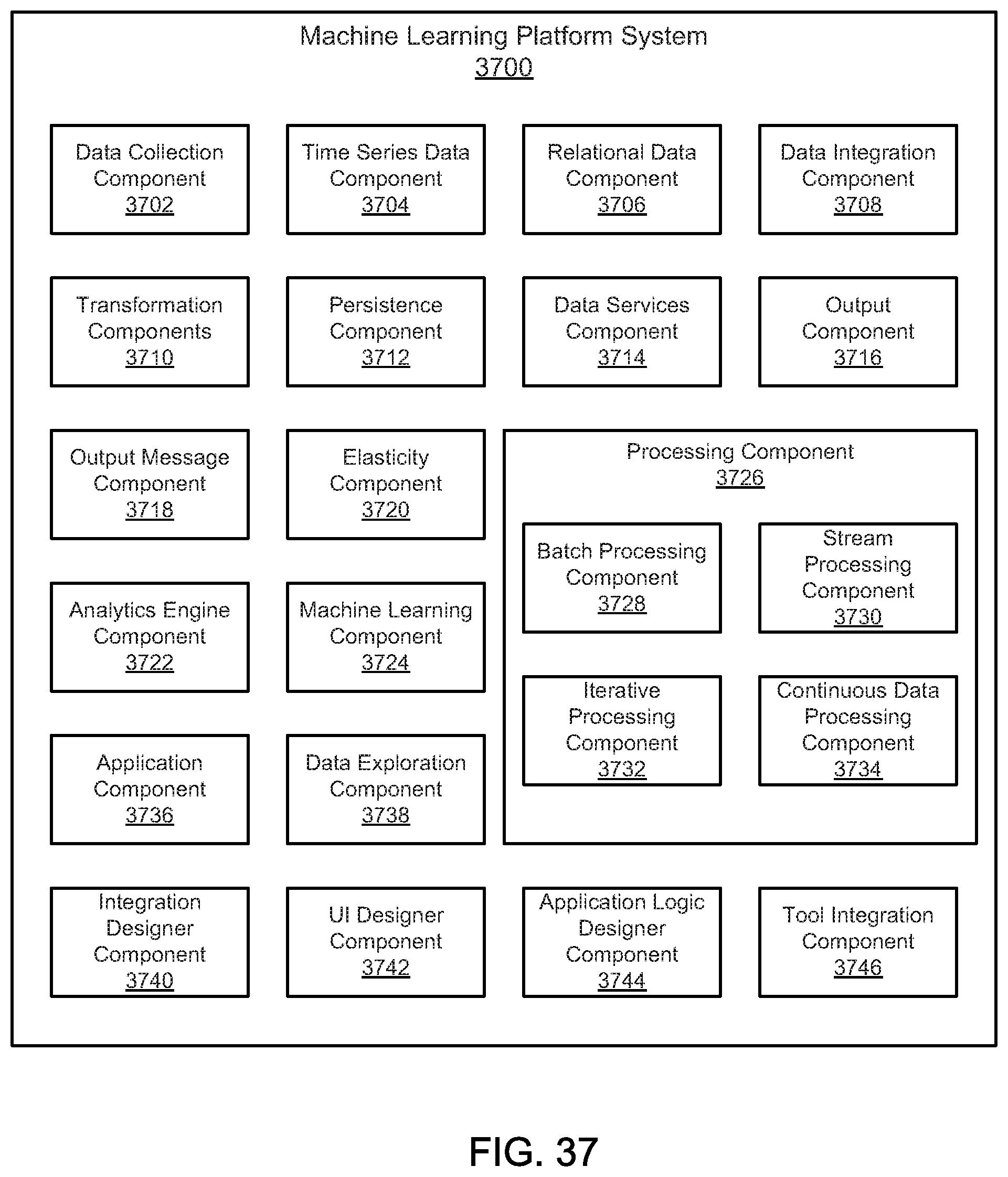

FIG. 37 is a schematic block diagram illustrating one embodiment of an application development platform system.

FIG. 38 illustrates an example method for providing or processing data based on a type system.

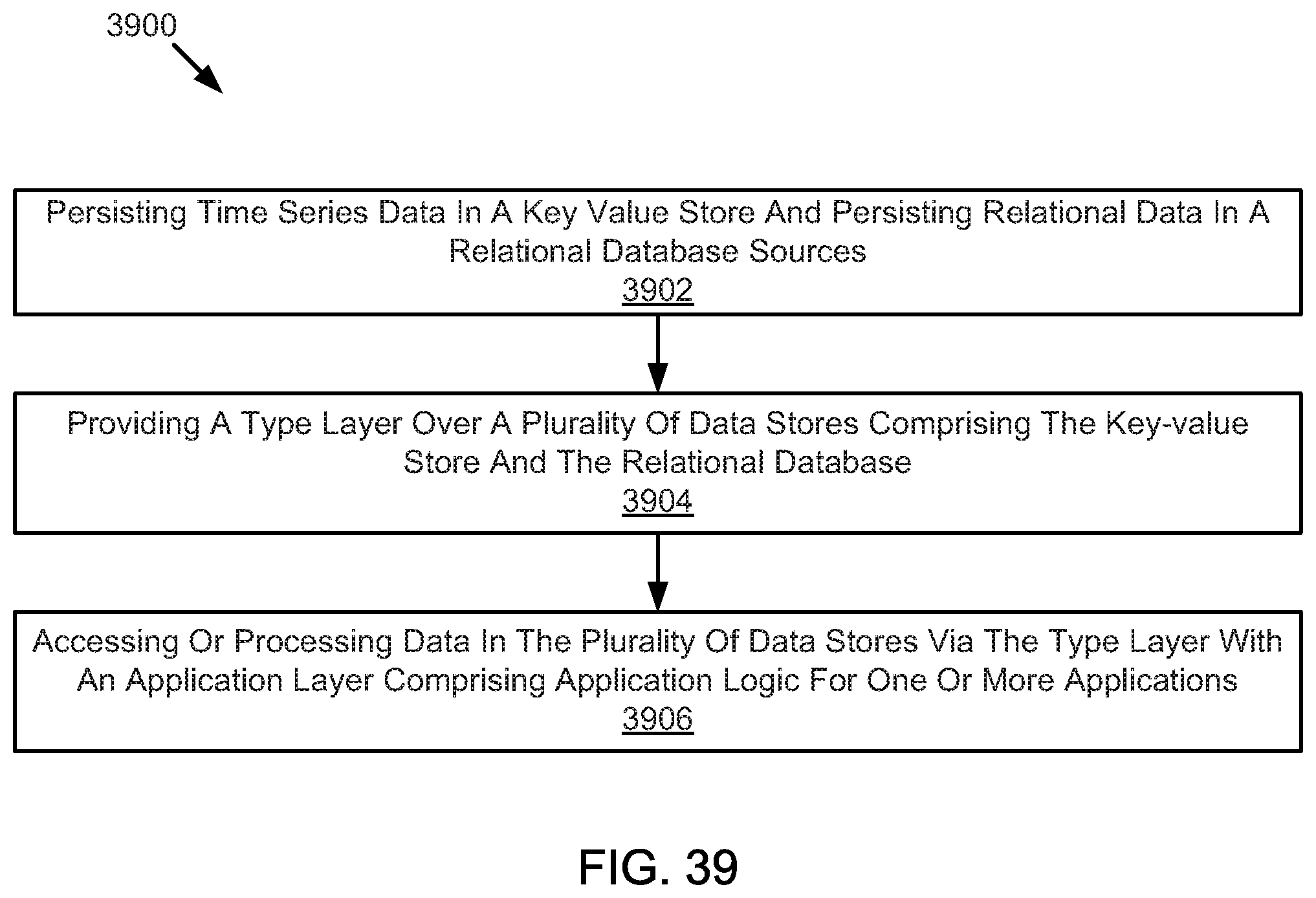

FIG. 39 illustrates an example method for providing or processing data.

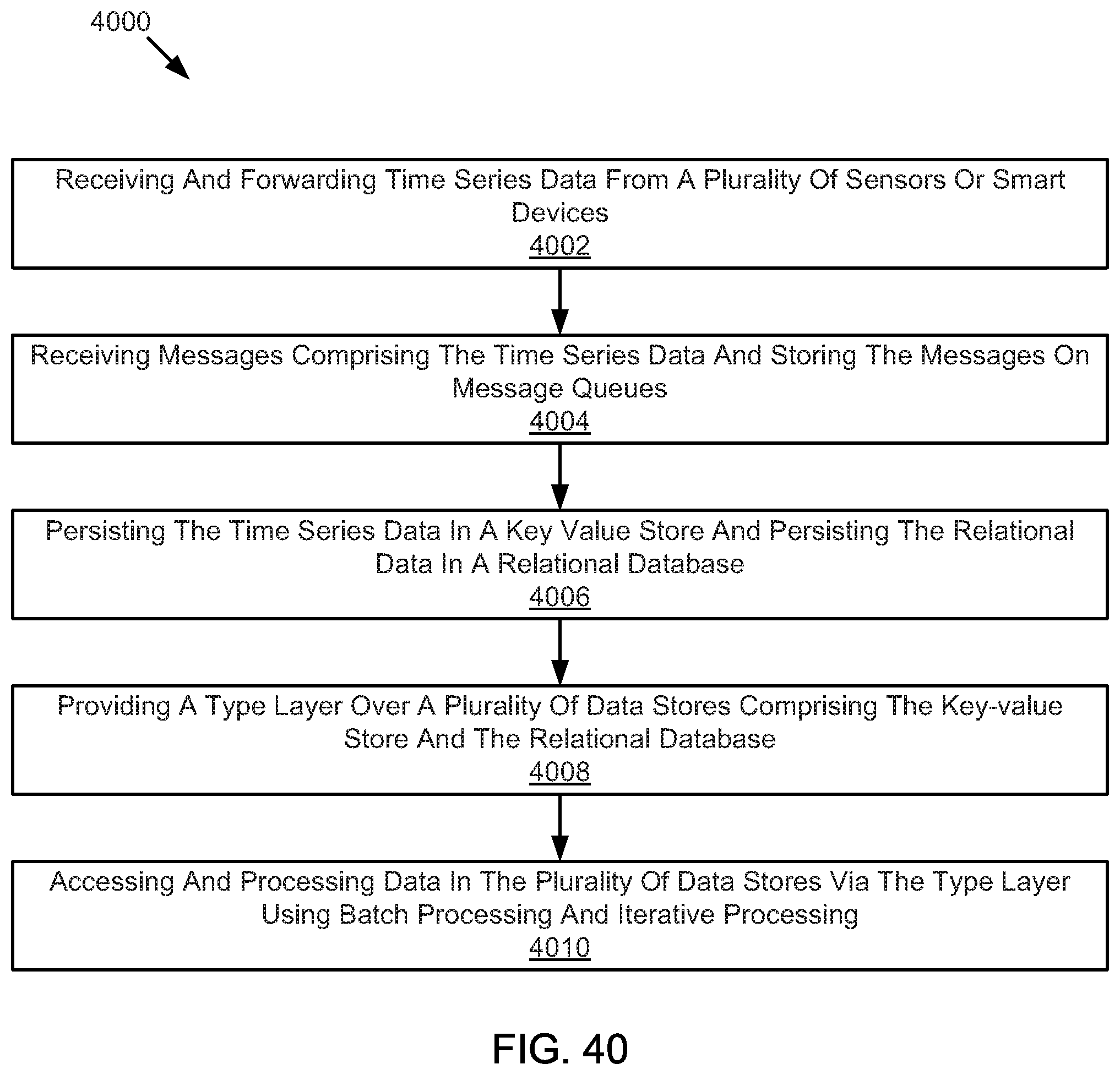

FIG. 40 illustrates an example method for providing or processing data.

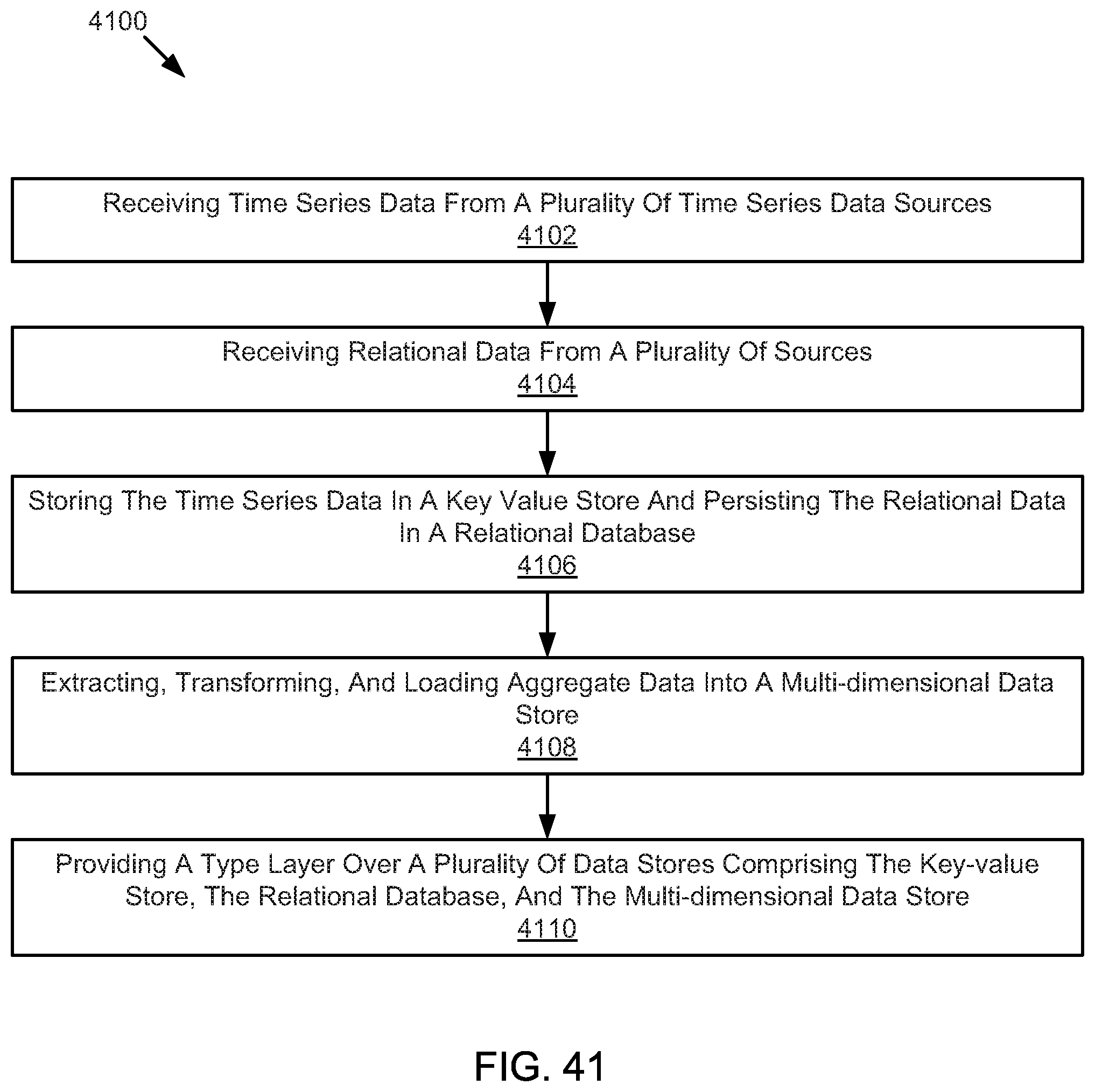

FIG. 41 illustrates an example method for storing data.

DETAILED DESCRIPTION

The IoT Platform disclosed herein is a platform as a service (PaaS) for the design, development, deployment, and operation of next generation cyberphysical software applications and business processes. The applications apply advanced data aggregation methods, data persistence methods, data analytics, and machine learning methods, embedded in a unique model driven architecture type system embodiment to recommend actions based on real-time and near real-time analysis of petabyte-scale data sets, numerous enterprise and extraprise data sources, and telemetry data from millions to billions of endpoints.

The IoT Platform disclosed herein also provides a suite of pre-built, cross-industry applications, developed on its platform, that facilitate IoT business transformation for organizations in energy, manufacturing, aerospace, automotive, chemical, pharmaceutical, telecommunications, retail, insurance, healthcare, financial services, the public sector, and others.

Customers can also use the IoT Platform to build and deploy custom-designed Internet-of-Things applications.

IoT cross-industry applications are highly customizable and extensible. Prebuilt applications are available for predictive maintenance, sensor health, enterprise energy management, capital asset planning, fraud detection, CRM, and supply network optimization.

To make sense of and act on an unprecedented volume, velocity, and variety of data in real time, the IoT Platform applies the sciences of big data, advanced analytics, machine learning, and cloud computing. Products themselves are being redesigned to accommodate connectivity and low-cost sensors, creating a market opportunity for adaptive systems, a new generation of smart applications, and a renaissance of business process reengineering. The new IoT IT paradigm will reshape the value chain by transforming product design, marketing, manufacturing, and after-sale services.

The McKinsey Global Institute estimates the potential economic impact of new IoT applications and products to be as much as US$3.9-$11.1 trillion by 2025. See McKinsey & Company, "The Internet of Things, Mapping the Value Beyond the Hype," June 2015. Other industry researchers project that 50 billion devices will connect to the Internet by 2020. The IoT Platform disclosed herein offers a new generation of smart, real-time applications, overcoming the development challenges that have blocked companies from realizing that potential. The IoT Platform disclosed herein is a PaaS for the design, development, deployment, and operation of next-generation IoT applications and business processes.

Multiple technologies are converging to enable a new generation of smart business processes and applications--and ultimately replace the current enterprise software applications stack. The number of emerging processes addressed will likely exceed by at least an order of magnitude the number of business processes that have been automated to date in client-server enterprise software and modern software-as-a-service (SaaS) applications.

The component technologies include: low-cost and virtually unlimited compute capacity and storage in scale-out cloud environments such as AWS, Azure, Google, and AliCloud; big data and real-time streaming; IoT devices with low-cost sensors; smart connected devices; mobile computing; and data science, meaning big-data analytics and machine learning to process the volume, velocity, and variety of big-data streams.

This new computing paradigm will enable capabilities and applications not previously possible, including precise predictive analytics, massively parallel computing at the edge of the network, and fully connected sensor networks at the core of the business value chain. The number of addressable business processes will grow exponentially and require a new platform for the design, development, deployment, and operation of new generation, real-time, smart and connected applications.

Data are strategic resources at the heart of the emerging digital enterprise. The new IoT infrastructure software stack will be the nerve center that connects and enables collaboration among previously separate business functions, including product development, marketing, sales, service support, manufacturing, finance, and human capital management.

The emerging market opportunity is broad. At one end are targeted applications that address the fragmented needs of specific micro-vertical markets--for example, applying machine learning to sensor data for predictive maintenance that reduces expensive unscheduled downtime. At the other end are a new generation of core ERP, CRM, and human capital management (HCM) applications, and a new generation of current SaaS applications.

These smart and real-time applications will be adaptive, continually evolving based on knowledge gained from machine learning. The integration of big data from IoT sensors, operational machine learning, and analytics can be used in a closed loop to control the devices being monitored. Real-time streaming with in-line or operationalized analytics and machine learning will enhance business operations and enable near-real-time decision making not possible by applying traditional business intelligence against batch-oriented data warehouses.
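The closed loop described above, in which analytics run in-line on a stream and the result feeds back to the monitored device, can be sketched roughly as follows. This is an illustration under assumptions, not the disclosed implementation: the rolling-mean analytic, the `window` and `limit` parameters, and the `"throttle"` and `"ok"` commands are all hypothetical placeholders for whatever analytic and control action an application would use.

```python
from collections import deque
from typing import Deque, Iterable, Iterator, Tuple


def control_loop(
    readings: Iterable[float], window: int = 3, limit: float = 80.0
) -> Iterator[Tuple[float, str]]:
    """Yield (rolling_mean, command) for each reading as it arrives."""
    recent: Deque[float] = deque(maxlen=window)  # keeps only the last `window` readings
    for value in readings:
        recent.append(value)
        mean = sum(recent) / len(recent)
        # Hypothetical control action sent back to the monitored device.
        yield mean, ("throttle" if mean > limit else "ok")
```

Because the function is a generator, each command is available as soon as its reading arrives, in contrast to a batch job that would compute the same means only after the full data set had been loaded into a warehouse.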

Smart, connected products will disrupt and transform the value chain. They require a new class of enterprise applications that correlate, aggregate, and apply advanced machine learning to perform real-time analysis of data from the sensors, extraprise data (such as weather, traffic, and commodity prices), and all available operational and enterprise data across supplier networks, logistics, manufacturing, dealers, and customers.

These new IoT applications will deliver a step-function improvement in operational efficiencies and customer engagement, and enable new revenue-generation opportunities. IoT applications differ from traditional enterprise applications not only by their use of real-time telemetry data from smart connected products and devices, but also by operating against all available data across a company's business value chain and applying machine learning to continuously deliver highly accurate and actionable predictions and optimizations. Think Google Now for the enterprise. The following are example use cases in various lines of business.

In the product development and manufacturing line of business ("Industry 4.0", also known as Industrie 4.0), the use cases may include identifying and resolving product quality problems based on customer use data; and/or detecting and mitigating manufacturing equipment malfunctions.

In the supply networks and logistics line of business, the use cases may include continuously tracking product components through supply and logistics networks; and/or predicting and mitigating unanticipated delivery delays due to internal or external factors.

In the marketing and sales lines of business, the use cases may include delivering personalized customer product and service offers and after-sale service offers through mobile applications and connected products; and/or developing, testing, and adjusting micro-segmented pricing; and/or delivering "product-as-a-service," such as "power-by-the-hour engine" and equipment maintenance.

In the after-sale service lines of business, the use cases may include shifting from condition-based maintenance to predictive maintenance; and/or increasing revenue with new value-added services--for example, extended warranties and comparative benchmarking across a customer's equipment, fleet, or industry.

In the next-generation CRM line of business, the use case may include extending CRM from sales to support, for a full customer lifecycle engagement system; and/or increasing use of data analysis in marketing and product development. This will include connecting all customer end points in an IoT system to aggregate information from the sensors, including smart phones, using those same end user devices as offering vehicles.

As demonstrated, these new IoT applications will deliver a step-function improvement in operational efficiencies and customer engagement, and enable new revenue-generation opportunities. These real-time, anticipatory, and adaptive-learning applications apply across industries, to predict heart attacks; tune insurance rates to customer behavior; anticipate the next crime location, terrorist attack, or civil unrest; anticipate customer churn or promote a customer-specific wireless data plan; or optimize distributed energy resources in smart grids, micro-grids, and buildings.

The IoT and related big data analytics have received much attention, with large enterprises making claims to stake out their market position--examples include Amazon.TM., Cisco.TM., GE.TM., Microsoft.TM., Salesforce.TM., and SAP.TM.. Recognizing the importance of this business opportunity, investors have assigned outsized valuations to market entrants that promise solutions to take advantage of the IoT. Recent examples include Cloudera.TM., MapR.TM., Palantir.TM., Pivotal.TM., and Uptake.TM., each valued at well over $1 billion today. Large corporations also recognize the opportunity and have been investing heavily in development of IoT capabilities. In 2011 G E Digital.TM., for example, invested more than $1 billion to build a "Center of Excellence" in San Ramon, Calif., and has been spending order of $1 billion per year on development and marketing of an industrial internet IoT platform, Predix.TM..

The market growth and size projections for IoT applications and services are staggering. Many thought leaders, including Harvard Business School's Michael E. Porter, have concluded that IoT will require essentially an entire replacement market in global IT. See Michael E. Porter and James E. Heppelmann, "How Smart, Connected Products Are Transforming Competition," Harvard Business Review, November 2014. However, virtually all IoT platform development efforts to date--internal development projects as well as industry-giant development projects such as GE's Predix.TM. and Pivotal.TM.--are attempts to develop a solution from the many independent software components that are collectively known as the open-source Apache Hadoop.TM. stack. It is clear that these efforts are more difficult than they appear. The many market claims aside, a close examination suggests that there are few examples, if any, of enterprise production-scale, elastic cloud, big data, and machine learning IoT applications that have been successfully deployed in any vertical market except for applications addressed with the IoT Platform disclosed herein.

The remarkable lack of success results from the lack of a comprehensive and cohesive IoT application development platform. Companies typically look to the Apache Hadoop Open Source Foundation.TM. and are initially encouraged that they can install the Hadoop open-source software stack to establish a "data lake" and build from there. However, the investment and skill level required to deliver business value quickly escalates when developers face hundreds of disparate unique software components in various stages of maturity, designed and developed by over 350 different contributors, using a diversity of programming languages, and inconsistent data structures, while providing incompatible software application programming interfaces. A loose collection of independent, open source projects is not a true platform, but rather a set of independent technologies that need to be somehow integrated into a cohesive, coherent software application system and then maintained by developers.

Apache Hadoop repackagers, e.g., Cloudera.TM. and Hortonworks.TM., provide technical support, but have failed to integrate their Hadoop components into a cohesive software development environment.

To date, there are no successful large-scale enterprise IoT application deployments using the Apache Hadoop technology stack. Adoption is further hampered by complexity and a lack of qualified software engineers and data scientists.

Gartner Research concludes that Hadoop adoption remains low as firms struggle to realize Hadoop's business value and overcome a shortage of workers who have the skills to use it. A survey of 284 global IT and business leaders in May 2015 found that, "The lack of near-term plans for Hadoop adoption suggests that despite continuing enthusiasm for the big data phenomenon, specific demand for Hadoop is not accelerating." Further information is available in the Gartner report "Survey Analysis: Hadoop Adoption Drivers and Challenges." The report can be found at http://www.gartner.com/document/3051617.

Developing next-generation applications with measurable value to the business requires a scalable, real-time platform that works with traditional systems of record and augments them with sophisticated analytics and machine learning. But the risk of failure is high. You don't know what you don't know. IoT is new technology to most enterprise IT-oriented development organizations, and expertise may be difficult to acquire. Time to market is measured in many years. Costs are typically higher than anticipated, often hundreds of millions of dollars. The cost of GE Predix, for example, is measured in billions of dollars.

Next-generation IoT applications require a new enterprise software platform. Requirements extend well beyond relatively small-scale (by Internet standards) business-activity tracking applications using transactional/relational databases, division-level process optimization using limited data and linear algorithms, and reporting using mostly offline data warehouses. Next-gen IoT applications manage dynamic, petabyte-size datasets requiring unified federated data images of all relevant data across a company's value chain, and apply sophisticated analytics and machine learning to make predictions in real time as those data change. These applications require cost-effective Internet/cloud-scale distributed computing architectures and infrastructures such as those from AWS.TM., Microsoft.TM., IBM.TM., and Google.TM.. These public clouds are designed to scale horizontally--not vertically, like traditional computer infrastructures--by taking advantage of millions of fast, inexpensive commodity processors and data storage devices. Google.TM., for example, uses a distributed computing infrastructure to process over 26 PB per day at rates of one billion data points per second.

Distributed infrastructure requires new distributed software architectures and applications. Writing application software to take advantage of these distributed architectures is non-trivial. Without a cohesive application development platform, most enterprise caliber IT teams and system integrators do not have the qualifications or experience to succeed.

For an innovative company willing to invest in the development of a new generation of mission-critical enterprise applications, the first requirement is a comprehensive and integrated infrastructure stack. The goal is a Platform as a Service (PaaS): a modern scale-out architecture leveraging big data, open-source technologies, and data science.

Vendors of existing enterprise and SaaS applications face the risk that these disruptive IoT platform technologies will create a market discontinuity--a shift in market forces that undermines the market for existing systems. It should be anticipated that emerging SaaS vendors will indeed disrupt the market. However, there is also a high potential to address the emerging market opportunities with an architecture that can link the two platforms together--traditional systems and modern big data/scale-out architecture--in a complementary and non-disruptive fashion. Market incumbents, legacy application vendors, and SaaS vendors have an advantage because of their enterprise application development expertise, business process domain expertise, established customer base, and existing distribution channels. Application and SaaS vendors can increase the value of their systems of record by complementing them with a new IoT/big data and machine learning PaaS infrastructure stack, unifying the two stacks into a comprehensive and integrated platform for the development and deployment of next-generation business processes.

This approach extends existing applications at the same time it allows for the development of entirely new applications that are highly targeted and responsive to the explosion of new business process requirements.

Given the complexity of the platform for next-generation application design, development, provisioning, and operations, it's important to understand the effects of the build-versus-buy decision on costs and time to market.

Applicant has designed and developed the IoT Platform disclosed herein, a cohesive application development PaaS that enables IT teams to rapidly design, develop, and deploy enterprise-scale IoT applications. These applications exploit the capabilities of streaming analytics, IoT, elastic cloud computing, machine learning, and mobile computing--integrating dynamic, rapidly growing petabyte-scale data sets, scores of enterprise and extraprise data sources, and complex sensor networks with tens of millions of endpoints.

The IoT Platform disclosed herein can be deployed such that companies using the platform's SaaS applications can integrate and process highly dynamic petascale data sets, gigascale sensor networks, and enterprise and extraprise information systems. The IoT Platform disclosed herein monitors and manages millions to billions of sensors, such as smart meters for an electric utility grid operator, throughout the business value chain--from power generation to distribution to the home or building--applying machine learning to loop back and control devices in real time while integrating with legacy systems of record.

The IoT Platform disclosed herein has a broad focus that includes a range of next-generation applications for horizontal markets such as customer relationship management (CRM), predictive maintenance, sensor health, investment planning, supply network optimization, energy and greenhouse gas management, in addition to vertical market applications, including but not limited to manufacturing, oil and gas, retail, computer software, discrete manufacturing, aerospace, financial services, healthcare, pharmaceuticals, chemical and telecommunications.

Enterprises can also use the IoT Platform disclosed herein and its enhanced application tooling to build and deploy custom applications and business processes. Systems integrators can use the IoT Platform disclosed herein to build out a partner ecosystem and drive early network-effect benefits. New applications made possible by the IoT Platform disclosed herein and other big data sources will likely drive a renaissance of business process reengineering.

The Internet-of-Things and advanced data science are rewriting the rules of competition. The advantage goes to organizations that can convert petabytes of realtime and historical data to predictions--more quickly and more accurately than their competitors. Potential benefits and payoffs include better product and service design, promotion, and pricing; optimized supply chains that avoid delays and increase output; reduced customer churn; higher average revenue per customer; and predictive maintenance that avoids downtime for vehicle fleets and manufacturing systems while lowering service costs.

Capitalizing on the potential of the IoT requires a new kind of technology stack that can handle the volume, velocity, and variety of big data and apply operational machine learning at scale.

Existing attempts to build an IoT technology stack from open-source components have failed--frustrated by the complexity of integrating hundreds of software components, data sources, processes and user interface components developed with disparate programming languages and incompatible software interfaces.

The IoT Platform disclosed herein has successfully developed a comprehensive technology stack from scratch for the design, development, deployment, and operation of next-generation cyberphysical IoT applications and business processes. The IoT Platform disclosed herein may provide benefits that allow customers to report measurable ROI, including improved fraud detection, increased uptime as a result of predictive maintenance, lower maintenance costs, improved energy efficiency, and stronger customer engagement. Customers can use prebuilt IoT applications, adapt those applications using the platform's toolset, or build custom applications using the IoT platform as a service.

Conventional platform as a service (PaaS) companies and big data companies have become increasingly prominent in the high technology and information technology industries. The term "PaaS" refers generally to computing models where a provider delivers hardware and/or software tools to users as a service to be accessed remotely via communications networks, such as via the Internet. PaaS companies, including infrastructure companies, may provide a platform that empowers organizations to develop, manage, and run web applications. PaaS companies can provide these organizations with such capabilities without an attendant requirement that the organizations shoulder the complexity and burden of the infrastructure, development tools, or other systems required for the platform. Example PaaS solutions include offerings from Salesforce.com.TM., Cloudera.TM., Pivotal.TM., and GE Predix.TM..

Big data companies may provide technology that allows organizations to manage large amounts of data and related storage facilities. Big data companies, including database companies, can assist an organization with data capture, formatting, manipulation, storage, searching, and analysis to achieve insights about the organization and to otherwise improve operation of the organization. Examples of currently available Big Data solutions include Apache HDFS, Cloudera, and IBM Bluemix.

Infrastructure as a Service (IaaS) solutions provide remote, cloud-based virtual compute and storage platforms. Examples of IaaS solutions include Amazon AWS.TM., Microsoft Azure.TM., AliCloud, IBM Cloud Services, and the GE Industrial Internet.

Applicants have recognized numerous deficiencies in currently available PaaS and IaaS solutions. For example, some IaaS and PaaS products and companies may offer a "platform" in that they equip developers with low-level systems, including hardware, to store, query, process, and manage data. However, these low-level systems and data management services do not provide integrated, cohesive platforms for application development, user interface (UI) tools, data analysis tools, the ability to manage complex data models, and system provisioning and administration. By way of example, data visualization and analysis products may offer visualization and exploration tools, which may be useful for an enterprise, but generally lack complex analytic design and customizability with regard to their data. For example, existing data exploration tools may be capable of processing or displaying snapshots of historical statistical data, but lack offerings that can trigger analytics on real-time or streaming events or deal with complex time-series calculations. As big data, PaaS, IaaS, and cyberphysical systems have application to all industries, the systems, methods, algorithms, and other solutions in the present disclosure are not limited to any specific industry, data type, or use case. Example embodiments disclosed herein are not limiting and, indeed, principles of the present disclosure will apply to all industries, data types, and use cases. For example, implementations involving energy utilities or the energy sector are illustrative only and may be applied to other industries such as health care, transportation, telecommunication, advertising, financial services, military and defense, retail, scientific and geological studies, and others.

Applicants have recognized that what is needed is a solution to the big data problem, i.e., data sets that are so large or complex that traditional data processing applications are inadequate to process the data. What is needed are systems, methods, and devices that comprise an enterprise Internet-of-Things application development platform for big data analytics, integration, data processing and machine learning, such that data can be captured, analyzed, curated, searched, shared, stored, transferred, visualized, and queried in a meaningful manner for usage in enterprise or other systems.

Furthermore, the amount of available data is likely to expand exponentially with increased presence and usage of smart, connected products as well as cloud-based software solutions for enterprise data storage and processing. Such cyber-physical systems are often referred to as the Internet-of-things (IoT) and/or the Internet-of-everything (IoE). Generally speaking, the acronyms IoT and IoE refer to computing models where large numbers of devices, including devices that have not conventionally included communication or processing capabilities, are able to communicate over a network and/or perform calculation and processing to control device operation.

However, cyber-physical systems and IoT are not necessarily the same, as cyber-physical systems are integrations of computation, networking, and physical processes. FIG. 1, and the associated description below, provides one example definition and background information about cyber-physical systems based on information available on http://cyberphysicalsystems.org. "Cyber-Physical Systems (CPS) are integrations of computation, networking, and physical processes. Embedded computers and networks monitor and control the physical processes, with feedback loops where physical processes affect computations and vice versa. The economic and societal potential of such systems is vastly greater than what has been realized, and major investments are being made worldwide to develop the technology. The technology builds on the older (but still very young) discipline of embedded systems, computers and software embedded in devices whose principle mission is not computation, such as cars, toys, medical devices, and scientific instruments. CPS integrates the dynamics of the physical processes with those of the software and networking, providing abstractions and modeling, design, and analysis techniques for the integrated whole." See description of Cyber-Physical Systems from http://cyberphysicalsystems.org. Cyber-physical systems, machine learning platform systems, application development platform systems, and other systems discussed herein may include all or some of the attributes as displayed or discussed above in relation to FIG. 1. Some embodiments of cyber-physical systems disclosed herein are sometimes referred to herein as the C3 IoT Platform. However, the embodiments and disclosure presented herein may apply to other cyber-physical systems, PaaS solutions, or IoT solutions without limitation.

Applicants have recognized that next-generation IoT applications and/or cyber-physical applications require a new enterprise software platform. Requirements extend well beyond relatively small-scale (by Internet standards) business-activity tracking using transactional/relational databases (e.g., ERP, CRM, HRM, and MRP applications), division-level process optimization using limited data and linear algorithms, and reporting using mostly offline data warehouses. Next-generation IoT and cyber-physical applications need to manage dynamic, petabyte-size datasets consisting of unified, federated data images of all relevant data across a company's value chain, and apply machine learning to make predictions in real time as those data change. These applications require cost-effective Internet/cloud-scale distributed computing architectures and infrastructures. These public clouds may include those designed to scale out--not up, like traditional compute infrastructures--by taking advantage of millions of fast, inexpensive commodity processors and storage devices.

This distributed infrastructure will require new distributed software architectures and applications as disclosed herein. Writing application software to take advantage of these distributed architectures is non-trivial. Without a cohesive application development platform, most enterprise caliber information technology (IT) teams and system integrators do not have the qualifications or experience to succeed.

IoT platform development efforts to date are attempts to develop a solution from differing subsets of the many independent software components that are collectively known as the open-source Apache Hadoop.TM. stack. These components may include products such as: Cassandra.TM., CloudStack.TM., HDFS, Continuum.TM., Cordova.TM., Pivot.TM., Spark.TM., Storm.TM., and/or ZooKeeper.TM.. It is clear that these efforts are more difficult than they appear. The many market claims aside, a close examination suggests that there are few examples, if any, of enterprise production-scale, elastic cloud, big data, and machine learning IoT applications that have been successfully deployed in any vertical market using these types of components.

Applicants have recognized that the use of a platform having a model driven architecture, rather than structured programming architecture, is required to address both big data needs and provide powerful and complete PaaS solutions that include application development tools, user interface (UI) tools, data analysis tools, and/or complex data models that can deal with the large amounts of IoT data.

Model driven architecture is a term for a software design approach that provides models as a set of guidelines for structuring specifications. Model-driven architecture may be understood as a kind of domain engineering and supports model-driven engineering. The model driven architecture may include a type system that may be used as a domain-specific language (DSL) within a platform that may be used by developers, applications, or UIs to access data. In one embodiment, the model driven architecture disclosed herein uses a type system as a domain specific language within the platform. The type system may be used to interact with data and perform processing or analytics based on one or more type or function definitions within the type system.

For IoT, structured programming paradigms dictate that a myriad of independently developed process modules, disparate data sources, sensored devices, and user interface modules are linked using programmatic Application Programming Interfaces (APIs). The complexity of the IoT problem using a structured programming model is a product of the number of process modules (M) (the Apache Open Source modules are examples of process modules), disparate enterprise and extraprise data sources (S), unique sensored devices (T), programmatic APIs (A), and user presentations or interfaces (U). In the IoT application case this is a very large number, sufficiently large that a programming team cannot comprehend the entirety of the problem, making the problem essentially intractable.

Applicants have recognized that, by using an abstraction layer provided by a type system discussed herein, the complexity of the IoT application problem is reduced by orders of magnitude, to the order of a few thousand types for any given IoT application, which a programmer manipulates using JavaScript, or another language, to achieve a desired result. Thus, all of the complexity of the underlying foundation (with an order of M×S×T×A×U using structured programming paradigms) is abstracted and simplified for the programmer.
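To make the scale argument concrete, the following sketch multiplies out purely hypothetical counts for M, S, T, A, and U and compares the product with an assumed count of a few thousand types. None of the figures come from the disclosure; they are assumptions chosen only to illustrate the order-of-magnitude gap.

```python
import math

# Hypothetical counts, chosen only for illustration (not from the disclosure).
counts = {
    "M": 200,  # process modules
    "S": 50,   # enterprise and extraprise data sources
    "T": 100,  # unique sensored device kinds
    "A": 300,  # programmatic APIs
    "U": 40,   # user presentations or interfaces
}

# Structured-programming complexity: the product of M, S, T, A, and U.
structured = math.prod(counts.values())

# With a type layer, the programmer works against a few thousand types.
type_count = 3_000  # assumed value for "a few thousand"

# How many powers of ten separate the two figures.
orders_of_magnitude = math.log10(structured / type_count)

print(f"structured combinations: {structured:,}")
print(f"types exposed to the programmer: {type_count:,}")
print(f"reduction: about {orders_of_magnitude:.1f} orders of magnitude")
```

Because the structured figure is a product, adding even one more data source or device kind scales it multiplicatively, whereas the type count grows only by the handful of types needed to model the new item.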

In light of the above, Applicant has developed, and herein presents, solutions for integrating data, processing data, abstracting data, and developing applications for addressing one or more of the needs or deficiencies discussed above. Some implementations may obtain, aggregate, store, manage, process, and/or expose extremely large volumes of data from various sources as well as provide powerful and integrated data management, analytic, machine learning, application development, and/or other tools. Some embodiments may include a model driven architecture that includes a type system. For example, the model driven architecture may implement abstraction of data using a type system to simplify or unify how the data is accessed, processed, or manipulated, reducing maintenance and development costs. In at least one implementation, a PaaS platform is disclosed for the design, development, deployment, and operation of IoT applications and business processes.

Example technologies which may be included in one or more embodiments include: nearly free and unlimited compute capacity and storage in scale-out cloud environments, such as AWS; big data and real-time streaming; IoT devices with low-cost sensors; smart connected devices; mobile computing; and data science including big-data analytics and machine learning to process the volume, velocity, and variety of big-data streams.

One or more of the technologies of the computing platforms disclosed herein enable capabilities and applications not previously possible, including precise predictive analytics, massively parallel computing at the edge of a network, and fully connected sensor networks at the core of a business value chain. The number of addressable business processes will grow exponentially and require a new platform for the design, development, deployment, and operation of new generation, real-time, smart and connected applications. Data are strategic resources at the heart of the emerging digital enterprise. The IoT infrastructure software stack will be the nerve center that connects and enables collaboration among previously separate business functions, including product development, marketing, sales, service support, manufacturing, finance, and human capital management.

The implementations and new developments disclosed herein can provide a significant leap in productivity and reshape the business value chain, offering organizations a sustainable competitive advantage. At least some embodiments may represent or depend on an entirely new technology infrastructure or set of technology layers (i.e., a technology stack). This technology stack may include products with embedded microprocessors and communication capabilities, network communications, and a product cloud. Some embodiments may include a product cloud that includes software running on a hosted elastic cloud technology infrastructure that stores or processes product data, customer data, enterprise data, and Internet data. The product cloud may provide one or more of: a platform for building and processing software applications; massive data storage capacity; a data abstraction layer that implements a type system; a rules engine and analytics platform; a machine learning engine; smart product applications; and social human-computer interaction models. One or more of the layers or services may depend on the data abstraction layer for accessing stored or managed data, communicating data between layers or applications, or otherwise store, access, or communicate data.

At least some embodiments disclosed herein enable rapid product application development and operation powered by the collection, analysis, and sharing of potentially huge amounts of longitudinal data. The data may include data generated inside as well as outside of smart products or even the organization that were heretofore inaccessible and could not be processed. A detailed description of systems and methods consistent with embodiments of the present disclosure is provided below. While several embodiments are described, it should be understood that this disclosure is not limited to any one embodiment, but instead encompasses numerous alternatives, modifications, and equivalents. In addition, while numerous specific details are set forth in the following description in order to provide a thorough understanding of the embodiments disclosed herein, some embodiments may be practiced without some or all of these details. Moreover, for the purpose of clarity, certain technical material that is known in the related art has not been described in detail in order to avoid unnecessarily obscuring the disclosure.

FIG. 2 is a schematic block diagram illustrating a system 200 having a model driven architecture for integrating, processing, and abstracting data related to an enterprise Internet-of-Things application development platform. The system 200 may also include tools for machine learning, application development and deployment, data visualization, and/or other tools. The system 200 includes an integration component 202, a data services component 204, a modular services component 206, and applications 210, which may be located on or behind an application layer.

The system 200 may operate as a comprehensive design, development, provisioning, and operating platform for industrial-scale applications in connected device industries, such as energy industries, health or wearable technology industries, sales and advertising industries, transportation industries, communication industries, scientific and geological study industries, military and defense industries, financial services industries, healthcare industries, manufacturing industries, retail, government organizations, and/or the like. The system 200 may enable integration and processing of large and highly dynamic data sets from enormous sensor networks and large scale information systems. The system 200 further provides or enables rapid deployment of software for rigorous predictive analytics, data exploration, machine learning, and data visualization.

The dotted line 212 indicates a region where a type system is implemented such that the integration component 202, data services component 204, and modular services component 206, in one embodiment, implement a model driven architecture. The model driven architecture may include or implement a domain-specific language or type system for distributed systems. The integration component 202, data services component 204, and modular services component 206 may store, transform, communicate, and process data based on the type system. In one embodiment, the data sources 208 and/or the applications 210 may also operate based on the type system. However, in one embodiment, the applications 210 may be configured to operate or interface with the components 202-206 based on the type system. For example, the applications 210 may include business logic written in code and/or accessing types defined by a type system to leverage services provided by the system 200.

In one embodiment, the model driven architecture uses a type system that provides type-relational mapping based on a plurality of defined types. For example, the type system may define types for use in the applications 210, such as a type for a customer, organization, sensor, smart device (such as a smart utility meter), or the like. During development of an application, an application developer may write code that accesses the type system to read or write data to the system, perform processing or business logic using defined functions, or otherwise access data or functions within defined types. In one embodiment, the model driven architecture enforces validation of data or type structure using annotations/keywords.
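As a rough illustration of validation driven by annotations on a type, the sketch below marks fields of a hypothetical `SmartMeter` type as required and checks instances against that metadata. The type, its field names, and the `required` and `validate` helpers are all assumptions made for this example; the disclosure does not specify how validation is implemented.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional


def required():
    """Mark a field as required; stands in for an annotation or keyword."""
    return field(default=None, metadata={"required": True})


@dataclass
class SmartMeter:
    # Hypothetical type; the field names are illustrative only.
    meter_id: Optional[str] = required()
    customer_id: Optional[str] = required()
    firmware: Optional[str] = None  # optional field, no annotation


def validate(instance) -> List[str]:
    """Return the names of required fields that have no value."""
    return [
        f.name
        for f in fields(instance)
        if f.metadata.get("required") and getattr(instance, f.name) is None
    ]


ok = SmartMeter(meter_id="m-1", customer_id="c-9")
bad = SmartMeter(meter_id="m-2")

print(validate(ok))   # no missing fields
print(validate(bad))  # customer_id is missing
```

Keeping the rule in field metadata rather than in application code means any caller that reads or writes the type can apply the same check.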

A user interface (UI) framework may also interact with the type system to obtain and display data. The types in the type system may include defined view configuration types used for rendering type data on a screen in a graphical, text, or other format. In one embodiment, a server, such as a server that implements a portion of the system 200 may implement mapping between data stored in one or more databases and a type in the type system, such as data that corresponds to a specific customer type or other type.
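One way to picture a server-side mapping between stored data and a type is the minimal sketch below, which reads rows from an in-memory SQLite table and returns them as instances of a hypothetical `Customer` type. The table name, column names, and the `fetch_all` helper are assumptions for illustration and are not taken from the disclosure.

```python
import sqlite3
from dataclasses import dataclass, fields


@dataclass
class Customer:
    # Hypothetical type; its fields double as the column names to select.
    id: int
    name: str
    city: str


def fetch_all(conn, type_cls, table):
    """Map each row of `table` onto an instance of `type_cls` by field order.

    `table` is interpolated directly, so it must come from trusted code.
    """
    columns = [f.name for f in fields(type_cls)]
    sql = f"SELECT {', '.join(columns)} FROM {table}"
    return [type_cls(*row) for row in conn.execute(sql)]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER, name TEXT, city TEXT)")
conn.execute("INSERT INTO customer VALUES (1, 'Acme Utility', 'Oakland')")

customers = fetch_all(conn, Customer, "customer")
print(customers[0])
```

Application code then works with `Customer` objects and never sees the SQL, which is the kind of separation a type layer over data stores is meant to provide.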

Type System

The following paragraphs provide a detailed explanation and illustrations of one embodiment of a type system. This type system is given by way of example only, is not limiting, and presents an example type system which may be used in various embodiments and in combination with any other teaching or disclosure of the present description.

In one embodiment, the fundamental concept in the type system is a "type," which is similar to a "class" in object-oriented programming languages. At least one difference between "class" in some languages and "type" in some embodiments of the type system disclosed herein is that the type system is not tied to any particular programming language. As discussed above, at least some embodiments disclosed herein include a model-driven architecture, where types are the models. Not only are types interfaces across different underlying technologies, they are also interfaces across different programming languages. In fact, the type system can be considered self-describing, so here we present an overview of the types that may define the type system itself.

Types

A type is the definition of a potentially complex object that the system understands. Types are the primary interface for all platform services and the primary way application logic is organized. Some types are defined by and built into the platform itself. These types provide a uniform model across a variety of underlying technologies. Platform types also provide convenient functionality and build up higher-level services on top of low-level technologies. Other types are defined by the developers using the platform. Once installed in the environment, they can be used in the same ways as the platform types. There is no sharp distinction between types provided by the platform and types developed using the platform.

Fields and Functions

Types may define data fields, each of which has a value type (see below). A type may also define methods, which provide static functions that can be called on the type and member functions that can be called on instances:

TABLE-US-00001

    type Point {
      x : !double
      y : !double
      magnitude : member function( ) : double
    }

In this example, there are two data fields, `x` and `y`, both declared as primitive `double` (numeric) values. Note that an exclamation point before the type may indicate that the values are required for these fields. There is also one member method (function) that calculates the point's magnitude and returns it as a double value.
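
As a rough illustration only, the `Point` declaration above could be mirrored in a general-purpose language. The sketch below uses Python, which is an assumption of this example and not part of the described type system. It also assumes that "magnitude" means the Euclidean distance from the origin, which the declaration itself does not state.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """Python analogue of the `Point` type (illustrative sketch only)."""

    x: float  # `!double`: required, so no default value is provided
    y: float  # `!double`: required

    def __post_init__(self) -> None:
        # Emulate the `!` (required) marker by rejecting missing values.
        if self.x is None or self.y is None:
            raise ValueError("x and y are required")

    def magnitude(self) -> float:
        # Member function: called on an instance.
        # Assumed semantics: Euclidean distance from the origin.
        return math.hypot(self.x, self.y)


p = Point(3.0, 4.0)
print(p.magnitude())  # 5.0
```

The required-field check is done at construction time here; a real type system could equally enforce it at persistence or validation time.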

Mixins

Types can "mix in" other types. This is like sub-classing in the Java or C++ languages, but unlike Java, in one embodiment, multiple types may be mixed in. Mixins may be parametric, which means they have unbound variables which are defined by types that mix them in (at any depth). For example, we might want to have the actual coordinate values in the example above be parametric:

TABLE-US-00002

    type Point<V> {
      x : V
      y : V
    }
    type RealPoint mixes Point<double>
    type IntPoint mixes Point<int>

In the above example, "Point" is now a parametric type because it has the unbound parametric variable `V`. The RealPoint and IntPoint types mix in Point and bind the variable in different ways. For instances of RealPoint, the fields are bound to `double` values, which has the same effect as the explicit declaration in the first example. However, either type can be passed to a function that declares an argument of type Point.
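
The parametric mixin idea is loosely comparable to generic base classes in other languages. The following Python sketch is an analogy under that assumption, not the platform's mechanism: `Generic[V]` plays the role of the unbound variable `V`, and the helper `describe` is a hypothetical function added to show that either bound type is accepted where a `Point` is expected. Note that Python does not enforce the bound element type at runtime.

```python
from dataclasses import dataclass
from typing import Generic, TypeVar

V = TypeVar("V")  # stands in for the unbound parametric variable `V`


@dataclass
class Point(Generic[V]):
    x: V
    y: V


@dataclass
class RealPoint(Point[float]):  # binds V to a double-like type
    pass


@dataclass
class IntPoint(Point[int]):  # binds V to an integer type
    pass


def describe(p: Point) -> str:
    # Hypothetical helper: declared on Point, so it accepts any type
    # that mixes Point in, however V was bound.
    return f"{type(p).__name__}({p.x}, {p.y})"


print(describe(RealPoint(1.5, 2.5)))  # RealPoint(1.5, 2.5)
print(describe(IntPoint(1, 2)))       # IntPoint(1, 2)
```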

Value Types

A ValueType (itself a Type) is the metadata for any individual piece of data the system understands. Value types can represent instances of specific Types, but can also represent primitive values, collections and functions. When talking about modeling, the number of "meta levels" may need to be clarified. Data values are meta level 0 (zero); the value 11 (eleven) is just a data value. Value types are the possible types of data values, and thus are meta level 1 (one).

The "double primitive" value type defines one category of values: real numbers representable in a double-precision floating-point format. So the value `11` might be stored in a field declared as a `double` value type, and then naturally displayed as `11.0` (or maybe 1.1×10¹). It might also be stored in a field declared as an `int` or even a `string`. In describing ValueType itself, we are talking about meta level 2 (two): the metadata of metadata. Another way to say it is that we're talking about the shape of the data that describes the shape of actual data values, or that "ValueType" is the model used to define models.

Primitive Types

In one embodiment, the simplest value types are primitives. The values of primitives are generally simple values which have no further sub-structure exposed. Note that they may still have sub-structure, but it's not exposed through the type system itself. For example, a `datetime` value can be thought of as having a set of rules for valid values and interpretation of values as calendar units, but the internal structure of datetime is not documented as value types. These primitive types may be arranged into a natural hierarchy, as shown below:

- number
  - integer
    - int (32-bit signed integer)
    - long int (64-bit signed integer)
    - byte (8-bit signed integer)
  - real
    - double (IEEE double precision)
    - float (IEEE single precision)
  - decimal (exact representation using BCD)
- string (sequence of Unicode characters)
  - char (single Unicode character)
- boolean (true/false)
- datetime (logical or physical date and time)
- binary (raw binary data block)
- json ([JavaScript Object Notation](http://json.org/))

Note that for storage purposes, there are variants of these basic types, but from a coding and display perspective this may be the complete set of primitive value types. Since primitive types have no sub-structure, the value types are simply themselves (such as singletons or an enumeration).

Collection Types

The next group of value types to consider is "collections." There are various shapes of collections for different purposes, but collections may share some common properties, such as: they contain zero or more elements; the elements have an ordering; and/or they have a value type for their elements. Note that collections are strongly typed, so they have sub-structure that is exposed in their value type. The collection types may include: array (an ordered collection of values); set (a unique ordered collection); map (a labelled collection of values); and/or stream (a read-once sequence of values). Collection types may always declare their element types, and map types also declare their key type. We use the parametric type notation in our domain specific language (DSL) to represent this:

TABLE-US-00003

    type Example {
      array : [boolean]
      set : set<string>
      map : map<string,double>
      produce : function( ) : stream<int>
    }

Note that map keys can be any primitive type (not just strings), although strings are the most common case. Sets behave nearly identically to arrays, but ignore insertion of duplicate elements.
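
To make the four collection shapes concrete, here is a small Python sketch. The mapping to Python constructs is an assumption of this example: a `list` for an array, an insertion-ordered de-duplicated list for a set, a `dict` for a map, and a generator for a read-once stream. The helper `ordered_set` is hypothetical.

```python
from typing import Dict, Iterator, List


def ordered_set(items: List[str]) -> List[str]:
    # A dict keeps insertion order and ignores duplicate keys, which
    # matches "a unique ordered collection" that ignores duplicates.
    return list(dict.fromkeys(items))


def produce() -> Iterator[int]:
    # A generator can be consumed only once, like a read-once stream.
    yield from (1, 2, 3)


flags: List[bool] = [True, False, True]           # array : [boolean]
names = ordered_set(["a", "b", "a", "c"])         # set : set<string>
weights: Dict[str, float] = {"a": 1.0, "b": 2.5}  # map : map<string,double>

stream = produce()                                # stream<int>
first_pass = list(stream)   # [1, 2, 3]
second_pass = list(stream)  # [] because the stream is already consumed
```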

Reference Types

Of course, fields can also be instances of types (see above). These may be called "reference types" because they appear as "pointers" to instances of other objects.

TABLE-US-00004

    type Cluster {
      centroid : Point
      inputs : [Point]
      boundary : member function( ) : [Point]
    }

In the example above, Point is a reference to a Point type (or any type that mixes it in). References can appear directly or be used in collections or as function arguments or return values.

The above examples include several examples of method functions. Functions are declared on types in the same way as data fields. Methods can be "static" or "member" functions. Static functions are called on the type itself while member functions must be called on instances of the type.

TABLE-US-00005

    type KMeans {
      cluster : function(points : ![Point], n : !int) : ![Cluster]
    }

In this example "cluster" is a static function on a "KMeans" type that takes two arguments and returns an array. Like everything else in this embodiment, function argument declaration is strongly typed and so "points: ![Point]" declares: that the argument name is "points"; that its type is an array (collection) of Point instances; and the exclamation point indicates that the argument is required. The return value may also be strongly typed: the function returns an array of Cluster instances and the exclamation point indicates that a value is always returned.
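
The distinction between static and member functions can be sketched in Python as follows. Everything about the implementation is an assumption made for illustration: the source declares only the signatures, so the naive seeding, the fixed ten Lloyd iterations, and the bounding-box interpretation of `boundary` are choices of this sketch and not the platform's algorithm.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class Point:
    x: float
    y: float


@dataclass
class Cluster:
    centroid: Point
    inputs: List[Point] = field(default_factory=list)

    def boundary(self) -> List[Point]:
        # Member function: must be called on a Cluster instance.
        # Assumed meaning: two corners of the axis-aligned bounding box.
        xs = [p.x for p in self.inputs]
        ys = [p.y for p in self.inputs]
        return [Point(min(xs), min(ys)), Point(max(xs), max(ys))]


class KMeans:
    @staticmethod
    def cluster(points: List[Point], n: int) -> List[Cluster]:
        # Static function: called on the type itself, no instance needed.
        if points is None or n is None:
            raise ValueError("points and n are required")  # the `!` marker
        if not 1 <= n <= len(points):
            raise ValueError("n must be between 1 and len(points)")
        centroids = points[:n]  # naive seeding: the first n points
        for _ in range(10):     # fixed number of Lloyd iterations
            groups: List[List[Point]] = [[] for _ in centroids]
            for p in points:
                dists = [(p.x - c.x) ** 2 + (p.y - c.y) ** 2 for c in centroids]
                groups[dists.index(min(dists))].append(p)
            # Move each centroid to the mean of its group (keep it if empty).
            centroids = [
                Point(sum(p.x for p in g) / len(g), sum(p.y for p in g) / len(g))
                if g else c
                for g, c in zip(groups, centroids)
            ]
        return [Cluster(c, g) for c, g in zip(centroids, groups)]


pts = [Point(0.0, 0.0), Point(0.0, 1.0), Point(10.0, 10.0), Point(10.0, 11.0)]
clusters = KMeans.cluster(pts, 2)  # static call on the type
corners = clusters[1].boundary()   # member call on an instance
```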

Lambdas

Note that the functions above may be called "methods" because they are defined on a per-type basis. The KMeans type above has exactly one implementation of cluster. (This is true for both static and member methods.) Sometimes a user may want the function implementation to be dynamic, in which case a "lambda" may be used. For example, a user may have multiple populations, each of which comes with a clustering algorithm. For some populations, one clustering technique might be more appropriate than another, or perhaps the parameters to the clustering technique might differ. Instead of hard-coding the clustering algorithm, for example, we could use a "lambda".

TABLE-US-00006

    type Population {
      points : [Point]
      cluster : lambda(points : [Point]) : [Cluster]
    }

The declaration of the cluster variable looks somewhat like a method, but the `lambda` keyword indicates that it is a data field. Data fields typically have different values for each instance of the type and lambda fields are no exception. For one population, we might determine that k-means with n=5 produces good clusters and for another OPTICS might produce better clusters with an appropriately tuned ε and distance function:

TABLE-US-00007

    [
      {
        points: pointSet1,
        cluster: function(points) { return KMeans.cluster(points, 5); }
      },
      {
        points: pointSet2,
        cluster: function(points) { return OPTICS.cluster(points, ...); }
      }
    ]

Lambda values may also be passed to functions. Lambdas may be thought of as anonymous JavaScript functions, but with strongly typed argument and return values.
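
A lambda field can be approximated in Python as a typed callable stored per instance. In the sketch below, the two strategies `single_cluster` and `split_on_x` are hypothetical stand-ins chosen for brevity; they are not k-means or OPTICS. The point is only that each `Population` instance carries its own function value, possibly with its own tuned parameter.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Point = Tuple[float, float]
Cluster = List[Point]
ClusterFn = Callable[[List[Point]], List[Cluster]]


@dataclass
class Population:
    points: List[Point]
    cluster: ClusterFn  # lambda field: a per-instance function value


def single_cluster(points: List[Point]) -> List[Cluster]:
    # Hypothetical strategy: put every point in one cluster.
    return [list(points)]


def split_on_x(threshold: float) -> ClusterFn:
    # Hypothetical strategy factory: the threshold is a tuned parameter
    # captured by the returned function.
    def strategy(points: List[Point]) -> List[Cluster]:
        left = [p for p in points if p[0] < threshold]
        right = [p for p in points if p[0] >= threshold]
        return [c for c in (left, right) if c]
    return strategy


populations = [
    Population(points=[(0.0, 0.0), (1.0, 1.0)], cluster=single_cluster),
    Population(points=[(0.0, 0.0), (9.0, 9.0)], cluster=split_on_x(5.0)),
]
# Each instance is clustered by the function stored in its own field.
results = [pop.cluster(pop.points) for pop in populations]
```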

In light of the above description of an example type system, further illustrative examples and discussion are provided below. In one embodiment, the type system abstracts underlying storage details, including database type, database language, or storage format from the applications or other services. Abstraction of storage details can reduce the amount of code or knowledge required by a developer to develop powerful applications. Furthermore, with the abstraction of storage, type models, functions, or other details by the type system, customers or developers for a client of a PaaS system are insulated from any changes that are to be made over time. Rather, these changes may be made in the type system without customers or developers needing to be made aware of them and without any updates to applications or associated business logic. In one embodiment, the type system, or types or functions defined by the type system, perform data manipulation language (DML) operations, such as structured query language (SQL) CREATE/UPDATE operations, for persisting types to a database in structured tables. The type system may also generate SQL for reading data from the database and materializing/returning results as types.
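
One way to picture SQL being generated from a type definition is the minimal Python sketch below. It is an assumption-laden illustration, not the platform's persistence layer: the `Meter` type, the `SQL_TYPES` mapping, and the helper functions are all hypothetical, and SQLite from the Python standard library stands in for whatever relational database is actually used.

```python
import sqlite3
from dataclasses import astuple, dataclass, fields

# Hypothetical mapping from field types to SQL column types.
SQL_TYPES = {float: "REAL", int: "INTEGER", str: "TEXT"}


@dataclass
class Meter:  # hypothetical application type
    id: str
    reading: float


def create_sql(tp) -> str:
    # Derive a table definition from the type's declared fields.
    cols = ", ".join(f"{f.name} {SQL_TYPES[f.type]} NOT NULL" for f in fields(tp))
    return f"CREATE TABLE {tp.__name__} ({cols})"


def insert_sql(tp) -> str:
    names = [f.name for f in fields(tp)]
    marks = ", ".join("?" for _ in names)
    return f"INSERT INTO {tp.__name__} ({', '.join(names)}) VALUES ({marks})"


def select_sql(tp) -> str:
    names = ", ".join(f.name for f in fields(tp))
    return f"SELECT {names} FROM {tp.__name__}"


def persist(conn: sqlite3.Connection, obj) -> None:
    conn.execute(insert_sql(type(obj)), astuple(obj))


def fetch_all(conn: sqlite3.Connection, tp) -> list:
    # Materialize each row back into an instance of the type.
    return [tp(*row) for row in conn.execute(select_sql(tp))]


conn = sqlite3.connect(":memory:")
conn.execute(create_sql(Meter))
persist(conn, Meter("m-1", 42.5))
rows = fetch_all(conn, Meter)
```

Application code in this sketch only touches `Meter`, `persist`, and `fetch_all`; the SQL text could change without the caller noticing, which is the insulation the paragraph above describes.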

The type system may also be configured with defined functions for abstracting data conversion, calculating values or attributes, or performing any other function. For example, a type defined by the type system may include one or more defined methods or functions for that type. These methods or functions may be explicitly called within business logic or may be automatically triggered based on other requests or functions made by business logic via the type system. In one embodiment, types may depend on and include each other to implement a full type system that abstracts details above the abstraction layer but also abstracts details between types. The specification of types, models, data reads and writes, functions, and modules within the type system may increase robustness of the system because changes may only need to be made in a single location (or a very small number of locations) and then are available to all other types, applications, or other components of a system.

A model driven architecture for distributed systems may provide significant benefits and utility to a cyber-physical system, such as system 200 of FIG. 2. For example, the type system may provide types, functions, and other services that are optimized for cyber-physical applications, such as analytics, machine learning algorithms, data ingestion, or the like. Additionally, as the system 200 is used and extended over time, continual support for new patterns/optimizations or other features useful for big data, IoT, and/or cyber-physical systems can be implemented to benefit a large number of types and/or applications. For example, if improvements to a machine learning algorithm have been made, these improvements will be immediately available to any other types or applications that utilize that algorithm, potentially without any changes needed to the other types or business logic for applications.

An additional benefit which may result from the model driven architecture includes abstraction of the platform that hides the details of the underlying operations. This improves not only the experience of customers or their application developers, but also maintenance of the system itself. For example, even developers of the type system or cyber-physical system may benefit from abstraction between types, functions, or modules within the type system.

In one embodiment, the type system may be defined by metadata or circuitry within the system 200. The type system may include a collection of modules and types. The modules may include a collection of types that are grouped based on related types or functionality. The types may include definitions for types, data, data shapes, application logic functions, validation constraints, machine learning classifiers and/or UI layouts. Further discussion regarding the model driven architecture is provided throughout the present disclosure, including in relation to the type metadata component 404 of FIG. 4. In one embodiment, for example, the type metadata component 404 defines type models for a type system for a distributed system.

The integration component 202 is configured to integrate disparate data from a wide range of data sources 208. IoT applications need a reliable, efficient, and simple interface to load customer, asset, sensor, billing, and/or other data into the storage in an accessible manner. In one embodiment, the integration component 202 provides the following features: a set of canonical types that act as the public interfaces to applications, analytics, or other solutions; support for operational data sources, such as customer billing and customer management systems, asset management systems, workforce management systems, distribution management systems, outage management systems, meter or sensor data management systems, and/or the like; support for external data sources, such as weather, property characteristics, tax, social media (e.g., Twitter and Facebook), and census data; notifications so users or administrators can accurately monitor data load processes; a set of canonical models that act as public interfaces to an enterprise Internet-of-Things application development platform (as an example, these canonical models may include energy and oil and gas industry data models to accelerate the development of new business applications); extensibility for the canonical data models to allow a business to adapt to unique business data and integration requirements; and transformation, as needed, for data from data sources 208 to a format defined by a common information model.
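
The transformation feature listed above can be pictured as a set of per-source adapters that all produce the same canonical type. The Python sketch below is purely hypothetical: the `CanonicalReading` type, the source names, and the record field names (`meterId`, `epochSeconds`, `wattHours`, `account`, `read_at`, `kwh`) are invented for illustration and do not come from the described system.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class CanonicalReading:  # hypothetical canonical type
    asset_id: str
    timestamp: datetime
    value_kwh: float


def from_meter_system(rec: Dict[str, Any]) -> CanonicalReading:
    # Hypothetical source format: numeric id, epoch seconds, watt-hours.
    return CanonicalReading(
        asset_id=str(rec["meterId"]),
        timestamp=datetime.fromtimestamp(rec["epochSeconds"], tz=timezone.utc),
        value_kwh=rec["wattHours"] / 1000.0,
    )


def from_billing_system(rec: Dict[str, Any]) -> CanonicalReading:
    # Hypothetical source format: account string, ISO timestamp, kWh text.
    return CanonicalReading(
        asset_id=rec["account"],
        timestamp=datetime.fromisoformat(rec["read_at"]),
        value_kwh=float(rec["kwh"]),
    )


# One adapter per data source; new sources extend this table.
TRANSFORMS: Dict[str, Callable[[Dict[str, Any]], CanonicalReading]] = {
    "meter": from_meter_system,
    "billing": from_billing_system,
}


def to_canonical(source: str, record: Dict[str, Any]) -> CanonicalReading:
    return TRANSFORMS[source](record)


a = to_canonical("meter", {"meterId": 7, "epochSeconds": 0, "wattHours": 1500})
b = to_canonical(
    "billing",
    {"account": "7", "read_at": "1970-01-01T00:00:00+00:00", "kwh": "1.5"},
)
```

Both records end up as equal `CanonicalReading` values, so downstream code sees a single shape regardless of which source supplied the data.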